Will this help?

code = Constraint (19), message = System.Data.SQLite.SQLiteException (0x800027AF): constraint failed

UNIQUE constraint failed: DuplicateBlock.BlockID, DuplicateBlock.VolumeID

at System.Data.SQLite.SQLite3.Reset(SQLiteStatement stmt)

at System.Data.SQLite.SQLite3.Step(SQLiteStatement stmt)

at System.Data.SQLite.SQLiteDataReader.NextResult()

at System.Data.SQLite.SQLiteDataReader..ctor(SQLiteCommand cmd, CommandBehavior behave)

at System.Data.SQLite.SQLiteCommand.ExecuteNonQuery(CommandBehavior behavior)

at Duplicati.Library.Main.Database.ExtensionMethods.ExecuteNonQuery(IDbCommand self, Boolean writeLog, String cmd, Object values)

at Duplicati.Library.Main.Database.LocalRecreateDatabase.CleanupMissingVolumes()

at Duplicati.Library.Main.Operation.RecreateDatabaseHandler.DoRun(LocalDatabase dbparent, Boolean updating, IFilter filter, NumberedFilterFilelistDelegate filelistfilter, BlockVolumePostProcessor blockprocessor)

at Duplicati.Library.Main.Operation.RecreateDatabaseHandler.Run(String path, IFilter filter, NumberedFilterFilelistDelegate filelistfilter, BlockVolumePostProcessor blockprocessor)

at Duplicati.Library.Main.Operation.RepairHandler.RunRepairLocal(IFilter filter)

at Duplicati.Library.Main.Operation.RepairHandler.Run(IFilter filter)

at Duplicati.Library.Main.Controller.<>c__DisplayClass21_0.<Repair>b__0(RepairResults result)

at Duplicati.Library.Main.Controller.RunAction[T](T result, String& paths, IFilter& filter, Action`1 method)

at Duplicati.Library.Main.Controller.RunAction[T](T result, IFilter& filter, Action`1 method)

at Duplicati.Library.Main.Controller.Repair(IFilter filter)

at Duplicati.Server.Runner.Run(IRunnerData data, Boolean fromQueue)

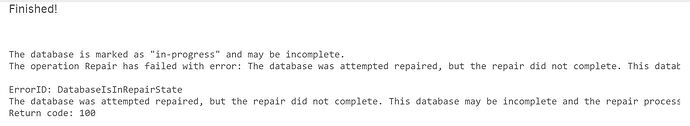

Another log report I get, when I try to delete and recreate the database, is this:

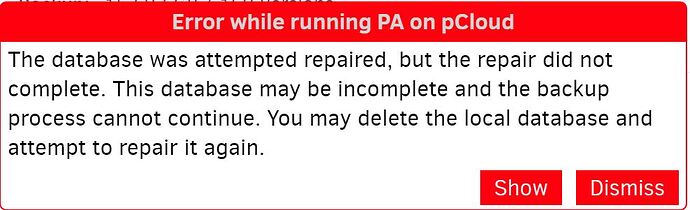

Duplicati.Library.Interface.UserInformationException: The database was attempted repaired, but the repair did not complete. This database may be incomplete and the backup process cannot continue. You may delete the local database and attempt to repair it again.

at Duplicati.Library.Main.Operation.BackupHandler.PreBackupVerify(String backendurl, Options options, BackupResults result)

at Duplicati.Library.Main.Operation.BackupHandler.RunAsync(String sources, IFilter filter, CancellationToken token)

at CoCoL.ChannelExtensions.WaitForTaskOrThrow(Task task)

at Duplicati.Library.Main.Operation.BackupHandler.Run(String sources, IFilter filter, CancellationToken token)

at Duplicati.Library.Main.Controller.<>c__DisplayClass17_0.<Backup>b__0(BackupResults result)

at Duplicati.Library.Main.Controller.RunAction[T](T result, String& paths, IFilter& filter, Action`1 method)

at Duplicati.Library.Main.Controller.Backup(String inputsources, IFilter filter)

at Duplicati.Server.Runner.Run(IRunnerData data, Boolean fromQueue)