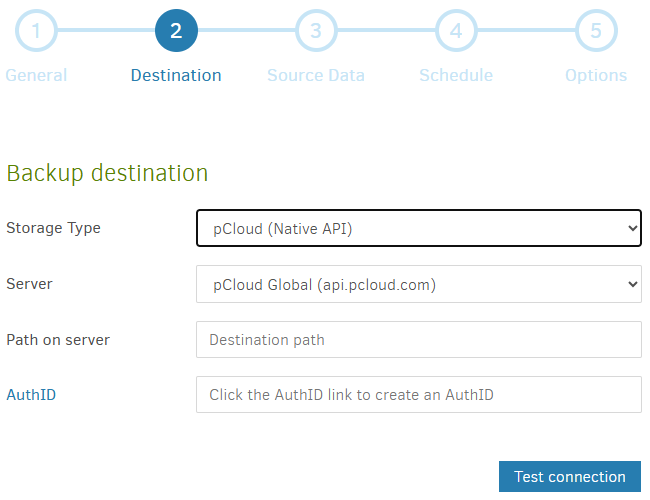

I have two backups: one local and one in the cloud. After updating to Canary, the local backup still works, but the cloud backup fails with this error:

```
2024-12-24 00:12:27 +08 - [Error-Duplicati.Library.Main.AsyncDownloader-FailedToRetrieveFile]: Failed to retrieve file duplicati-befc3a22ff48342b9b62e2f5f58e9cf2f.dblock.zip.aes
IOException: The request could not be performed because of an I/O device error.
```

I attempted a repair and got this:

```
The database was attempted repaired, but the repair did not complete. This database may be incomplete and the backup process cannot continue. You may delete the local database and attempt to repair it again.
The operation Repair has failed with error: unknown error
No transaction is active on this connection => unknown error
No transaction is active on this connection
code = Unknown (-1), message = System.Data.SQLite.SQLiteException (0x80004005): unknown error
No transaction is active on this connection
   at System.Data.SQLite.SQLiteTransaction.Commit()
   at Duplicati.Library.Main.Operation.RecreateDatabaseHandler.DoRun(LocalDatabase dbparent, Boolean updating, IFilter filter, NumberedFilterFilelistDelegate filelistfilter, BlockVolumePostProcessor blockprocessor)
   at Duplicati.Library.Main.Operation.RecreateDatabaseHandler.Run(String path, IFilter filter, NumberedFilterFilelistDelegate filelistfilter, BlockVolumePostProcessor blockprocessor)
   at Duplicati.Library.Main.Operation.RepairHandler.RunRepairLocal(IFilter filter)
   at Duplicati.Library.Main.Operation.RepairHandler.Run(IFilter filter)
   at Duplicati.Library.Main.Controller.<>c__DisplayClass21_0.b__0(RepairResults result)
   at Duplicati.Library.Main.Controller.RunAction[T](T result, String& paths, IFilter& filter, Action`1 method)
   at Duplicati.Library.Main.Controller.RunAction[T](T result, IFilter& filter, Action`1 method)
   at Duplicati.Library.Main.Controller.Repair(IFilter filter)
   at Duplicati.CommandLine.Commands.Repair(TextWriter outwriter, Action`1 setup, List`1 args, Dictionary`2 options, IFilter filter)
   at Duplicati.CommandLine.Program.ParseCommandLine(TextWriter outwriter, Action`1 setup, Boolean& verboseErrors, String args)
   at Duplicati.CommandLine.Program.RunCommandLine(TextWriter outwriter, TextWriter errwriter, Action`1 setup, String args)

Return code: 100
```

Any idea what the problem is, and how to solve it?