Hello there,

A couple of weeks ago I made a post about a FileNotFoundException, and I'm not entirely sure whether this is the same issue. I thought I'd link the post anyway: Keep getting FileNotFoundException

I run Duplicati in Docker on a Synology NAS, and I'm currently trying to back up about 3 TB of data. The backup runs for a couple of hours and then stops with an error:

`System.AggregateException: Could not find file "/tmp/dup-6f255783-2945-47fe-8786-8f3f19ece462"`

and

`System.IO.FileNotFoundException: Could not find file "/tmp/dup-6f255783-2945-47fe-8786-8f3f19ece462"`

Both of these errors appear in the same error log. I'm running the official Duplicati/Duplicati image in Docker with what Synology calls "high privileges", and in the Synology Docker app the Duplicati container has the environment variables PGID = 0 and PUID = 0. I think I read somewhere that these have something to do with privileges, so I thought I'd mention it in case it's useful to someone reading this.

I would greatly appreciate it if someone could help me solve this problem, because Duplicati is pretty much my last shot at getting proper offsite backups to work.

I could still try the official Synology Duplicati package, but… it should work in Docker, right? I shouldn't have to use that version. EDIT: Never mind, I also had an issue with that one.

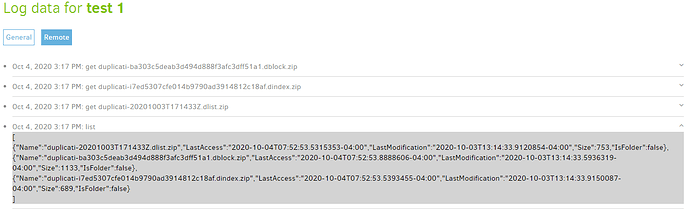

Here is the entire error log; sorry, it's very long:

```
{"ClassName":"System.AggregateException","Message":"One or more errors occurred.","Data":null,"InnerException":
{"ClassName":"System.AggregateException","Message":"Could not find file \"/tmp/dup-6f255783-2945-47fe-8786-8f3f19ece462\"","Data":null,"InnerException":
{"ClassName":"System.IO.FileNotFoundException","Message":"Could not find file \"/tmp/dup-6f255783-2945-47fe-8786-8f3f19ece462\"","Data":null,"InnerException":null,"HelpURL":null,"StackTraceString":" at System.IO.FileStream..ctor (System.String path, System.IO.FileMode mode, System.IO.FileAccess access, System.IO.FileShare share, System.Int32 bufferSize, System.Boolean anonymous, System.IO.FileOptions options) [0x0019e] in <3833a6edf2074b959d3dab898627f0ac>:0 \n at System.IO.FileStream..ctor (System.String path, System.IO.FileMode mode, System.IO.FileAccess access, System.IO.FileShare share) [0x00000] in <3833a6edf2074b959d3dab898627f0ac>:0 \n at (wrapper remoting-invoke-with-check) System.IO.FileStream..ctor(string,System.IO.FileMode,System.IO.FileAccess,System.IO.FileShare)\n at Duplicati.Library.Main.Volumes.VolumeReaderBase.LoadCompressor (System.String compressor, System.String file, Duplicati.Library.Main.Options options, System.IO.Stream& stream) [0x00000] in <8f1de655bd1240739a78684d845cecc8>:0 \n at Duplicati.Library.Main.Volumes.VolumeReaderBase..ctor (System.String compressor, System.String file, Duplicati.Library.Main.Options options) [0x00007] in <8f1de655bd1240739a78684d845cecc8>:0 \n at Duplicati.Library.Main.Volumes.BlockVolumeReader..ctor (System.String compressor, System.String file, Duplicati.Library.Main.Options options) [0x00010] in <8f1de655bd1240739a78684d845cecc8>:0 \n at Duplicati.Library.Main.Operation.Backup.SpillCollectorProcess+<>c__DisplayClass0_0.<Run>b__0 (<>f__AnonymousType11`2[<Input>j__TPar,<Output>j__TPar] self) [0x0023c] in <8f1de655bd1240739a78684d845cecc8>:0 \n at CoCoL.AutomationExtensions.RunTask[T] (T channels, System.Func`2[T,TResult] method, System.Boolean catchRetiredExceptions) [0x000d5] in <9a758ff4db6c48d6b3d4d0e5c2adf6d1>:0 \n at Duplicati.Library.Main.Operation.BackupHandler.RunMainOperation (System.Collections.Generic.IEnumerable`1[T] sources, Duplicati.Library.Snapshots.ISnapshotService snapshot, Duplicati.Library.Snapshots.UsnJournalService journalService, Duplicati.Library.Main.Operation.Backup.BackupDatabase database, Duplicati.Library.Main.Operation.Backup.BackupStatsCollector stats, Duplicati.Library.Main.Options options, Duplicati.Library.Utility.IFilter sourcefilter, Duplicati.Library.Utility.IFilter filter, Duplicati.Library.Main.BackupResults result, Duplicati.Library.Main.Operation.Common.ITaskReader taskreader, System.Int64 filesetid, System.Int64 lastfilesetid, System.Threading.CancellationToken token) [0x0035f] in <8f1de655bd1240739a78684d845cecc8>:0 \n at Duplicati.Library.Main.Operation.BackupHandler.RunAsync (System.String[] sources, Duplicati.Library.Utility.IFilter filter, System.Threading.CancellationToken token) [0x00a12] in <8f1de655bd1240739a78684d845cecc8>:0 ","RemoteStackTraceString":null,"RemoteStackIndex":0,"ExceptionMethod":null,"HResult":-2147024894,"Source":"mscorlib","FileNotFound_FileName":"/tmp/dup-6f255783-2945-47fe-8786-8f3f19ece462","FileNotFound_FusionLog":null},
"HelpURL":null,"StackTraceString":" at Duplicati.Library.Main.Operation.BackupHandler.RunAsync (System.String[] sources, Duplicati.Library.Utility.IFilter filter, System.Threading.CancellationToken token) [0x01033] in <8f1de655bd1240739a78684d845cecc8>:0 ","RemoteStackTraceString":null,"RemoteStackIndex":0,"ExceptionMethod":null,"HResult":-2146233088,"Source":"Duplicati.Library.Main","InnerExceptions":[
{"ClassName":"System.IO.FileNotFoundException","Message":"Could not find file \"/tmp/dup-6f255783-2945-47fe-8786-8f3f19ece462\"","Data":null,"InnerException":null,"HelpURL":null,"StackTraceString":" at System.IO.FileStream..ctor (System.String path, System.IO.FileMode mode, System.IO.FileAccess access, System.IO.FileShare share, System.Int32 bufferSize, System.Boolean anonymous, System.IO.FileOptions options) [0x0019e] in <3833a6edf2074b959d3dab898627f0ac>:0 \n at System.IO.FileStream..ctor (System.String path, System.IO.FileMode mode, System.IO.FileAccess access, System.IO.FileShare share) [0x00000] in <3833a6edf2074b959d3dab898627f0ac>:0 \n at (wrapper remoting-invoke-with-check) System.IO.FileStream..ctor(string,System.IO.FileMode,System.IO.FileAccess,System.IO.FileShare)\n at Duplicati.Library.Main.Volumes.VolumeReaderBase.LoadCompressor (System.String compressor, System.String file, Duplicati.Library.Main.Options options, System.IO.Stream& stream) [0x00000] in <8f1de655bd1240739a78684d845cecc8>:0 \n at Duplicati.Library.Main.Volumes.VolumeReaderBase..ctor (System.String compressor, System.String file, Duplicati.Library.Main.Options options) [0x00007] in <8f1de655bd1240739a78684d845cecc8>:0 \n at Duplicati.Library.Main.Volumes.BlockVolumeReader..ctor (System.String compressor, System.String file, Duplicati.Library.Main.Options options) [0x00010] in <8f1de655bd1240739a78684d845cecc8>:0 \n at Duplicati.Library.Main.Operation.Backup.SpillCollectorProcess+<>c__DisplayClass0_0.<Run>b__0 (<>f__AnonymousType11`2[<Input>j__TPar,<Output>j__TPar] self) [0x0023c] in <8f1de655bd1240739a78684d845cecc8>:0 \n at CoCoL.AutomationExtensions.RunTask[T] (T channels, System.Func`2[T,TResult] method, System.Boolean catchRetiredExceptions) [0x000d5] in <9a758ff4db6c48d6b3d4d0e5c2adf6d1>:0 \n at Duplicati.Library.Main.Operation.BackupHandler.RunMainOperation (System.Collections.Generic.IEnumerable`1[T] sources, Duplicati.Library.Snapshots.ISnapshotService snapshot, Duplicati.Library.Snapshots.UsnJournalService journalService, Duplicati.Library.Main.Operation.Backup.BackupDatabase database, Duplicati.Library.Main.Operation.Backup.BackupStatsCollector stats, Duplicati.Library.Main.Options options, Duplicati.Library.Utility.IFilter sourcefilter, Duplicati.Library.Utility.IFilter filter, Duplicati.Library.Main.BackupResults result, Duplicati.Library.Main.Operation.Common.ITaskReader taskreader, System.Int64 filesetid, System.Int64 lastfilesetid, System.Threading.CancellationToken token) [0x0035f] in <8f1de655bd1240739a78684d845cecc8>:0 \n at Duplicati.Library.Main.Operation.BackupHandler.RunAsync (System.String[] sources, Duplicati.Library.Utility.IFilter filter, System.Threading.CancellationToken token) [0x00a12] in <8f1de655bd1240739a78684d845cecc8>:0 ","RemoteStackTraceString":null,"RemoteStackIndex":0,"ExceptionMethod":null,"HResult":-2147024894,"Source":"mscorlib","FileNotFound_FileName":"/tmp/dup-6f255783-2945-47fe-8786-8f3f19ece462","FileNotFound_FusionLog":null},
{"ClassName":"System.AggregateException","Message":"One or more errors occurred.","Data":null,"InnerException":
{"ClassName":"System.Net.WebException","Message":"The remote server returned an error: (504) Gateway Time-out.","Data":null,"InnerException":null,"HelpURL":null,"StackTraceString":" at System.Net.HttpWebRequest.GetResponseFromData (System.Net.WebResponseStream stream, System.Threading.CancellationToken cancellationToken) [0x00146] in <9b672a45b19f4d52b5f28f32c0c91d97>:0 \n at System.Net.HttpWebRequest.RunWithTimeoutWorker[T] (System.Threading.Tasks.Task`1[TResult] workerTask, System.Int32 timeout, System.Action abort, System.Func`1[TResult] aborted, System.Threading.CancellationTokenSource cts) [0x000f8] in <9b672a45b19f4d52b5f28f32c0c91d97>:0 \n at Duplicati.Library.Utility.AsyncHttpRequest+AsyncWrapper.GetResponseOrStream () [0x0004d] in <b0ec73cdc8b845289fe2e9bdf696ccd0>:0 \n at Duplicati.Library.Utility.AsyncHttpRequest.GetResponse () [0x00044] in <b0ec73cdc8b845289fe2e9bdf696ccd0>:0 \n at Duplicati.Library.Backend.WEBDAV.PutAsync (System.String remotename, System.IO.Stream stream, System.Threading.CancellationToken cancelToken) [0x001b8] in <0d600faf328943a887e690f4858efbb2>:0 \n at Duplicati.Library.Main.Operation.Backup.BackendUploader.DoPut (Duplicati.Library.Main.Operation.Common.BackendHandler+FileEntryItem item, Duplicati.Library.Interface.IBackend backend, System.Threading.CancellationToken cancelToken) [0x00426] in <8f1de655bd1240739a78684d845cecc8>:0 \n at Duplicati.Library.Main.Operation.Backup.BackendUploader+<>c__DisplayClass17_0.<UploadFileAsync>b__0 () [0x0010a] in <8f1de655bd1240739a78684d845cecc8>:0 \n at Duplicati.Library.Main.Operation.Backup.BackendUploader.DoWithRetry (System.Func`1[TResult] method, Duplicati.Library.Main.Operation.Common.BackendHandler+FileEntryItem item, Duplicati.Library.Main.Operation.Backup.BackendUploader+Worker worker, System.Threading.CancellationToken cancelToken) [0x0017c] in <8f1de655bd1240739a78684d845cecc8>:0 \n at Duplicati.Library.Main.Operation.Backup.BackendUploader.DoWithRetry (System.Func`1[TResult] method, Duplicati.Library.Main.Operation.Common.BackendHandler+FileEntryItem item, Duplicati.Library.Main.Operation.Backup.BackendUploader+Worker worker, System.Threading.CancellationToken cancelToken) [0x003a3] in <8f1de655bd1240739a78684d845cecc8>:0 \n at Duplicati.Library.Main.Operation.Backup.BackendUploader.UploadFileAsync (Duplicati.Library.Main.Operation.Common.BackendHandler+FileEntryItem item, Duplicati.Library.Main.Operation.Backup.BackendUploader+Worker worker, System.Threading.CancellationToken cancelToken) [0x000da] in <8f1de655bd1240739a78684d845cecc8>:0 \n at Duplicati.Library.Main.Operation.Backup.BackendUploader.UploadVolumeWriter (Duplicati.Library.Main.Volumes.VolumeWriterBase volumeWriter, Duplicati.Library.Main.Operation.Backup.BackendUploader+Worker worker, System.Threading.CancellationToken cancelToken) [0x000b8] in <8f1de655bd1240739a78684d845cecc8>:0 \n at Duplicati.Library.Main.Operation.Backup.BackendUploader.<Run>b__13_0 (<>f__AnonymousType12`1[<Input>j__TPar] self) [0x00847] in <8f1de655bd1240739a78684d845cecc8>:0 \n at Duplicati.Library.Main.Operation.Backup.BackendUploader.<Run>b__13_0 (<>f__AnonymousType12`1[<Input>j__TPar] self) [0x0089e] in <8f1de655bd1240739a78684d845cecc8>:0 \n at CoCoL.AutomationExtensions.RunTask[T] (T channels, System.Func`2[T,TResult] method, System.Boolean catchRetiredExceptions) [0x000d5] in <9a758ff4db6c48d6b3d4d0e5c2adf6d1>:0 ","RemoteStackTraceString":null,"RemoteStackIndex":0,"ExceptionMethod":null,"HResult":-2146233079,"Source":"mscorlib"},
"HelpURL":null,"StackTraceString":null,"RemoteStackTraceString":null,"RemoteStackIndex":0,"ExceptionMethod":null,"HResult":-2146233088,"Source":null,"InnerExceptions":[
{"ClassName":"System.Net.WebException","Message":"The remote server returned an error: (504) Gateway Time-out.","Data":null,"InnerException":null,"HelpURL":null,"StackTraceString":" at System.Net.HttpWebRequest.GetResponseFromData (System.Net.WebResponseStream stream, System.Threading.CancellationToken cancellationToken) [0x00146] in <9b672a45b19f4d52b5f28f32c0c91d97>:0 \n at System.Net.HttpWebRequest.RunWithTimeoutWorker[T] (System.Threading.Tasks.Task`1[TResult] workerTask, System.Int32 timeout, System.Action abort, System.Func`1[TResult] aborted, System.Threading.CancellationTokenSource cts) [0x000f8] in <9b672a45b19f4d52b5f28f32c0c91d97>:0 \n at Duplicati.Library.Utility.AsyncHttpRequest+AsyncWrapper.GetResponseOrStream () [0x0004d] in <b0ec73cdc8b845289fe2e9bdf696ccd0>:0 \n at Duplicati.Library.Utility.AsyncHttpRequest.GetResponse () [0x00044] in <b0ec73cdc8b845289fe2e9bdf696ccd0>:0 \n at Duplicati.Library.Backend.WEBDAV.PutAsync (System.String remotename, System.IO.Stream stream, System.Threading.CancellationToken cancelToken) [0x001b8] in <0d600faf328943a887e690f4858efbb2>:0 \n at Duplicati.Library.Main.Operation.Backup.BackendUploader.DoPut (Duplicati.Library.Main.Operation.Common.BackendHandler+FileEntryItem item, Duplicati.Library.Interface.IBackend backend, System.Threading.CancellationToken cancelToken) [0x00426] in <8f1de655bd1240739a78684d845cecc8>:0 \n at Duplicati.Library.Main.Operation.Backup.BackendUploader+<>c__DisplayClass17_0.<UploadFileAsync>b__0 () [0x0010a] in <8f1de655bd1240739a78684d845cecc8>:0 \n at Duplicati.Library.Main.Operation.Backup.BackendUploader.DoWithRetry (System.Func`1[TResult] method, Duplicati.Library.Main.Operation.Common.BackendHandler+FileEntryItem item, Duplicati.Library.Main.Operation.Backup.BackendUploader+Worker worker, System.Threading.CancellationToken cancelToken) [0x0017c] in <8f1de655bd1240739a78684d845cecc8>:0 \n at Duplicati.Library.Main.Operation.Backup.BackendUploader.DoWithRetry (System.Func`1[TResult] method, Duplicati.Library.Main.Operation.Common.BackendHandler+FileEntryItem item, Duplicati.Library.Main.Operation.Backup.BackendUploader+Worker worker, System.Threading.CancellationToken cancelToken) [0x003a3] in <8f1de655bd1240739a78684d845cecc8>:0 \n at Duplicati.Library.Main.Operation.Backup.BackendUploader.UploadFileAsync (Duplicati.Library.Main.Operation.Common.BackendHandler+FileEntryItem item, Duplicati.Library.Main.Operation.Backup.BackendUploader+Worker worker, System.Threading.CancellationToken cancelToken) [0x000da] in <8f1de655bd1240739a78684d845cecc8>:0 \n at Duplicati.Library.Main.Operation.Backup.BackendUploader.UploadVolumeWriter (Duplicati.Library.Main.Volumes.VolumeWriterBase volumeWriter, Duplicati.Library.Main.Operation.Backup.BackendUploader+Worker worker, System.Threading.CancellationToken cancelToken) [0x000b8] in <8f1de655bd1240739a78684d845cecc8>:0 \n at Duplicati.Library.Main.Operation.Backup.BackendUploader.<Run>b__13_0 (<>f__AnonymousType12`1[<Input>j__TPar] self) [0x00847] in <8f1de655bd1240739a78684d845cecc8>:0 \n at Duplicati.Library.Main.Operation.Backup.BackendUploader.<Run>b__13_0 (<>f__AnonymousType12`1[<Input>j__TPar] self) [0x0089e] in <8f1de655bd1240739a78684d845cecc8>:0 \n at CoCoL.AutomationExtensions.RunTask[T] (T channels, System.Func`2[T,TResult] method, System.Boolean catchRetiredExceptions) [0x000d5] in <9a758ff4db6c48d6b3d4d0e5c2adf6d1>:0 ","RemoteStackTraceString":null,"RemoteStackIndex":0,"ExceptionMethod":null,"HResult":-2146233079,"Source":"mscorlib"}]}]},
"HelpURL":null,"StackTraceString":" at CoCoL.ChannelExtensions.WaitForTaskOrThrow (System.Threading.Tasks.Task task) [0x0005d] in <9a758ff4db6c48d6b3d4d0e5c2adf6d1>:0 \n at Duplicati.Library.Main.Operation.BackupHandler.Run (System.String sources, Duplicati.Library.Utility.IFilter filter, System.Threading.CancellationToken token) [0x00009] in <8f1de655bd1240739a78684d845cecc8>:0 \n at Duplicati.Library.Main.Controller+<>c__DisplayClass14_0.b__0 (Duplicati.Library.Main.BackupResults result) [0x0004b] in <8f1de655bd1240739a78684d845cecc8>:0 \n at Duplicati.Library.Main.Controller.RunAction[T] (T result, System.String& paths, Duplicati.Library.Utility.IFilter& filter, System.Action`1[T] method) [0x0026f] in <8f1de655bd1240739a78684d845cecc8>:0 \n at Duplicati.Library.Main.Controller.Backup (System.String[] inputsources, Duplicati.Library.Utility.IFilter filter) [0x00074] in <8f1de655bd1240739a78684d845cecc8>:0 \n at Duplicati.Server.Runner.Run (Duplicati.Server.Runner+IRunnerData data, System.Boolean fromQueue) [0x00349] in <c5f097a49c0a4f1fb0f93cf3f5f218b1>:0 ","RemoteStackTraceString":null,"RemoteStackIndex":0,"ExceptionMethod":null,"HResult":-2146233088,"Source":"CoCoL","InnerExceptions":[
{"ClassName":"System.AggregateException","Message":"Could not find file \"/tmp/dup-6f255783-2945-47fe-8786-8f3f19ece462\"","Data":null,"InnerException":
{"ClassName":"System.IO.FileNotFoundException","Message":"Could not find file \"/tmp/dup-6f255783-2945-47fe-8786-8f3f19ece462\"","Data":null,"InnerException":null,"HelpURL":null,"StackTraceString":" at System.IO.FileStream..ctor (System.String path, System.IO.FileMode mode, System.IO.FileAccess access, System.IO.FileShare share, System.Int32 bufferSize, System.Boolean anonymous, System.IO.FileOptions options) [0x0019e] in <3833a6edf2074b959d3dab898627f0ac>:0 \n at System.IO.FileStream..ctor (System.String path, System.IO.FileMode mode, System.IO.FileAccess access, System.IO.FileShare share) [0x00000] in <3833a6edf2074b959d3dab898627f0ac>:0 \n at (wrapper remoting-invoke-with-check) System.IO.FileStream..ctor(string,System.IO.FileMode,System.IO.FileAccess,System.IO.FileShare)\n at Duplicati.Library.Main.Volumes.VolumeReaderBase.LoadCompressor (System.String compressor, System.String file, Duplicati.Library.Main.Options options, System.IO.Stream& stream) [0x00000] in <8f1de655bd1240739a78684d845cecc8>:0 \n at Duplicati.Library.Main.Volumes.VolumeReaderBase..ctor (System.String compressor, System.String file, Duplicati.Library.Main.Options options) [0x00007] in <8f1de655bd1240739a78684d845cecc8>:0 \n at Duplicati.Library.Main.Volumes.BlockVolumeReader..ctor (System.String compressor, System.String file, Duplicati.Library.Main.Options options) [0x00010] in <8f1de655bd1240739a78684d845cecc8>:0 \n at Duplicati.Library.Main.Operation.Backup.SpillCollectorProcess+<>c__DisplayClass0_0.<Run>b__0 (<>f__AnonymousType11`2[<Input>j__TPar,<Output>j__TPar] self) [0x0023c] in <8f1de655bd1240739a78684d845cecc8>:0 \n at CoCoL.AutomationExtensions.RunTask[T] (T channels, System.Func`2[T,TResult] method, System.Boolean catchRetiredExceptions) [0x000d5] in <9a758ff4db6c48d6b3d4d0e5c2adf6d1>:0 \n at Duplicati.Library.Main.Operation.BackupHandler.RunMainOperation (System.Collections.Generic.IEnumerable`1[T] sources, Duplicati.Library.Snapshots.ISnapshotService snapshot, Duplicati.Library.Snapshots.UsnJournalService journalService, Duplicati.Library.Main.Operation.Backup.BackupDatabase database, Duplicati.Library.Main.Operation.Backup.BackupStatsCollector stats, Duplicati.Library.Main.Options options, Duplicati.Library.Utility.IFilter sourcefilter, Duplicati.Library.Utility.IFilter filter, Duplicati.Library.Main.BackupResults result, Duplicati.Library.Main.Operation.Common.ITaskReader taskreader, System.Int64 filesetid, System.Int64 lastfilesetid, System.Threading.CancellationToken token) [0x0035f] in <8f1de655bd1240739a78684d845cecc8>:0 \n at Duplicati.Library.Main.Operation.BackupHandler.RunAsync (System.String[] sources, Duplicati.Library.Utility.IFilter filter, System.Threading.CancellationToken token) [0x00a12] in <8f1de655bd1240739a78684d845cecc8>:0 ","RemoteStackTraceString":null,"RemoteStackIndex":0,"ExceptionMethod":null,"HResult":-2147024894,"Source":"mscorlib","FileNotFound_FileName":"/tmp/dup-6f255783-2945-47fe-8786-8f3f19ece462","FileNotFound_FusionLog":null},
"HelpURL":null,"StackTraceString":" at Duplicati.Library.Main.Operation.BackupHandler.RunAsync (System.String[] sources, Duplicati.Library.Utility.IFilter filter, System.Threading.CancellationToken token) [0x01033] in <8f1de655bd1240739a78684d845cecc8>:0 ","RemoteStackTraceString":null,"RemoteStackIndex":0,"ExceptionMethod":null,"HResult":-2146233088,"Source":"Duplicati.Library.Main","InnerExceptions":[
{"ClassName":"System.IO.FileNotFoundException","Message":"Could not find file \"/tmp/dup-6f255783-2945-47fe-8786-8f3f19ece462\"","Data":null,"InnerException":null,"HelpURL":null,"StackTraceString":" at System.IO.FileStream..ctor (System.String path, System.IO.FileMode mode, System.IO.FileAccess access, System.IO.FileShare share, System.Int32 bufferSize, System.Boolean anonymous, System.IO.FileOptions options) [0x0019e] in <3833a6edf2074b959d3dab898627f0ac>:0 \n at System.IO.FileStream..ctor (System.String path, System.IO.FileMode mode, System.IO.FileAccess access, System.IO.FileShare share) [0x00000] in <3833a6edf2074b959d3dab898627f0ac>:0 \n at (wrapper remoting-invoke-with-check) System.IO.FileStream..ctor(string,System.IO.FileMode,System.IO.FileAccess,System.IO.FileShare)\n at Duplicati.Library.Main.Volumes.VolumeReaderBase.LoadCompressor (System.String compressor, System.String file, Duplicati.Library.Main.Options options, System.IO.Stream& stream) [0x00000] in <8f1de655bd1240739a78684d845cecc8>:0 \n at Duplicati.Library.Main.Volumes.VolumeReaderBase..ctor (System.String compressor, System.String file, Duplicati.Library.Main.Options options) [0x00007] in <8f1de655bd1240739a78684d845cecc8>:0 \n at Duplicati.Library.Main.Volumes.BlockVolumeReader..ctor (System.String compressor, System.String file, Duplicati.Library.Main.Options options) [0x00010] in <8f1de655bd1240739a78684d845cecc8>:0 \n at Duplicati.Library.Main.Operation.Backup.SpillCollectorProcess+<>c__DisplayClass0_0.<Run>b__0 (<>f__AnonymousType11`2[<Input>j__TPar,<Output>j__TPar] self) [0x0023c] in <8f1de655bd1240739a78684d845cecc8>:0 \n at CoCoL.AutomationExtensions.RunTask[T] (T channels, System.Func`2[T,TResult] method, System.Boolean catchRetiredExceptions) [0x000d5] in <9a758ff4db6c48d6b3d4d0e5c2adf6d1>:0 \n at Duplicati.Library.Main.Operation.BackupHandler.RunMainOperation (System.Collections.Generic.IEnumerable`1[T] sources, Duplicati.Library.Snapshots.ISnapshotService snapshot, Duplicati.Library.Snapshots.UsnJournalService journalService, Duplicati.Library.Main.Operation.Backup.BackupDatabase database, Duplicati.Library.Main.Operation.Backup.BackupStatsCollector stats, Duplicati.Library.Main.Options options, Duplicati.Library.Utility.IFilter sourcefilter, Duplicati.Library.Utility.IFilter filter, Duplicati.Library.Main.BackupResults result, Duplicati.Library.Main.Operation.Common.ITaskReader taskreader, System.Int64 filesetid, System.Int64 lastfilesetid, System.Threading.CancellationToken token) [0x0035f] in <8f1de655bd1240739a78684d845cecc8>:0 \n at Duplicati.Library.Main.Operation.BackupHandler.RunAsync (System.String[] sources, Duplicati.Library.Utility.IFilter filter, System.Threading.CancellationToken token) [0x00a12] in <8f1de655bd1240739a78684d845cecc8>:0 ","RemoteStackTraceString":null,"RemoteStackIndex":0,"ExceptionMethod":null,"HResult":-2147024894,"Source":"mscorlib","FileNotFound_FileName":"/tmp/dup-6f255783-2945-47fe-8786-8f3f19ece462","FileNotFound_FusionLog":null},
{"ClassName":"System.AggregateException","Message":"One or more errors occurred.","Data":null,"InnerException":
{"ClassName":"System.Net.WebException","Message":"The remote server returned an error: (504) Gateway Time-out.","Data":null,"InnerException":null,"HelpURL":null,"StackTraceString":" at System.Net.HttpWebRequest.GetResponseFromData (System.Net.WebResponseStream stream, System.Threading.CancellationToken cancellationToken) [0x00146] in <9b672a45b19f4d52b5f28f32c0c91d97>:0 \n at System.Net.HttpWebRequest.RunWithTimeoutWorker[T] (System.Threading.Tasks.Task`1[TResult] workerTask, System.Int32 timeout, System.Action abort, System.Func`1[TResult] aborted, System.Threading.CancellationTokenSource cts) [0x000f8] in <9b672a45b19f4d52b5f28f32c0c91d97>:0 \n at Duplicati.Library.Utility.AsyncHttpRequest+AsyncWrapper.GetResponseOrStream () [0x0004d] in <b0ec73cdc8b845289fe2e9bdf696ccd0>:0 \n at Duplicati.Library.Utility.AsyncHttpRequest.GetResponse () [0x00044] in <b0ec73cdc8b845289fe2e9bdf696ccd0>:0 \n at Duplicati.Library.Backend.WEBDAV.PutAsync (System.String remotename, System.IO.Stream stream, System.Threading.CancellationToken cancelToken) [0x001b8] in <0d600faf328943a887e690f4858efbb2>:0 \n at Duplicati.Library.Main.Operation.Backup.BackendUploader.DoPut (Duplicati.Library.Main.Operation.Common.BackendHandler+FileEntryItem item, Duplicati.Library.Interface.IBackend backend, System.Threading.CancellationToken cancelToken) [0x00426] in <8f1de655bd1240739a78684d845cecc8>:0 \n at Duplicati.Library.Main.Operation.Backup.BackendUploader+<>c__DisplayClass17_0.<UploadFileAsync>b__0 () [0x0010a] in <8f1de655bd1240739a78684d845cecc8>:0 \n at Duplicati.Library.Main.Operation.Backup.BackendUploader.DoWithRetry (System.Func`1[TResult] method, Duplicati.Library.Main.Operation.Common.BackendHandler+FileEntryItem item, Duplicati.Library.Main.Operation.Backup.BackendUploader+Worker worker, System.Threading.CancellationToken cancelToken) [0x0017c] in <8f1de655bd1240739a78684d845cecc8>:0 \n at Duplicati.Library.Main.Operation.Backup.BackendUploader.DoWithRetry (System.Func`1[TResult] method, Duplicati.Library.Main.Operation.Common.BackendHandler+FileEntryItem item, Duplicati.Library.Main.Operation.Backup.BackendUploader+Worker worker, System.Threading.CancellationToken cancelToken) [0x003a3] in <8f1de655bd1240739a78684d845cecc8>:0 \n at Duplicati.Library.Main.Operation.Backup.BackendUploader.UploadFileAsync (Duplicati.Library.Main.Operation.Common.BackendHandler+FileEntryItem item, Duplicati.Library.Main.Operation.Backup.BackendUploader+Worker worker, System.Threading.CancellationToken cancelToken) [0x000da] in <8f1de655bd1240739a78684d845cecc8>:0 \n at Duplicati.Library.Main.Operation.Backup.BackendUploader.UploadVolumeWriter (Duplicati.Library.Main.Volumes.VolumeWriterBase volumeWriter, Duplicati.Library.Main.Operation.Backup.BackendUploader+Worker worker, System.Threading.CancellationToken cancelToken) [0x000b8] in <8f1de655bd1240739a78684d845cecc8>:0 \n at Duplicati.Library.Main.Operation.Backup.BackendUploader.<Run>b__13_0 (<>f__AnonymousType12`1[<Input>j__TPar] self) [0x00847] in <8f1de655bd1240739a78684d845cecc8>:0 \n at Duplicati.Library.Main.Operation.Backup.BackendUploader.<Run>b__13_0 (<>f__AnonymousType12`1[<Input>j__TPar] self) [0x0089e] in <8f1de655bd1240739a78684d845cecc8>:0 \n at CoCoL.AutomationExtensions.RunTask[T] (T channels, System.Func`2[T,TResult] method, System.Boolean catchRetiredExceptions) [0x000d5] in <9a758ff4db6c48d6b3d4d0e5c2adf6d1>:0 ","RemoteStackTraceString":null,"RemoteStackIndex":0,"ExceptionMethod":null,"HResult":-2146233079,"Source":"mscorlib"},
"HelpURL":null,"StackTraceString":null,"RemoteStackTraceString":null,"RemoteStackIndex":0,"ExceptionMethod":null,"HResult":-2146233088,"Source":null,"InnerExceptions":[
{"ClassName":"System.Net.WebException","Message":"The remote server returned an error: (504) Gateway Time-out.","Data":null,"InnerException":null,"HelpURL":null,"StackTraceString":" at System.Net.HttpWebRequest.GetResponseFromData (System.Net.WebResponseStream stream, System.Threading.CancellationToken cancellationToken) [0x00146] in <9b672a45b19f4d52b5f28f32c0c91d97>:0 \n at System.Net.HttpWebRequest.RunWithTimeoutWorker[T] (System.Threading.Tasks.Task`1[TResult] workerTask, System.Int32 timeout, System.Action abort, System.Func`1[TResult] aborted, System.Threading.CancellationTokenSource cts) [0x000f8] in <9b672a45b19f4d52b5f28f32c0c91d97>:0 \n at Duplicati.Library.Utility.AsyncHttpRequest+AsyncWrapper.GetResponseOrStream () [0x0004d] in <b0ec73cdc8b845289fe2e9bdf696ccd0>:0 \n at Duplicati.Library.Utility.AsyncHttpRequest.GetResponse () [0x00044] in <b0ec73cdc8b845289fe2e9bdf696ccd0>:0 \n at Duplicati.Library.Backend.WEBDAV.PutAsync (System.String remotename, System.IO.Stream stream, System.Threading.CancellationToken cancelToken) [0x001b8] in <0d600faf328943a887e690f4858efbb2>:0 \n at Duplicati.Library.Main.Operation.Backup.BackendUploader.DoPut (Duplicati.Library.Main.Operation.Common.BackendHandler+FileEntryItem item, Duplicati.Library.Interface.IBackend backend, System.Threading.CancellationToken cancelToken) [0x00426] in <8f1de655bd1240739a78684d845cecc8>:0 \n at Duplicati.Library.Main.Operation.Backup.BackendUploader+<>c__DisplayClass17_0.<UploadFileAsync>b__0 () [0x0010a] in <8f1de655bd1240739a78684d845cecc8>:0 \n at Duplicati.Library.Main.Operation.Backup.BackendUploader.DoWithRetry (System.Func`1[TResult] method, Duplicati.Library.Main.Operation.Common.BackendHandler+FileEntryItem item, Duplicati.Library.Main.Operation.Backup.BackendUploader+Worker worker, System.Threading.CancellationToken cancelToken) [0x0017c] in <8f1de655bd1240739a78684d845cecc8>:0 \n at Duplicati.Library.Main.Operation.Backup.BackendUploader.DoWithRetry (System.Func`1[TResult] method, Duplicati.Library.Main.Operation.Common.BackendHandler+FileEntryItem item, Duplicati.Library.Main.Operation.Backup.BackendUploader+Worker worker, System.Threading.CancellationToken cancelToken) [0x003a3] in <8f1de655bd1240739a78684d845cecc8>:0 \n at Duplicati.Library.Main.Operation.Backup.BackendUploader.UploadFileAsync (Duplicati.Library.Main.Operation.Common.BackendHandler+FileEntryItem item, Duplicati.Library.Main.Operation.Backup.BackendUploader+Worker worker, System.Threading.CancellationToken cancelToken) [0x000da] in <8f1de655bd1240739a78684d845cecc8>:0 \n at Duplicati.Library.Main.Operation.Backup.BackendUploader.UploadVolumeWriter (Duplicati.Library.Main.Volumes.VolumeWriterBase volumeWriter, Duplicati.Library.Main.Operation.Backup.BackendUploader+Worker worker, System.Threading.CancellationToken cancelToken) [0x000b8] in <8f1de655bd1240739a78684d845cecc8>:0 \n at Duplicati.Library.Main.Operation.Backup.BackendUploader.<Run>b__13_0 (<>f__AnonymousType12`1[<Input>j__TPar] self) [0x00847] in <8f1de655bd1240739a78684d845cecc8>:0 \n at Duplicati.Library.Main.Operation.Backup.BackendUploader.<Run>b__13_0 (<>f__AnonymousType12`1[<Input>j__TPar] self) [0x0089e] in <8f1de655bd1240739a78684d845cecc8>:0 \n at CoCoL.AutomationExtensions.RunTask[T] (T channels, System.Func`2[T,TResult] method, System.Boolean catchRetiredExceptions) [0x000d5] in <9a758ff4db6c48d6b3d4d0e5c2adf6d1>:0 ","RemoteStackTraceString":null,"RemoteStackIndex":0,"ExceptionMethod":null,"HResult":-2146233079,"Source":"mscorlib"}]}]}]}
```