I don’t think an official how-to has been written. Part of your confusion may be that you are new to Docker. I’ve been there myself. Take a little time to learn about it and I think you’ll find it a great platform! Being able to install and upgrade software packages with ease, without worrying about software dependencies or conflicts, is so nice.

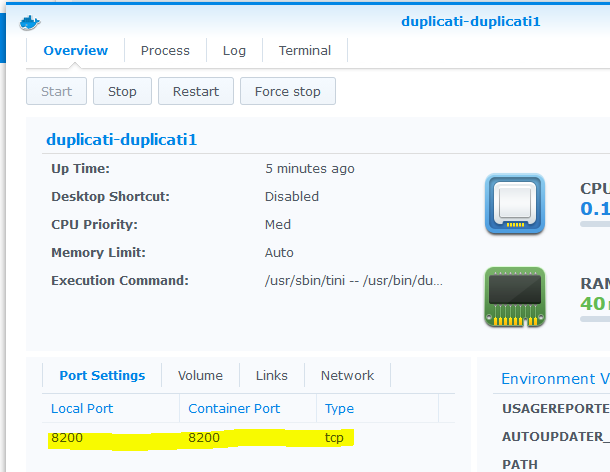

If you wanted to use a web browser on the NAS itself, you would point it to http://localhost:port, where port is the host TCP port that is mapped to port 8200 inside the container. You should be able to access it by going to http://nas-ip:port as well, but that may require you to set the “allow remote access” option in Duplicati Settings.

No, docker containers are entirely self-contained and have all of the necessary software to run the application. It wouldn’t help anyway as containers are isolated from each other.

When a docker container needs to listen on one or more network ports (like tcp 8200), those ports are mapped to ports on the host (NAS). If the requested port is already in use on the host, the container will be given an alternate port number.

Are you sure you didn’t actually have Duplicati still running directly on the NAS? If so, it was using tcp 8200 on the NAS, and your docker version would have been forced onto another port. Make sure you stop the normal Duplicati Synology package (and perhaps uninstall it).
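If you want to confirm whether something is already holding the port, here is a minimal sketch, assuming Python 3 is available on the NAS and that you run it from a shell there. The function name `is_tcp_port_free` is only illustrative. It simply tries to bind the port; if the bind fails, some other process (for example a natively installed Duplicati) already has it.

```python
import socket


def is_tcp_port_free(port: int, host: str = "0.0.0.0") -> bool:
    """Return True if nothing is currently bound to host:port."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as probe:
        try:
            # Binding fails with OSError if another process holds the port.
            probe.bind((host, port))
        except OSError:
            return False
    return True


if __name__ == "__main__":
    port = 8200  # the port Duplicati listens on, per the discussion above
    state = "free" if is_tcp_port_free(port) else "already in use"
    print(f"TCP port {port} is {state}")
```

Run it before starting the container. If it reports the port as in use, stop whatever is holding it first.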

Here’s a screen shot of mine showing that it had no problem utilizing 8200 on the host:

Since you are new to docker, here are some other important things you need to know:

Containers are designed to be disposable

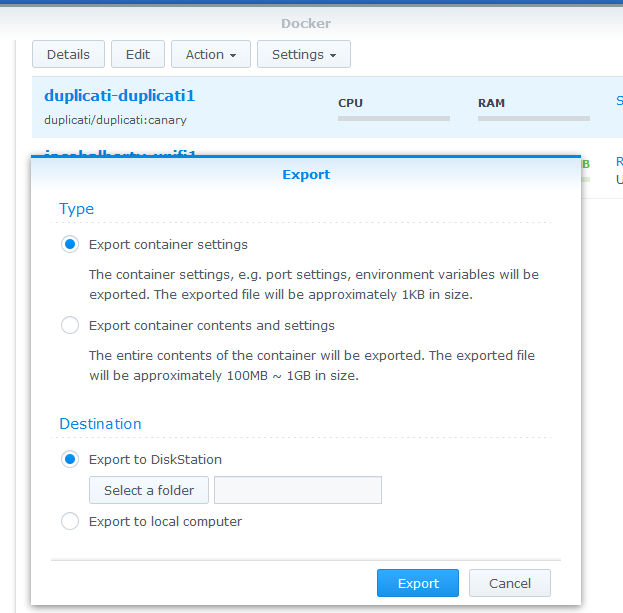

When a new version comes out, you simply delete the existing container, download the new image version, and launch a new one from that fresh image. Export the container settings before you delete so you can easily spin up a new container:

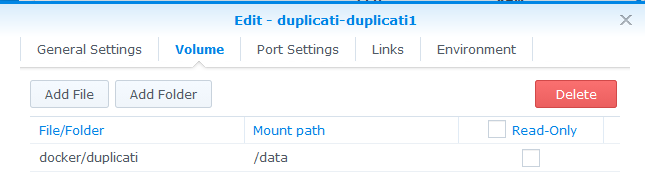
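I do all of this in the Synology Docker GUI, but the same delete, download, relaunch sequence can be sketched with the plain `docker` command line. This is only an illustration under assumptions: the container name `duplicati`, the image name `duplicati/duplicati:latest`, and the helper `upgrade_commands` are placeholders, so substitute whatever you actually use. The script only prints the commands; it does not run them.

```python
import shlex
from typing import List


def upgrade_commands(container: str, image: str, run_args: List[str]) -> List[List[str]]:
    """Build the command sequence for replacing a container with a fresh one."""
    return [
        ["docker", "stop", container],   # stop the running container
        ["docker", "rm", container],     # delete the old container
        ["docker", "pull", image],       # download the new image version
        # launch a new container from the fresh image with the same settings
        ["docker", "run", "-d", "--name", container, *run_args, image],
    ]


if __name__ == "__main__":
    # Assumed names and settings, for illustration only.
    settings = ["-p", "8200:8200", "-v", "/volume1/docker/duplicati:/data"]
    for cmd in upgrade_commands("duplicati", "duplicati/duplicati:latest", settings):
        print(shlex.join(cmd))
```

Keeping the run settings in one place plays the same role as exporting the container settings in the GUI: the new container comes up with the same ports and folder mappings as the old one.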

Data stored within the container will be deleted along with it (this is by design; it is the docker way of thinking). So it’s important that you map certain folders out to the host system, so the data is stored outside of the container and will survive container deletions/recreations (like when you upgrade).

Here’s how I mapped the /data folder inside the container to docker/duplicati on the NAS. This causes things like the sqlite databases, etc to be stored outside of the container on the host NAS filesystem:

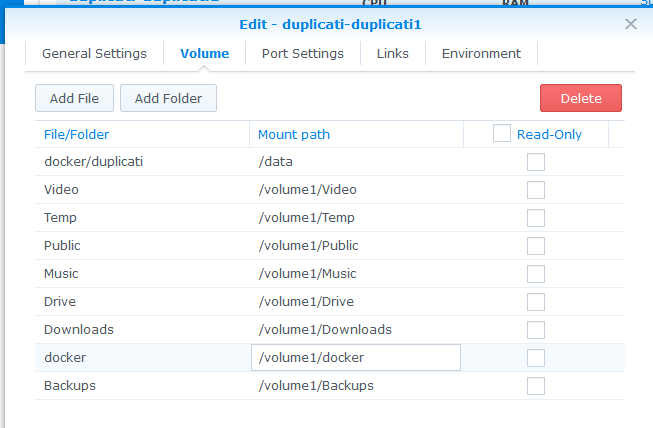
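For anyone working from the command line instead of the Synology GUI, the port and folder mappings correspond to the `-p host:container` and `-v host:container` options of `docker run`. Below is a rough sketch that builds those options. The helper name `mapping_flags` is made up, and the host path `/volume1/docker/duplicati` is an assumption about where the `docker` shared folder lives on the NAS.

```python
from typing import Dict, List


def mapping_flags(ports: Dict[int, int], volumes: Dict[str, str]) -> List[str]:
    """Turn host->container port and folder mappings into docker run options."""
    flags: List[str] = []
    for host_port, container_port in ports.items():
        flags += ["-p", f"{host_port}:{container_port}"]
    for host_path, container_path in volumes.items():
        flags += ["-v", f"{host_path}:{container_path}"]
    return flags


if __name__ == "__main__":
    flags = mapping_flags(
        ports={8200: 8200},  # host port 8200 -> container port 8200
        # assumed host location of the docker/duplicati folder
        volumes={"/volume1/docker/duplicati": "/data"},
    )
    print(" ".join(flags))
```

Anything Duplicati writes under `/data` then lands in the mapped host folder and is still there after the container is deleted.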

Containers don’t have access to the host by default

Again, this is an intentional and important part of the docker architecture. Containers are supposed to be isolated processes that contain everything they need to run, and not be dependent on the host for anything.

In the case of a container like Duplicati, you’ll want it to have access to the host files so you can actually back them up. You do this in the same volume mapping area I talked about above.

On my NAS I have a single huge SHR2 volume mounted at /volume1. Synology’s Docker implementation doesn’t seem to let me map to that directly, so I have to map each of the top level shared folders that I want to back up:

Then within the Duplicati software I tell it to back up /volume1/Video, /volume1/Temp, etc like normal. (It just so happens that how I’ve mapped these folders makes the paths look the same on the NAS and within the container, but that’s not required.)
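To make the point about paths concrete, here is a small sketch of how a path seen inside the container relates to a path on the NAS through the folder mappings. The function `host_path_for` and the `/source/photos` and `/volume1/Photo` entries are hypothetical; the other entries mirror the mappings described above, with `/volume1/docker/duplicati` assumed as the host location of the docker/duplicati folder.

```python
from pathlib import PurePosixPath
from typing import Dict, Optional


def host_path_for(container_path: str, volumes: Dict[str, str]) -> Optional[str]:
    """Translate a path inside the container to the host path behind it.

    `volumes` maps container mount point -> host folder.
    Returns None if the path is not under any mapped folder.
    """
    target = PurePosixPath(container_path)
    # Check longer mount points first so nested mappings win.
    for mount in sorted(volumes, key=len, reverse=True):
        try:
            relative = target.relative_to(mount)
        except ValueError:
            continue  # not under this mount point
        return str(PurePosixPath(volumes[mount]) / relative)
    return None


if __name__ == "__main__":
    volumes = {
        "/volume1/Video": "/volume1/Video",   # same path on both sides
        "/data": "/volume1/docker/duplicati",  # assumed host location
        "/source/photos": "/volume1/Photo",    # hypothetical differing mapping
    }
    for path in ["/volume1/Video/a.mkv", "/source/photos/b.jpg", "/etc/hosts"]:
        print(path, "->", host_path_for(path, volumes))
```

A path that maps to nothing (like `/etc/hosts` here) lives only inside the container, so Duplicati cannot reach the host's copy of it and anything written there is lost when the container is deleted.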