I’m having trouble completing runs of an existing backup job. Other posts describe somewhat similar issues (backup appears stuck / status not clear 1 2), but I could not really work out from them what I should do in my specific case.

Here is an extensive description of the context of my problem. Feel free to jump to my questions first and come back here later to understand the scenario:

I have a backup job (job 1) backing up data to a USB-connected remote.

As described previously, I recently moved the source device to new hardware. After migrating the Duplicati application data, including the jobs, to the new hardware, I used the most recent backup of job 1 to restore the backed-up data on the new source device.

After initial trouble caused by my restore strategy, I decided to postpone all runs of the existing job for some weeks and to create an additional job 2, with an increased blocksize and a different remote destination. I then added all source folders of job 1 to job 2 iteratively, with backup runs in between, so that each individual run would take only a couple of hours. Having two remotes for the same data is part of my redundancy strategy, so I want both jobs 1 & 2 to run in the future.

In the month since moving to the new source device, I have added some new source folders to jobs 1 & 2, even though only job 2 was actually scheduled to run. That went well overall, so I assumed I was ready to un-retire job 1, which had originally run on the old source device. Knowing that the source of job 1 before this backup run was about 80 GB smaller than that of job 2, I removed some of the source folders from job 1. My idea was to add them back step by step, that is, to run the backup job several times and add some of the source folders each time, to keep the run time of each backup run manageable.

The issue:

- First attempt of job run:

Even though I expected only a few changes to be captured by backup job 1, it initially showed 1xx GB to be backed up and ran for about 1.5 hours until (according to the GUI progress bar) 0 bytes remained. From then on, the GUI no longer indicated what was happening, and for most of the time there was no observable traffic to the remote. After an additional hour of patience, I decided to abort the job run (first using the option “after current file”, then the ASAP option). Three hours later the job ended with the error message “The channel "BackendRequests" is retired”, and an exception was logged in About → Show Log → Stored:

```
Apr 24, 2024 9:29 PM: Failed while executing "Backup" with id: 2
CoCoL.RetiredException: The channel "BackendRequests" is retired
  at System.Runtime.ExceptionServices.ExceptionDispatchInfo.Throw()
  at System.Runtime.CompilerServices.TaskAwaiter.HandleNonSuccessAndDebuggerNotification(Task task)
  at CoCoL.Channel`1.<WriteAsync>d__32.MoveNext()
--- End of stack trace from previous location where exception was thrown ---
  at System.Runtime.ExceptionServices.ExceptionDispatchInfo.Throw()
  at System.Runtime.CompilerServices.TaskAwaiter.HandleNonSuccessAndDebuggerNotification(Task task)
  at Duplicati.Library.Main.Operation.BackupHandler.<FlushBackend>d__19.MoveNext()
--- End of stack trace from previous location where exception was thrown ---
  at System.Runtime.ExceptionServices.ExceptionDispatchInfo.Throw()
  at System.Runtime.CompilerServices.TaskAwaiter.HandleNonSuccessAndDebuggerNotification(Task task)
  at Duplicati.Library.Main.Operation.BackupHandler.<RunAsync>d__20.MoveNext()
--- End of stack trace from previous location where exception was thrown ---
  at System.Runtime.ExceptionServices.ExceptionDispatchInfo.Throw()
  at CoCoL.ChannelExtensions.WaitForTaskOrThrow(Task task)
  at Duplicati.Library.Main.Controller.<>c__DisplayClass14_0.<Backup>b__0(BackupResults result)
  at Duplicati.Library.Main.Controller.RunAction[T](T result, String[]& paths, IFilter& filter, Action`1 method)
  at Duplicati.Library.Main.Controller.Backup(String[] inputsources, IFilter filter)
  at Duplicati.Server.Runner.Run(IRunnerData data, Boolean fromQueue)
```

- Second attempt of job run:

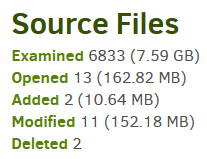

The next day, I reduced the list of source folders for the job once more and gave it a second try. This time, the progress bar indicated only about 20 GB to be backed up, and after only a few minutes the GUI status showed 0 bytes remaining. On the remote, 5 files had been created in that time. Afterwards, nothing observable happened: there was zero traffic to the remote for hours. Duplicati, however, was actively using the CPU, and the local database was still open (a -journal temporary file existed).

After some hours, I lost my patience and tried to abort the backup. Again, there was no sign of the abort being in progress, except for the live log stating that the abort request had been received. There was also no further traffic to the remote, and no files were written there. After about seven hours, I paused and resumed the job using the GUI option, hoping to see a change in status. The only effect was that Duplicati no longer used a considerable amount of CPU (even after resuming), and the GUI became unresponsive (reloading the localhost web page took minutes). After two more hours in which no data had been written to the remote, the last-modified timestamps of the local database files had not changed and the GUI was still unresponsive, so I quit the tray icon application and shut down the source device.

So that is the current status:

- Duplicati reports three partial backups:

  - Two from the first attempt, both with the same time according to the GUI dropdown (I'm not sure why there are two of them).

  - One from the second attempt. The local temporary DB file with the “-journal” suffix seems to have disappeared.

- Log → General for the backup job does not mention those backups.

- Log → Remote looks fairly normal to me, but it reflects the long intervals in which nothing visible happened in the GUI or on the remote (unfortunately it is too long to paste directly):

job_remote_log.zip (23.6 KB)

Consequently, I have a couple of questions:

- I’m unsure what this state means for the integrity of the remote data and of the job as such, and thus do not know how best to proceed. Should I do any checking, cleanup or repair before proceeding?

- What would be the best way to proceed with the backup job? Would it help to reduce the source folder list to a minimal set of data (let's say, a single file) for the next run? Should I enable any logging, and if so, where and how (GUI live log vs. logging to a file via a command line option)?

- I am also asking myself how I can ensure that my attempts and partial backups do not cause the data of previous successful backup runs to be deleted. Should I turn off smart retention temporarily, or is there an option to flag a specific backup version as undeletable?

I am fine with the next job run taking up to 24 hours; more is not really feasible for me.

If any information is missing from the description, let me know.

Thanks for your advice!

Edit: Found and added the exception message from the first attempt of the job run.