Hi,

One of my backups has started failing every time it runs. It’s one I had to recreate from scratch because I was unable to rebuild the database (the rebuild ran for well over a month, so I gave up).

I’m running Duplicati - 2.0.3.11_canary_2018-09-05.

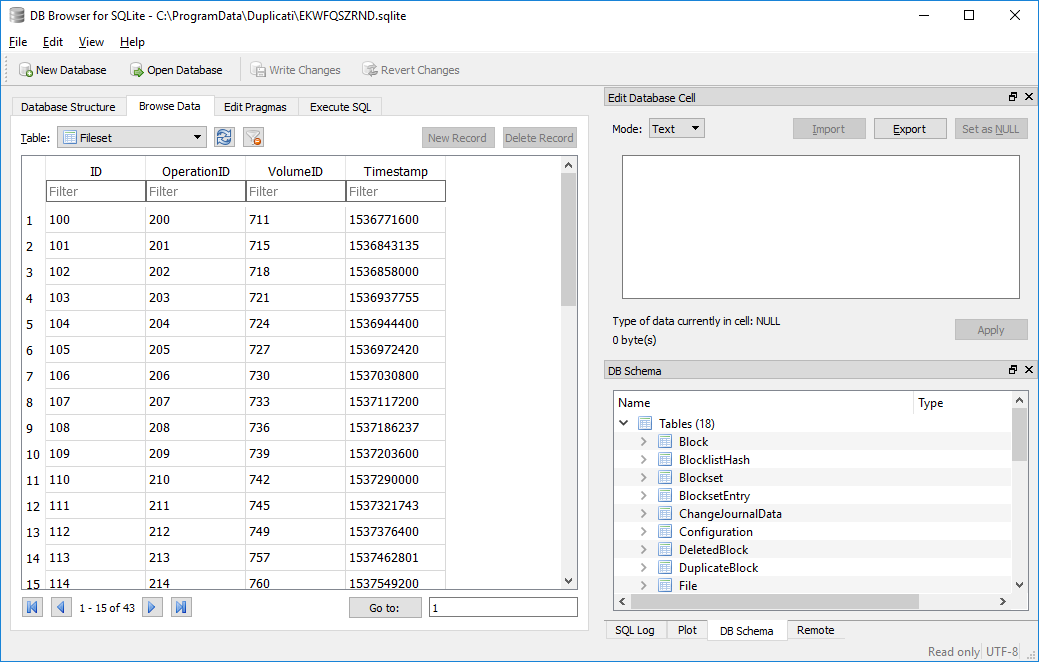

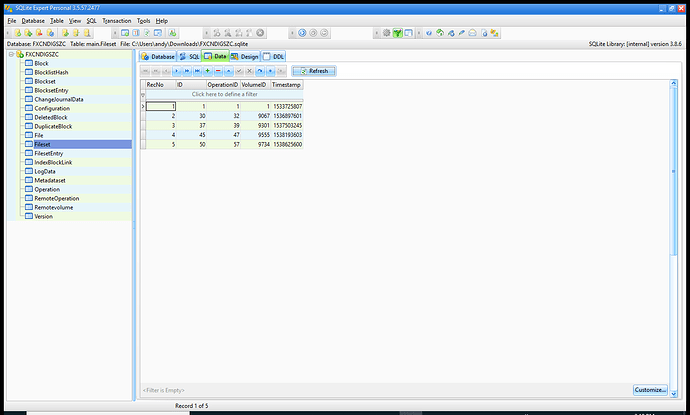

Failed: Unexpected difference in fileset 50, found 4013 entries, but expected 4014

Details: System.Exception: Unexpected difference in fileset 50, found 4013 entries, but expected 4014
  at Duplicati.Library.Main.Database.LocalDatabase.VerifyConsistency (System.Int64 blocksize, System.Int64 hashsize, System.Boolean verifyfilelists, System.Data.IDbTransaction transaction) [0x002bc] in <a152cea42b4d4c1f8c59ea4a34e06889>:0
  at Duplicati.Library.Main.Operation.Backup.BackupDatabase+<>c__DisplayClass32_0.<VerifyConsistencyAsync>b__0 () [0x00000] in <a152cea42b4d4c1f8c59ea4a34e06889>:0
  at Duplicati.Library.Main.Operation.Common.SingleRunner+<>c__DisplayClass3_0.<RunOnMain>b__0 () [0x00000] in <a152cea42b4d4c1f8c59ea4a34e06889>:0
  at Duplicati.Library.Main.Operation.Common.SingleRunner+<DoRunOnMain>d__2`1[T].MoveNext () [0x000b0] in <a152cea42b4d4c1f8c59ea4a34e06889>:0
--- End of stack trace from previous location where exception was thrown ---
  at Duplicati.Library.Main.Operation.BackupHandler+<RunAsync>d__19.MoveNext () [0x01037] in <a152cea42b4d4c1f8c59ea4a34e06889>:0
--- End of stack trace from previous location where exception was thrown ---
  at CoCoL.ChannelExtensions.WaitForTaskOrThrow (System.Threading.Tasks.Task task) [0x00050] in <6973ce2780de4b28aaa2c5ffc59993b1>:0
  at Duplicati.Library.Main.Operation.BackupHandler.Run (System.String[] sources, Duplicati.Library.Utility.IFilter filter) [0x00008] in <a152cea42b4d4c1f8c59ea4a34e06889>:0
  at Duplicati.Library.Main.Controller+<>c__DisplayClass13_0.<Backup>b__0 (Duplicati.Library.Main.BackupResults result) [0x00035] in <a152cea42b4d4c1f8c59ea4a34e06889>:0
  at Duplicati.Library.Main.Controller.RunAction[T] (T result, System.String[]& paths, Duplicati.Library.Utility.IFilter& filter, System.Action`1[T] method) [0x0011d] in <a152cea42b4d4c1f8c59ea4a34e06889>:0

Log data:
2018-10-05 14:00:38 +01 - [Error-Duplicati.Library.Main.Operation.BackupHandler-FatalError]: Fatal error
System.Exception: Unexpected difference in fileset 50, found 4013 entries, but expected 4014
  at Duplicati.Library.Main.Database.LocalDatabase.VerifyConsistency (System.Int64 blocksize, System.Int64 hashsize, System.Boolean verifyfilelists, System.Data.IDbTransaction transaction) [0x002bc] in <a152cea42b4d4c1f8c59ea4a34e06889>:0
  at Duplicati.Library.Main.Operation.Backup.BackupDatabase+<>c__DisplayClass32_0.<VerifyConsistencyAsync>b__0 () [0x00000] in <a152cea42b4d4c1f8c59ea4a34e06889>:0
  at Duplicati.Library.Main.Operation.Common.SingleRunner+<>c__DisplayClass3_0.<RunOnMain>b__0 () [0x00000] in <a152cea42b4d4c1f8c59ea4a34e06889>:0
  at Duplicati.Library.Main.Operation.Common.SingleRunner+<DoRunOnMain>d__2`1[T].MoveNext () [0x000b0] in <a152cea42b4d4c1f8c59ea4a34e06889>:0
--- End of stack trace from previous location where exception was thrown ---
  at Duplicati.Library.Main.Operation.BackupHandler+<RunAsync>d__19.MoveNext () [0x003d6] in <a152cea42b4d4c1f8c59ea4a34e06889>:0

Any suggestions?

Thanks

Andy