I have just started to use Duplicati - 2.0.1.73_experimental_2017-07-15 and find it extremely slow. I started a backup (1.4 TB) to my NAS system via FTP 24 hours ago and it still has 458717 files (1.01 TB) to go??

Please advise whether there are any settings I can change to improve the backup time. This is the first full backup.

I remember that FTP performance is quite shaky. Some servers limit the bandwidth to 200k/s. And sometimes there are just combinations of FTP servers and clients that do not work well together. Have you checked what the speed of the FTP server is if you connect to it directly?

Good day Rene

Since I posted the message there are still

450058 Files (901.84 GB) to go

FTP is done with FreeNas 11.0-U2

Rx is 22.1 M Avg Bits / S

Please point me in the right direction so that this backup can just get done.

Today 14-08-2017 @ 06:38 AND STILL 396364 files (593.84 GB) to go???

What to do? Cancel the backup and turf the program or…What do you suggest???

Is it something I am doing or not doing or is it the program??

It depends on what is causing the slowdown. Based on your line speed (22 Mbit/s) you should get just under 3 MiB/s. The 1.4 TB is 1,468,006 MiB, so it should take around 136 hours (a bit over five and a half days) to transfer the data.

If my math is correct your transfers should be done in 2-3 days from now.
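The estimate above can be reproduced with a few lines of Python. This is only a rough sketch: it assumes binary units (1.4 TB taken as 1.4 × 1024 × 1024 MiB, as in the post) and a perfectly steady transfer rate, and the function names are made up for illustration.

```python
def mbit_to_mib_per_s(mbit_per_s):
    """Convert a line speed in Mbit/s (decimal megabits) to MiB/s."""
    return mbit_per_s * 1_000_000 / 8 / (1024 * 1024)


def transfer_hours(size_mib, rate_mib_per_s):
    """Hours needed to move size_mib at a constant rate_mib_per_s."""
    return size_mib / rate_mib_per_s / 3600


total_mib = 1.4 * 1024 * 1024            # 1.4 TB expressed in MiB
measured_rate = mbit_to_mib_per_s(22.1)  # Rx figure reported by the NAS

# Rounded rate used in the post (3 MiB/s) versus the measured line speed.
print(f"at 3 MiB/s: {transfer_hours(total_mib, 3):.0f} hours")
print(f"at {measured_rate:.2f} MiB/s: {transfer_hours(total_mib, measured_rate):.0f} hours")
```

Because 22.1 Mbit/s is somewhat below 3 MiB/s, the second figure comes out longer than the rounded 136 hours.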

My sentiments exactly

The backed-up computer and the NAS computer are connected via a normal Ethernet connection (cable) with only a switch in between and are about 2 meters apart. Using FTP as described previously??

Don’t know how to help with that. I think you should do some speed measurements with something like FileZilla to see if you can pinpoint the performance problem.
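If you would rather script the measurement than read it off a GUI client, something like the following could give a raw FTP upload figure with Duplicati out of the picture. It is a minimal sketch using Python's standard `ftplib`; the host, user name, password, and remote file name are placeholders, and a 64 MiB test payload is an arbitrary choice.

```python
import io
import time
from ftplib import FTP


def measure_upload_mib_per_s(ftp, remote_name, size_bytes=64 * 1024 * 1024):
    """Upload size_bytes of zero bytes with STOR and return the rate in MiB/s."""
    payload = io.BytesIO(bytes(size_bytes))
    start = time.perf_counter()
    ftp.storbinary(f"STOR {remote_name}", payload)
    # Guard against a zero elapsed time on very small payloads.
    elapsed = max(time.perf_counter() - start, 1e-9)
    return size_bytes / (1024 * 1024) / elapsed


def run_speed_test(host, user, password, remote_name="speedtest.bin"):
    """Connect, upload a test file, delete it again, and return MiB/s."""
    with FTP(host) as ftp:
        ftp.login(user, password)
        rate = measure_upload_mib_per_s(ftp, remote_name)
        ftp.delete(remote_name)
    return rate


# Example call (placeholder values, not run here):
# print(run_speed_test("nas.example.lan", "backupuser", "secret"))
```

If this reports a rate close to the line speed while Duplicati stays far below it, the FTP server itself is probably not the limiting factor.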

FileZilla runs perfectly and is the reason why I chose the FTP route.

But what speed do you get on transfers through FileZilla ?

Same problem here.

112019 files (426.42 GB) to go at 5.70 KB/s

Pure internal network.

A plain FTP put to the target machine runs at 100 MByte/sec.

Is there any default throttling on Duplicati with ftp?

Thanks,

Mike

Currently I am suspecting it is the prep work Duplicati does on the machine itself. It is Duplicati 2.0.2.8 with QMono 4.2.1.0 running on a QNAP TS-559 Pro+

All ftp tests using the same route showed >60MByte/sec.

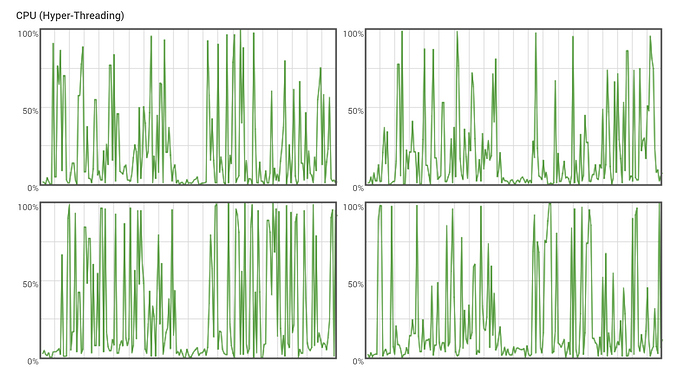

Duplicati seems to run only on one core at a time (poor overlap on peaks on cores) and a lot of idle time…

I already set the options:

--zip-compression-level=0

--disable-streaming-transfers=false

--synchronous-upload=false

--thread-priority=abovenormal

--throttle-upload=100MB

Any further thoughts?

Thanks,

Mike

What version of Duplicati are you using? I believe there are some multi-thread options that are available (or may soon appear) in the Canary versions.

My scenario isn’t exactly the same, as my back end/target is a Windows 10 netbook running Bitvise WinSSHD and I’m using SFTP instead of straight FTP. Figured it was worth mentioning though, as I didn’t observe any slowness and was able to max out the target’s 100 Mbit/s network adapter.

My initial backup set (source was also a Win10 desktop) was about 2.5TB (not sure exactly, but about 300K files) and it would upload at about 11MB/s actual throughput. As you observed, the CPU and network usage was a bit “saw-toothed” due to the single-threaded nature of the process, but not enough to significantly impact overall throughput.

What are you using as your FTP destination?

I’m also running defaults for all the settings you listed. Do you see any significant difference one way or the other if you remove all the custom advanced settings?

@JonMikelV Version see above: 2.0.2.8_canary_2017-0-20

@sanderson The FTP target is a newer QNAP that handles 100 MByte/sec FTP uploads. I also tested from the shell on the other machine (where Duplicati runs) and it is definitely NOT the bottleneck.

The disks in the QNAP running Duplicati are a 4-disk RAID of WD Red 3TB drives, so they are not a bottleneck either.

I think Duplicati is somewhat slow in preparing the data.

Could the Mono version have an impact?

I am not encrypting and I am not compressing, so it should really be a piece of cake to prep the data for FTP…

Mike

Thanks for the added detail.

I know the Mono version can make a difference in some areas, like SSL certificates, but I don’t know if it affects speed.

Duplicati is currently (mostly) single threaded. I am working on a version that is multithreaded. My guess is that the CPU is too slow on one core, which is why it does not upload faster (there is not enough data).

I have not heard of slow-downs due to old Mono, but you could try the “aFTP” backend, as it implements the transfers a bit differently.

What volume size are you using within Duplicati? For local (or local network) backups I believe it should be most efficient to increase the volume size by quite a bit compared to the default of 50MB. For my local (attached) HDD backup, for example, I had good success with 2GB volumes. I can’t prove that that’s an optimal size or anything, but my theory is that at 50MB, Duplicati is spending a lot of time bouncing around between uploading one volume and then prepping / uploading the next one, etc., and at larger volume sizes it will spend more time in the “sprint” of uploading, hopefully utilizing the full upload bandwidth.

Just be aware that with default settings but 2G volume sizes you’ll be needing 8G of “temp” space (2G each x 4 ‘work ahead’ volumes) in which to hold the compressed files while waiting for uploads to finish. Oh, and of course when it comes time to verify files (assuming you haven’t disabled that) you’ll be transferring 2G chunks as well.

If space is an issue yet you still want to use the larger volume sizes, I believe there are some parameters to help get around that (such as --asynchronous-upload-folder, --asynchronous-upload-limit, and --tempdir).
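To put rough numbers on the volume-size trade-off, here is a small sketch. It takes the figure of 4 “work ahead” volumes from the post above, uses binary units, and ignores compression and deduplication, so treat the results as ballpark values only; the function names are illustrative.

```python
import math

MiB = 1024 ** 2
GiB = 1024 ** 3


def temp_space_bytes(volume_size_bytes, queued_volumes=4):
    """Temp space needed to hold the volumes waiting for upload."""
    return volume_size_bytes * queued_volumes


def volume_count(backup_size_bytes, volume_size_bytes):
    """Number of volumes needed for a backup of the given size."""
    return math.ceil(backup_size_bytes / volume_size_bytes)


backup_size = 1400 * GiB  # roughly the 1.4 TB backup discussed in this thread

for volume_size in (50 * MiB, 2 * GiB):
    print(
        f"volume {volume_size / MiB:.0f} MiB: "
        f"{volume_count(backup_size, volume_size)} volumes, "
        f"{temp_space_bytes(volume_size) / GiB:.2f} GiB temp space"
    )
```

Larger volumes cut the number of upload round trips sharply, at the cost of more temp space and bigger downloads during verification.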

Good point, though I’ve previously overridden my Temp folder to use a special temp folder on my secondary (spinning) HDD, which at 3TB is only around half full. I would’ve assumed (though maybe wrongly) that 8GB of temp space wouldn’t be too much of a blocker for most use cases.

This is why I recommend this large volume size only for local / very fast backup destinations - for instance on B2, you start paying (pennies) for download bandwidth after 1GB per day, so a lower volume size is optimal there.

I’ve tried the latest canary version, duplicati-2.0.3.7_canary_2018-06-17.spk, on my Synology 218play:

The CPU usage is now around 6-7%, while before the update it was always around 1-2%.

Backing up 850 GB with no compression over LAN, it took more than 4 days to back up the first 400 GB…

with --thread-priority=abovenormal

--zip-compression-level=0

chunk size = 100 MB

I think the problem is still in the multithreaded mode…

Are you still working on it?