Not a fact. I test database recreate each hour. Excessive, but I look for bugs.

More reasonable would be at least one DR test, and I’m sorry you had none.

That would have given some idea of DR procedures and time (barring bugs).

Good practices for well-maintained backups has my thoughts on that issue…

A mission-critical system should IMO have multiple backups, not simply one.

Any software may have bugs, and Duplicati is still in Beta partly due to them.

This is somewhat aside from the scaling-to-large-backups issue that was hit.

Your main scaling problem is the --blocksize is giving far too many blocks,

which is an open issue linked above, but we’re not sure how to tell everyone.

Some could have been found in testing, but DB recreate testing wasn’t done.

You “might” have lost a dindex file, which caused dblock downloads to occur,

however a backup initially done on an earlier Duplicati might have other ways.

Sorry about the rough ride. I think Duplicati is OK for small less critical backup

where price might also be an issue, but sounds a bad choice for your usage…

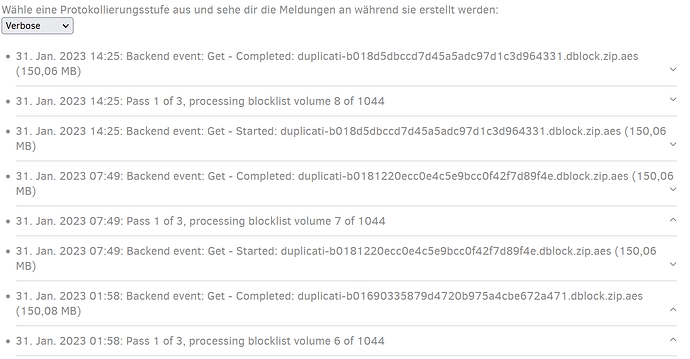

It’s still a database recreate, so has risk of a scaling issue. How did below go?

Did it finish the partial temporary database creation, then was slow in restore?

Alternatively, if slow in DB recreate, then no need to time a --version=0 repair.

There’s also the normal-slow in the first 70% (also improvable with blocksize).

This part worked in the full database recreate, then got slower in the last 30%.

After DB (permanent or temporary), restore will still be slower than a file copy.

There’s a lot more work to do, building the files back up from individual blocks

where the advantage of that is that it’s a compact way to store many versions.

That would completely bypass the need to recreate. You can try it if you like…