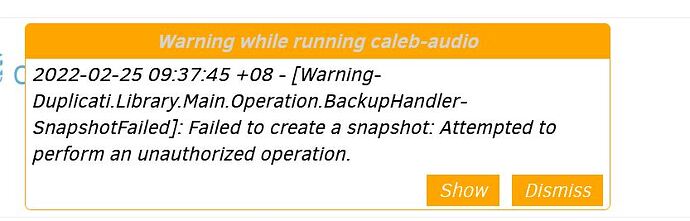

I know this has been asked a hundred times here, but I’ve read all the threads I could find on the issue, and none of them seem to apply. I’m not trying to send emails when the upload finishes, so email errors probably aren’t causing this. I’m not uploading to mega.nz either, so that’s not it.

I’m running Duplicati 2.0.6.3_beta_2021-06-17 on Windows 10, and I’m trying to back up to Backblaze B2. My full config is below, but the relevant parts are probably my blocksize of 5MB and my dblock-size (volume size?) of 100MB.

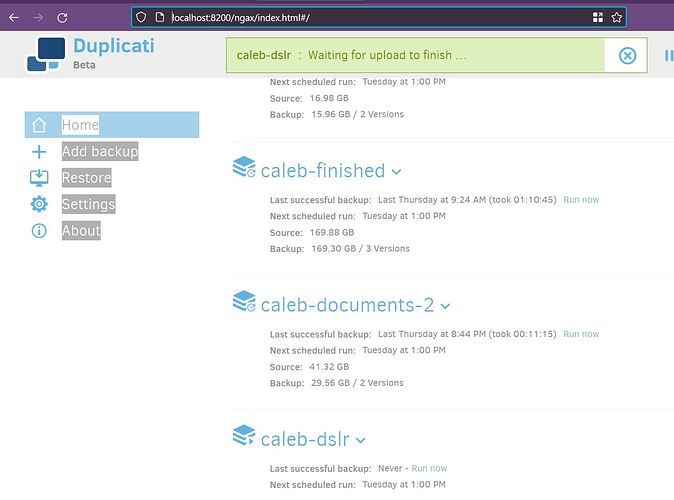

I’ve had this issue with two backups so far: one at 17GB, another at around 200GB.

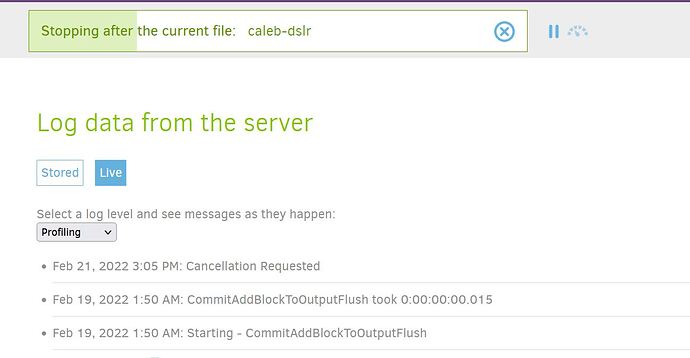

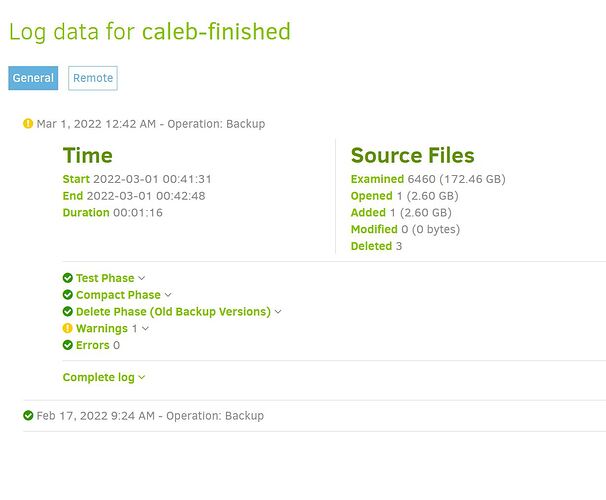
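For a sense of scale, here’s a rough back-of-the-envelope estimate of how many blocks and dblock volumes those settings imply for each backup. It’s only a sketch: it assumes binary units (1 GB = 1024 MB) and ignores compression and deduplication, so treat the results as upper bounds. The helper names `ceil_div` and `estimate_counts` are just for illustration.

```python
from typing import Tuple

MB = 1024 ** 2
GB = 1024 ** 3


def ceil_div(a: int, b: int) -> int:
    """Integer ceiling division, avoiding floating-point rounding."""
    return -(-a // b)


def estimate_counts(source_bytes: int, blocksize: int, dblock_size: int) -> Tuple[int, int]:
    """Upper-bound estimate of (blocks, dblock volumes) for one full backup.

    Assumes no compression or deduplication savings, so real counts
    should come out lower.
    """
    blocks = ceil_div(source_bytes, blocksize)
    volumes = ceil_div(source_bytes, dblock_size)
    return blocks, volumes


if __name__ == "__main__":
    for label, size in [("17GB", 17 * GB), ("200GB", 200 * GB)]:
        blocks, volumes = estimate_counts(size, 5 * MB, 100 * MB)
        # Each dblock volume is uploaded along with a small dindex file,
        # so the number of remote uploads is roughly double the volume count.
        print(f"{label}: ~{blocks} blocks, ~{volumes} dblock volumes, ~{2 * volumes} uploads")
```

This works out to about 3,482 blocks and 175 volumes for the 17GB backup, and 40,960 blocks and 2,048 volumes for the 200GB one, so neither should involve an unusually large number of blocks to index.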

The 200GB backup is the one I’m stuck on right now. Overnight it seemed to upload a great many volumes: I can see them in Backblaze, and there are lots of successful Put messages in my logs. However, it has been sitting at “Waiting for upload to finish” for about 2 hours. The last log entry says:

Feb 16, 2022 8:11 AM: Backend event: Put - Completed: duplicati-i4026597c5ec241fd97c154707c4bc57d.dindex.zip.aes (4.22 KB)

That’s with the log level set to Verbose. It’s 10:00 AM here now, for reference.

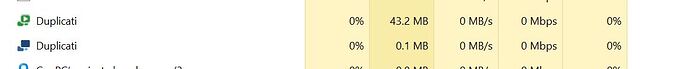

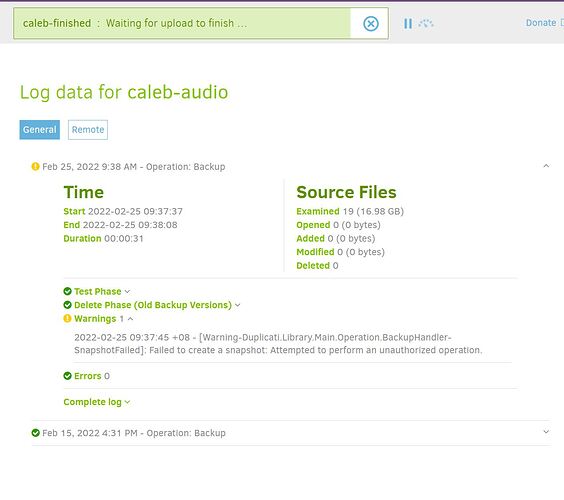

On the previous backup where this happened (the 17GB one), I left it in “Waiting for upload to finish” for 8 hours, because threads here mentioned it might just take a while if there are lots of files to index (though that backup consisted of a few relatively large audio files). I eventually tried to stop it, but the UI was unresponsive, so I force-killed the Duplicati processes (I can see two of them in Task Manager). After that, the Duplicati UI reported it as a “successful backup,” even though the source and backup sizes differ:

- Last successful backup: Yesterday at 4:31 PM (took 00:00:31)
- Next scheduled run: Tuesday at 1:00 PM
- Source: 16.98 GB
- Backup: 15.96 GB / 2 Versions

I somehow doubt I could restore from that.

Anyway, what can I do? What further debugging can I try? I really like this tool and I’d love to get it working.

Here’s my config, minus passwords:

```json
{
  "CreatedByVersion": "2.0.6.3",
  "Schedule": {
    "ID": 2,
    "Tags": [
      "ID=5"
    ],
    "Time": "2022-02-15T05:00:00Z",
    "Repeat": "1W",
    "LastRun": "0001-01-01T00:00:00Z",
    "Rule": "AllowedWeekDays=Monday,Tuesday,Wednesday,Thursday,Friday,Saturday,Sunday",
    "AllowedDays": [
      "mon",
      "tue",
      "wed",
      "thu",
      "fri",
      "sat",
      "sun"
    ]
  },
  "Backup": {
    "ID": "5",
    "Name": "caleb-finished",
    "Description": "",
    "Tags": [],
    "TargetURL": "b2://xxx-xxx?auth-username=xxxxxx",
    "DBPath": "C:\\Users\\calebjay\\AppData\\Local\\Duplicati\\LAQIYFIORO.sqlite",
    "Sources": [
      "E:\\Pictures\\finished\\"
    ],
    "Settings": [
      {
        "Filter": "",
        "Name": "encryption-module",
        "Value": "aes",
        "Argument": null
      },
      {
        "Filter": "",
        "Name": "compression-module",
        "Value": "zip",
        "Argument": null
      },
      {
        "Filter": "",
        "Name": "dblock-size",
        "Value": "100MB",
        "Argument": null
      },
      {
        "Filter": "",
        "Name": "keep-versions",
        "Value": "2",
        "Argument": null
      },
      {
        "Filter": "",
        "Name": "--blocksize",
        "Value": "5MB",
        "Argument": null
      },
      {
        "Filter": "",
        "Name": "--exclude-files-attributes",
        "Value": "hidden,temporary,system",
        "Argument": null
      }
    ],
    "Filters": [],
    "Metadata": {},
    "IsTemporary": false
  },
  "DisplayNames": {
    "E:\\Pictures\\finished\\": "finished"
  }
}
```

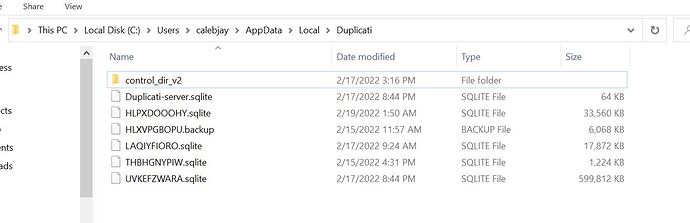

EDIT: I notice the config doesn’t show my temporary directory (where I assume Duplicati stores the zipped-up volumes before sending them off and deleting them locally). It’s on a massive hard drive with terabytes of free space, so I don’t think it’s getting stuck there. However, the DBPath points to my C: drive, which has limited space: it really only holds my OS and has 10GB free. Could that be the issue? I’ll try clearing some space there.
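To check whether the local database is actually close to running out of room, one option is to compare the current size of the `.sqlite` file with the free space on the drive that holds it. Below is a minimal sketch using Python’s standard `shutil.disk_usage` and `os.path.getsize`; the helper name `db_space_report` is hypothetical, and the path is the `DBPath` from my exported config.

```python
import os
import shutil


def db_space_report(db_path: str) -> dict:
    """Return the database file size and its drive's free/total space, in bytes."""
    db_size = os.path.getsize(db_path)
    # disk_usage accepts any path on the volume; use the folder holding the DB.
    usage = shutil.disk_usage(os.path.dirname(os.path.abspath(db_path)))
    return {"db_bytes": db_size, "free_bytes": usage.free, "total_bytes": usage.total}


if __name__ == "__main__":
    # DBPath from the exported Duplicati config; adjust for your own machine.
    path = r"C:\Users\calebjay\AppData\Local\Duplicati\LAQIYFIORO.sqlite"
    if os.path.exists(path):
        report = db_space_report(path)
        gib = 1024 ** 3
        print(f"DB size: {report['db_bytes'] / gib:.2f} GiB, "
              f"free on drive: {report['free_bytes'] / gib:.2f} GiB")
    else:
        print(f"Database not found at {path}")
```

Running it before and during a backup would show whether the database is growing toward the remaining 10GB.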

EDIT: Yeah, it’s nearing 12 hours and hasn’t moved, with no new logs either.
