Note that Duplicati does need to run elevated in order to be able to create snapshots.

I launched as administrator now, and it’s gotten stuck again, at waiting for upload to finish

Log:

Again launched as admin, again stuck for several hours on “waiting for upload to finish.” Considering writing a script that detects this, then force restarts duplicati, as this seems to be the required fix on this system. Would love some help finding more information than what’s available in these logs. Error message… something.

I think we still need to figure out why it’s stalling in the first place. Trying to automate a forced kill/restart of Duplicati when it hangs doesn’t seem like the best approach.

Unfortunately I’m out of ideas! Maybe someone else has another idea of how to troubleshoot this.

Here’s hoping ![]()

More logs, another stuck, 9 hours now

I need to find a way to get full logs, because just now when I force quit and restarted, I saw it “finishing previous backup,” but I missed the intermittent logs there - what did it need to finish? How was it able to do those steps this time? Unfortunately the web logs cap out.

EDIT: I modified the poll request but it seems the server caps out at 500. Will have to be ready next time with the network tab open to save the requests.

EDIT: Unbelievable, I missed just now the logs of a full, never before run backup, that completed successfully! No sticking. THe next backup is 4.85 TB lol. Oh well, will check back in a day.

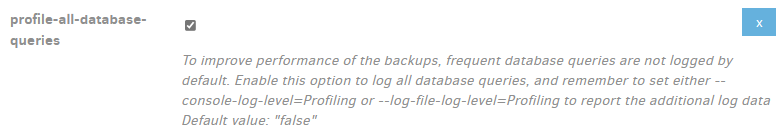

log-file=<path> log-file-log-level=profiling and optionally for even more fullness (even at block level)

Intermittent problems are a real pain to debug. The ideal problem is 100% reproducible-from-scratch.

Logs can identify the neighborhood but can’t always identify stuck spot. That might need a debugger.

Other hard options include trying to get info from looking at job database with DB Browser for SQLite

while the backup is sitting there stuck (for example, what is the State of final dlist file at the time)?

Or try to make some sense of the dup-* files in Temp. Some will probably be .zip files, some .aes, however the suffix for that won’t be present. To see if it’s encrypted, try decrypting with AES Crypt, or

look inside to see if it says AES at the start. A .zip file says PK at start. Or copy, fix suffix, try to open.

Or try changing the setup (e.g. what’s backed up, Advanced options, etc.) to see if it affects the issue.

You can compare the log from a working backup to the log from one that gets stuck to note difference.

Yours so far (thanks for supplying) look like it’s finishing up, plans to upload a dlist, but then doesn’t.

Here’s one of my Backblaze B2 backups. The steps at top show it’s getting ready to finish. Ordinarily a dblock file upload starts before its associated dindex file (they come in pairs), and dlist goes at end.

2022-03-03 07:53:48 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Started: duplicati-bdf22bfe740e043b88e311697f36f9253.dblock.zip.aes (25.15 MB)

...

2022-03-03 07:53:48 -05 - [Profiling-Timer.Finished-Duplicati.Library.Main.Operation.BackupHandler-BackupMainOperation]: BackupMainOperation took 0:00:02:05.250

2022-03-03 07:53:49 -05 - [Profiling-Timer.Begin-Duplicati.Library.Main.Operation.BackupHandler-VerifyConsistency]: Starting - VerifyConsistency

...

2022-03-03 07:53:50 -05 - [Profiling-Timer.Finished-Duplicati.Library.Main.Operation.BackupHandler-VerifyConsistency]: VerifyConsistency took 0:00:00:00.665

2022-03-03 07:53:50 -05 - [Profiling-Timer.Begin-Duplicati.Library.Main.Operation.Backup.UploadRealFilelist-UpdateChangeStatistics]: Starting - UpdateChangeStatistics

...

2022-03-03 07:53:55 -05 - [Profiling-Timer.Finished-Duplicati.Library.Main.Operation.Backup.UploadRealFilelist-UpdateChangeStatistics]: UpdateChangeStatistics took 0:00:00:05.139

2022-03-03 07:53:55 -05 - [Profiling-Timer.Begin-Duplicati.Library.Main.Operation.Backup.UploadRealFilelist-UploadNewFileset]: Starting - Uploading a new fileset

2022-03-03 07:53:57 -05 - [Profiling-Timer.Begin-Duplicati.Library.Main.Operation.Common.DatabaseCommon-CommitTransactionAsync]: Starting - CommitUpdateRemoteVolume

2022-03-03 07:53:58 -05 - [Profiling-Timer.Finished-Duplicati.Library.Main.Operation.Common.DatabaseCommon-CommitTransactionAsync]: CommitUpdateRemoteVolume took 0:00:00:00.978

2022-03-03 07:53:58 -05 - [Profiling-Timer.Finished-Duplicati.Library.Main.Operation.Backup.UploadRealFilelist-UploadNewFileset]: Uploading a new fileset took 0:00:00:02.804

2022-03-03 07:53:58 -05 - [Profiling-Timer.Begin-Duplicati.Library.Main.Operation.Common.DatabaseCommon-CommitTransactionAsync]: Starting - CommitUpdateRemoteVolume

2022-03-03 07:53:58 -05 - [Profiling-Timer.Finished-Duplicati.Library.Main.Operation.Common.DatabaseCommon-CommitTransactionAsync]: CommitUpdateRemoteVolume took 0:00:00:00.000

2022-03-03 07:54:37 -05 - [Profiling-Duplicati.Library.Main.Operation.Backup.BackendUploader-UploadSpeed]: Uploaded 25.15 MB in 00:00:49.0612625, 524.96 KB/s

2022-03-03 07:54:37 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Completed: duplicati-bdf22bfe740e043b88e311697f36f9253.dblock.zip.aes (25.15 MB)

2022-03-03 07:54:37 -05 - [Profiling-Timer.Begin-Duplicati.Library.Main.Operation.Common.DatabaseCommon-CommitTransactionAsync]: Starting - CommitAfterUpload

2022-03-03 07:54:37 -05 - [Profiling-Timer.Finished-Duplicati.Library.Main.Operation.Common.DatabaseCommon-CommitTransactionAsync]: CommitAfterUpload took 0:00:00:00.104

2022-03-03 07:54:37 -05 - [Profiling-Timer.Begin-Duplicati.Library.Main.Database.ExtensionMethods-ExecuteScalarInt64]: Starting - ExecuteScalarInt64: INSERT INTO "Remotevolume" ("OperationID", "Name", "Type", "State", "Size", "VerificationCount", "DeleteGraceTime") VALUES (55, "duplicati-i4e4ab43031454fc4873cafe362f9059b.dindex.zip.aes", "Index", "Temporary", -1, 0, 0); SELECT last_insert_rowid();

2022-03-03 07:54:37 -05 - [Profiling-Timer.Finished-Duplicati.Library.Main.Database.ExtensionMethods-ExecuteScalarInt64]: ExecuteScalarInt64: INSERT INTO "Remotevolume" ("OperationID", "Name", "Type", "State", "Size", "VerificationCount", "DeleteGraceTime") VALUES (55, "duplicati-i4e4ab43031454fc4873cafe362f9059b.dindex.zip.aes", "Index", "Temporary", -1, 0, 0); SELECT last_insert_rowid(); took 0:00:00:00.010

2022-03-03 07:54:38 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Started: duplicati-i4e4ab43031454fc4873cafe362f9059b.dindex.zip.aes (42.18 KB)

2022-03-03 07:54:42 -05 - [Profiling-Duplicati.Library.Main.Operation.Backup.BackendUploader-UploadSpeed]: Uploaded 42.18 KB in 00:00:04.7580200, 8.87 KB/s

2022-03-03 07:54:42 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Completed: duplicati-i4e4ab43031454fc4873cafe362f9059b.dindex.zip.aes (42.18 KB)

2022-03-03 07:54:42 -05 - [Profiling-Timer.Begin-Duplicati.Library.Main.Operation.Common.DatabaseCommon-CommitTransactionAsync]: Starting - CommitAfterUpload

2022-03-03 07:54:42 -05 - [Profiling-Timer.Finished-Duplicati.Library.Main.Operation.Common.DatabaseCommon-CommitTransactionAsync]: CommitAfterUpload took 0:00:00:00.107

2022-03-03 07:54:43 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Started: duplicati-20220303T125003Z.dlist.zip.aes (867.34 KB)

2022-03-03 07:54:47 -05 - [Profiling-Duplicati.Library.Main.Operation.Backup.BackendUploader-UploadSpeed]: Uploaded 867.34 KB in 00:00:04.4742195, 193.85 KB/s

2022-03-03 07:54:47 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Completed: duplicati-20220303T125003Z.dlist.zip.aes (867.34 KB)

2022-03-03 07:54:47 -05 - [Profiling-Timer.Begin-Duplicati.Library.Main.Operation.Common.DatabaseCommon-CommitTransactionAsync]: Starting - CommitAfterUpload

2022-03-03 07:54:47 -05 - [Profiling-Timer.Finished-Duplicati.Library.Main.Operation.Common.DatabaseCommon-CommitTransactionAsync]: CommitAfterUpload took 0:00:00:00.104

2022-03-03 07:54:47 -05 - [Profiling-Timer.Begin-Duplicati.Library.Main.Operation.Common.DatabaseCommon-CommitTransactionAsync]: Starting - CommitAfterUpload

2022-03-03 07:54:47 -05 - [Profiling-Timer.Finished-Duplicati.Library.Main.Operation.Common.DatabaseCommon-CommitTransactionAsync]: CommitAfterUpload took 0:00:00:00.000

2022-03-03 07:54:47 -05 - [Profiling-Timer.Begin-Duplicati.Library.Main.Database.ExtensionMethods-ExecuteReader]: Starting - ExecuteReader: SELECT "IsFullBackup", "Timestamp" FROM "Fileset" ORDER BY "Timestamp" DESC

2022-03-03 07:54:47 -05 - [Profiling-Timer.Finished-Duplicati.Library.Main.Database.ExtensionMethods-ExecuteReader]: ExecuteReader: SELECT "IsFullBackup", "Timestamp" FROM "Fileset" ORDER BY "Timestamp" DESC took 0:00:00:00.001

2022-03-03 07:54:47 -05 - [Information-Duplicati.Library.Main.Operation.DeleteHandler:RetentionPolicy-StartCheck]: Start checking if backups can be removed

shows the phase of your longer status, and the below seems to be where one would see that phase:

This chase would benefit a lot from a developer who knows the backend processing well, as I do not…

@all

The tons of reading post lol…

I’ve already successfully narrowed down Duplicati never finishing due to certain issues during its WhenAll() use causing it to never exit. Duplicati just gets stuck and will stay that way until its force killed manually or system reboot.

If there’s another code area where its getting stuck on, it could be, but Duplicati runs the backup file crawl + container all within the WhenAll() (it also by default uploads during this as well). The WhenAll() will easily become forever stuck if things don’t go well in a certain way. There’s too much code complexity happening within it. There’s also more WhenAll() uses that I never looked into since I 100% solved the stuck problem I was seeing (though never fixed the Duplicati code).

You can do a force stop of the WhenAll(), however any backups actually still working will get force stopped should the time run past it. The timeout variable below is set to 60 minutes. This can result in db corruption. However, it getting stuck will do the same and can also cause the computer to not suspend as well which is also not good (it might stop eventually after a very lengthy time but I never found out).

If you wish to narrow down each problem resulting in the WhenAll() never exiting (or whatever) then you can certainly do so. The following code are main backup file crawl functions with loops that all spaghetti together. Then you have the tons of other things outside of this including each remote connection type and much more as it jumps all around like a hurdler at the Olympics.

You might also be able to solve this outside of the code by using a different connection type or not backing up certain files or using different settings. But, certain issues will cause it to get stuck and that’s the fault of the code.

If the timeout below doesn’t cause Duplicati to become unstuck, I’d be surprised. But, that would mean it exits in this instance and what comes after has another stuck point (or that you wrongly implemented it).

Have fun and hope that helps. I can’t really help you be better at debugging but you will need to add logs in this instance. You could try looking for any running threads or something once it gets stuck though but it might not help here.

Also want to add that when Duplicati works, its actually very worth using. They just have a few sticking points to fix and a little hardening of the code. Not much but a bit of a pain those are to fix.

Task all;

using (new ChannelScope())

{

var timeout = TimeSpan.FromMinutes(60);

all = Task.WhenAny(Task.WhenAll(

new[]

{

Backup.DataBlockProcessor.Run(database, options, taskreader),

Backup.FileBlockProcessor.Run(snapshot, options, database, stats, taskreader, token),

Backup.StreamBlockSplitter.Run(options, database, taskreader),

Backup.FileEnumerationProcess.Run(sources, snapshot, journalService,

options.FileAttributeFilter, sourcefilter, filter, options.SymlinkPolicy,

options.HardlinkPolicy, options.ExcludeEmptyFolders, options.IgnoreFilenames,

options.ChangedFilelist, taskreader, token),

Backup.FilePreFilterProcess.Run(snapshot, options, stats, database),

Backup.MetadataPreProcess.Run(snapshot, options, database, lastfilesetid, token),

Backup.SpillCollectorProcess.Run(options, database, taskreader),

Backup.ProgressHandler.Run(result)

}

// Spawn additional block hashers

.Union(

Enumerable.Range(0, options.ConcurrencyBlockHashers - 1).Select(x =>

Backup.StreamBlockSplitter.Run(options, database, taskreader))

)

// Spawn additional compressors

.Union(

Enumerable.Range(0, options.ConcurrencyCompressors - 1).Select(x =>

Backup.DataBlockProcessor.Run(database, options, taskreader))

)

), Task.Delay(timeout));

await all;

...

Somehow my experience is different and I do not suspect a Duplicaty problem.

I am using 2 cloud services, with several backups on each of them, each backup around 5GB. About 10 days ago (around the start of the war in Ukraine - timing???) MEGA.NZ started giving me “Waiting for upload to finish” messages. This is after months of smooth nightly operation. The other service I am using has no problem. I created a test backup on Box.com - again - no problem.

I suspected a DDOS on MEGA.nz but no-one else reported any problem. Also - MEGA works OK interactively. I suspect they changed something in their operating environment.

What do you think?

Thank you for showing your custom WhenAll workaround. Do you recall what status it stuck at before?

Most of the time of the backup appears to be spent in “Phase”: “Backup_ProcessingFiles”, moving into “Phase”: “Backup_WaitForUpload” towards the end, and that’s the “Waiting for upload to finish” status.

Above was from a Wireshark capture on Duplicati’s connection (typically on the loopback at port 8200), however the long phase is easy to see at About → System Info in the lastPgEvent saying for example “Phase”:“Backup_ProcessingFiles”. You can use browser search to find it, or look for its extremely long line where one can sort of use the horizontal scrollbar to see that this phase is the majority of a backup.

Ordinarily "Phase”: “Backup_WaitForUpload” goes by pretty fast (which is why I watched the network), however in a “stuck” case, I assume this screen would show it while status bar shows longer message.

@calebjay and @Canada1 can check their screen if a backup gets stuck, to see if this lines up so far. Alternatively @Xavron (who has had success reproducing this) can try, but if it needs encouragement could probably change the rescue-timeout into a sabotage-delay to see how stuck WhenAll messages.

Unfortunately it’s probably not a guaranteed thing that all “stuck” end results come due to same causes. Getting good test cases for an expert developer to put a debugger on would help, but we didn’t get that. What we did get was quite a number of profiling logs, and from this it seems we can get some thoughts.

Looking for the WhenAll in question, I think we’re at the below

which is run from

and at the end we join up with the code I cited , showing how it reaches the phase of status message.

Question to @Xavron is – if it got stuck at WhenAll in RunMainOperation at the top of above citation, wouldn’t it be at a different message than “Waiting for upload to finish”, or is there some explanation?

is the one and only place where this phase is set, and that’s just a little before the RunMainOperation, presumably (and verified experimentally two ways) staying until WhenAll finishes, and phase changes.

That’s not likely to happen without a C# developer plus some complicated build mechanics and delays. There is certainly precedent from lots of years ago to add debugs to production code, but shipping it is going to need at least a Canary build to be rolled out for a typical user to test. Let’s try existing log first.

easy come - easy go!

I was running a small test backup every day. In day 18 (two days ago) the problem disappeared. I run the full backup - all is good.

I did not change anything in my duplicati installation and continue to suspect that the backup web hosting fixed a problem

My Conclusion: every problem has two (maybe more) sides. Jumping on the (self) closest one may be premature

Same problem here. 500GB dataset, 500KB block size, 50MB remove volume size.

Stuck at waiting for upload to finish.

Cancel backup does not have any effect in this state. Had to kill duplicati.

-

27 de may. de 2022 10:04: Cancellation Requested

-

27 de may. de 2022 10:03: Cancellation Requested

-

27 de may. de 2022 9:54: Cannot find any MS SQL Server instance. MS SQL Server is probably not installed.

-

27 de may. de 2022 9:54: Cannot open WMI provider \localhost\root\virtualization\v2. Hyper-V is probably not installed.

-

27 de may. de 2022 9:54: Using WMI provider \localhost\root\virtualization\v2

-

27 de may. de 2022 9:53: Cannot open WMI provider \localhost\root\virtualization\v2. Hyper-V is probably not installed.

-

27 de may. de 2022 9:53: Cannot find any MS SQL Server instance. MS SQL Server is probably not installed.

-

27 de may. de 2022 9:53: Using WMI provider \localhost\root\virtualization\v2

-

27 de may. de 2022 9:53: Cannot open WMI provider \localhost\root\virtualization\v2. Hyper-V is probably not installed.

-

27 de may. de 2022 9:53: Cannot find any MS SQL Server instance. MS SQL Server is probably not installed.

-

27 de may. de 2022 9:53: Using WMI provider \localhost\root\virtualization\v2

-

27 de may. de 2022 9:24: Cannot open WMI provider \localhost\root\virtualization\v2. Hyper-V is probably not installed.

-

27 de may. de 2022 9:24: Cannot find any MS SQL Server instance. MS SQL Server is probably not installed.

-

27 de may. de 2022 9:24: Using WMI provider \localhost\root\virtualization\v2

-

27 de may. de 2022 9:22: Cannot open WMI provider \localhost\root\virtualization\v2. Hyper-V is probably not installed.

-

27 de may. de 2022 9:22: Cannot find any MS SQL Server instance. MS SQL Server is probably not installed.

-

27 de may. de 2022 9:22: Using WMI provider \localhost\root\virtualization\v2

-

27 de may. de 2022 9:19: Cannot open WMI provider \localhost\root\virtualization\v2. Hyper-V is probably not installed.

-

27 de may. de 2022 9:19: Cannot find any MS SQL Server instance. MS SQL Server is probably not installed.

-

27 de may. de 2022 9:19: Using WMI provider \localhost\root\virtualization\v2

-

27 de may. de 2022 5:40: CommitUpdateRemoteVolume took 0:00:00:00.000

-

27 de may. de 2022 5:40: Starting - CommitUpdateRemoteVolume

-

27 de may. de 2022 5:40: Uploading a new fileset took 0:00:01:34.060

-

27 de may. de 2022 5:40: CommitUpdateRemoteVolume took 0:00:00:00.015

-

27 de may. de 2022 5:40: Starting - CommitUpdateRemoteVolume

-

27 de may. de 2022 5:38: Starting - Uploading a new fileset

had somene found any real fix yet?

there is no ‘real fix’ since there is no real problem…

If the upload is stuck the most probable reasons are either because there is a configuration error and no upload can work at all, or because some network or server problem caused the network exchange to hang.

If the config is wrong, correct it.

If the reliability of the network or server is dubious, it is necessary to add some network timeout in the Duplicati configuration.

For example, no sftp setup should fail to include the advanced option ssh-operation-timeout, the value of which will depend on the connection speed and the maximum block size - if you have a very slow upload like Adsl, the ssh-operation-timeout should be huge to reflect the fact that 50 MB (the default block size) takes at least 10 minutes to send, so let’s say 15 minutes. For Http backends, the timeout can be setup differently (http-operation-timeout).

I got it fixed with making http-operation-timeout 1 minute.

Settings → Add advanced option

I never faced the problem since then, only weird remote error code 500 from time to time but doesn’t affect my database or anything else.

I created an account just to mention that @IbrahemGamel’s solution worked for me.

I’m using an AWS S3 bucket and was getting stuck on “Waiting for upload to finish”. I run Duplicati in a docker container, so I opened an interactive terminal to the container, installed the AWS CLI, and configured it with the same ID/key I used in Duplicati, targeting the same bucket. I was able to list and upload files.

This confirmed that the connection wasn’t the issue. Consequently, I decided to try setting the http-operation-timeout to 1 minute, and it worked flawlessly.

Thanks, @IbrahemGamel

No Real Problem ?! really ?

what about FTP i have same issue with ftp now , and i didnt find any special options for this protocol. I think duplicati must have global preconfigured timeouts and must not stuck, connection timeout looks much cleaner than incomprehensible program stuck, and sure we know that any connection may be unstable some time, if browser hangs every time then network connection is broken is ok too ?