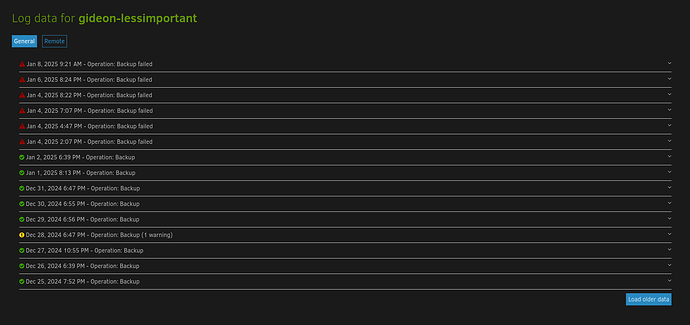

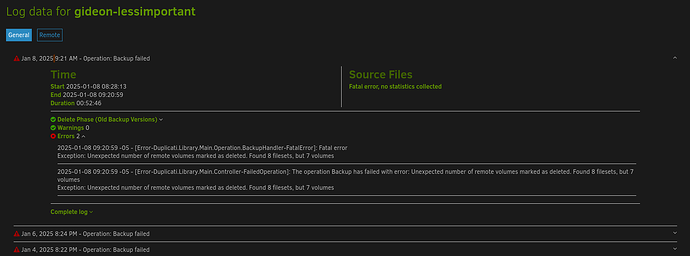

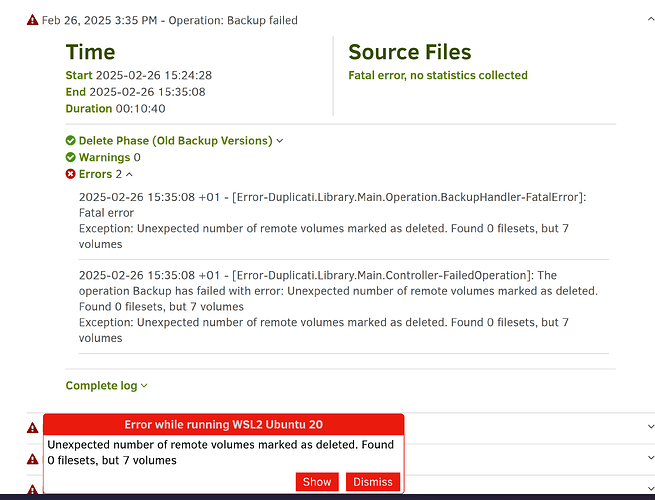

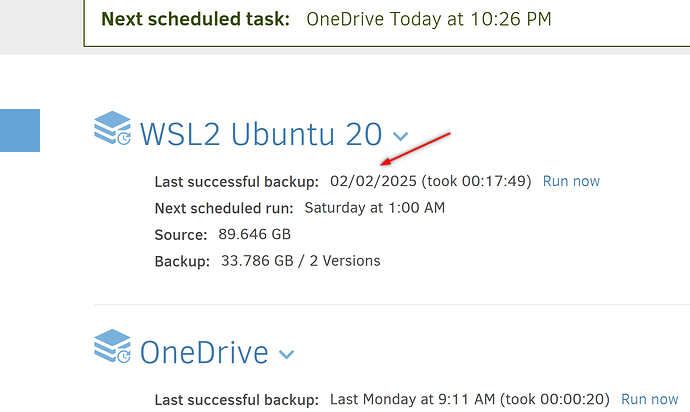

I’ll provide the first error’s “Complete log” JSON below, though I’m not sure how helpful it will be. My setup is kinda unusual, and I may have brought this on myself. Over the years it proved so easy to bloat my Linux OS that I started leaning heavily on distrobox to run app instances in podman containers, so basically any app that installs a lot of dependencies gets “containerized.” Duplicati is one of these. It has run flawlessly that way for years, so I don’t think the container is the issue. The more likely root cause is my increasingly careless habit of putting the computer into standby while a backup job is running or finishing up.

I run backups frequently enough that an occasional failure doesn’t bother me. This, however, was the first time I noticed consecutive failures: Duplicati was unable to recover from the initial failure on its own. I am glad it was easy to solve, but the lesson for me is to adjust my backup schedules so they avoid the times I tend to put the computer to sleep or dual boot into Windows. That way I neither have to wait for a job to finish nor interrupt it.

{

"DeletedFiles": 335,

"DeletedFolders": 60,

"ModifiedFiles": 616,

"ExaminedFiles": 390175,

"OpenedFiles": 1160,

"AddedFiles": 544,

"SizeOfModifiedFiles": 6460082964,

"SizeOfAddedFiles": 278793571,

"SizeOfExaminedFiles": 318915143297,

"SizeOfOpenedFiles": 6785078874,

"NotProcessedFiles": 0,

"AddedFolders": 43,

"TooLargeFiles": 0,

"FilesWithError": 0,

"ModifiedFolders": 0,

"ModifiedSymlinks": 0,

"AddedSymlinks": 0,

"DeletedSymlinks": 0,

"PartialBackup": false,

"Dryrun": false,

"MainOperation": "Backup",

"CompactResults": null,

"VacuumResults": null,

"DeleteResults": {

"DeletedSetsActualLength": 0,

"DeletedSets": null,

"Dryrun": false,

"MainOperation": "Delete",

"CompactResults": null,

"ParsedResult": "Success",

"Interrupted": false,

"Version": "2.0.7.103 (2.0.7.103_canary_2024-04-19)",

"EndTime": "0001-01-01T00:00:00",

"BeginTime": "2025-01-04T19:05:42.088823Z",

"Duration": "00:00:00",

"MessagesActualLength": 0,

"WarningsActualLength": 0,

"ErrorsActualLength": 0,

"Messages": null,

"Warnings": null,

"Errors": null,

"BackendStatistics": {

"RemoteCalls": 48,

"BytesUploaded": 2262273683,

"BytesDownloaded": 0,

"FilesUploaded": 47,

"FilesDownloaded": 0,

"FilesDeleted": 0,

"FoldersCreated": 0,

"RetryAttempts": 0,

"UnknownFileSize": 0,

"UnknownFileCount": 0,

"KnownFileCount": 10353,

"KnownFileSize": 532683907015,

"LastBackupDate": "2025-01-03T22:22:18-05:00",

"BackupListCount": 66,

"TotalQuotaSpace": 0,

"FreeQuotaSpace": 0,

"AssignedQuotaSpace": -1,

"ReportedQuotaError": false,

"ReportedQuotaWarning": false,

"MainOperation": "Backup",

"ParsedResult": "Success",

"Interrupted": false,

"Version": "2.0.7.103 (2.0.7.103_canary_2024-04-19)",

"EndTime": "0001-01-01T00:00:00",

"BeginTime": "2025-01-04T18:22:41.01632Z",

"Duration": "00:00:00",

"MessagesActualLength": 0,

"WarningsActualLength": 0,

"ErrorsActualLength": 0,

"Messages": null,

"Warnings": null,

"Errors": null

}

},

"RepairResults": null,

"TestResults": null,

"ParsedResult": "Fatal",

"Interrupted": false,

"Version": "2.0.7.103 (2.0.7.103_canary_2024-04-19)",

"EndTime": "2025-01-04T19:07:06.701142Z",

"BeginTime": "2025-01-04T18:22:41.016313Z",

"Duration": "00:44:25.6848290",

"MessagesActualLength": 106,

"WarningsActualLength": 0,

"ErrorsActualLength": 2,

"Messages": [

"2025-01-04 13:22:41 -05 - [Information-Duplicati.Library.Main.Controller-StartingOperation]: The operation Backup has started",

"2025-01-04 13:29:35 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: List - Started: ()",

"2025-01-04 13:29:39 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: List - Completed: (10.11 KB)",

"2025-01-04 13:29:40 -05 - [Information-Duplicati.Library.Main.Operation.FilelistProcessor-KeepIncompleteFile]: keeping protected incomplete remote file listed as Uploading: duplicati-20250104T032218Z.dlist.zip.aes",

"2025-01-04 13:29:43 -05 - [Information-Duplicati.Library.Main.Operation.Backup.UploadSyntheticFilelist-PreviousBackupFilelistUpload]: Uploading filelist from previous interrupted backup",

"2025-01-04 13:30:19 -05 - [Information-Duplicati.Library.Main.Operation.Backup.RecreateMissingIndexFiles-RecreateMissingIndexFile]: Re-creating missing index file for duplicati-bc5a64c91f87c4c51a3621334afb0795f.dblock.zip.aes",

"2025-01-04 13:30:20 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Started: duplicati-i3d48a5d4f335426d85ce9239852bbbd3.dindex.zip.aes (204.47 KB)",

"2025-01-04 13:30:22 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Completed: duplicati-i3d48a5d4f335426d85ce9239852bbbd3.dindex.zip.aes (204.47 KB)",

"2025-01-04 13:42:59 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Started: duplicati-beb23e6393348447194f51ea0c4f26201.dblock.zip.aes (99.94 MB)",

"2025-01-04 13:42:59 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Started: duplicati-b4772d13cf1544c6aa22c98368096704b.dblock.zip.aes (99.95 MB)",

"2025-01-04 13:43:03 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Started: duplicati-b2dbf8b8f58644395b63b1a65d70db9ef.dblock.zip.aes (99.94 MB)",

"2025-01-04 13:47:02 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Completed: duplicati-beb23e6393348447194f51ea0c4f26201.dblock.zip.aes (99.94 MB)",

"2025-01-04 13:47:02 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Started: duplicati-i3fe93b5f71d241908c6a3742d9c982b9.dindex.zip.aes (280.01 KB)",

"2025-01-04 13:47:07 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Completed: duplicati-i3fe93b5f71d241908c6a3742d9c982b9.dindex.zip.aes (280.01 KB)",

"2025-01-04 13:47:07 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Started: duplicati-bb1074b0a515d4317bcea0a86d0abb839.dblock.zip.aes (99.95 MB)",

"2025-01-04 13:48:23 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Completed: duplicati-b2dbf8b8f58644395b63b1a65d70db9ef.dblock.zip.aes (99.94 MB)",

"2025-01-04 13:48:24 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Started: duplicati-i4798b8e914d046c5aa305ec6fc3130c6.dindex.zip.aes (94.56 KB)",

"2025-01-04 13:48:25 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Completed: duplicati-i4798b8e914d046c5aa305ec6fc3130c6.dindex.zip.aes (94.56 KB)",

"2025-01-04 13:48:25 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Started: duplicati-b20bda26db13a4afe9b2c549332c3f553.dblock.zip.aes (99.93 MB)",

"2025-01-04 13:48:36 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Completed: duplicati-b4772d13cf1544c6aa22c98368096704b.dblock.zip.aes (99.95 MB)"

],

"Warnings": [],

"Errors": [

"2025-01-04 14:06:52 -05 - [Error-Duplicati.Library.Main.Operation.BackupHandler-FatalError]: Fatal error\nException: Unexpected number of remote volumes marked as deleted. Found 2 filesets, but 1 volumes",

"2025-01-04 14:07:06 -05 - [Error-Duplicati.Library.Main.Controller-FailedOperation]: The operation Backup has failed with error: Unexpected number of remote volumes marked as deleted. Found 2 filesets, but 1 volumes\nException: Unexpected number of remote volumes marked as deleted. Found 2 filesets, but 1 volumes"

],

"BackendStatistics": {

"RemoteCalls": 48,

"BytesUploaded": 2262273683,

"BytesDownloaded": 0,

"FilesUploaded": 47,

"FilesDownloaded": 0,

"FilesDeleted": 0,

"FoldersCreated": 0,

"RetryAttempts": 0,

"UnknownFileSize": 0,

"UnknownFileCount": 0,

"KnownFileCount": 10353,

"KnownFileSize": 532683907015,

"LastBackupDate": "2025-01-03T22:22:18-05:00",

"BackupListCount": 66,

"TotalQuotaSpace": 0,

"FreeQuotaSpace": 0,

"AssignedQuotaSpace": -1,

"ReportedQuotaError": false,

"ReportedQuotaWarning": false,

"MainOperation": "Backup",

"ParsedResult": "Success",

"Interrupted": false,

"Version": "2.0.7.103 (2.0.7.103_canary_2024-04-19)",

"EndTime": "0001-01-01T00:00:00",

"BeginTime": "2025-01-04T18:22:41.01632Z",

"Duration": "00:00:00",

"MessagesActualLength": 0,

"WarningsActualLength": 0,

"ErrorsActualLength": 0,

"Messages": null,

"Warnings": null,

"Errors": null

}

}
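For anyone who would rather not scroll through a log like this, here is a minimal Python sketch that pulls out the fields that matter for triage. It is not part of Duplicati; `summarize_log` and `SAMPLE` are names made up for illustration, and the sample is a trimmed copy of the log above (same keys and values, most entries left out). It assumes the top-level `BeginTime` and `EndTime` carry fractional seconds and a trailing `Z`, as they do here, and it does not look at the nested `DeleteResults` block.

```python
import json
from datetime import datetime

# Matches the top-level BeginTime/EndTime values in the log above (assumption:
# fractional seconds and a trailing "Z" are always present at the top level).
TIME_FORMAT = "%Y-%m-%dT%H:%M:%S.%fZ"


def summarize_log(log_text):
    """Return the fields most useful for triaging a failed job."""
    log = json.loads(log_text)
    begin = datetime.strptime(log["BeginTime"], TIME_FORMAT)
    end = datetime.strptime(log["EndTime"], TIME_FORMAT)
    # Each error entry ends with "Exception: <message>". Keep only the message
    # and drop duplicates while preserving order. "Errors" can be null.
    messages = [e.rsplit("Exception: ", 1)[-1] for e in log.get("Errors") or []]
    return {
        "operation": log["MainOperation"],
        "result": log["ParsedResult"],
        "elapsed_seconds": (end - begin).total_seconds(),
        "files_uploaded": log["BackendStatistics"]["FilesUploaded"],
        "errors": list(dict.fromkeys(messages)),
    }


# Raw string so the "\n" escapes inside the JSON strings reach the JSON parser intact.
SAMPLE = r"""
{
  "MainOperation": "Backup",
  "ParsedResult": "Fatal",
  "BeginTime": "2025-01-04T18:22:41.016313Z",
  "EndTime": "2025-01-04T19:07:06.701142Z",
  "Errors": [
    "2025-01-04 14:06:52 -05 - [Error-Duplicati.Library.Main.Operation.BackupHandler-FatalError]: Fatal error\nException: Unexpected number of remote volumes marked as deleted. Found 2 filesets, but 1 volumes",
    "2025-01-04 14:07:06 -05 - [Error-Duplicati.Library.Main.Controller-FailedOperation]: The operation Backup has failed with error: Unexpected number of remote volumes marked as deleted. Found 2 filesets, but 1 volumes\nException: Unexpected number of remote volumes marked as deleted. Found 2 filesets, but 1 volumes"
  ],
  "BackendStatistics": {"FilesUploaded": 47}
}
"""

if __name__ == "__main__":
    for key, value in summarize_log(SAMPLE).items():
        print(f"{key}: {value}")
```

The two entries under `Errors` report the same exception, so the summary collapses them into one message. The inner `ParsedResult` values say "Success" even though the job as a whole ended as "Fatal", which is why the sketch reads only the top-level field.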