Duplicati 2.1.0.107 on Windows 10, backing up to Backblaze B2 (native, not S3), did some strange things.

Allowed retries are set to 0 to invite issues, and flaky Wi-Fi probably started this.

Evidence of a network problem was pretty solid, with speeds a fraction of what was expected.

The fix just took putting the system to sleep and letting it wake, but the damage was done.

My logging and error detection plan worked imperfectly, but there's still a lot to go on.

First failed job log has times of:

Start 2025-02-16 07:20:55

End 2025-02-16 07:35:15

Duration 00:14:20

Messages in server Profiling log with BackendEvent and BackendUploader tags are:

2025-02-16 07:21:04 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: List - Started: ()

2025-02-16 07:21:06 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: List - Completed: (493 bytes)

2025-02-16 07:23:56 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Started: duplicati-be476ffad0d544711a050b3a356b03f60.dblock.zip.aes (50.01 MiB)

2025-02-16 07:23:56 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Started: duplicati-bed1695fdb3d64f728d1e0751a8b0ee6b.dblock.zip.aes (50.03 MiB)

2025-02-16 07:24:57 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Started: duplicati-b125bf82ede184f1bbfc31eb05ca2783e.dblock.zip.aes (23.25 MiB)

2025-02-16 07:25:28 -05 - [Retry-Duplicati.Library.Main.Operation.Backup.BackendUploader-RetryPut]: Operation Put with file duplicati-b125bf82ede184f1bbfc31eb05ca2783e.dblock.zip.aes attempt 1 of 1 failed with message: The operation was canceled.

2025-02-16 07:34:25 -05 - [Profiling-Duplicati.Library.Main.Operation.Backup.BackendUploader-UploadSpeed]: Uploaded 50.01 MiB in 00:10:29.2687382, 81.39 KiB/s

2025-02-16 07:34:25 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Completed: duplicati-be476ffad0d544711a050b3a356b03f60.dblock.zip.aes (50.01 MiB)

2025-02-16 07:34:25 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Started: duplicati-i2d1174abd6ca41519e7a7dff8383afb0.dindex.zip.aes (27.25 KiB)

2025-02-16 07:34:27 -05 - [Profiling-Duplicati.Library.Main.Operation.Backup.BackendUploader-UploadSpeed]: Uploaded 50.03 MiB in 00:10:30.8189377, 81.21 KiB/s

2025-02-16 07:34:27 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Completed: duplicati-bed1695fdb3d64f728d1e0751a8b0ee6b.dblock.zip.aes (50.03 MiB)

2025-02-16 07:34:27 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Started: duplicati-i85a7b316825e4b6bb617b6c0db4bd59a.dindex.zip.aes (35.01 KiB)

2025-02-16 07:34:29 -05 - [Profiling-Duplicati.Library.Main.Operation.Backup.BackendUploader-UploadSpeed]: Uploaded 27.25 KiB in 00:00:03.6168321, 7.53 KiB/s

2025-02-16 07:34:29 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Completed: duplicati-i2d1174abd6ca41519e7a7dff8383afb0.dindex.zip.aes (27.25 KiB)

2025-02-16 07:34:30 -05 - [Profiling-Duplicati.Library.Main.Operation.Backup.BackendUploader-UploadSpeed]: Uploaded 35.01 KiB in 00:00:02.9578903, 11.84 KiB/s

2025-02-16 07:34:30 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Completed: duplicati-i85a7b316825e4b6bb617b6c0db4bd59a.dindex.zip.aes (35.01 KiB)

2025-02-16 07:34:30 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Started: duplicati-20250216T122100Z.dlist.zip.aes (948.67 KiB)

2025-02-16 07:34:38 -05 - [Profiling-Duplicati.Library.Main.Operation.Backup.BackendUploader-UploadSpeed]: Uploaded 948.67 KiB in 00:00:07.7408506, 122.55 KiB/s

2025-02-16 07:34:38 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Completed: duplicati-20250216T122100Z.dlist.zip.aes (948.67 KiB)

2025-02-16 07:34:49 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Delete - Started: duplicati-20240216T132340Z.dlist.zip.aes (938.00 KiB)

2025-02-16 07:34:52 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Delete - Completed: duplicati-20240216T132340Z.dlist.zip.aes (938.00 KiB)

2025-02-16 07:34:52 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Delete - Started: duplicati-20250202T122003Z.dlist.zip.aes (947.98 KiB)

2025-02-16 07:34:53 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Delete - Completed: duplicati-20250202T122003Z.dlist.zip.aes (947.98 KiB)

2025-02-16 07:34:55 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Delete - Started: duplicati-b3df2619cb6264febba5affb69d66510c.dblock.zip.aes (16.93 MiB)

2025-02-16 07:34:56 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Delete - Completed: duplicati-b3df2619cb6264febba5affb69d66510c.dblock.zip.aes (16.93 MiB)

2025-02-16 07:34:56 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Delete - Started: duplicati-i89772384028847d28da08e28ad9cfcc7.dindex.zip.aes (57.37 KiB)

2025-02-16 07:34:57 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Delete - Completed: duplicati-i89772384028847d28da08e28ad9cfcc7.dindex.zip.aes (57.37 KiB)

2025-02-16 07:34:58 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Started: duplicati-verification.json (102.96 KiB)

2025-02-16 07:35:15 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Failed: duplicati-verification.json (102.96 KiB)
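To put numbers on "a fraction of the expected" speed, the UploadSpeed lines can be pulled out and rechecked. This is a hypothetical helper, not part of Duplicati, and it assumes the exact line format shown above (size with a KiB/MiB/GiB unit, duration as hh:mm:ss.fffffff, then a KiB/s rate):

```python
import re

# Matches UploadSpeed lines such as
# "... Uploaded 50.01 MiB in 00:10:29.2687382, 81.39 KiB/s"
UPLOAD_SPEED = re.compile(
    r"Uploaded (?P<size>[\d.]+) (?P<unit>[KMG]iB) in "
    r"(?P<h>\d+):(?P<m>\d+):(?P<s>[\d.]+), (?P<rate>[\d.]+) KiB/s"
)
KIB_PER_UNIT = {"KiB": 1, "MiB": 1024, "GiB": 1024 * 1024}


def upload_speeds(lines):
    """Yield (size_kib, seconds, computed_kib_per_s, logged_kib_per_s) per UploadSpeed line."""
    for line in lines:
        m = UPLOAD_SPEED.search(line)
        if not m:
            continue  # not an UploadSpeed line
        size_kib = float(m["size"]) * KIB_PER_UNIT[m["unit"]]
        seconds = int(m["h"]) * 3600 + int(m["m"]) * 60 + float(m["s"])
        yield size_kib, seconds, size_kib / seconds, float(m["rate"])
```

Because the logged sizes are rounded to two decimals, the recomputed rate can differ slightly from the logged one; for the two 50 MiB dblocks above it comes out at roughly 81 KiB/s either way.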

The detail on the dblock error was:

```
2025-02-16 07:24:57 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Started: duplicati-b125bf82ede184f1bbfc31eb05ca2783e.dblock.zip.aes (23.25 MiB)
...
2025-02-16 07:25:28 -05 - [Retry-Duplicati.Library.Main.Operation.Backup.BackendUploader-RetryPut]: Operation Put with file duplicati-b125bf82ede184f1bbfc31eb05ca2783e.dblock.zip.aes attempt 1 of 1 failed with message: The operation was canceled.
System.Threading.Tasks.TaskCanceledException: The operation was canceled.
 ---> System.Threading.Tasks.TaskCanceledException: The operation was canceled.
 ---> System.IO.IOException: Unable to read data from the transport connection: The I/O operation has been aborted because of either a thread exit or an application request..
 ---> System.Net.Sockets.SocketException (995): The I/O operation has been aborted because of either a thread exit or an application request.
   --- End of inner exception stack trace ---
   at System.Net.Sockets.Socket.AwaitableSocketAsyncEventArgs.ThrowException(SocketError error, CancellationToken cancellationToken)
   at System.Net.Sockets.Socket.AwaitableSocketAsyncEventArgs.System.Threading.Tasks.Sources.IValueTaskSource<System.Int32>.GetResult(Int16 token)
   at System.Net.Security.SslStream.EnsureFullTlsFrameAsync[TIOAdapter](CancellationToken cancellationToken, Int32 estimatedSize)
   at System.Runtime.CompilerServices.PoolingAsyncValueTaskMethodBuilder`1.StateMachineBox`1.System.Threading.Tasks.Sources.IValueTaskSource<TResult>.GetResult(Int16 token)
   at System.Net.Security.SslStream.ReadAsyncInternal[TIOAdapter](Memory`1 buffer, CancellationToken cancellationToken)
   at System.Runtime.CompilerServices.PoolingAsyncValueTaskMethodBuilder`1.StateMachineBox`1.System.Threading.Tasks.Sources.IValueTaskSource<TResult>.GetResult(Int16 token)
   at System.Net.Http.HttpConnection.SendAsync(HttpRequestMessage request, Boolean async, CancellationToken cancellationToken)
   --- End of inner exception stack trace ---
   at System.Net.Http.HttpConnection.SendAsync(HttpRequestMessage request, Boolean async, CancellationToken cancellationToken)
   at System.Net.Http.HttpConnectionPool.SendWithVersionDetectionAndRetryAsync(HttpRequestMessage request, Boolean async, Boolean doRequestAuth, CancellationToken cancellationToken)
   at System.Net.Http.RedirectHandler.SendAsync(HttpRequestMessage request, Boolean async, CancellationToken cancellationToken)
   at Microsoft.Extensions.Http.Logging.LoggingHttpMessageHandler.<SendCoreAsync>g__Core|5_0(HttpRequestMessage request, Boolean useAsync, CancellationToken cancellationToken)
   at Microsoft.Extensions.Http.Logging.LoggingScopeHttpMessageHandler.<SendCoreAsync>g__Core|5_0(HttpRequestMessage request, Boolean useAsync, CancellationToken cancellationToken)
   at System.Net.Http.HttpClient.<SendAsync>g__Core|83_0(HttpRequestMessage request, HttpCompletionOption completionOption, CancellationTokenSource cts, Boolean disposeCts, CancellationTokenSource pendingRequestsCts, CancellationToken originalCancellationToken)
   --- End of inner exception stack trace ---
   at System.Net.Http.HttpClient.HandleFailure(Exception e, Boolean telemetryStarted, HttpResponseMessage response, CancellationTokenSource cts, CancellationToken cancellationToken, CancellationTokenSource pendingRequestsCts)
   at System.Net.Http.HttpClient.<SendAsync>g__Core|83_0(HttpRequestMessage request, HttpCompletionOption completionOption, CancellationTokenSource cts, Boolean disposeCts, CancellationTokenSource pendingRequestsCts, CancellationToken originalCancellationToken)
   at Duplicati.Library.JsonWebHelperHttpClient.GetResponseAsync(HttpRequestMessage req, CancellationToken cancellationToken)
   at Duplicati.Library.JsonWebHelperHttpClient.ReadJsonResponseAsync[T](HttpRequestMessage req, CancellationToken cancellationToken)
   at Duplicati.Library.JsonWebHelperHttpClient.GetJsonDataAsync[T](String url, CancellationToken cancellationToken, Action`1 setup)
   at Duplicati.Library.JsonWebHelperHttpClient.PostAndGetJsonDataAsync[T](String url, Object item, CancellationToken cancellationToken)
   at Duplicati.Library.Backend.Backblaze.B2.ListAsync(CancellationToken cancellationToken)
   at Duplicati.Library.Backend.Backblaze.B2.PutAsync(String remotename, Stream stream, CancellationToken cancelToken)
   at Duplicati.Library.Main.Operation.Backup.BackendUploader.DoPut(FileEntryItem item, IBackend backend, CancellationToken cancelToken)
   at Duplicati.Library.Main.Operation.Backup.BackendUploader.<>c__DisplayClass25_0.<<UploadFileAsync>b__0>d.MoveNext()
   --- End of stack trace from previous location ---
   at Duplicati.Library.Main.Operation.Backup.BackendUploader.DoWithRetry(Func`1 method, FileEntryItem item, Worker worker, CancellationToken cancelToken)
```

and was possibly caused by this new timeout (the Put started at 07:24:57 and was canceled at 07:25:28, about 31 seconds later):

```
C:\Duplicati\duplicati-2.1.0.107_canary_2025-01-17-win-x64-gui>Duplicati.CommandLine help read-write-timeout

--read-write-timeout (Timespan): Set the read/write timeout for the connection
The read/write timeout is the maximum amount of time to wait for any activity during a transfer. If no activity is
detected for this period, the connection is considered broken and the transfer is aborted. Set to 0s to disabled
* default value: 30s
```
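As I read the help text, this is an inactivity timeout, not a cap on total transfer time. A minimal Python sketch of that idea (my own illustration, not Duplicati's C# code; `read_chunk` and `write_chunk` are hypothetical async callables) restarts the clock for every chunk, so a slow but steady transfer survives while a single stall longer than the timeout aborts it:

```python
import asyncio


async def copy_with_idle_timeout(read_chunk, write_chunk, idle_timeout):
    """Copy chunks until an empty chunk (EOF) and return the byte count.

    Each read and each write gets its own idle_timeout, so the clock
    restarts whenever data moves. One stall longer than idle_timeout
    raises asyncio.TimeoutError, much like a connection being declared
    broken and the transfer aborted.
    """
    total = 0
    while True:
        chunk = await asyncio.wait_for(read_chunk(), timeout=idle_timeout)
        if not chunk:
            return total
        await asyncio.wait_for(write_chunk(chunk), timeout=idle_timeout)
        total += len(chunk)
```

The design point is that total elapsed time never matters here, only the gap between bits of activity.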

My first surprise was that it kept going after a Put failure. Didn't it use to stop?

What it wound up doing was uploading a dlist that references a dblock that wasn't there.

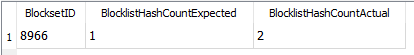

This was reported by my backup sanity checker script. Let’s look at some errors:

Missing dlist blocklist from dindex vol/ blocks {'ci8QqioewhgvToVX0PxXlWxWlVc59fOJe8XOpTsYHck=', '6PPDzlV79kzDgSc5ICBaht4nwph2hmFNYMnbSZzYF/8='}

In the database history copy from 7:25, both blocklist hashes lead to the missing file.

Missing small file data block from dindex vol/ blocks {'DFMUdE9GfSZ9koSo/tK98uF7dEdEaSZvp/kBNQ4nY/g='}

Missing metadata block from dindex vol/ blocks {'SxktK2gNItsQDoOC3aFkkLzpnZRSQNF181j0uau4BwQ='}

Both of these are also in the missing dblock file.
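A check of this kind can be sketched in Python. This is a simplified illustration, not my actual checker script. It assumes the dlist's filelist.json and the dindex vol/ entries are already decrypted and parsed, and the field names (`blocklists`, `hash`, `metahash`, `type` in the filelist, `blocks` with `hash` in vol/ entries) are my understanding of the formats, so treat them as assumptions:

```python
def classify_missing(filelist, dindex_vols):
    """Return hashes referenced by a dlist that no dindex vol/ entry lists, by kind.

    filelist: parsed filelist.json from the dlist (a list of dicts).
    dindex_vols: parsed vol/ entries from every dindex (a list of dicts).
    All field names used here are assumptions about the file formats.
    """
    known = {b["hash"] for vol in dindex_vols for b in vol.get("blocks", [])}
    missing = {"blocklist": set(), "small_file": set(), "metadata": set()}
    for entry in filelist:
        blocklists = entry.get("blocklists", [])
        missing["blocklist"].update(h for h in blocklists if h not in known)
        # Assumption: a file without blocklists fits in one block, so its
        # file hash doubles as the hash of its single data block.
        file_hash = entry.get("hash")
        if entry.get("type") == "File" and not blocklists and file_hash and file_hash not in known:
            missing["small_file"].add(file_hash)
        metahash = entry.get("metahash")
        if metahash and metahash not in known:
            missing["metadata"].add(metahash)
    return missing
```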

Duplicati tries to clean up file issues at the start of each backup. Profiling log:

2025-02-16 08:52:42 -05 - [Information-Duplicati.Library.Main.Operation.FilelistProcessor-SchedulingMissingFileForDelete]: scheduling missing file for deletion, currently listed as Uploading: duplicati-b125bf82ede184f1bbfc31eb05ca2783e.dblock.zip.aes

It doesn't actually wind up doing a delete, because the dblock was never uploaded.

I think I overwrote one historical database, but regardless, the bad dblock is gone.

The BlocklistHash table no longer has either reference pointing to the missing file.
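To repeat the hash-to-file lookup against a database copy, a query along these lines could be used from Python's sqlite3. The join path (BlocklistHash.Hash to Block.Hash, Block.VolumeID to RemoteVolume.ID) and the column names are my understanding of the local database schema, not verified here, so check them before relying on this:

```python
import sqlite3

# Assumed schema: BlocklistHash.Hash names a block, and Block.VolumeID
# points at the RemoteVolume (dblock) that should hold that block.
TRACE_SQL = """
SELECT bh.Hash, rv.Name, rv.State
FROM BlocklistHash bh
JOIN Block b ON b.Hash = bh.Hash
JOIN RemoteVolume rv ON rv.ID = b.VolumeID
WHERE bh.Hash = ?
"""


def trace_blocklist_hash(conn: sqlite3.Connection, blocklist_hash: str):
    """Return (hash, remote volume name, state) rows for one blocklist hash."""
    return conn.execute(TRACE_SQL, (blocklist_hash,)).fetchall()
```

Usage would be `trace_blocklist_hash(sqlite3.connect(path_to_db_copy), hash)`, run against a copy of the database rather than the live one. An empty result means no remaining reference to any volume.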

The dblock to dindex mappings look like this, based on the vol/ entries in each dindex:

duplicati-bed1695fdb3d64f728d1e0751a8b0ee6b.dblock.zip.aes -> duplicati-i85a7b316825e4b6bb617b6c0db4bd59a.dindex.zip.aes

duplicati-be476ffad0d544711a050b3a356b03f60.dblock.zip.aes -> duplicati-i2d1174abd6ca41519e7a7dff8383afb0.dindex.zip.aes

duplicati-b125bf82ede184f1bbfc31eb05ca2783e.dblock.zip.aes -> (none; its upload failed, and at least it seemed not to put a dindex)
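The mapping can be read mechanically from the dindex files. A Python sketch, assuming each dindex has already been decrypted to a plain zip and that its `vol/` entries are named after the dblock they describe (which is how I read them here); the helper names are hypothetical:

```python
import zipfile


def dblocks_indexed_by(dindex_zip):
    """Return the dblock names listed under vol/ in one decrypted dindex zip.

    dindex_zip may be a path or a binary file-like object.
    """
    with zipfile.ZipFile(dindex_zip) as zf:
        return [name[len("vol/"):] for name in zf.namelist()
                if name.startswith("vol/") and len(name) > len("vol/")]


def dblock_to_dindex(dindex_files):
    """Map each dblock name to the dindex file(s) that reference it.

    dindex_files: dict of dindex name -> path or binary file-like object.
    """
    mapping = {}
    for dindex_name, source in dindex_files.items():
        for dblock in dblocks_indexed_by(source):
            mapping.setdefault(dblock, []).append(dindex_name)
    return mapping
```

A dblock that never shows up as a key, like duplicati-b125bf82ede184f1bbfc31eb05ca2783e.dblock.zip.aes here, has no dindex describing it.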

So is it all fixed? Probably not, because duplicati-20250216T122100Z.dlist.zip.aes still has references into the dblock that didn't get uploaded (while the dlist did).

I used BackendTool to get a current copy, got its filelist.json, and found this:

"blocklists":["ci8QqioewhgvToVX0PxXlWxWlVc59fOJe8XOpTsYHck="]

"blocklists":["6PPDzlV79kzDgSc5ICBaht4nwph2hmFNYMnbSZzYF/8="]

So I suspect Recreate would be messy. I won’t immediately try, as B2 added this:

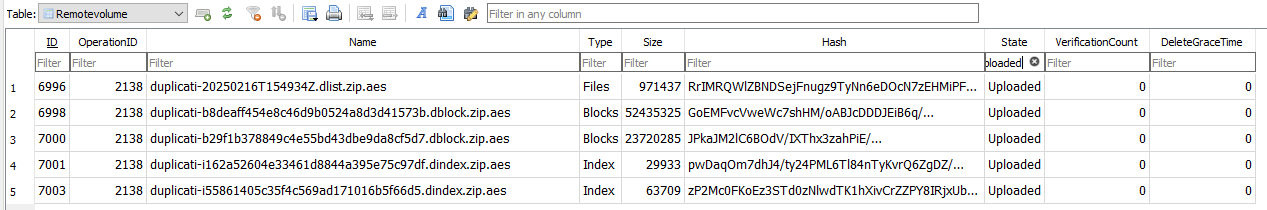

Found 5 files that are missing from the remote storage, please run repair

Red popup error on a backup after getting the Wi-Fi working again. Live log has:

Feb 16, 2025 10:55 AM: The operation Test has failed with error: Found 5 files that are missing from the remote storage, please run repair

Feb 16, 2025 10:55 AM: Found 5 files that are missing from the remote storage, please run repair

Feb 16, 2025 10:55 AM: Missing file: duplicati-i55861405c35f4c569ad171016b5f66d5.dindex.zip.aes

Feb 16, 2025 10:55 AM: Missing file: duplicati-i162a52604e33461d8844a395e75c97df.dindex.zip.aes

Feb 16, 2025 10:55 AM: Missing file: duplicati-b29f1b378849c4e55bd43dbe9da8cf5d7.dblock.zip.aes

Feb 16, 2025 10:55 AM: Missing file: duplicati-b8deaff454e8c46d9b0524a8d3d41573b.dblock.zip.aes

Feb 16, 2025 10:55 AM: Missing file: duplicati-20250216T154934Z.dlist.zip.aes

Profiling log has:

2025-02-16 10:51:41 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Started: duplicati-i55861405c35f4c569ad171016b5f66d5.dindex.zip.aes (62.22 KiB)

2025-02-16 10:51:41 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Completed: duplicati-i55861405c35f4c569ad171016b5f66d5.dindex.zip.aes (62.22 KiB)

2025-02-16 10:51:48 -05 - [Warning-Duplicati.Library.Main.Operation.FilelistProcessor-MissingFile]: Missing file: duplicati-i55861405c35f4c569ad171016b5f66d5.dindex.zip.aes

2025-02-16 10:55:56 -05 - [Warning-Duplicati.Library.Main.Operation.FilelistProcessor-MissingFile]: Missing file: duplicati-i55861405c35f4c569ad171016b5f66d5.dindex.zip.aes

2025-02-16 10:51:17 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Started: duplicati-i162a52604e33461d8844a395e75c97df.dindex.zip.aes (29.23 KiB)

2025-02-16 10:51:17 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Completed: duplicati-i162a52604e33461d8844a395e75c97df.dindex.zip.aes (29.23 KiB)

2025-02-16 10:51:48 -05 - [Warning-Duplicati.Library.Main.Operation.FilelistProcessor-MissingFile]: Missing file: duplicati-i162a52604e33461d8844a395e75c97df.dindex.zip.aes

2025-02-16 10:55:56 -05 - [Warning-Duplicati.Library.Main.Operation.FilelistProcessor-MissingFile]: Missing file: duplicati-i162a52604e33461d8844a395e75c97df.dindex.zip.aes

2025-02-16 10:51:36 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Started: duplicati-b29f1b378849c4e55bd43dbe9da8cf5d7.dblock.zip.aes (22.62 MiB)

2025-02-16 10:51:40 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Completed: duplicati-b29f1b378849c4e55bd43dbe9da8cf5d7.dblock.zip.aes (22.62 MiB)

2025-02-16 10:51:48 -05 - [Warning-Duplicati.Library.Main.Operation.FilelistProcessor-MissingFile]: Missing file: duplicati-b29f1b378849c4e55bd43dbe9da8cf5d7.dblock.zip.aes

2025-02-16 10:55:56 -05 - [Warning-Duplicati.Library.Main.Operation.FilelistProcessor-MissingFile]: Missing file: duplicati-b29f1b378849c4e55bd43dbe9da8cf5d7.dblock.zip.aes

2025-02-16 10:50:54 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Started: duplicati-b8deaff454e8c46d9b0524a8d3d41573b.dblock.zip.aes (50.01 MiB)

2025-02-16 10:51:11 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Completed: duplicati-b8deaff454e8c46d9b0524a8d3d41573b.dblock.zip.aes (50.01 MiB)

2025-02-16 10:51:48 -05 - [Warning-Duplicati.Library.Main.Operation.FilelistProcessor-MissingFile]: Missing file: duplicati-b8deaff454e8c46d9b0524a8d3d41573b.dblock.zip.aes

2025-02-16 10:55:56 -05 - [Warning-Duplicati.Library.Main.Operation.FilelistProcessor-MissingFile]: Missing file: duplicati-b8deaff454e8c46d9b0524a8d3d41573b.dblock.zip.aes

2025-02-16 10:51:41 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Started: duplicati-20250216T154934Z.dlist.zip.aes (948.67 KiB)

2025-02-16 10:51:42 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Completed: duplicati-20250216T154934Z.dlist.zip.aes (948.67 KiB)

2025-02-16 10:51:48 -05 - [Warning-Duplicati.Library.Main.Operation.FilelistProcessor-MissingFile]: Missing file: duplicati-20250216T154934Z.dlist.zip.aes

2025-02-16 10:55:56 -05 - [Warning-Duplicati.Library.Main.Operation.FilelistProcessor-MissingFile]: Missing file: duplicati-20250216T154934Z.dlist.zip.aes

I can’t find any of those files in the Backblaze B2 web UI for the bucket and folder either.

This isn’t the sort of thing I usually see Duplicati or B2 native doing, so it’s pretty odd.

EDIT 1:

Here are the Put - Completed events. It seemingly finished 7 backup files successfully, but 5 of them are missing:

2025-02-16 10:51:11 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Completed: duplicati-b8deaff454e8c46d9b0524a8d3d41573b.dblock.zip.aes (50.01 MiB)

2025-02-16 10:51:16 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Completed: duplicati-b5a714fb2fee94bf1b6fb131d48d23a65.dblock.zip.aes (49.98 MiB)

2025-02-16 10:51:17 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Completed: duplicati-ib1a923650b104045a91fb8992fb81af1.dindex.zip.aes (24.62 KiB)

2025-02-16 10:51:17 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Completed: duplicati-i162a52604e33461d8844a395e75c97df.dindex.zip.aes (29.23 KiB)

2025-02-16 10:51:40 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Completed: duplicati-b29f1b378849c4e55bd43dbe9da8cf5d7.dblock.zip.aes (22.62 MiB)

2025-02-16 10:51:41 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Completed: duplicati-i55861405c35f4c569ad171016b5f66d5.dindex.zip.aes (62.22 KiB)

2025-02-16 10:51:42 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Completed: duplicati-20250216T154934Z.dlist.zip.aes (948.67 KiB)

2025-02-16 10:51:47 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Completed: duplicati-verification.json (104.96 KiB)

These backup data files actually are around, according to the B2 web UI:

duplicati-b5a714fb2fee94bf1b6fb131d48d23a65.dblock.zip.aes

duplicati-ib1a923650b104045a91fb8992fb81af1.dindex.zip.aes

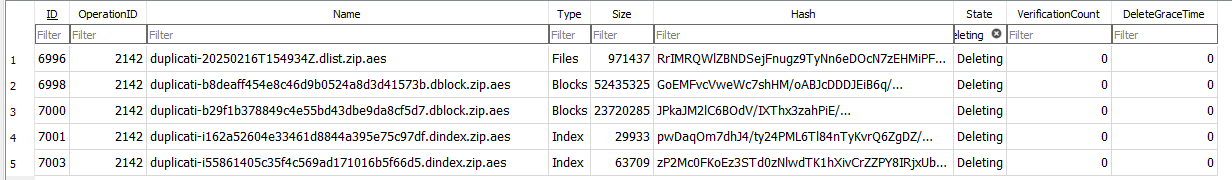
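The comparison above can be scripted: collect every name with a `Put - Completed` event, then subtract the names actually present in a bucket listing (from the B2 web UI or any listing you trust). A Python sketch assuming the log line format shown above; how the listing is obtained is left open, and duplicati-verification.json will also be reported unless it is included in that listing:

```python
import re

# Matches "... Backend event: Put - Completed: <name> (<size>)"
PUT_COMPLETED = re.compile(r"Backend event: Put - Completed: (\S+) \(")


def completed_but_absent(log_lines, remote_names):
    """Return names logged as Put - Completed that are absent from remote_names, in log order."""
    present = set(remote_names)
    absent = []
    for line in log_lines:
        m = PUT_COMPLETED.search(line)
        if m and m.group(1) not in present and m.group(1) not in absent:
            absent.append(m.group(1))
    return absent
```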

EDIT 2:

As expected, the DB after that backup shows the five missing files in State Uploaded, never reaching Verified, which is the State of the two that actually uploaded. There was a recent forum topic:

Random files not found on Backblaze target, except that it used B2 S3 and seemed to show the files actually there. In my case, even a new login to the Backblaze site in the browser doesn't show them.

duplicati-b8deaff454e8c46d9b0524a8d3d41573b.dblock.zip.aes is roughly 50 MB, and its upload duration seems to reflect that compared to the others. It timed like a real upload, but where did the file go?
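To look at those States in a database copy, a query like this lists remote volumes in chosen States. The RemoteVolume table and its Name and State columns are my reading of the local database schema, so treat them as assumptions:

```python
import sqlite3


def volumes_in_states(conn: sqlite3.Connection, states):
    """Return (Name, State) rows from RemoteVolume whose State is in states, sorted by Name."""
    placeholders = ",".join("?" for _ in states)
    sql = ("SELECT Name, State FROM RemoteVolume "
           f"WHERE State IN ({placeholders}) ORDER BY Name")
    return conn.execute(sql, tuple(states)).fetchall()
```

In this case, `volumes_in_states(conn, ["Uploaded", "Verified"])` would be expected to show the five missing files as Uploaded and the two present ones as Verified.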

EDIT 3:

Here are the uploads of the two files that showed up, to see if the Put times give any clues:

2025-02-16 10:50:56 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Started: duplicati-b5a714fb2fee94bf1b6fb131d48d23a65.dblock.zip.aes (49.98 MiB)

2025-02-16 10:51:16 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Completed: duplicati-b5a714fb2fee94bf1b6fb131d48d23a65.dblock.zip.aes (49.98 MiB)

2025-02-16 10:51:17 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Started: duplicati-ib1a923650b104045a91fb8992fb81af1.dindex.zip.aes (24.62 KiB)

2025-02-16 10:51:17 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Completed: duplicati-ib1a923650b104045a91fb8992fb81af1.dindex.zip.aes (24.62 KiB)
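Pairing each `Put - Started` with its `Put - Completed` gives per-file durations and rough rates, which makes the missing and present uploads easier to compare. A Python sketch assuming the log format shown here; timestamps have one-second resolution, so small files get no usable rate:

```python
import re
from datetime import datetime

EVENT = re.compile(
    r"^(?P<ts>\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2}) \S+ - .*"
    r"Backend event: Put - (?P<kind>Started|Completed): (?P<name>\S+) "
    r"\((?P<size>[\d.]+) (?P<unit>KiB|MiB)\)"
)
KIB = {"KiB": 1, "MiB": 1024}


def put_durations(lines):
    """Return {name: (seconds, kib_per_s)} for files with both Started and Completed events."""
    started = {}
    result = {}
    for line in lines:
        m = EVENT.search(line)
        if not m:
            continue
        ts = datetime.strptime(m["ts"], "%Y-%m-%d %H:%M:%S")
        if m["kind"] == "Started":
            started[m["name"]] = ts
        elif m["name"] in started:
            seconds = (ts - started[m["name"]]).total_seconds()
            size_kib = float(m["size"]) * KIB[m["unit"]]
            # Rate is None when start and end land in the same logged second.
            result[m["name"]] = (seconds, size_kib / seconds if seconds else None)
    return result
```

From the lines in this post, the missing 50.01 MiB dblock took 17 seconds (10:50:54 to 10:51:11), and the 49.98 MiB one that did arrive took 20 seconds (10:50:56 to 10:51:16).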

It's not clear to me. Maybe someone else's look will be better, but passes and fails seem intermixed.

BackendTest run is completely fine at default settings. Google isn't finding a burst of complaints about missing files from Backblaze B2 uploads, so this second part of the issue is still pretty mysterious.

If need be, the focus can be on the first part, though I don't know whether it could have caused the second. There's no network trace to help with the second, but TrayIcon has no lingering connections with B2.