Thank you for the advice. I re-ran the backup with verbose logging, and the compact function does seem to be enabled, but it’s only basing it on a very small percentage of the files in the S3 folder:

2025-03-05 07:57:01 +01 - [Information-Duplicati.Library.Main.Operation.DeleteHandler:RetentionPolicy-StartCheck]: Start checking if backups can be removed

2025-03-05 07:57:01 +01 - [Information-Duplicati.Library.Main.Operation.DeleteHandler:RetentionPolicy-FramesAndIntervals]: Time frames and intervals pairs: 7.00:00:00 / 1.00:00:00, 31.00:00:00 / 7.00:00:00, 365.00:00:00 / 31.00:00:00

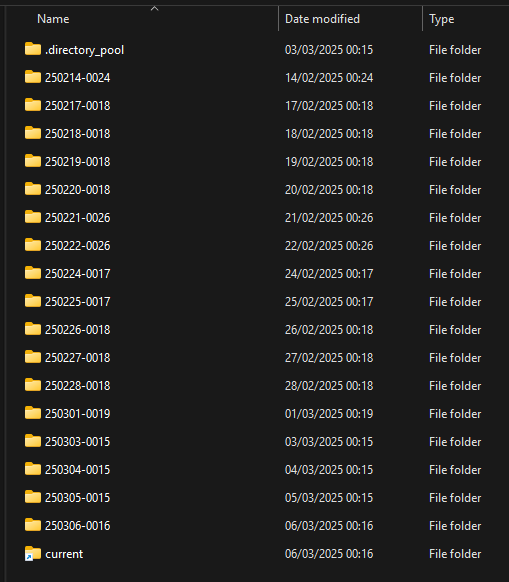

2025-03-05 07:57:01 +01 - [Information-Duplicati.Library.Main.Operation.DeleteHandler:RetentionPolicy-BackupList]: Backups to consider: 05/03/2025 05:07:04, 04/03/2025 05:06:56, 03/03/2025 03:06:39, 01/03/2025 03:06:38, 27/02/2025 03:06:36, 20/02/2025 03:06:41, 13/02/2025 03:06:09, 05/02/2025 03:06:02, 29/01/2025 03:04:49, 28/12/2024 03:12:56, 26/11/2024 03:23:26, 30/10/2024 06:36:09, 25/10/2024 12:56:49, 10/09/2024 06:07:18, 01/09/2024 06:01:54, 04/08/2024 06:03:23, 03/07/2024 06:06:21, 01/06/2024 06:03:00, 29/04/2024 06:16:49, 13/04/2024 06:26:39, 22/03/2024 06:19:37

2025-03-05 07:57:01 +01 - [Information-Duplicati.Library.Main.Operation.DeleteHandler:RetentionPolicy-BackupsToDelete]: Backups outside of all time frames and thus getting deleted:

2025-03-05 07:57:01 +01 - [Information-Duplicati.Library.Main.Operation.DeleteHandler:RetentionPolicy-AllBackupsToDelete]: All backups to delete:

2025-03-05 07:57:50 +01 - [Information-Duplicati.Library.Main.Operation.DeleteHandler-DeleteResults]: No remote filesets were deleted

2025-03-05 07:59:21 +01 - [Verbose-Duplicati.Library.Main.Database.LocalDeleteDatabase-FullyDeletableCount]: Found 0 fully deletable volume(s)

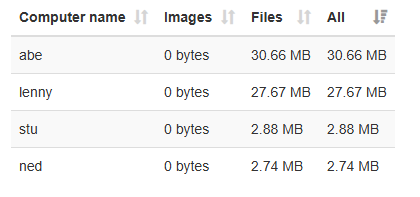

2025-03-05 07:59:21 +01 - [Verbose-Duplicati.Library.Main.Database.LocalDeleteDatabase-SmallVolumeCount]: Found 10 small volumes(s) with a total size of 79.213 MiB

2025-03-05 07:59:21 +01 - [Verbose-Duplicati.Library.Main.Database.LocalDeleteDatabase-WastedSpaceVolumes]: Found 1335 volume(s) with a total of 10.31% wasted space (121.570 GiB of 1.152 TiB)
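As a sanity check on that last line, here is a rough Python sketch that recomputes the wasted-space percentage from the two sizes in the log message. The 25% figure is my assumption about the default `--threshold` value and should be checked against the options actually set on the job; the function names are just for illustration.

```python
import re

# Log message copied from the WastedSpaceVolumes line above.
LINE = ("Found 1335 volume(s) with a total of 10.31% wasted space "
        "(121.570 GiB of 1.152 TiB)")

# Binary units, as used in the log output.
UNITS = {"B": 1, "KiB": 1024, "MiB": 1024**2, "GiB": 1024**3, "TiB": 1024**4}


def to_bytes(value: str, unit: str) -> float:
    """Convert a size such as ('121.570', 'GiB') to bytes."""
    return float(value) * UNITS[unit]


def wasted_percent(line: str) -> float:
    """Recompute the wasted-space percentage from '(X unit of Y unit)'."""
    match = re.search(r"\(([\d.]+) (\w+) of ([\d.]+) (\w+)\)", line)
    if match is None:
        raise ValueError("no '(wasted of total)' sizes found in line")
    wasted = to_bytes(match.group(1), match.group(2))
    total = to_bytes(match.group(3), match.group(4))
    return 100.0 * wasted / total


# ASSUMPTION: 25 is the compact threshold in percent; verify for your job.
ASSUMED_THRESHOLD_PERCENT = 25.0

pct = wasted_percent(LINE)
print(f"wasted: {pct:.2f}%")
print(f"above assumed threshold: {pct >= ASSUMED_THRESHOLD_PERCENT}")
```

The recomputed value agrees with the 10.31% in the log. If the threshold really is 25%, that would be consistent with no compact being triggered on wasted space alone, even though 1335 volumes contain some waste.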

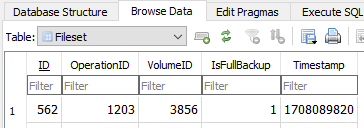

Do you think I should try a Recreate of the database, perhaps with verbose logging if I can figure that out? When I check the job log, the values seem to match what I see on Wasabi, so I’m not sure that would even have an effect:

"UnknownFileSize": 0,

"UnknownFileCount": 0,

"KnownFileCount": 33370,

"KnownFileSize": 1162292137522,
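For comparing against the Wasabi console, this small Python sketch converts the `KnownFileSize` byte count into both binary (TiB) and decimal (TB) units, since I am not certain which one a given display uses. The variable names are my own and not anything from Duplicati.

```python
# Values copied from the job log above.
known_file_size_bytes = 1162292137522
known_file_count = 33370

# Binary (TiB) and decimal (TB) views of the same byte count.
size_tib = known_file_size_bytes / 1024**4
size_tb = known_file_size_bytes / 1000**4

# Average size of a remote file, in MiB.
avg_file_mib = known_file_size_bytes / known_file_count / 1024**2

print(f"{size_tib:.3f} TiB / {size_tb:.3f} TB")
print(f"average remote file: {avg_file_mib:.1f} MiB")
```

That works out to roughly 1.057 TiB, or 1.162 TB in decimal units, so which figure matches the bucket size depends on the units shown there.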

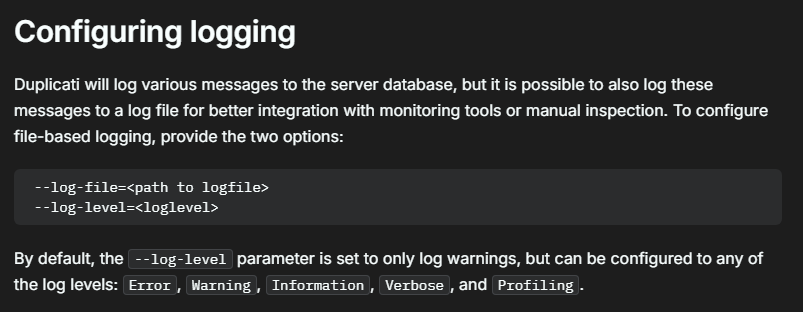

Looks like this needs updating on the documentation site to avoid a deprecation warning:

2025-03-05 07:55:21 +01 - [Warning-Duplicati.Library.Main.Controller-DeprecatedOption]: The option --log-level has been deprecated: Use the options --log-file-log-level and --console-log-level instead.