Oh, ya I was just referring to this work I have been doing:

If you could post more info about that registering missing blocks and maybe track down the exact message and post it there that would be great.

Oh, ya I was just referring to this work I have been doing:

If you could post more info about that registering missing blocks and maybe track down the exact message and post it there that would be great.

This is explained (not solved though) on the forum, and is easier to see in the GUI or with logging.

Recreate end has 3 phases. On the GUI, from 70% to 100% as three 10% steps on progress bar,

dblock files are being downloaded in search of missing information. The final 10% is exhaustive…

Sometimes exhaustive isn’t enough, and there are still missing blocks. Sometimes long work fixes.

Blocks should never go missing in an ideal world, but that’s not what we have here, so look above.

There are multiple aspects to recreate improvements. One (being worked on) is SQL performance, however another (probably awaiting developer) is whether any improvements can be made to stop exhaustive search when it becomes ridiculously long. Sometimes it may be better to give up on it…

and in my view waiting out the third one (90% and up on progress bar) is sometimes not worthwhile.

The problem is that sometimes it is. I’ve seen a post saying that it checks for success frequently, but looking at the code (to the extent I can), it looks like it doesn’t check for success in pass 3, only after.

Checking occasionally during pass 3 would speed up some successful cases, but not the futile cases.

Futile cases in an ideal world should never happen, but all we can do is knock them down as isolated.

Even doing that requires good isolation, and expert developers with time. I’ve written a lot about both.

Although how the blocklist data went bad is still a mystery (would be less so if we had more history), reproducing the final failure turns out to be easy if one manually inserts a bad blocklist into a dindex.

My goal with this was to have a small test case that would be easier to trace (even with a debugger).

The small test is easy to play with in variations, and it’s just dummy data so there’s no confidentiality.

Putting every recreate problem in one catch-all issue seems wrong, and I’d feel weird about opening another issue on basically garbage-in-garbage-out, but I’d do it. Or should we just get into this here?

The recipe summary is set blocksize=1024, backup a 1025 byte file to force a blocklist in list folder, extract the blocklist, double it with a hex editor, put it back, recreate DB, and watch the recreate error.

I also get the -1 VolumeID (which I might see a source of) and the negative Size that still puzzles me.

Do you still have the original data set with its dblock files? I was trying to see what else could be found in previous materials that you shared, and found that the extraneous blocklist referenced in image earlier is the last file in the broken dindex, and maybe this is significant. Can you look in the dblock for that block?

duplicati-b83bdf633ed5a424e929c69e5257d94f3.dblock.zip.aes would be the dblock that is indexed by duplicati-ibd07684ff5734e8f8cd14d6d7f1428a4.dindex.zip.aes that somehow got a bad entry at its end.

You can probably look for the block in the dblock file by name (which is the Base64 of hash, as dindex). Failing to find it that way, you could try by size. In dindex, the excess file is the only file with size 29632.

I’m still not seeing this blocklist in the database, and I don’t have prior databases. I showed how I save databases in a series so I can go back – I also save deleted files with rclone, but that’s harder to set up. Ideally this would be entirely reproducible with exact steps in a debug environment. We’re not so lucky.

I don’t know if this can be solved with available information, but I thought I’d fish for more, and request additional information collection (if practical) in case this happens again. If not, it might get stuck again.

Unfortunately I don’t have the original one anymore. Strange, ok, that’s not exactly what I expected that to be. It’s actually more interesting. Why would index file have a invalid reference, probably some other bug somewhere else, and not directly related to the compaction abort I were blaming so many issues with.

But, I might have already more similar cases. Yet it’s unfortunately likely that those will be a lot larger data sets, and therefore harder to analyze. That one I provided you, was so wonderful because it was really small set.

But this is probably the secondary cause, which is causing some of the backups to be extremely slow to restore, and unreliable. On top of the missing files after compaction.

But currently I don’t know any other problems than these two. Doesn’t sound too bad after all. I just yesterday run 100% restore tests again for all the sets, and these were the two cases. Missing blocks, which then can or can’t be restored and database locked error after compaction abort (clear bug!) on next backup run, which is trivial to remedy with repair.

Ref: FTP delete not atomic / verified / transactional (?) - #31 by Sami_Lehtinen

Edit continued:

Yes, it seems that we’ve already got such case. Registering missing blocks, insane amount of single threaded CPU waiting and then finally error. Yet again, this is data set which does pass backup. I actually can run test right now, I’ll do it.

Error on restore:

ErrorID: DatabaseIsBrokenConsiderPurge

Recreated database has missing blocks and 3 broken filelists. Consider using "list-broken-files" and "purge-broken-files" to purge broken data from the remote store and the database.

Checked:

Backup runs just fine, restore fails, list-broken-files good, purge-broken files good, repair didn’t fix a thing (no errors to fix) and test (I’ll update when done, this is going to take some time). Yes, test passed without errors. - Still totally logically and deadly dangerously broken.

@ts678 ts678, I can save this state temporarily, both, sqlitedb and full backkup storage. But this data set is around 5 GB. But as mentioned I can’t provide dblocks but otherwise the db contains nothing especially sensitive. Path / file / server -names, aren’t that sensitive.

I temporarily save snapshot of this state.

I’ve got again five new backup sets, all pass test, but can’t be restored. - Actually I’ve got nothing to add at this point, except it would be nice to hear that the basic logic has been fixed.

Any interrupted backup nearby? There’s one issue on GitHub with that message, but it’s thought fixed, although in release after Oct 30, 2020, so whatever you’re seeing now is probably still requiring debug.

How exactly to debug is a good question. A smaller dataset would help. Looking over history since last successful restore might be easier, so we’re again talking logs and possibly some database snapshots, although doing a recreate is going to break the change trail. Still, comparison of old vs. new might help.

Full-size zeroed-out dblock files aren’t very useful (so far) except to see the names. They can’t be read. Possibly using empty files will make the data set smaller, but it’s still not possible to recreate from them.

It would be nice if there was a developer who knew the recreate code. The original one is rarely around, however maybe some of the SQL folks who have begun looking at performance will learn the approach.

I just did run some tests, and it’s still systemically broken. Compact destroys data beyond repair. Restore fails, but test lies to the user it’s good. Probably two overlapping extremely simple issues.

Read: A classic example of broken code and basic logic.

It seems that only way to fix the data set, is full delete and recreate. It’s FUBARed in classic computing terms.

This means that there are two overlapping issues:

… But it’s pointless to discuss these matters, because it’s not rocket science …

When you say “test” I think of more than an error in normal use. Please describe test, and provide

whatever information might be useful to investigate. Just complaining (even loudly) does not solve.

As I’ve written to you before, test cases that are reproducible from scratch by developers can help,

however as I write here in the forum constantly, progress relies on volunteers. Help where you can.

Rather than post more heat than light, please consider aiding progress. You seek that, I’d assume.

Posting guidelines are rather generic, but give some good advice on general ways to interact here:

Just cross linking: FTP delete not atomic / verified / transactional (?) - #35 by Sami_Lehtinen

Tested with latest version, repair / test / restore functions still inconsistent.

Test → Ok

Successfully verified 70 remote files

Examined 70 files and found no errors

Repair → Ok

Listing remote folder ...

Restore → Fail

Recreated database has missing blocks and 13 broken filelists. Consider using "list-broken-files" and "purge-broken-files" to purge broken data from the remote store and the database.

This test was completed using the latest version ( v2.0.7.100-2.0.7.100_canary_2023-12-27 ). Yet, the issue was probably created with this specific data set by the older version. But even latest version doesn’t catch or fix or workaround that deadly treacherous problem.

Do you use the new ListAndIndexes option for the full-remote-verification ? it is supposed to allow to do a fuller test than ‘False’ (the default value) while not taking too much time (as with ‘True’) because it skips on data blocks. The idea is to select it even for normal backups, so that problems are caught straight away (when using it for backups, since the ‘all’ code used for test is not available, it’s necessary to use instead backup-test-percentage = 100 - I find this discrepancy irritating but it’s how it is for now)

makes me think that the mentioned Restore is a Direct restore from backup files, e.g. due to disaster.

Being able to test backups well enough to know they can do that is important. Does test do enough?

I don’t know exactly what it does, beyond file hash checks and some compares of blocks to expected.

There are several different test approaches. GetBrokenFilesets looks to be in *-broken-filesets.

Does The LIST-BROKEN-FILES command help any to do what the documentation claims it could do?

Checks the database for missing data that cause files not not be restoreable. Files can become unrestoreable if remote data files are defect or missing.

If you moved your database out of the way before the direct restore test, you’ll need to put it back first. Success here would be a problem detection. There’s a separate issue of how the problem got created.

EDIT:

You could actually try list-broken-files on the recreated database if you like, to see what that gets.

Early detection is of course better. I made a Python script that tries to do this, but it wants unencrypted dlist and dindex files to see if a dlist references any missing blocks (possibly the problem you’re hitting).

If this is a direct restore, which recreates part of the database, this is likely an issue that was found recently about incorrect index files. I got the same message with recreate. There is a fix, but it is not tested sufficiently:

This should prevent this incorrect index generation and also contains additional checks to detect existing damage.

We did not yet see this bug in existing backups that seem fine to the test command. If you want to test the fix, I can make a build which you can use for a direct restore. It also tells you which files are broken.

Thanks! Certainly a possible explanation. I’m not even sure list-broken-files can find a bad dindex.

It might still be worth a try, or OP could try my Python script with some extra prints finally uncommented.

and in this case (per messages) I think you mean destination dindex files, and offer repair fix directions. Anything else possible without extra or modified software of some sort? I’m running out on ideas now…

EDIT 1:

Actually, OP does databases. Can you say how DB looks after failed recreate due to corrupted dindex?

EDIT 2:

@Jojo-1000 Do you have a test case for the compact bug? Actually, this whole thing could use a little more formality (such as an Issue) after we got done discussing it on the forum (which was a bit easier).

I piggybacked it on #3202 which is also linked in the PR. There is other discussion about changes, but the error seems consistent on first glance and it is so old, we are not going to find out otherwise.

The compact bug is only theoretical now, but likely. To find reproduction steps I would do the steps to get a repair error, which we know generates incorrect index files. Then cause the relevant file to be in a compact, which removes the original index and creates a new, broken one.

Maybe this will work (off the top of my head, not tested):

--blocksize=1KB (to allow smaller files), --threshold=1, --keep-versions=1a (or filled with any text character): This is 1 byte more than will fit in one blocklistb that is roughly the same size, but different contenta to 1bIt might be that keeping only one version screws with the reproduction, then you have to fiddle with it a bit.

I decided not to pursue compact test case because it makes more sense to develop that, then test with the pull request which builds on the recent AddTempBlockListHash speedup which I’m not familiar with.

What I’m testing instead is a backup of a file of 32769 a characters with blocksize 1KB, similar to older case. The dlist has two blocklist hash references, and I’ll break the first one by editing its Base64 hash, changing the initial D to an E. You’ll see the whole reference in some of the test reports posted later…

Recreate got red popup: Recreated database has missing blocks and 1 broken filelists. Consider using “list-broken-files” and “purge-broken-files” to purge broken data from the remote store and the database

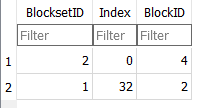

In database, File view takes me to blockset 1. Blockset table has it as length 32769, but BlocksetEntry skipped the first 32768 bytes (whose blocklist reference I broke in filelist.json), so has only final 1 byte:

One GUI test is to click Verify files which made red popup: Found inconsistency in the following files while validating database: C:\backup source\32769.txt, actual size 32769, dbsize 1, blocksetid: 1 . Run repair to fix it.

GUI Commandline test all shows that (probably from the same test underneath before downloading):

System.IO.InvalidDataException: Found inconsistency in the following files while validating database:

C:\backup source\32769.txt, actual size 32769, dbsize 1, blocksetid: 1

. Run repair to fix it.

at Duplicati.Library.Main.Database.LocalDatabase.VerifyConsistency(Int64 blocksize, Int64 hashsize, Boolean verifyfilelists, IDbTransaction transaction)

at Duplicati.Library.Main.Operation.TestHandler.Run(Int64 samples)

at Duplicati.Library.Main.Controller.RunAction[T](T result, String[]& paths, IFilter& filter, Action`1 method)

at Duplicati.Library.Main.Controller.Test(Int64 samples)

at Duplicati.CommandLine.Commands.Test(TextWriter outwriter, Action`1 setup, List`1 args, Dictionary`2 options, IFilter filter)

at Duplicati.CommandLine.Program.ParseCommandLine(TextWriter outwriter, Action`1 setup, Boolean& verboseErrors, String[] args)

at Duplicati.CommandLine.Program.RunCommandLine(TextWriter outwriter, TextWriter errwriter, Action`1 setup, String[] args)

Return code: 100

GUI Commandline list-broken-files says:

0 : 1/4/2024 6:57:59 AM (1 match(es))

C:\backup source\32769.txt (32.00 KB)

Return code: 0

Let’s see what my Python script has to say:

5 blocks seen in dindex files

1 blocklists seen in dindex files

2 blocks used by dlist files blocklists

Missing dlist blocklist from dindex vol/ blocks {'EWypxEXA7TXbM2azclwoTH+Y7rEgA9kbDa4/K3ycNdA='}

1 blocks used by dlist large files data

0 blocks used by dlist small files data

1 blocks used by dlist files metadata

2 blocks unused

1 large blocksets in dlist files

0 small blocksets in dlist files

small file blocksets that are also metadata blocksets: set()

small file blocksets that are also blocklists: set()

or if I add --verbose which is supposed to ignore less serious oddities, it looks like

5 blocks seen in dindex files

1 blocklists seen in dindex files

2 blocks used by dlist files blocklists

Missing dlist blocklist from dindex list/ cache {'EWypxEXA7TXbM2azclwoTH+Y7rEgA9kbDa4/K3ycNdA='}

Missing dlist blocklist from dindex vol/ blocks {'EWypxEXA7TXbM2azclwoTH+Y7rEgA9kbDa4/K3ycNdA='}

1 blocks used by dlist large files data

0 blocks used by dlist small files data

1 blocks used by dlist files metadata

2 blocks unused

1 large blocksets in dlist files

0 small blocksets in dlist files

small file blocksets that are also metadata blocksets: set()

small file blocksets that are also blocklists: set()

Now see if problem removal works. The test command suggested Repair, so push Repair. Red popup: The database was attempted repaired, but the repair did not complete. This database may be incomplete and the repair process is not allowed to alter remote files as that could result in data loss.

Now try purge-broken-files with console-log-level=Information to get a better view of the work:

The operation PurgeBrokenFiles has started

Found 1 broken filesets with 1 affected files, purging files

Removing entire fileset 1 as all 1/4/2024 11:57:59 AM file(s) are broken

Preventing removal of last fileset, use --allow-full-removal to allow removal ...

No remote filesets were deleted

Database was previously marked as in-progress, checking if it is valid after purging files

System.IO.InvalidDataException: Found inconsistency in the following files while validating database:

C:\backup source\32769.txt, actual size 32769, dbsize 1, blocksetid: 1

. Run repair to fix it.

at Duplicati.Library.Main.Database.LocalDatabase.VerifyConsistency(Int64 blocksize, Int64 hashsize, Boolean verifyfilelists, IDbTransaction transaction)

at Duplicati.Library.Main.Operation.PurgeBrokenFilesHandler.Run(IFilter filter)

at Duplicati.Library.Main.Controller.RunAction[T](T result, String[]& paths, IFilter& filter, Action`1 method)

at Duplicati.Library.Main.Controller.PurgeBrokenFiles(IFilter filter)

at Duplicati.CommandLine.Commands.PurgeBrokenFiles(TextWriter outwriter, Action`1 setup, List`1 args, Dictionary`2 options, IFilter filter)

at Duplicati.CommandLine.Program.ParseCommandLine(TextWriter outwriter, Action`1 setup, Boolean& verboseErrors, String[] args)

at Duplicati.CommandLine.Program.RunCommandLine(TextWriter outwriter, TextWriter errwriter, Action`1 setup, String[] args)

Return code: 100

so maybe it tried, but tripped on a safety which I’ll remove as suggested, with --allow-full-removal

The operation PurgeBrokenFiles has started

Found 1 broken filesets with 1 affected files, purging files

Removing entire fileset 1 as all 1/4/2024 11:57:59 AM file(s) are broken

Deleting 1 remote fileset(s) ...

Backend event: Delete - Started: duplicati-20240104T115759Z.dlist.zip (733 bytes)

Deleting file duplicati-20240104T115759Z.dlist.zip (733 bytes) ...

Backend event: Delete - Completed: duplicati-20240104T115759Z.dlist.zip (733 bytes)

Deleted 1 remote fileset(s)

Database was previously marked as in-progress, checking if it is valid after purging files

Purge completed, and consistency checks completed, marking database as complete

Return code: 0

So success, except purging the only file in the only version didn’t leave me with much backup left.

I’m not sure which approaches @Sami_Lehtinen prefers to pursue, but tests like these are some.

Let’s start with something that could actually make an difference.

This so much, yes please. And if there’s any confusion about the error I pasted below, you could also add specific debug rows to find out, what’s exactly wrong with it.

I’ve verified with latest canary version, and this doesn’t seem to be true. test pass, but restore doesn’t work.

OR

Restore is just broken and doesn’t work correctly and claims that backup is broken. I think that this might be likely, because the backup restore recovery tool (why we even need such program(?!?)) restored backup which was broken.

Anyway, in the latest test set with latest version I caught this from restore log, which might be the key:

Unexpected changes caused by block duplicati-b0366966e93db4129b6ce9f5798fbe9d2.dblock.zip.aes

I don’t recall (at least right now) seeing this specific error earlier. Maybe this helps catching the error or at least narrowing down the parts in the source which might cause this in restore routine? (That’s what I do with my programs, if I’ve got unexplained situations, just add debug log entries until I can figure out how the things are related).

Yet, I also verified from verify / test log, that the file was verified and passed.

Which one holds true? Either restore is broken, or verify is broken, because this mismatch can’t be true. But as said, this is still extremely dangerous and deceptive thing for 99,99% users whom don’t do full restore tests regularly. - But enough about that, I assume we all acknowledge how serious this is.

In my case the list-broken-files and purge-broken-files, basically never does anything at all. At least when run from the source. If you want I can run that in the restore system as well. Or I could just manually delete the offending dblock file (which sounds actually a very bad idea). The dblock file is also from the “initial backup set”, so I’m quite sure, there are still several valid references pointing to blocks in that file.

I did read the advanced help about this a few times. Unfortunately I really didn’t understand what it would change and how. Could you give me a pro-tip? I

If you mean that the data integrity should be verified immediately after upload. No, I haven’t enabled that. Yet, as mentioned several times. This seems to be completely logical problem, not real data corruption issue. The test should anyway reveal if the data content / hash doesn’t match, even if it’s done at alter time. But that’s not the case. Something is just logically broken.

I tried --repair-force-block-use it didn’t change anything during any of the steps in practical terms.

I already fixed the broken backups sets (deleting and recreating completely), except one, which I kept for testing, because it’s quite small dataset and quick for me to test & copy & restore.

This is a message I added to last version when I changed the recreate database process to not run very expensive queries for each block when the database is very damaged. The idea is that while we don’t know how it is damaged, we can at least accelerate the process and give information on the damaged block so it can be examined and what’s wrong understood.

Now ideally what you could do is to decrypt the file and the associated index (if you need help figuring out the related index, ask, it’s in the IndexBlockLink table - I know that I could have added this in the log message…) and post the link to the files. If it’s somewhat secret, use PM on this forum. If it’s so secret that you can’t share it with anyone, too bad.

The database recreation does a first quick step reading the list and index files and attempting to recreate the database from them. If this fails, there is the third step involving datablocks that was before last canary an extremely long step (weeks) and is still not fast (1 or 2 days).

Previously testing the database was enough to check that it could be restored without the third step only if you did a full test (‘all’ + full remote block testing). That’s very powerful, but unfortunately as slow as a full backup of all the data, it means potentially terabytes of download.

That means that it was totally unpractical to do a full testing of the backup with each backup (by specifying 100% - instead of ‘all’ when testing - + full remote block testing).

By restricting the testing to the dlist and dindex files, doing a 100% testing + ‘list and index’ for remote block, that is making adding to each backup a test step that ensures that the database can be recreated, is limited to small dlist and dindex files. This is much faster (not instantaneous - but doable in minutes for small backups, I have timed it at less than 15’ for a 3 Terabytes one on a local LAN). If the test fails, the backup will emit a warning. While this does not ensures that all data can be restored, at least it’s a first step in securing restores in catching problems early.

Here is the build (valid for 3 days):

You should be able to run a direct restore with this version, and if it is the same bug, it will print a warning with the broken index file.

If it doesn’t have a warning, you might need to do a full database recreate.

After a full recreate, you can rename that index file to remove it from the backup (like _duplicati-xxx). Then run repair and it will create this file again, but it should work correctly. After that, restore from the release version should work again. It would be helpful if you can compare the old and new index file, to see if the error is consistent with our expectations.