Great finding! I can’t imagine how much trial and error it took to find this. I was able to reproduce it with a simple text file filled with ‘a’ characters, then changing the first letter to ‘b’.

I commented out the hash check to see what goes wrong in the repaired index file:

Original blocklist v106_7c-_S7Gw2rTES3ZM-_tY8Thy__PqI4nWcFE8tg= (hex view):

CA978112CA1BBDCAFAC231B39A23DC4D

A786EFF8147C4E72B9807785AFEE48BB

Recreated (fails the hash check):

CA978112CA1BBDCAFAC231B39A23DC4D

A786EFF8147C4E72B9807785AFEE48BB

CA978112CA1BBDCAFAC231B39A23DC4D

A786EFF8147C4E72B9807785AFEE48BB

So the repair evidently duplicated the blocklist: the recreated file contains the same 32-byte hash twice.
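The hex view can be cross-checked with a few lines of Python (standard library only; the variable names are just for illustration). The sketch decodes the block hash from the last rows of the GetBlocklists output further down and shows that the original blocklist is exactly that one 32-byte hash, that the recreated file is the same 32 bytes twice, and that the entry name in the index file is simply the URL-safe base64 form (`+` becomes `-`, `/` becomes `_`) of the blocklist hash.

```python
import base64

# Block hash from the last rows of the GetBlocklists output (standard base64)
block_hash = base64.b64decode("ypeBEsobvcr6wjGzmiPcTaeG7/gUfE5yuYB3ha/uSLs=")

# Hex view of the original blocklist file (two 16-byte lines)
original = bytes.fromhex("CA978112CA1BBDCAFAC231B39A23DC4D"
                         "A786EFF8147C4E72B9807785AFEE48BB")

# Hex view of the recreated blocklist file (four 16-byte lines)
recreated = bytes.fromhex("CA978112CA1BBDCAFAC231B39A23DC4D"
                          "A786EFF8147C4E72B9807785AFEE48BB"
                          "CA978112CA1BBDCAFAC231B39A23DC4D"
                          "A786EFF8147C4E72B9807785AFEE48BB")

print(original == block_hash)         # True: the blocklist holds one block hash
print(len(original), len(recreated))  # 32 64
print(recreated == original * 2)      # True: the repair appended a second copy

# The entry name in the index file is the URL-safe base64 form of the blocklist hash
blocklist_hash = "v106/7c+/S7Gw2rTES3ZM+/tY8Thy//PqI4nWcFE8tg="
print(blocklist_hash.replace("+", "-").replace("/", "_"))
# v106_7c-_S7Gw2rTES3ZM-_tY8Thy__PqI4nWcFE8tg=
```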

Technical stuff

It comes down to this SQL query from LocalDatabase.GetBlocklists:

SELECT "A"."Hash", "C"."Hash" FROM (SELECT "BlocklistHash"."BlocksetID", "Block"."Hash", * FROM "BlocklistHash","Block" WHERE "BlocklistHash"."Hash" = "Block"."Hash" AND "Block"."VolumeID" = ?) A, "BlocksetEntry" B, "Block" C WHERE "B"."BlocksetID" = "A"."BlocksetID" AND "B"."Index" >= ("A"."Index" * 32) AND "B"."Index" < (("A"."Index" + 1) * 32) AND "C"."ID" = "B"."BlockID" ORDER BY "A"."BlocksetID", "B"."Index";

This already returns the final blocklist row twice:

| A.Hash (blocklist) | C.Hash (block) |
|---|---|
| DWypxEXA7TXbM2azclwoTH+Y7rEgA9kbDa4/K3ycNdA= | LtyYaEfiCbQBbhQabchxbTIHNQ9BaWk4LUMVOb8pLko= |
| [...] | [...] |
| DWypxEXA7TXbM2azclwoTH+Y7rEgA9kbDa4/K3ycNdA= | LtyYaEfiCbQBbhQabchxbTIHNQ9BaWk4LUMVOb8pLko= |
| v106/7c+/S7Gw2rTES3ZM+/tY8Thy//PqI4nWcFE8tg= | ypeBEsobvcr6wjGzmiPcTaeG7/gUfE5yuYB3ha/uSLs= |
| v106/7c+/S7Gw2rTES3ZM+/tY8Thy//PqI4nWcFE8tg= | ypeBEsobvcr6wjGzmiPcTaeG7/gUfE5yuYB3ha/uSLs= |

The first blocklist row appears 32 times in total. That is expected, because the file is filled with identical data.

The duplicate comes from the subquery:

SELECT "BlocklistHash"."BlocksetID", "Block"."Hash", * FROM "BlocklistHash","Block" WHERE "BlocklistHash"."Hash" = "Block"."Hash" AND "Block"."VolumeID" = ?;

| BlocksetID | Hash | BlocksetID | Index | Hash | ID | Hash | Size | VolumeID |
|---|---|---|---|---|---|---|---|---|
| 3 | DWypxEXA7TXbM2azclwoTH+Y7rEgA9kbDa4/K3ycNdA= | 3 | 0 | DWypxEXA7TXbM2azclwoTH+Y7rEgA9kbDa4/K3ycNdA= | 4 | DWypxEXA7TXbM2azclwoTH+Y7rEgA9kbDa4/K3ycNdA= | 1024 | 3 |
| 3 | v106/7c+/S7Gw2rTES3ZM+/tY8Thy//PqI4nWcFE8tg= | 3 | 1 | v106/7c+/S7Gw2rTES3ZM+/tY8Thy//PqI4nWcFE8tg= | 6 | v106/7c+/S7Gw2rTES3ZM+/tY8Thy//PqI4nWcFE8tg= | 32 | 3 |
| 8 | v106/7c+/S7Gw2rTES3ZM+/tY8Thy//PqI4nWcFE8tg= | 8 | 1 | v106/7c+/S7Gw2rTES3ZM+/tY8Thy//PqI4nWcFE8tg= | 6 | v106/7c+/S7Gw2rTES3ZM+/tY8Thy//PqI4nWcFE8tg= | 32 | 3 |

The first blocklist (index 0) covers the changed part of the file, while the second one (index 1) is unchanged. The BlocklistHash table itself looks like this:

| BlocksetID | Index | Hash |
|---|---|---|
| 3 | 0 | DWypxEXA7TXbM2azclwoTH+Y7rEgA9kbDa4/K3ycNdA= |
| 3 | 1 | v106/7c+/S7Gw2rTES3ZM+/tY8Thy//PqI4nWcFE8tg= |
| 8 | 0 | lQw8vVLiZ8fPhU7yZsjGud8Lczxqp8KDrKPQcqzNfGw= |
| 8 | 1 | v106/7c+/S7Gw2rTES3ZM+/tY8Thy//PqI4nWcFE8tg= |
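To double-check this outside of Duplicati, the rows above can be loaded into an in-memory SQLite database and both queries run against them. This is a sketch with simplified tables that only have the columns visible in the output. The data blocks with IDs 1 and 2, their sizes and their volume are made up, and only the BlocksetEntry rows the query actually touches are included (for blockset 8 that is just the entry at index 32, because its first blocklist is not on volume 3).

```python
import sqlite3

DW = "DWypxEXA7TXbM2azclwoTH+Y7rEgA9kbDa4/K3ycNdA="
V1 = "v106/7c+/S7Gw2rTES3ZM+/tY8Thy//PqI4nWcFE8tg="
LQ = "lQw8vVLiZ8fPhU7yZsjGud8Lczxqp8KDrKPQcqzNfGw="
DATA_A = "LtyYaEfiCbQBbhQabchxbTIHNQ9BaWk4LUMVOb8pLko="
DATA_LAST = "ypeBEsobvcr6wjGzmiPcTaeG7/gUfE5yuYB3ha/uSLs="

db = sqlite3.connect(":memory:")
# Simplified tables: only the columns that appear in the output above
db.executescript("""
CREATE TABLE "BlocklistHash" ("BlocksetID" INTEGER, "Index" INTEGER, "Hash" TEXT);
CREATE TABLE "Block" ("ID" INTEGER PRIMARY KEY, "Hash" TEXT, "Size" INTEGER, "VolumeID" INTEGER);
CREATE TABLE "BlocksetEntry" ("BlocksetID" INTEGER, "Index" INTEGER, "BlockID" INTEGER);
""")
db.executemany('INSERT INTO "BlocklistHash" VALUES (?, ?, ?)',
               [(3, 0, DW), (3, 1, V1), (8, 0, LQ), (8, 1, V1)])
db.executemany('INSERT INTO "Block" VALUES (?, ?, ?, ?)', [
    (4, DW, 1024, 3), (6, V1, 32, 3),           # blocklist blocks on volume 3
    (1, DATA_A, 1024, 1), (2, DATA_LAST, 1, 1),  # hypothetical data blocks
])
# Blockset 3: 32 identical data blocks, then the final block at index 32.
# Blockset 8: only the entry covered by its second blocklist is needed here.
db.executemany('INSERT INTO "BlocksetEntry" VALUES (?, ?, ?)',
               [(3, i, 1) for i in range(32)] + [(3, 32, 2), (8, 32, 2)])

SUBQUERY = '''SELECT "BlocklistHash"."BlocksetID", "Block"."Hash", *
    FROM "BlocklistHash", "Block"
    WHERE "BlocklistHash"."Hash" = "Block"."Hash" AND "Block"."VolumeID" = ?'''

QUERY = f'''SELECT "A"."Hash", "C"."Hash"
    FROM ({SUBQUERY}) A, "BlocksetEntry" B, "Block" C
    WHERE "B"."BlocksetID" = "A"."BlocksetID"
      AND "B"."Index" >= ("A"."Index" * 32)
      AND "B"."Index" < (("A"."Index" + 1) * 32)
      AND "C"."ID" = "B"."BlockID"
    ORDER BY "A"."BlocksetID", "B"."Index"'''

for row in db.execute(SUBQUERY, (3,)):
    print(row)  # three rows; V1 shows up for blockset 3 and for blockset 8

rows = db.execute(QUERY, (3,)).fetchall()
print(len(rows))  # 34: 32 rows for the first blocklist, then 2 for the final one
print(rows[-2:] == [(V1, DATA_LAST), (V1, DATA_LAST)])  # True
```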

Because blocklist 8/1 (blockset 8, index 1) is identical to 3/1, it is the same block (ID 6) on the same volume, so it is not filtered out, even though it belongs to a different file version entirely. The code does not realize that these rows belong to different blocksets (3 vs. 8) and combines both into a single file. Maybe it should check the blockset ID instead of the hash to decide when to emit the next entry? This also only happens with blocklists that are not full, because for full blocklists the maximum file size splits the entries automatically.
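The grouping problem can be modelled in a few lines of Python. This is a simplified, hypothetical model of the behaviour described above, not Duplicati's actual C# code: `group_by_hash` starts a new blocklist only when the blocklist hash changes or 32 hashes have been collected, while `group_by_blockset` groups on (BlocksetID, Index) and writes each distinct blocklist only once. The second variant assumes the query is extended to also return "A"."BlocksetID" and "A"."Index", which the current query does not select.

```python
from typing import Dict, Iterable, List, Tuple

HASHES_PER_BLOCKLIST = 32  # the "* 32" from the query

# (BlocksetID, blocklist Index, blocklist hash, block hash)
Row = Tuple[int, int, str, str]
Blocklist = Tuple[str, List[str]]

def group_by_hash(rows: Iterable[Row]) -> List[Blocklist]:
    """Modelled current behaviour: start a new blocklist only when the
    blocklist hash changes or the current one is full."""
    result: List[Blocklist] = []
    for _, _, bl_hash, block_hash in rows:
        if (not result or result[-1][0] != bl_hash
                or len(result[-1][1]) == HASHES_PER_BLOCKLIST):
            result.append((bl_hash, []))
        result[-1][1].append(block_hash)
    return result

def group_by_blockset(rows: Iterable[Row]) -> List[Blocklist]:
    """Possible fix: group on (BlocksetID, Index) and write each
    distinct blocklist hash only once."""
    groups: Dict[Tuple[int, int], Blocklist] = {}
    for blockset_id, index, bl_hash, block_hash in rows:
        groups.setdefault((blockset_id, index), (bl_hash, []))[1].append(block_hash)
    result: List[Blocklist] = []
    seen = set()
    for bl_hash, hashes in groups.values():  # dicts keep insertion order
        if bl_hash not in seen:
            seen.add(bl_hash)
            result.append((bl_hash, hashes))
    return result

DW = "DWypxEXA7TXbM2azclwoTH+Y7rEgA9kbDa4/K3ycNdA="
V1 = "v106/7c+/S7Gw2rTES3ZM+/tY8Thy//PqI4nWcFE8tg="
A = "LtyYaEfiCbQBbhQabchxbTIHNQ9BaWk4LUMVOb8pLko="
LAST = "ypeBEsobvcr6wjGzmiPcTaeG7/gUfE5yuYB3ha/uSLs="

# The rows from the query output above, plus BlocksetID and Index
rows = [(3, 0, DW, A)] * 32 + [(3, 1, V1, LAST), (8, 1, V1, LAST)]

print([(h[:8], len(x)) for h, x in group_by_hash(rows)])
# [('DWypxEXA', 32), ('v106/7c+', 2)]  <- final blocklist duplicated
print([(h[:8], len(x)) for h, x in group_by_blockset(rows)])
# [('DWypxEXA', 32), ('v106/7c+', 1)]
```

Skipping blocklists whose hash was already written is safe in this model, since identical hashes mean identical content.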

The circumstances for this failure are:

- a file larger than a single blocklist can describe, whose block count is also not an exact multiple of the blocklist capacity (the final blocklist must not be full, otherwise it is just overwritten multiple times)

- the file changes at the beginning, but the part covered by the final blocklist stays the same (however many bytes that is)

- the index file that holds the unchanged final blocklist goes missing

- repair then repeats the blocklist while recreating the index file (multiple times if there are more than two file versions), appends all copies into one entry, and fails on the resulting hash mismatch
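The first condition is plain arithmetic. Assuming the default SHA-256 block hash (32 bytes per hash), a blocklist holds block size / 32 hashes. The full 1024-byte blocklist above (32 hashes of 32 bytes) implies a 1 KiB block size here, which matches the "* 32" in the query. A small sketch to check whether a file has that shape (function names and example sizes are illustrative):

```python
from typing import Tuple

HASH_SIZE = 32  # bytes per block hash, assuming the default SHA-256

def blocklist_layout(file_size: int, block_size: int) -> Tuple[int, int]:
    """Return (number of blocklists, hashes in the final blocklist)."""
    per_list = block_size // HASH_SIZE    # hashes that fit in one blocklist
    blocks = -(-file_size // block_size)  # ceiling division
    lists = -(-blocks // per_list)
    last = blocks - (lists - 1) * per_list
    return lists, last

def has_risky_shape(file_size: int, block_size: int) -> bool:
    """More than one blocklist, and the final one is not full."""
    lists, last = blocklist_layout(file_size, block_size)
    return lists > 1 and last < block_size // HASH_SIZE

print(blocklist_layout(32 * 1024 + 1, 1024))  # (2, 1)
print(has_risky_shape(32 * 1024 + 1, 1024))   # True
print(has_risky_shape(64 * 1024, 1024))       # False: final blocklist is full
print(has_risky_shape(32 * 1024, 1024))       # False: only one blocklist
```

The other conditions (which part of the file changes, which index file goes missing) depend on the backup history and cannot be derived from the size alone.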

TL;DR

The SQL query for GetBlocklists needs to be changed to filter blocklists correctly in this edge case.

The bad news

The broken GetBlocklists function is also used when Compact writes index files (without a consistency check), so compacting might produce incorrect index files that go undetected. I am not sure how much impact that has on existing backups. I tried a recreate with such a broken index and got this error:

Recreated database has missing blocks and 2 broken filelists. Consider using "list-broken-files" and "purge-broken-files" to purge broken data from the remote store and the database.

So in the future, people who get this error could also try deleting the list folder from the index file of the broken volume and see whether that fixes it.
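For anyone who wants to try that, here is a minimal Python sketch that copies an index file while leaving out every entry under `list/`. It assumes the index file has already been decrypted to a plain zip (an encrypted index file has to be decrypted first) and that the blocklists sit in a top-level `list/` folder; the function and file names are hypothetical. Keep a copy of the original file.

```python
import zipfile

def strip_blocklists(src: str, dst: str) -> int:
    """Copy the index-file zip at src to dst without the list/ folder.

    Hypothetical helper; assumes src is an unencrypted zip. Returns the
    number of entries that were left out.
    """
    removed = 0
    with zipfile.ZipFile(src) as zin, zipfile.ZipFile(dst, "w") as zout:
        for info in zin.infolist():
            if info.filename.startswith("list/"):
                removed += 1  # blocklist entry: leave it out
                continue
            # Copy everything else unchanged, reusing each entry's
            # ZipInfo so name, timestamp and compression type are kept.
            zout.writestr(info, zin.read(info))
    return removed

# Example (file names are placeholders):
# removed = strip_blocklists("index-decrypted.zip", "index-fixed.zip")
```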

Edit: If this is deemed severe enough, the hash consistency check could also be added to Recreate, so that future versions can cope with this kind of broken index. It would just need to download one additional block volume.
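The check itself is cheap. A minimal sketch, assuming (as the failing hash check suggests) that a blocklist entry's name is the URL-safe base64 encoding of the SHA-256 hash of its content; the function name is hypothetical and this is not Duplicati's actual implementation:

```python
import base64
import hashlib

HASH_SIZE = 32  # SHA-256 digest length in bytes

def blocklist_is_consistent(entry_name: str, content: bytes) -> bool:
    """Return True if a blocklist's content matches its entry name.

    Assumes the name is the URL-safe base64 encoding of SHA-256(content).
    """
    # A blocklist is a concatenation of whole 32-byte block hashes.
    if not content or len(content) % HASH_SIZE != 0:
        return False
    digest = hashlib.sha256(content).digest()
    return base64.urlsafe_b64encode(digest).decode("ascii") == entry_name

# Original and recreated blocklist content from the hex view at the top
original = bytes.fromhex("CA978112CA1BBDCAFAC231B39A23DC4D"
                         "A786EFF8147C4E72B9807785AFEE48BB")
recreated = original * 2
name = "v106_7c-_S7Gw2rTES3ZM-_tY8Thy__PqI4nWcFE8tg="

print(blocklist_is_consistent(name, original))   # checks the original 32-byte blocklist
print(blocklist_is_consistent(name, recreated))  # False: the duplicated content hashes differently
```

During a recreate, an entry failing this check could be ignored and the blocklist rebuilt from the block volume instead.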

The good news

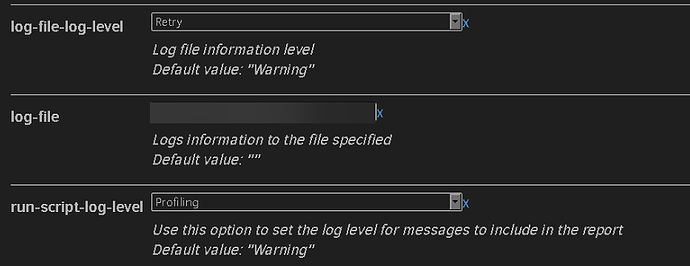

There is probably no damage to the database, so recreating the index with --index-file-policy=Lookup will work as a short-term remedy. Change the option back after the repair succeeds, and maybe make a note of the index file that only has partial data.

Any database recreate will work correctly, because it uses a different way to build the blocklists.