Here are a few thoughts on things one may try when plotting out how they work together.

Right now the SQL leaves the C# to try to figure out when it’s got a full or a new blocklist.

An inability to properly recognize a new in the run-together case is the bug we have here.

SQL has better information, so could pass hints to reduce complicated analysis in the C#.

but then one gets a lot of BlocksetID that one has to wade through to see what’s changed.

One can use the result column to more directly flag when SQL moved to another blocklist:

WHEN “B”.“Index” % 32 = 0 THEN “A”.“BlocksetID” END

and if a NULL is a problem, produce 0, and if that’s somehow a worry, use -1 or something.

Maximum number of block hashes in a blocklist is 32 for the test case. At defaults it’s 3200.

Unfortunately it looks like SQLite does not come out-of-the-box with Base64. This impedes

attempting to use GROUP BY and group_concat to build a blocklist hash in the SQL code.

One could still try building it as a JSON or other list of Base64 strings if that might help any.

Recognizing exact duplicate blocklists is easier when it’s a string and not, say, 3200 strings.

One could even boil the string down to a SHA-256 hash if desired, creating a blocklist hash

out of the ordered list of Base64 block hashes, instead of the 32 byte versions after decode.

I’m still not sure if it’s worth the time and effort to check every blocklist use in BlocksetEntry.

There’s already pretty good sanity checking, although it’s not aimed exactly at this situation.

Even though I’m putting out lots of ideas here, extensive change is likely a riskier approach.

was talking about the simple plan. A bigger change merits more test, maybe even in Canary.

As proven by this bug, real-world usage provides edge cases that we can’t easily just create.

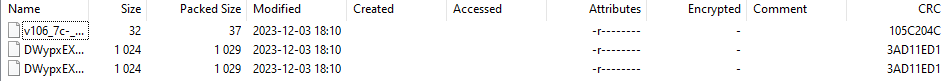

I did try a test case of changing character 32769 to a “b”, and the dindex got a double entry:

I’m using 7-Zip because it shows me the Size better than Windows, and it has a CRC to see

whether the blocklist is (barring CRC collisions that I don’t really worry about) the same one.

It would seem a bit of work (although Duplicati does far worse) to need to collect all of them,

then figure out what the duplicates are. Possibly an ORDER BY could gather them together.

As two more style nits, comma join is harder to read than JOIN, and join conditions in WHERE

is harder to read than using ON for join conditions, and leaving WHERE to be used for filtering.

AND "B"."Index" >= ("A"."Index" * 3200)

AND "B"."Index" < (("A"."Index" + 1) * 3200)

Isn’t this always equivalent (but longer and maybe slower) to:

"B"."Index" / 3200 = "A"."Index"

and integer division goes nicely with proposed integer modulo.

To me, though, the need is to fix a bug without breaking things. Beyond that, the tradeoffs get worse. Although we might have some time to work this particular topic, there’s also this problem happening:

so I’m still hoping for a quick path to a Canary for testing, then to actually get a fix into Beta release.

Ditto for the VACUUM, and for me that works well, whereas PRAGMA secure_delete worked worse.