Are you benchmarking for speed, space usage, error rates, or none/all of the above? Would you be willing to share your results with the group?

I’ve done no benchmarking and had not really planned on doing any, as those sorts of metrics aren’t important to me. I’m more interested in CPU and memory loads, which anecdotally seem much lower with Duplicati than with CrashPlan (at least for me).

However, if you or somebody else wanted to put together some test cases, that would be a good addition to the original post wiki. Even better would be if those test cases could be applied to other apps posted in other Comparison Topics.

Sure, happy to post results.

A bit about the backup set: it's some frequently changed documents. I'm testing speed first, before moving on to a more slowly updating dataset. My test set is approx. 10 MB of files; one file is approx. 5 MB and changes daily, while the others change around weekly.

I am testing speed on various backends (basically anything I can get my hands on for free), with the intention of paying for the best one sometime in the future.

For speed, I keep records (see the file link below; funnily enough, it's hosted on B2, hahaha!), but I keep an eye on storage as well.

Observations so far:

-

The dedup is a bit disappointing - perhaps this is due to the 5mb file being encrypted excel - so the daily changes don’t seem to dedup at all and can see the backup growing steadily.

-

Box as backend has errors all the time - I put a post about this but no big resolution. The errors persist after deleting the database and even after 1st backup on a freshly made job. I just moved over to Box-over-web-dav which seems to have no errors though.

-

The giants (google / MS onedrive) do best on speed. pCloud is pretty good as well. Free services are pretty bad. Amazingly Box, aside from errors, is pretty bad speed wise - so dropped from the testing now.

-

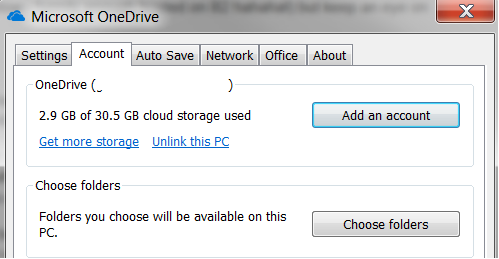

Haven’t really tried the native sync apps except Onedrive. One thing I noticed was there is no selective sync for their app - so end up getting a (syncing) copy of the backup on my hard disk — so this is probably a dealbreaker later if I move onto backup the large data set.

Anyway, the speed results are in the attached file. I have the mean and also a 95th-percentile worst-case time to complete a backup (some odd outliers excluded).
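For anyone who wants to reproduce this kind of summary, here is a minimal Python sketch that computes the mean and the 95th percentile of a list of backup durations. The `max_seconds` cutoff is a hypothetical stand-in for outlier exclusion; the post does not say how the outliers were chosen.

```python
import statistics

def summarize_durations(seconds, max_seconds=None):
    """Return (mean, 95th percentile) of backup durations in seconds.

    max_seconds is a hypothetical outlier cutoff: runs longer than it are dropped.
    """
    kept = [s for s in seconds if max_seconds is None or s <= max_seconds]
    mean = statistics.mean(kept)
    # quantiles(n=20) returns 19 cut points; the last one is the 95th percentile.
    # method="inclusive" keeps the estimate between the observed min and max.
    p95 = statistics.quantiles(kept, n=20, method="inclusive")[-1]
    return mean, p95

mean, p95 = summarize_durations([10, 20, 30, 40, 50, 500], max_seconds=100)
print(f"mean {mean:.1f}s, p95 {p95:.1f}s")  # mean 30.0s, p95 48.0s
```

The inclusive method interpolates between observed values, so with only a handful of runs the "worst case" figure never exceeds the slowest run actually kept.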

Have fun

https://f000.backblazeb2.com/file/backblaze-b2-public/Duplicati_Stats.xls

Onedrive does have selective sync; I just checked the client on Win7 and Win10:

Also, it would be nicer and safer if you hosted and shared your spreadsheet as a Google Sheet.

Ouch…somebody used the word “percentile”…brain…losing…focus… (I’m terrible with stats.)

My thoughts on your numbers would be:

- I always assumed de-dupe was for not saving duplicate blocks between multiple files as well as between versions. That being said, working on encrypted source files will severely constrain de-dupe-ability, because even a single-byte change is likely to produce a completely different encrypted file. So you're probably backing up the ENTIRE file after every change, no matter how minor.

- Good to know about the relative stability of Box WebDAV vs API. I’m not fully sure I understand your spreadsheet, but did you get the Box API errors with all the tested tools (it’s Box’s fault) or just with Duplicati (it’s “our” fault)?

- The inconsistency in cloud provider performance has so many variables (the user’s bandwidth, other local users on the trunk, distance from the cloud provider’s servers, potential cloud provider maintenance, etc.) that I think it’s almost not worth testing. Unless you’re specifically testing cloud provider performance, it would probably produce more comparable numbers to test the tools (Duplicati, Duplicacy, etc.) against locally attached or network storage, so the transfer-medium side of performance is minimized.

- I know nothing about Onedrive sync: does it provide versioning and the ability to keep locally deleted files, or is it really just a “files on Onedrive match files on local drive” tool?
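To illustrate the point above about encrypted source files, here is a minimal Python sketch. It splits data into fixed-size blocks and counts how many blocks of a new version already exist in the old one. `toy_chained_transform` is a hypothetical stand-in, not real encryption: it only mimics how chained cipher modes (such as CBC) make each output chunk depend on everything before it, so a change near the start alters every block after it. Real file encryption can be even less dedup-friendly, since a fresh salt or IV on every save changes the whole output.

```python
import hashlib

BLOCK_SIZE = 4096  # arbitrary block size for this sketch

def block_hashes(data, size=BLOCK_SIZE):
    """Hash each fixed-size block of data."""
    return [hashlib.sha256(data[i:i + size]).digest() for i in range(0, len(data), size)]

def reusable_blocks(old, new, size=BLOCK_SIZE):
    """Count blocks of `new` whose hash already exists among the blocks of `old`."""
    known = set(block_hashes(old, size))
    return sum(1 for h in block_hashes(new, size) if h in known)

def toy_chained_transform(data, chunk=16):
    """NOT real encryption: each output chunk depends on all input before it,
    like a chained cipher mode, so one early change alters everything after it."""
    out = bytearray()
    state = bytes(32)
    for i in range(0, len(data), chunk):
        piece = data[i:i + chunk]
        state = hashlib.sha256(state + piece).digest()
        out += state[:len(piece)]
    return bytes(out)

# Deterministic 64 KiB test "document" made of 16 distinct blocks.
original = b"".join(hashlib.sha256(i.to_bytes(4, "big")).digest() for i in range(2048))
edited = bytes([original[0] ^ 1]) + original[1:]  # flip one bit of the first byte

print(reusable_blocks(original, edited))  # 15: only the first plaintext block changed
print(reusable_blocks(toy_chained_transform(original),
                      toy_chained_transform(edited)))  # 0: every block changed
```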

Thanks for these initial testing numbers!

Hi, I wanted to add a couple of words on deduplication as well. (I have worked for 4 different enterprise companies that sell deduplication technology, so I have some experience.) The reality is that even for the largest enterprises, deduplication results vary widely depending on numerous factors, including data types and backup strategies.

The biggest challenge for most deduplication algorithms is deciding exactly how to deduplicate data to maximize data reduction. Companies spend millions of dollars optimizing this, so we have to set our expectations appropriately.

All of the above said, the biggest benefit we should focus on is the data reduction from performing repetitive backups of the same data. I would also add that not all algorithms are the same. For example, I am also testing Duplicacy, and while that product is faster, it really performs single-instance file storage rather than deduplication. These two things are different. (There is a -hash option that seems to perform more traditional deduplication, but the details on that one are a bit limited.)
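A toy Python comparison of the two approaches, counting how many new bytes each would store when one byte near the end of a 64 KiB file changes. Both functions are hypothetical illustrations of the general techniques, not models of how Duplicacy or Duplicati actually store data, and the 4096-byte block size is arbitrary.

```python
import hashlib

BLOCK_SIZE = 4096  # arbitrary block size for this sketch

def stored_bytes_file_level(store, data):
    """Single-instance storage: a file is stored whole unless an identical file exists."""
    key = hashlib.sha256(data).digest()
    if key in store:
        return 0
    store.add(key)
    return len(data)

def stored_bytes_block_level(store, data, size=BLOCK_SIZE):
    """Block-level dedup: only blocks not seen before are stored."""
    added = 0
    for i in range(0, len(data), size):
        block = data[i:i + size]
        key = hashlib.sha256(block).digest()
        if key not in store:
            store.add(key)
            added += len(block)
    return added

document = b"".join(hashlib.sha256(i.to_bytes(4, "big")).digest() for i in range(2048))
edited = document[:-1] + bytes([document[-1] ^ 1])  # change the last byte

files, blocks = set(), set()
print(stored_bytes_file_level(files, document), stored_bytes_file_level(files, edited))     # 65536 65536
print(stored_bytes_block_level(blocks, document), stored_bytes_block_level(blocks, edited))  # 65536 4096
```

Both approaches store nothing for an exact duplicate; the difference shows up only when a file is modified.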

@dgcom, thanks for the info about Onedrive. I missed that. It certainly helps Onedrive’s case that the feature is there.

On the dedup: my understanding is that there are encryption methods that are more amenable to dedup routines (basically, split the file into blocks and encrypt block-wise), so there is hope that changes at the end of the file do not cause an avalanche effect across the whole file. That was also part of my testing: to see how Duplicati reacts.
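By contrast, a scheme that transforms each segment independently leaves unchanged segments identical between saves. In this hypothetical sketch, `toy_segment_transform` XORs each 4096-byte segment with a keystream derived from a key and the segment index (via `hashlib.shake_256`). It is NOT secure encryption (reusing a keystream like this leaks information); it only shows why segment-wise schemes can keep dedup working, provided segment boundaries line up with the dedup blocks and the key does not change between saves.

```python
import hashlib

SEGMENT = 4096  # hypothetical segment size, chosen to match the dedup block size

def toy_segment_transform(data, key=b"demo-key", size=SEGMENT):
    """NOT real encryption: XOR each segment with a keystream that depends only on
    the key and the segment index, so every segment is transformed independently.
    Applying it twice with the same key returns the original data."""
    out = bytearray()
    for index, start in enumerate(range(0, len(data), size)):
        piece = data[start:start + size]
        stream = hashlib.shake_256(key + index.to_bytes(8, "big")).digest(len(piece))
        out += bytes(a ^ b for a, b in zip(piece, stream))
    return bytes(out)

def shared_blocks(old, new, size=SEGMENT):
    """Count blocks of `new` that already appear among the blocks of `old`."""
    known = {old[i:i + size] for i in range(0, len(old), size)}
    return sum(1 for i in range(0, len(new), size) if new[i:i + size] in known)

document = b"".join(hashlib.sha256(i.to_bytes(4, "big")).digest() for i in range(2048))
edited = document[:-1] + bytes([document[-1] ^ 1])  # change near the end of the file

print(shared_blocks(toy_segment_transform(document), toy_segment_transform(edited)))  # 15
```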

I saw Duplicacy mentioned. For interest, in my linked Excel file I also put some rough notes on the results of other testing I did, on the other tab (not as well structured/formatted, since I didn’t expect this to go outside… … …). Anyway, I was testing other software packages (Duplicacy, Arq, some other paid services).

One thing I noticed that Duplicati does well and that Duplicacy (and others?) seems to fail on: if I make a perfect copy of some files, I would expect little change in the backup set (since the hash should pick up that it is a copy and just refer to existing blocks). Duplicati does the right thing (I copy the entire data set and the backup size barely moves), but Duplicacy seems to keep growing (i.e. it keeps a copy of each file, even duplicates).
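The behavior described here, where a copied file adds almost nothing because its blocks are already stored, can be sketched with a toy content-addressed store in Python. The class and the file paths are hypothetical; each file is recorded as a list of block hashes, so a copy just points at blocks that already exist.

```python
import hashlib

class BlockStore:
    """Toy content-addressed store: keeps each distinct block once and
    records each file as an ordered list of block hashes."""

    def __init__(self, block_size=4096):
        self.block_size = block_size
        self.blocks = {}  # block hash -> block bytes
        self.files = {}   # path -> list of block hashes

    def add_file(self, path, data):
        """Store a file and return how many bytes of new block data were added."""
        added = 0
        hashes = []
        for i in range(0, len(data), self.block_size):
            block = data[i:i + self.block_size]
            h = hashlib.sha256(block).hexdigest()
            if h not in self.blocks:
                self.blocks[h] = block
                added += len(block)
            hashes.append(h)
        self.files[path] = hashes
        return added

    def restore(self, path):
        """Rebuild a file by concatenating its referenced blocks in order."""
        return b"".join(self.blocks[h] for h in self.files[path])

store = BlockStore()
data = b"".join(hashlib.sha256(i.to_bytes(4, "big")).digest() for i in range(2048))
print(store.add_file("docs/report.xlsx", data))       # 65536 new bytes
print(store.add_file("docs-copy/report.xlsx", data))  # 0: every block already stored
```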

Right now I am still testing Arq (but it seems behind Duplicati), and I’m happy to do some testing/benchmarking if anyone has ideas on things to evaluate.

Hint: If you refer to some other post on this forum, it would be great if you could provide a link to that post.

The link will automatically be visible from both sides (i.e. there will also be a link back from the post you’re linking to). Those links will make it much easier for people to navigate the forum and find relevant information.

Haha, noted, tophee, but the post I was referring to was the one immediately before mine, so I don’t think I need to link in this case.

To refer to a specific part of the previous post (or any post, for that matter), you can select the bit of text that you want to refer to and then press the “quote” button that appears.

With Duplicati 1.3.x I used the rdiff algorithm, which is really great at finding a near-optimal “diff” between two files. Unfortunately, it suffers from collisions with big files (say 100 MB) due to the small 16-bit rolling hash. It could be fixed with a larger rolling hash, but that would make it incompatible with other rdiff-based tools.
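For readers unfamiliar with rolling hashes, here is a minimal Python sketch of an rsync-style weak checksum built from two 16-bit sums, following the scheme in the rsync technical report. Whether it matches what librsync/rdiff computes byte for byte is an assumption. The useful property is that sliding the window by one byte costs O(1); the catch is that the checksum space is small, so in a large file many window positions can collide, and each candidate match has to be confirmed with a stronger hash.

```python
MOD = 1 << 16  # each half of the weak checksum is only 16 bits wide

def weak_checksum(window):
    """rsync-style weak checksum of a byte window, as two 16-bit sums (a, b)."""
    n = len(window)
    a = sum(window) % MOD
    b = sum((n - i) * x for i, x in enumerate(window)) % MOD
    return a, b

def roll(a, b, out_byte, in_byte, n):
    """Slide an n-byte window one position in O(1): drop out_byte, append in_byte."""
    a = (a - out_byte + in_byte) % MOD
    b = (b - n * out_byte + a) % MOD
    return a, b

data = bytes((i * 37 + 11) % 256 for i in range(1000))
n = 64
a, b = weak_checksum(data[:n])
for k in range(1, len(data) - n + 1):
    a, b = roll(a, b, data[k - 1], data[k + n - 1], n)
    assert (a, b) == weak_checksum(data[k:k + n])  # rolling result matches a full recompute
```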

But I then looked at what really happens to files and file formats. The files people said they wanted to back up were mostly: source code, Word documents, spreadsheets, music, pictures, video, and executables.

If you look at the file formats, all but source code and executables are actually compressed (mp3, jpeg, mp4, docx, xlsx). Size-wise, the multimedia files are vastly greater than any source code repo I have seen (maybe excluding the Linux kernel stuff).

For all the media files, it is rare that files are rewritten. And if a media file is rewritten, it is usually re-encoded, making it highly unlikely that you will find matching blocks afterwards. (The exception is metadata edits, like MP3 tag or EXIF editing.)

For other large files, like virtual machines or database files, rewriting the files entirely usually takes many minutes in raw disk IO, so they tend to do appends or in-place updates.

With this I decided on using a block-based approach, where each block is fixed size, as the complexity for handling dynamic-length blocks turned out to be quite high when combined with a SQL based storage model.

The fixed-length approach also means that it is no longer possible to detect shifts in data, where 1 byte is inserted or deleted. However, I have only seen this make sense for pure text files (source code, css, html) and these tend to only be a small percentage of the actual files.
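The shift problem can be seen in a few lines of Python: with fixed-size blocks, overwriting a byte in place changes one block, while inserting a single byte at the front moves every block boundary, so no block matches any more. The block size and test data are arbitrary choices for this sketch.

```python
import hashlib

BLOCK_SIZE = 4096  # arbitrary block size for this sketch

def block_hashes(data, size=BLOCK_SIZE):
    """Hash each fixed-size block of data."""
    return [hashlib.sha256(data[i:i + size]).digest() for i in range(0, len(data), size)]

def reusable_blocks(old, new, size=BLOCK_SIZE):
    """Count blocks of `new` whose hash already exists among the blocks of `old`."""
    known = set(block_hashes(old, size))
    return sum(1 for h in block_hashes(new, size) if h in known)

original = b"".join(hashlib.sha256(i.to_bytes(4, "big")).digest() for i in range(2048))
overwritten = bytes([original[0] ^ 1]) + original[1:]  # in-place edit, same length
inserted = b"!" + original                              # one byte inserted at the front

print(reusable_blocks(original, overwritten))  # 15: only the first block changed
print(reusable_blocks(original, inserted))     # 0: every block boundary shifted by one byte
```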

For word documents, the docx format is actually a zip file, so contents are compressed, destroying any option to detect bit shifts.

If someone finds a use case for variable-length blocks, it is possible to add this to the storage scheme quite easily, but the SQL queries that compute files are much more complicated, because the offsets will need to be computed in a “rolling” manner.
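To illustrate why variable-length blocks complicate the queries, here is a hedged Python/SQLite sketch using a made-up `file_block` table (not Duplicati's actual schema). With fixed-size blocks, a block's offset is just `idx * block_size`; with variable sizes, each offset is a running sum over all earlier blocks, which in SQL needs a window function (available in SQLite 3.25 and later).

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Hypothetical table: one row per block of a file, in order, with its length in bytes.
conn.execute("CREATE TABLE file_block (file_id INTEGER, idx INTEGER, size INTEGER)")
conn.executemany(
    "INSERT INTO file_block VALUES (?, ?, ?)",
    [(1, 0, 1500), (1, 1, 700), (1, 2, 2100), (1, 3, 40)],
)

# With fixed-size blocks the offset would simply be idx * block_size, computable per row.
# With variable sizes, each offset is the sum of all earlier block sizes (a running sum).
rows = conn.execute(
    """
    SELECT idx,
           SUM(size) OVER (PARTITION BY file_id ORDER BY idx) - size AS start_offset
    FROM file_block
    WHERE file_id = 1
    ORDER BY idx
    """
).fetchall()
print(rows)  # [(0, 0), (1, 1500), (2, 2200), (3, 4300)]
```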

AFAIK, Duplicacy and the other similar tools use this algorithm:

https://restic.github.io/blog/2015-09-12/restic-foundation1-cdc
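For comparison with the fixed-block approach, here is a minimal Python sketch of content-defined chunking. The linked restic post uses Rabin fingerprints; this sketch swaps in a simpler "gear" rolling hash (the idea used by FastCDC), and the gear table and mask are hypothetical choices. Because a cut point depends only on the last 32 bytes, inserting a byte near the start changes only the first chunk or so, and the boundaries after it line up again. Real implementations also enforce minimum and maximum chunk sizes, which are omitted here.

```python
import hashlib

# Hypothetical 256-entry gear table of pseudo-random 32-bit values.
GEAR = [int.from_bytes(hashlib.sha256(bytes([i])).digest()[:4], "big") for i in range(256)]
MASK = 0xFFC00000  # cut when the top 10 bits are zero: roughly one cut per 1024 bytes

def cdc_chunks(data):
    """Split data wherever the rolling gear hash of the recent bytes matches MASK."""
    chunks, start, h = [], 0, 0
    for i, byte in enumerate(data):
        # Shifting left by one per byte means bytes older than 32 positions drop out.
        h = ((h << 1) + GEAR[byte]) & 0xFFFFFFFF
        if h & MASK == 0:
            chunks.append(data[start:i + 1])
            start = i + 1
    if start < len(data):
        chunks.append(data[start:])
    return chunks

data = b"".join(hashlib.sha256(i.to_bytes(4, "big")).digest() for i in range(2048))
old = cdc_chunks(data)
new = cdc_chunks(b"!" + data)  # insert one byte at the front
new_set = set(new)
print(len(old), sum(1 for c in old if c in new_set))  # nearly all old chunks reappear
```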

@jl_678 Have you seen a real-life situation where you get big savings on other approaches?

No, duplicacy prides itself on having developed its own algorithm, I believe. See duplicacy/README.md at master · gilbertchen/duplicacy · GitHub.

There is a comparison between various algorithms at duplicacy/README.md at master · gilbertchen/duplicacy · GitHub

Do I understand correctly that duplicati is using none of the algorithms listed there (duplicity, bup, Obnam, Attic, restic, or Duplicacy)?

I find that comparison provided by the duplicacy developer highly interesting and it would be great to see what duplicati would add to that picture…

Actually, why don’t I just copy that table and add duplicati. (Don’t have time to fill it in right now though. Feel free to do it @kenkendk.)

| Feature/Tool | duplicity | bup | Obnam | Attic | restic | Duplicacy | duplicati |
|---|---|---|---|---|---|---|---|
| Incremental Backup* | Yes | Yes | Yes | Yes | Yes | Yes | Yes |
| Full Snapshot | No | Yes | Yes | Yes | Yes | Yes | Yes |
| Deduplication | Weak | Yes | Weak | Yes | Yes | Yes | Yes |
| Encryption | Yes | Yes | Yes | Yes | Yes | Yes | Yes |
| Deletion | No | No | Yes | Yes | No | Yes | Yes |
| Concurrent Access | No | No | Exclusive locking | Not recommended | Exclusive locking | Lock-free | No |
| Cloud Support | Extensive | No | No | No | S3, B2, OpenStack | S3, GCS, Azure, Dropbox, Backblaze B2, Google Drive, OneDrive, and Hubic | Extensive |
| Snapshot Migration | No | No | No | No | No | Yes | No |

* Incremental backup is defined here as: only back up what has been changed (so it includes what ken prefers to call

I don’t think Attic, restic, Duplicati and Duplicacy do real incremental backups.

I prefer to call this type “differential backup” because “incremental” implies dependency on the previous backup.

duplicity and bup do real incremental backups; not sure about Obnam.

Yeah, I read the docs after posting

I have edited my post.

Isn’t it better to call every backup Duplicati makes a Full backup?

Incremental backups rely on the previous incremental backup, and so on back to the most recent Full backup.

Differential backups rely only on the last Full backup, at the cost that all changes since the last Full backup are re-uploaded, thus use more storage capacity.

Technically, I guess at best all available backups could be called Full backups, because every snapshot has direct access to all of its data without relying on any other backup.

I guess it falls between the two. You are only storing the difference, but you do not have a dependency. “Deconstructed backup” maybe?
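The dependency difference between the two classic schemes can be sketched in Python. The `kinds` labels are hypothetical. A block-based store like the one discussed in this thread fits neither pattern: as noted above, each version can reach all of its data directly without relying on any other backup.

```python
def versions_needed(kinds, target):
    """Return indices of the backups that must be read to restore backup `target`.

    `kinds` lists each backup in order as "full", "incremental" or "differential".
    Raises ValueError if a non-full backup has no full backup before it.
    """
    if kinds[target] == "full":
        return [target]
    last_full = max(i for i in range(target) if kinds[i] == "full")
    if kinds[target] == "differential":
        # Only the last full backup plus this one.
        return [last_full, target]
    # Incremental: the whole chain from the last full backup up to this one.
    return list(range(last_full, target + 1))

print(versions_needed(["full", "incremental", "incremental", "incremental"], 3))  # [0, 1, 2, 3]
print(versions_needed(["full", "differential", "differential"], 2))              # [0, 2]
```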

You raise some great points, and I really appreciate your thoughtful approach. In enterprise data protection solutions, dynamic block sizes are common. This is because data is typically laid down as a stream, so finding that one-byte change can make a huge difference, since a one-byte offset could potentially impact current and future streams. The data is laid down this way due to the history of backup applications writing to streaming media, aka tape.

The benefit of your product is that you are focused more on a file-based strategy, which makes more sense, and I completely agree about the stagnant nature of data. Using a fixed-block strategy on a file-based backup makes sense, especially since a 1-byte offset will really only affect one file, which is not a huge penalty in the grand scheme of things.

The thing that I personally am trying to get my head around is the performance difference between the different algorithms (specifically Duplicacy and Duplicati). Naturally, the products are different and have different defaults, but given that I am using a low-end single-board computer as my “backup server” and that cloud storage is relatively cheap, I would be willing to trade off storage efficiency for speed.

My personal sense is that the default parameters for duplicacy are more focused towards speed over efficiency whereas duplicati is the opposite. One example is that duplicacy leaves encryption off by default which is a problem, IMO.

Anyway, I plan to continue tests and would welcome any suggested Duplicati parameters.

Thank you!

Interesting thought: have you tried a performance comparison with Duplicati configured as similarly to Duplicacy as possible?

I am trying to do some of that now. I am not going to mess with the deduplication settings; they are what they are. However, Duplicacy had an unfair disadvantage with encryption turned off and so I am running a backup as we speak on the same data set with encryption enabled.

The other setting I am thinking about is threading. My SBC is a quad-core one, and I am debating enabling the multi-threading option on Duplicacy to see what it does. Does Duplicati support multi-threading? (I think the Duplicacy multi-threading parameter refers to the number of simultaneous uploads.)
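To show what capping the number of simultaneous uploads means in practice, here is a hedged Python sketch that limits how many uploads run at once with a thread pool. `upload` is a hypothetical stand-in that just sleeps; it does not call any real backend, and nothing here reflects how Duplicacy or Duplicati implement their threading options.

```python
import threading
import time
from concurrent.futures import ThreadPoolExecutor

_lock = threading.Lock()
_in_flight = 0
peak_in_flight = 0  # highest number of uploads observed running at the same time

def upload(volume):
    """Hypothetical stand-in for sending one backup volume; it only sleeps."""
    global _in_flight, peak_in_flight
    with _lock:
        _in_flight += 1
        peak_in_flight = max(peak_in_flight, _in_flight)
    time.sleep(0.05)  # pretend network transfer
    with _lock:
        _in_flight -= 1
    return volume

def upload_all(volumes, simultaneous):
    """Upload every volume, with at most `simultaneous` transfers in flight."""
    with ThreadPoolExecutor(max_workers=simultaneous) as pool:
        return list(pool.map(upload, volumes))

volumes = [f"vol-{n}.zip.aes" for n in range(8)]  # made-up volume names
start = time.perf_counter()
upload_all(volumes, simultaneous=4)
print(f"peak in flight: {peak_in_flight}, took {time.perf_counter() - start:.2f}s")
```

`pool.map` returns results in input order, so the list of uploaded volumes comes back in the same order it was submitted even though the transfers overlap.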

To be clear, in case anyone wonders, I am backing up about 2.5 GB of file data residing on a NAS over a GigE LAN. These are typical office docs, and my backup target is B2. The source server is a 2 GB Pine64 connected to the NAS via a GigE switch.