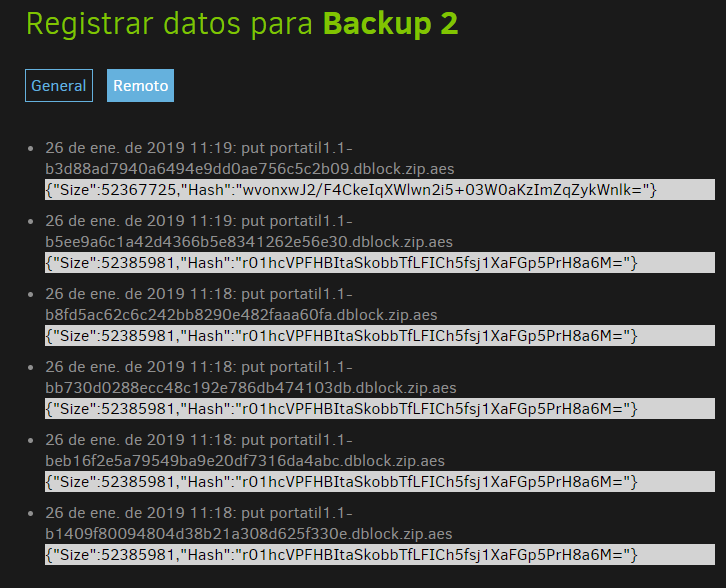

Could someone explain to me the meaning of this message?

Size is not an error in itself. However, seeing the same Size (the size of the upload done by the put operation) and the same Hash (a computed checksum used as an additional check on file integrity) five times in a row is worrying. It suggests that the upload kept failing and was retried up to the default --number-of-retries, with a new file name for each try.
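To make the "same Size and Hash several times in a row" pattern concrete, here is a minimal Python sketch that scans a list of put records for such runs. The record layout, names, sizes, and hashes are made up for illustration; this is not Duplicati's log format or code, only a way to picture what a retry sequence looks like next to a normal upload.

```python
from itertools import groupby

# Hypothetical put records; every value here is invented for illustration.
puts = [
    {"name": "dblock-try-1", "size": 52428800, "hash": "hash-A"},
    {"name": "dblock-try-2", "size": 52428800, "hash": "hash-A"},
    {"name": "dblock-try-3", "size": 52428800, "hash": "hash-A"},
    {"name": "dblock-try-4", "size": 52428800, "hash": "hash-A"},
    {"name": "dblock-try-5", "size": 52428800, "hash": "hash-A"},
    {"name": "dindex-1", "size": 20480, "hash": "hash-B"},
]


def repeated_uploads(records, threshold=2):
    """Return (size, hash, count) for each run of consecutive records
    that share the same size and hash at least `threshold` times."""
    runs = []
    for key, group in groupby(records, key=lambda r: (r["size"], r["hash"])):
        count = sum(1 for _ in group)
        if count >= threshold:
            runs.append((key[0], key[1], count))
    return runs


print(repeated_uploads(puts))
```

A run of five identical size and hash pairs under different names is what repeated tries of one file would look like, whereas distinct files would almost never share both values.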

What storage type is this, did it work before, and does it offer any logs that would give a view from its end?

Duplicati is not good at pointing you to the exact log, but can you also look at Job → Show log → General and About → Show log → Stored for anything at the right time? If nothing shows up, you might need to do a run while watching About → Show log → Live → Retry, or (if nothing else reveals what went wrong) set up an actual log file.

I do not know why, but changing the size of the blocks solved the failure. I did not have this problem before.

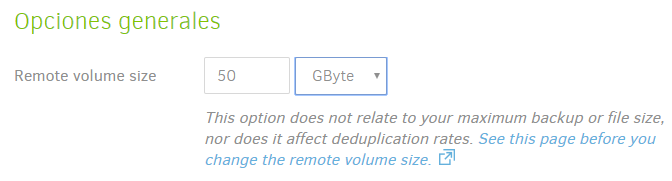

I can’t understand what you said, or how the picture fits into the text. However, if the picture is the current setting, it’s an increase of 1024 times from the default, whereas your original picture shows the default 50 MB.
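The factor of 1024 can be checked with a couple of lines of Python. This assumes the picture shows 50 GB (as mentioned later in the thread) and that the units are binary, so 1 GB is 1024 MB.

```python
MB = 1024 ** 2  # bytes in one megabyte (binary units assumed)
GB = 1024 ** 3  # bytes in one gigabyte (binary units assumed)

default_size = 50 * MB     # the default remote volume size
configured_size = 50 * GB  # the size assumed to be shown in the picture

ratio = configured_size // default_size
print(ratio)
```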

Are you saying the setting in the picture (wrong as it seems) avoids the problem?

Did you follow the “See this page” link in the picture about how to choose sizes?

> Are you saying the setting in the picture (wrong as it seems) avoids the problem?

The answer is yes: changing the size of the blocks avoids the problem.

It should create blocks of 50 MB, but for some reason, when it creates block 1, then 2, then 3, and so on, it generates a size error while creating the blocks.

What I want to say is that the backup may contain a file that cannot be divided into blocks, and that this generates the error in size and hash.

(by the way, you can highlight text which brings up a quote option which you can click to get like the above)

If the file can be read (which isn’t always the case, for example if it is locked), then it can be divided into blocks. Additionally, the size you changed isn’t the --blocksize that controls dividing into blocks. It’s the --dblock-size, which controls how many of the --blocksize (or smaller) blocks are put into a dblock file for the destination.

This is explained in the “See this page” link seen in your screenshot, and “Choosing sizes in Duplicati” is similar. “How the backup process works” explains how file content is split, deduplicated, and repackaged. The 50 GB is the package size, and packages that large are hard to deal with, especially because many of them might need to be downloaded to restore even one source file, which is likely to take a very long time.
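As a rough picture of the difference between the two sizes, here is a toy Python sketch of splitting content into fixed-size blocks, skipping blocks already seen, and packing the unique ones into volumes. It is not Duplicati's implementation: the function names, the tiny sizes, and the use of SHA-256 as the block hash are assumptions for illustration, and real dblock files are compressed archives rather than plain lists.

```python
import hashlib


def split_into_blocks(data: bytes, blocksize: int):
    """Cut data into pieces of at most `blocksize` bytes (the --blocksize role)."""
    return [data[i:i + blocksize] for i in range(0, len(data), blocksize)]


def pack_blocks(blocks, volume_size: int):
    """Deduplicate blocks by hash and pack unique ones into volumes of at
    most `volume_size` bytes (the --dblock-size role)."""
    seen = set()
    volumes = [[]]
    used = 0
    for block in blocks:
        digest = hashlib.sha256(block).hexdigest()
        if digest in seen:
            continue  # duplicate content is stored only once
        seen.add(digest)
        if used + len(block) > volume_size and volumes[-1]:
            volumes.append([])  # current volume is full, start a new one
            used = 0
        volumes[-1].append(digest)
        used += len(block)
    return volumes


data = b"A" * 10 + b"B" * 10 + b"A" * 10 + b"C" * 5
blocks = split_into_blocks(data, blocksize=10)
volumes = pack_blocks(blocks, volume_size=20)
print(len(blocks), [len(v) for v in volumes])
```

In this sketch, changing `volume_size` only changes how many blocks land in each volume. It has no effect on whether or how a file is split, which is the point being made about --dblock-size versus --blocksize.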

I’m not at all sure it’s doing what you say. Please look at my first words here about it looking like a retry of the same block five times. The chance of blocks 2 and 3 having the same size and hash as 1 is too small. You can also look at About → Show log → Remote for your successful backup to see how it differs from your initial screenshot. You’ll probably find a dindex file going up after the dblock, not repeated dblock tries.

If this convinces you, then you probably need to either look at About → Show log → Live → Retry with a 50 MB (or whatever fails) dblock size to look into upload problems, or maybe even set up a log file for that. Assuming this is an upload retry situation, the initial screenshot doesn’t show the cause, so more logs are needed.

Generally I would expect things to get worse trying to do huge transfers, but you are seeing the opposite… What storage type is the destination? I can’t think of one that would reject small files but take huge ones…

Dear,

I have the same issue but I HAVE SIZE SETTINGS ON DEFAULT.

Please, see *.dblock.zip size mismatch · Issue #3626 · duplicati/duplicati · GitHub for more info.

I would appreciate it if others could support / vote for this bug as well.

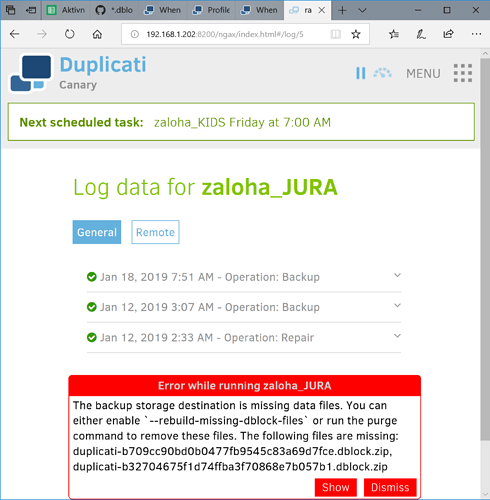

Jura

Could you please detail the similarities? To me, they look different. They both involve size, but differently. Please feel free to use the debugging here starting with the screenshot of Job → Show log → Remote. Possibly if there are two cases of the same issue, having multiple people pursuing it can locate it faster. Regardless, it would be good to see another record of file length, because you have size disagreement.

Hello,

Today my backup job failed to finish again. Here is the full log as sent by email:

Failed: Found 3 files that are missing from the remote storage, please run repair

Details: Duplicati.Library.Interface.UserInformationException: Found 3 files that are missing from the remote storage, please run repair

at Duplicati.Library.Main.Operation.FilelistProcessor.VerifyRemoteList (Duplicati.Library.Main.BackendManager backend, Duplicati.Library.Main.Options options, Duplicati.Library.Main.Database.LocalDatabase database, Duplicati.Library.Main.IBackendWriter log, System.String protectedfile) [0x00203] in <db65b3c26950472bbb17359c9b89f17a>:0

at Duplicati.Library.Main.Operation.BackupHandler.PreBackupVerify (Duplicati.Library.Main.BackendManager backend, System.String protectedfile) [0x0005f] in <db65b3c26950472bbb17359c9b89f17a>:0

— End of stack trace from previous location where exception was thrown —

at System.Runtime.ExceptionServices.ExceptionDispatchInfo.Throw () [0x0000c] in <8f2c484307284b51944a1a13a14c0266>:0

at CoCoL.ChannelExtensions.WaitForTaskOrThrow (System.Threading.Tasks.Task task) [0x00050] in <6973ce2780de4b28aaa2c5ffc59993b1>:0

at Duplicati.Library.Main.Operation.BackupHandler.Run (System.String sources, Duplicati.Library.Utility.IFilter filter) [0x00008] in <db65b3c26950472bbb17359c9b89f17a>:0

at Duplicati.Library.Main.Controller+<>c__DisplayClass13_0.<Backup>b__0 (Duplicati.Library.Main.BackupResults result) [0x00035] in <db65b3c26950472bbb17359c9b89f17a>:0

at Duplicati.Library.Main.Controller.RunAction[T] (T result, System.String& paths, Duplicati.Library.Utility.IFilter& filter, System.Action`1[T] method) [0x0011d] in <db65b3c26950472bbb17359c9b89f17a>:0

Log data:

2019-01-29 21:47:38 +01 - [Warning-Duplicati.Library.Main.Operation.FilelistProcessor-MissingFile]: Missing file: duplicati-20190121T061505Z.dlist.zip

2019-01-29 21:47:38 +01 - [Warning-Duplicati.Library.Main.Operation.FilelistProcessor-MissingFile]: Missing file: duplicati-b1ff4717fa9b243da87a7df3309394a0a.dblock.zip

2019-01-29 21:47:38 +01 - [Warning-Duplicati.Library.Main.Operation.FilelistProcessor-MissingFile]: Missing file: duplicati-i0d7c7b9b8c144cbaaf581d140df8f7a8.dindex.zip

2019-01-29 21:47:39 +01 - [Error-Duplicati.Library.Main.Operation.FilelistProcessor-MissingRemoteFiles]: Found 3 files that are missing from the remote storage, please run repair

2019-01-29 21:47:39 +01 - [Error-Duplicati.Library.Main.Operation.BackupHandler-FatalError]: Fatal error

Duplicati.Library.Interface.UserInformationException: Found 3 files that are missing from the remote storage, please run repair

at Duplicati.Library.Main.Operation.FilelistProcessor.VerifyRemoteList (Duplicati.Library.Main.BackendManager backend, Duplicati.Library.Main.Options options, Duplicati.Library.Main.Database.LocalDatabase database, Duplicati.Library.Main.IBackendWriter log, System.String protectedfile) [0x00203] in <db65b3c26950472bbb17359c9b89f17a>:0

at Duplicati.Library.Main.Operation.BackupHandler.PreBackupVerify (Duplicati.Library.Main.BackendManager backend, System.String protectedfile) [0x0005f] in <db65b3c26950472bbb17359c9b89f17a>:0
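For anyone comparing several such reports, the missing file names can be pulled out of the warning lines with a short Python sketch. The lines below are copied from the log data above; the regular expressions and the function name are my own assumptions about what is convenient to extract, not part of Duplicati.

```python
import re

log_lines = [
    "2019-01-29 21:47:38 +01 - [Warning-Duplicati.Library.Main.Operation.FilelistProcessor-MissingFile]: Missing file: duplicati-20190121T061505Z.dlist.zip",
    "2019-01-29 21:47:38 +01 - [Warning-Duplicati.Library.Main.Operation.FilelistProcessor-MissingFile]: Missing file: duplicati-b1ff4717fa9b243da87a7df3309394a0a.dblock.zip",
    "2019-01-29 21:47:38 +01 - [Warning-Duplicati.Library.Main.Operation.FilelistProcessor-MissingFile]: Missing file: duplicati-i0d7c7b9b8c144cbaaf581d140df8f7a8.dindex.zip",
    "2019-01-29 21:47:39 +01 - [Error-Duplicati.Library.Main.Operation.FilelistProcessor-MissingRemoteFiles]: Found 3 files that are missing from the remote storage, please run repair",
]

MISSING = re.compile(r"Missing file: (\S+)")
KIND = re.compile(r"\.(dlist|dblock|dindex)\.")


def missing_files(lines):
    """Return (file name, kind) for every 'Missing file:' warning line."""
    found = []
    for line in lines:
        match = MISSING.search(line)
        if match:
            name = match.group(1)
            kind = KIND.search(name)
            found.append((name, kind.group(1) if kind else "unknown"))
    return found


for name, kind in missing_files(log_lines):
    print(kind, name)
```

Here one file of each kind (dlist, dblock, dindex) is reported missing.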

After running Database -> Repair:

It’s bad that when the database repair fails, nothing about the error appears in the log or in the email notification, only in the pop-up window. Now running Database -> Recreate.