I have been using Duplicati for some 4 or 5 months without issue.

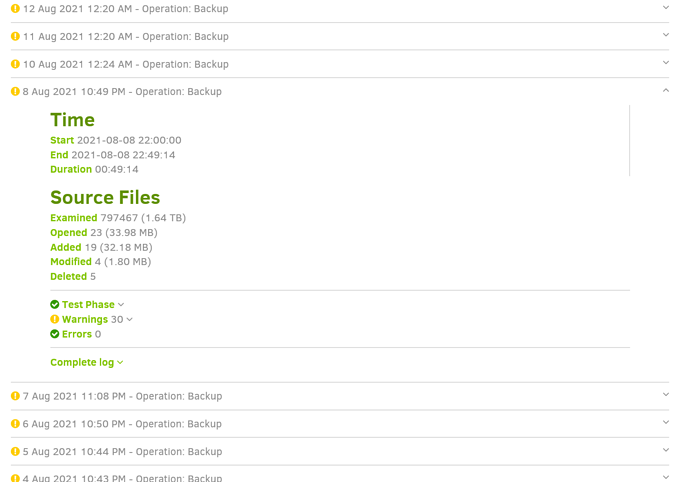

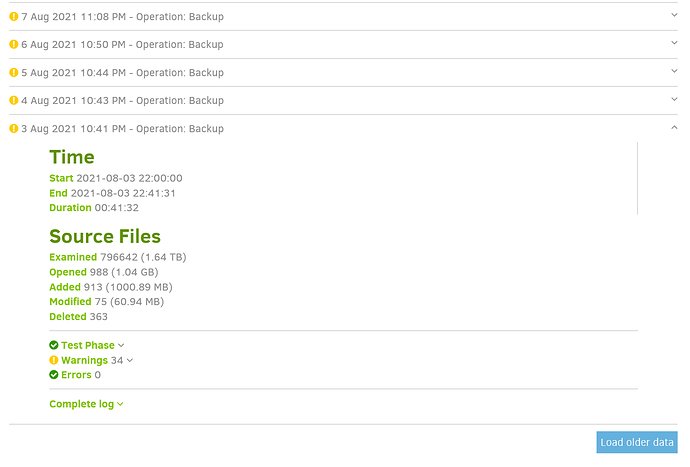

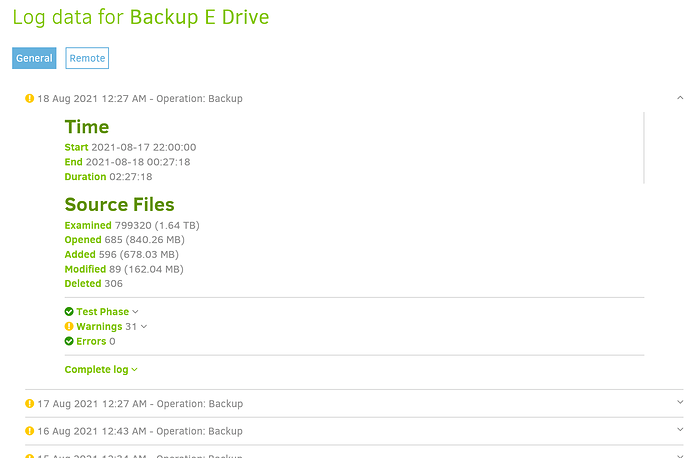

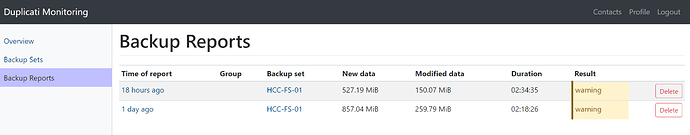

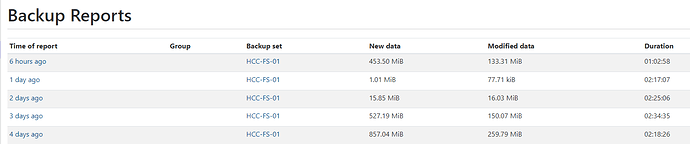

But recently (9th August 2021) a job did not run, and ever since that missed run the backups have been taking 2.5 hours, whereas before it the backup jobs always took between 40 and 60 minutes.

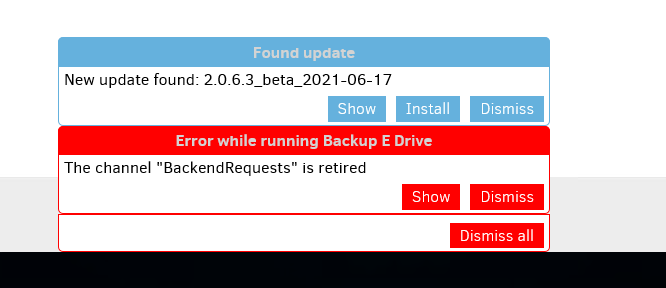

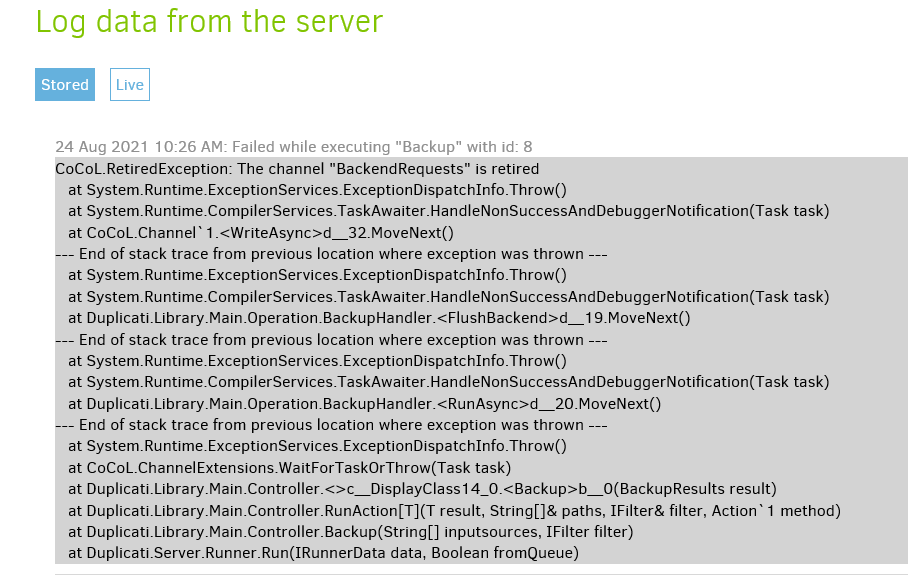

I have checked the logs but can’t see anything that stands out. I am also not too sure what I should be looking for.

I know that the server running Duplicati was online and working on the 9th when the backup did not run, as I can see in Windows Event Viewer that other processes were working.

No errors in Windows Event Viewer about Duplicati either

Windows Server 2019 (1809), OS build 17763.1397

New server as of 19/10/2020, running an Intel Xeon E5-2665 CPU (16 cores), 32GB RAM, 4TB SSD storage

The server is used as a file server

The job in question backs up the files from the local file server to a network location

This network location is another server on the LAN - which is used only for this backup - no issues with this other server either

For further troubleshooting I have copied a folder from my PC to the file server running Duplicati. The folder was exactly 1GB, made up of circa 500 files, large and small.

The copy from my PC to the file server running Duplicati took about 25 seconds

I then copied this same folder to the backup server (to test network performance) and it took only 22 seconds. To me this seems to rule out the network, the backup server (which receives the backups from Duplicati) and the file server itself, which is running Duplicati.
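In case anyone wants to repeat the copy test and compare numbers, here is a rough Python sketch of how the timings could be turned into throughput figures. It is only an illustration: the function names are made up for this post, "1GB" is assumed to mean 1 GiB (1024³ bytes), and `timed_copy` measures a plain file copy, which is not how Duplicati itself transfers data.

```python
import shutil
import time
from pathlib import Path
from typing import Tuple


def folder_size_bytes(folder: Path) -> int:
    """Total size of all regular files under `folder`."""
    return sum(p.stat().st_size for p in folder.rglob("*") if p.is_file())


def throughput_mib_per_s(size_bytes: int, seconds: float) -> float:
    """Convert a byte count and a duration into MiB per second."""
    return size_bytes / (1024 * 1024) / seconds


def timed_copy(src: Path, dst: Path) -> Tuple[int, float]:
    """Copy `src` to `dst` (which must not exist yet); return (bytes, seconds)."""
    size = folder_size_bytes(src)
    start = time.perf_counter()
    shutil.copytree(src, dst)
    elapsed = time.perf_counter() - start
    return size, elapsed


if __name__ == "__main__":
    one_gib = 1024 ** 3  # assumption: the "1GB" test folder is 1 GiB
    # Timings taken from the manual copy tests described above.
    print(f"To file server:   {throughput_mib_per_s(one_gib, 25):.1f} MiB/s")  # 41.0
    print(f"To backup server: {throughput_mib_per_s(one_gib, 22):.1f} MiB/s")  # 46.5
```

On those assumptions the two manual copies work out to roughly 41 and 46.5 MiB/s. To time a fresh copy, something like `timed_copy(Path(r"C:\test"), Path(r"\\backupserver\share\test"))` could be used, where both paths are placeholders rather than real locations on my servers.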

Thanks in advance for any help!