duplicati-log.zip (1.7 KB)

Wow, those are big replies with a lot of questions! Thanks for that!

Where to begin… I attached a file with a few log lines.

The log says my database is locked, but I don’t know how that could have happened.

It’s running in the container, and the container is doing nothing other than that.
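For reference, here is a minimal sketch of how SQLite itself comes to report “database is locked”: a second connection tries to start a write while the first one still holds the write lock. This uses Python’s standard `sqlite3` module on a throwaway file; the function name `second_writer_error` and the `demo` table are made up for illustration, and nothing here is taken from Duplicati’s code or confirms that this is what happened in my container.

```python
import os
import sqlite3
import tempfile


def second_writer_error(db_path):
    """Return the error text a second writer gets while the first holds the write lock."""
    # isolation_level=None gives autocommit mode, so BEGIN/ROLLBACK are explicit.
    first = sqlite3.connect(db_path, isolation_level=None)
    # timeout=0 makes the second connection fail immediately instead of waiting.
    second = sqlite3.connect(db_path, isolation_level=None, timeout=0)
    try:
        first.execute("CREATE TABLE IF NOT EXISTS demo (id INTEGER)")
        first.execute("BEGIN IMMEDIATE")  # first connection takes the write lock
        first.execute("INSERT INTO demo VALUES (1)")
        try:
            second.execute("BEGIN IMMEDIATE")  # second writer cannot get the lock
        except sqlite3.OperationalError as exc:
            return str(exc)
        second.execute("ROLLBACK")
        return None
    finally:
        if first.in_transaction:
            first.execute("ROLLBACK")
        first.close()
        second.close()


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        print(second_writer_error(os.path.join(tmp, "demo.sqlite")))
```

So the message only needs two connections to the same database file, and both may well belong to the same process; it does not require a second program inside the container.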

First, I use the Duplicati/Duplicati container.

It sounds a bit strange that an overwrite doesn’t overwrite when there is a file on the destination. It shouldn’t matter whether there is a file or what state that file is in.

The same goes for the timestamp version: that also needs to write a file regardless of the version on the destination.

On my database I ran “.tables”, with the following output:

sqlite> .tables

Block Configuration Fileset Operation

BlocklistHash DeletedBlock FilesetEntry PathPrefix

Blockset DuplicateBlock IndexBlockLink RemoteOperation

BlocksetEntry File LogData Remotevolume

ChangeJournalData FileLookup Metadataset Version

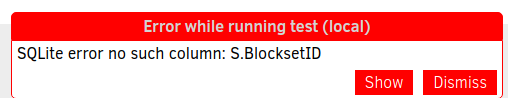

I ran the above query:

sqlite> SELECT DISTINCT "Fileset-C409B04BE8434E439BEAE376862253E4"."TargetPath"

...> ,"Fileset-C409B04BE8434E439BEAE376862253E4"."Path"

...> ,"Fileset-C409B04BE8434E439BEAE376862253E4"."ID"

...> ,"Blocks-C409B04BE8434E439BEAE376862253E4"."Index"

...> ,"Blocks-C409B04BE8434E439BEAE376862253E4"."Hash"

...> ,"Blocks-C409B04BE8434E439BEAE376862253E4"."Size"

...> FROM "Fileset-C409B04BE8434E439BEAE376862253E4"

...> ,"Blocks-C409B04BE8434E439BEAE376862253E4"

...> ,"LatestBlocksetIds-4327E7D8F93D2342BD9A31132B45EA18" S

...> ,"Block"

...> ,"BlocksetEntry"

...> WHERE "BlocksetEntry"."BlocksetID" = "S"."BlocksetID"

...> AND "BlocksetEntry"."BlockID" = "Block"."ID"

...> AND "Blocks-C409B04BE8434E439BEAE376862253E4"."Hash" = "Block"."Hash"

...> AND "Blocks-C409B04BE8434E439BEAE376862253E4"."Size" = "Block"."Size"

...> AND "S"."Path" = "Fileset-C409B04BE8434E439BEAE376862253E4"."Path"

...> AND "Blocks-C409B04BE8434E439BEAE376862253E4"."Index" = "BlocksetEntry"."Index"

...> AND "Fileset-C409B04BE8434E439BEAE376862253E4"."ID" = "Blocks-C409B04BE8434E439BEAE376862253E4"."FileID"

...> AND "Blocks-C409B04BE8434E439BEAE376862253E4"."Restored" = 0

...> AND "Blocks-C409B04BE8434E439BEAE376862253E4"."Metadata" = 0

...> AND "Fileset-C409B04BE8434E439BEAE376862253E4"."TargetPath" != "Fileset-C409B04BE8434E439BEAE376862253E4"."Path"

...> ORDER BY "Fileset-C409B04BE8434E439BEAE376862253E4"."ID"

...> ,"Blocks-C409B04BE8434E439BEAE376862253E4"."Index";

Error: no such table: Fileset-C409B04BE8434E439BEAE376862253E4


The .tables output doesn’t show any of the tables named in the query, so I don’t know what the query is doing or how I should see the results.
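One possible explanation, which is an assumption on my side and not something the log confirms, is that the tables with the long hex suffix are SQLite temporary tables. A temporary table is only visible to the connection that created it, so a separate `sqlite3` shell opened on the same file would never list it. The sketch below shows that general SQLite behaviour with Python’s standard `sqlite3` module; the table name `Fileset-DEMO` and the helper names are made up for illustration.

```python
import os
import sqlite3
import tempfile


def visible_tables(conn):
    """List permanent and temporary table names as seen by this connection."""
    rows = conn.execute(
        "SELECT name FROM sqlite_master WHERE type = 'table' "
        "UNION ALL "
        "SELECT name FROM sqlite_temp_master WHERE type = 'table'"
    )
    return sorted(row[0] for row in rows)


def demo(db_path):
    """Create one permanent and one temporary table, then compare two connections."""
    owner = sqlite3.connect(db_path)
    other = None
    try:
        owner.execute('CREATE TABLE "Block" (ID INTEGER)')
        # Hypothetical name: stands in for the GUID-suffixed tables in the query.
        owner.execute('CREATE TEMPORARY TABLE "Fileset-DEMO" (ID INTEGER)')
        owner.commit()
        other = sqlite3.connect(db_path)  # plays the role of a separate sqlite3 shell
        return visible_tables(owner), visible_tables(other)
    finally:
        owner.close()
        if other is not None:
            other.close()


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        owner_view, other_view = demo(os.path.join(tmp, "demo.sqlite"))
        print("creating connection sees:", owner_view)
        print("second connection sees:  ", other_view)
```

If that assumption holds, the query can only return rows when it runs on the same connection that created the tables, which would explain the “no such table” error from my shell.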

I also can’t find the location of the temp store in the Docker container. Maybe somebody can tell me where it is so I can check it?