Hello,

I’m trying to “Duplicate” from an Open Media Vault (Debian 7) NAS to a Synology DS215j. Both are connected to the same router, and I’m using FTP.

Everything is OK. But when I press “Run”, I get this message at the end:

Error while running test

Disk full. Path /tmp/dup-15ffe232-7af4-4e99-84b5-411bf397d703

The disk cannot be full; that is obvious, because I’m backing up a small folder with 3 GB of video files.

Any ideas?

Is the problem with Duplicati or the Synology?

That looks like a temp file that is generated on the SOURCE side. So check the free space on your debian NAS.

There is also a very slim chance as system and share disk.

One question more:

Duplicati can be installed on Synology, but can it also “pull” the data from Open Media Vault?

I just checked, and the problem is not on the Open Media Vault disk, so it is probably the Synology.

Hello @Nas2Nas, welcome to the forum!

I suspect @drwtsn32 is correct that you are running out of space on your source NAS. How much free space do you have in /tmp/ on that device and what settings are you using for your “Upload volume size” (--dblock-size) and --asynchronous-upload-limit?

You may need to lower your volume size and/or async upload limit, or adjust your --tempdir or --asynchronous-upload-folder to point to a location with more available space.
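A quick way to sanity-check this is to compare the free space in the temp folder with a rough worst-case estimate of what the pending volumes could occupy. The sketch below is only an approximation: the formula (upload limit plus one volume being built, times the volume size) is my own simplifying assumption, not a documented Duplicati calculation, and the function names are made up for illustration.

```python
import shutil
import tempfile


def estimate_temp_bytes(volume_size_mb: int, upload_limit: int) -> int:
    """Rough upper bound (assumption): queued volumes plus one being built."""
    return (upload_limit + 1) * volume_size_mb * 1024 * 1024


def has_enough_temp_space(path: str, volume_size_mb: int, upload_limit: int) -> bool:
    """Compare the free space at `path` with the rough estimate above."""
    free = shutil.disk_usage(path).free
    return free >= estimate_temp_bytes(volume_size_mb, upload_limit)


if __name__ == "__main__":
    temp_dir = tempfile.gettempdir()  # usually /tmp on Linux
    free_mb = shutil.disk_usage(temp_dir).free // (1024 * 1024)
    print(f"Free space in {temp_dir}: {free_mb} MB")
    # Example values: 50 MB volumes with the default limit of 4 pending uploads.
    print("Enough space:", has_enough_temp_space(temp_dir, 50, 4))
```

If the check fails, lowering either number or pointing the temp folder at a bigger disk should help.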

--asynchronous-upload-limit

When performing asynchronous uploads, Duplicati will create volumes that can be uploaded. To prevent Duplicati from generating too many volumes, this option limits the number of pending uploads. Set to zero to disable the limit.

Default value: “4”

--asynchronous-upload-folder

The pre-generated volumes will be placed into the temporary folder by default. This option can set a different folder for placing the temporary volumes. Despite the name, this also works for synchronous runs.

Default value: “<system specific>”

--tempdir

Duplicati will use the system default temporary folder. This option can be used to supply an alternative folder for temporary storage. Note that SQLite will always put temporary files in the system default temporary folder. Consider using the TMPDIR environment variable on Linux to set the temporary folder for both Duplicati and SQLite.

Default value: “<system specific>”
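To illustrate the TMPDIR idea, here is a small sketch that starts a child process with TMPDIR pointing at a chosen folder. The child here is just a Python one-liner used as a stand-in that reports the temp folder it resolved; in practice you would launch Duplicati the same way. The helper name `run_with_tmpdir` is hypothetical.

```python
import os
import subprocess
import sys
import tempfile


def run_with_tmpdir(command: list[str], tmpdir: str) -> str:
    """Run `command` with TMPDIR set to `tmpdir` and return its stdout."""
    env = dict(os.environ, TMPDIR=tmpdir)  # copy of the environment plus TMPDIR
    result = subprocess.run(
        command, env=env, capture_output=True, text=True, check=True
    )
    return result.stdout.strip()


# Stand-in child process (assumption): prints the temp folder it would use.
REPORT_TEMP = [sys.executable, "-c", "import tempfile; print(tempfile.gettempdir())"]

if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as scratch:
        # The child should report the scratch folder instead of the system default.
        print(run_with_tmpdir(REPORT_TEMP, scratch))
```

Because the variable is set for the whole process, everything running inside it picks up the same folder, which is the point of the TMPDIR suggestion.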

Do you mean: can Duplicati use OMV as a SOURCE for files to be backed up? As long as those OMV files can be mounted on the Synology (so they look local to Duplicati) or exposed from OMV as a UNC path (such as “\\MyOMV\MyFiles”), then yes.

But it should be noted that you won’t get the best performance from such a setup, because when a file is changed (and ALL files are considered changed during the first backup) Duplicati needs to read the entire file.

This means your entire “remote source” will be pulled over your network so Duplicati can look at it, then save it locally in blocks. Of course future backups should be much faster as they’ll just get file lists and check sizes / time stamps.
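The difference between the first run and later runs can be pictured with a simplified sketch like the one below. It is not Duplicati's actual implementation, just an illustration of the idea that only files whose size or timestamp differ from the previous run need to be read in full. The names `take_snapshot` and `files_to_read` are made up.

```python
import os
from typing import Dict, List, Tuple

# path -> (size in bytes, modification time in nanoseconds)
Snapshot = Dict[str, Tuple[int, int]]


def take_snapshot(root: str) -> Snapshot:
    """Record size and mtime for every file under `root` (cheap metadata only)."""
    snapshot: Snapshot = {}
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            info = os.stat(path)
            snapshot[path] = (info.st_size, info.st_mtime_ns)
    return snapshot


def files_to_read(previous: Snapshot, current: Snapshot) -> List[str]:
    """Files that are new or whose size/mtime changed since the previous run."""
    return sorted(p for p, meta in current.items() if previous.get(p) != meta)


if __name__ == "__main__":
    now = take_snapshot(".")
    # First backup: there is no previous snapshot, so every file must be read.
    print(len(files_to_read({}, now)), "files to read on the first run")
    # Later backup with nothing changed: no file has to be read.
    print(len(files_to_read(now, now)), "files to read when nothing changed")
```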


JonMikelV,

thank you very much for the clarification!

It seems I have very badly misunderstood “Upload volume size” (--dblock-size).

I should choose a much smaller “amount”.

I will give it a try and let you know.

For the rest (just as info):

Can you tell me where I change the following options: in the Duplicati GUI or in the OMV terminal?

--asynchronous-upload-limit

--asynchronous-upload-folder

--tempdir

The second option, installing Duplicati on the Synology, is off the table. But thanks for letting me know. It is very useful information.

You can add parameters in either the GUI or terminal, whatever works best for you.

Setting parameters via GUI

In the GUI you would:

- edit your job

- go to step 5 (Options)

- scroll down a little bit and expand “Advanced options” section

- choose the options you want from the “Add advanced option” select list

Note: If you have a setting you want to apply to ALL jobs (such as email notifications or tempdir) you can use the “Add advanced option” selector under main menu “Settings” -> “Default options”.

Setting parameters via command line

Just add the parameters you want to your command line shortcut with a double dash “--” before each one and a space between them (use quotes for paths in case they include a space). In your case you could add something like the following:

<current parms> --asynchronous-upload-limit=2 --asynchronous-upload-folder="/tmp/DuplicatiUploads/" --tempdir="/tmp/DuplicatiTempdir"
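If you generate the command line from a script, the quoting advice can be handled for you. This is a minimal sketch that turns a dictionary of options into shell-safe arguments; the helper name `build_options` and the path with a space in it are made up for the example.

```python
import shlex


def build_options(options: dict) -> list:
    """Turn {"name": value} pairs into ["--name=value", ...] arguments."""
    return [f"--{name}={value}" for name, value in options.items()]


if __name__ == "__main__":
    args = build_options({
        "asynchronous-upload-limit": 2,
        # Hypothetical folder containing a space, to show why quoting matters.
        "asynchronous-upload-folder": "/tmp/Duplicati Uploads/",
        "tempdir": "/tmp/DuplicatiTempdir",
    })
    # shlex.join quotes only the arguments that need it.
    print(shlex.join(args))
```

Only the argument containing a space ends up quoted; the others are passed through unchanged.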

Setting parameters via parameters file

If you have too many parameters to include in your shortcut or you’d prefer to be able to edit them in a text editor you can use the --parameters-file parameter as documented here.

Basically, just add the --parameters-file="<path to file>" parameter to your shortcut then create a text file at "<path to file>" with a single parameter per line.

In your case you’d end up with a file (let’s call it “/DuplicatiParms”) that would look something like this:

--asynchronous-upload-limit=2

--asynchronous-upload-folder="/tmp/DuplicatiUploads/"

--tempdir="/tmp/DuplicatiTempdir"

Note that, in general, parameter file values will OVERRIDE anything on the command line (or GUI), so if you have the same parameter in multiple places, Duplicati will use the parameter file, ELSE the command line, ELSE the GUI, ELSE the defaults.

Oh, and one nice thing about parameter files is that you can include comments! Any line that does NOT start with “--” is ignored and considered a comment.
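To make the comment rule and the override order concrete, here is a small sketch that mimics the behaviour described above. It is not Duplicati's own parser, just an illustration; the function names and the sample values are assumptions.

```python
def parse_parameters(text: str) -> dict:
    """Parse parameter-file text; lines not starting with "--" count as comments."""
    params = {}
    for line in text.splitlines():
        line = line.strip()
        if not line.startswith("--"):
            continue  # comment or blank line
        name, _, value = line[2:].partition("=")
        params[name] = value.strip('"')
    return params


def effective_options(defaults: dict, gui: dict, command_line: dict,
                      parameters_file: dict) -> dict:
    """Later layers win: defaults < GUI < command line < parameters file."""
    merged = {}
    for layer in (defaults, gui, command_line, parameters_file):
        merged.update(layer)
    return merged


if __name__ == "__main__":
    sample = '''This line is a comment
--asynchronous-upload-limit=2
--tempdir="/tmp/DuplicatiTempdir"
'''
    from_file = parse_parameters(sample)
    print(effective_options(
        defaults={"asynchronous-upload-limit": "4"},
        gui={},
        command_line={"tempdir": "/tmp"},
        parameters_file=from_file,
    ))
```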


(quote)“How much free space do you have in /tmp/ on that device and what settings are you using for your “Upload volume size” (--dblock-size)”

- This was the problem from the beginning: I had set “a skyrocketing value”.

Now it works. But I cannot say that the transfer speed between the two NAS devices is good.

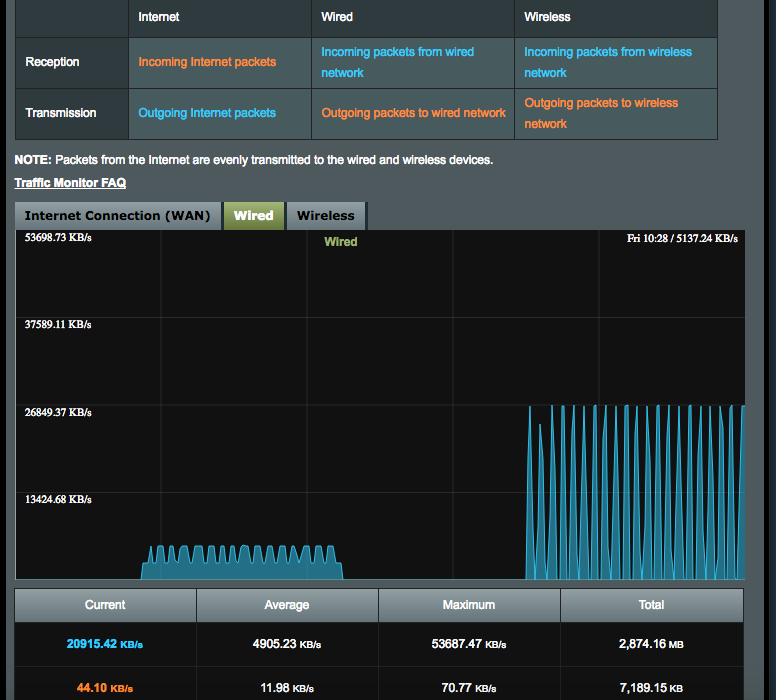

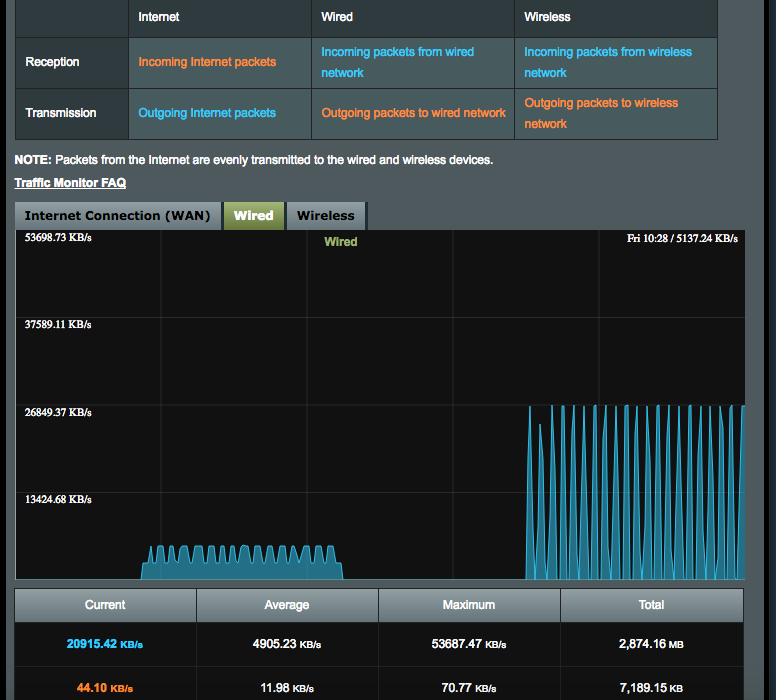

The following screenshot is based on an upload volume size of 10 MB:

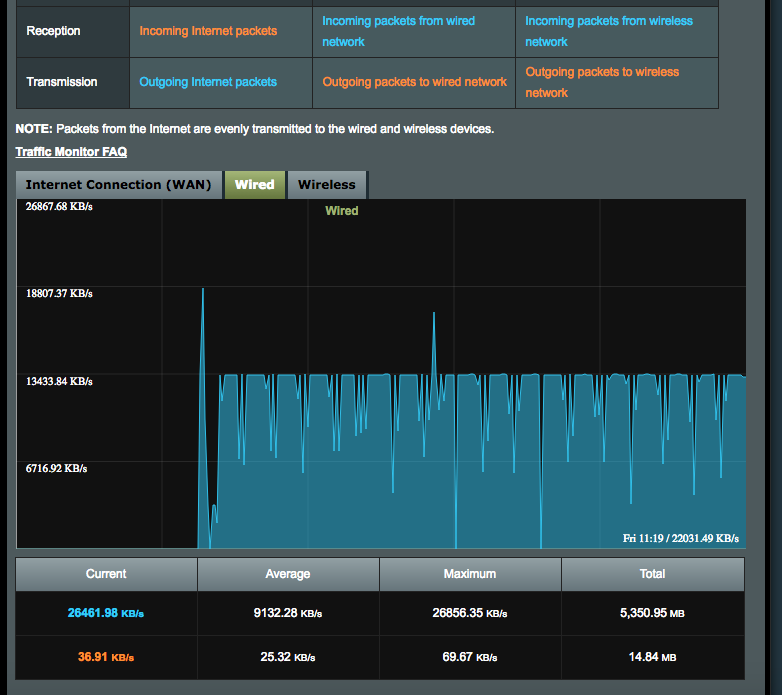

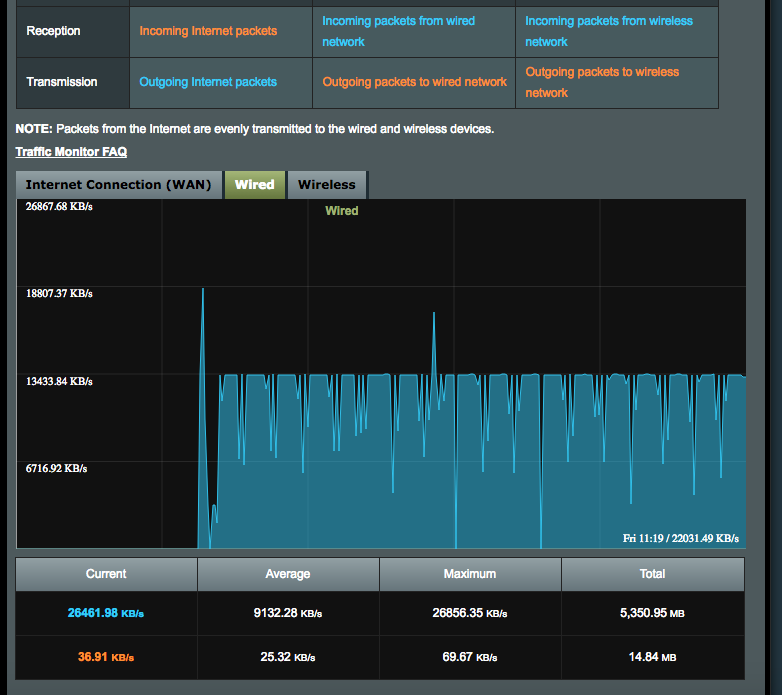

The next one (left is 10 MB, right is 100 MB; here we see that the speed is not consistent):

And in this one the upload volume size is 50 MB:

Now, I do understand that there is also a zipping (compression) step (note: I am NOT using encryption).

But is there any chance, through the options, to get a higher transfer speed that is also more consistent, as in the case of the 50 MB volume size?

I am asking this because some rsync tools offer much higher speeds (if that is relevant)!

Ahhhh - now you’re talking about performance optimization which is much harder to do because it varies so much depending on the environment and hardware involved.

You might want to search the forum for “performance” and see what others have to say, but most likely you’ll want to try things like:

- disable or lower encryption (you’ve already done that)

- lower the --zip-compression-level setting (more space needed at your destination)

- increase --asynchronous-upload-limit (more files queued for upload)

- use the latest version of Duplicati (though maybe not 2.0.3.6), which includes some optimizations and multi-threading updates
