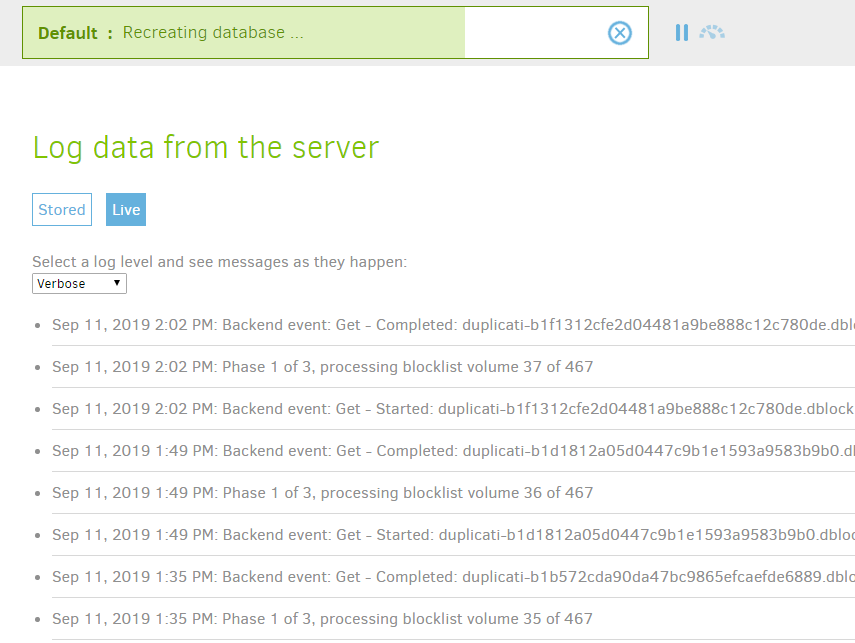

I just ran into an “Unexpected difference in fileset” error, and after some googling I tried recreating the local database to fix it. I was surprised by how long the recreation took, so I looked into why and found that the resource usage on my computer was quite odd. This is my backup size:

Source: 179.87 GB

Backup: 171.26 GB / 14 Versions

It is located on a hard-drive inside a NAS and accessed over the network from my computer.

First off, I noticed CPU usage never really went above 20% on my 4c/8t CPU, which is fine I guess. I suppose the recreation process is mostly single-threaded, but it would be nice if more cores were used to speed it up. Not really an odd thing though, so that’s fine.

However I noticed that there was a VERY large amount of data written to my disk: https://i.imgur.com/6sLIwK8.png

At the same time I noticed that VERY little RAM was being used by Duplicati: https://i.imgur.com/j6SMQOJ.png

Checking the TBW (terabytes written) on my SSDs confirms the heavy writing: https://i.imgur.com/BiyuqNI.png

The whole recreation process has taken about 8 hours so far, and it’s about 80% complete. Measuring the data written to my SSD over 1 hour shows about 300 GB.

Looking at the actual local database that has been created, it comes in at about 2 GB: https://i.imgur.com/qxqxXb8.png

To me these stats seem quite odd. I can’t come up with an explanation for why several terabytes of data need to be written to my SSD (reducing its lifespan, btw) when it all ends up being just a 2 GB file. If the process needs to iterate with writes, isn’t it better to do so in RAM and only write to disk when complete? That whole 2 GB file could easily fit in my RAM, and if not, it could be paged to disk by the OS. I feel like doing so would probably speed up the whole process substantially. If I ever need to run a recreation again, I will at least make sure to have the local database on a RAM disk while it runs and just copy over the completed files afterwards; I assume that would work. Or is there something weird with my system, and I’m the only one experiencing this odd resource usage?
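To put a rough number on it, here is a quick back-of-the-envelope calculation in Python. It is only a sketch: it assumes the 300 GB/hour I measured over one hour held steady for all 8 hours so far, which I have not verified, and the function name is just something I made up for illustration.

```python
def write_amplification(write_rate_gb_per_hour, hours_elapsed, final_size_gb):
    """Estimate total data written and how many times larger it is than the final file.

    Assumes the write rate was constant over the whole period (an assumption,
    since it was only measured over a single hour).
    """
    total_written_gb = write_rate_gb_per_hour * hours_elapsed
    return total_written_gb, total_written_gb / final_size_gb


# Figures from this post: ~300 GB/hour, ~8 hours so far, ~2 GB database
total_gb, factor = write_amplification(300, 8, 2)
print(f"~{total_gb / 1000:.1f} TB written, about {factor:.0f}x the final database size")
```

Under that assumption it works out to roughly 2.4 TB written for a 2 GB file, so on the order of a thousand times the size of the finished database.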

So I guess you can disregard everything I wrote if the later version already improved it! I didn’t know later versions are posted here on the forums.
