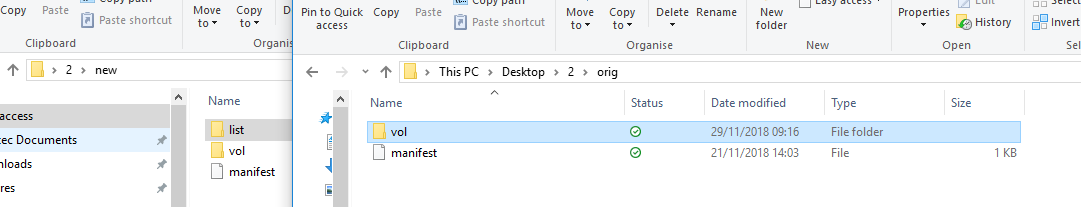

I'm seeing this on 2.0.5.0 Experimental. When compact actually rebuilds a dindex, it omits the list folder. It reproduces in under a minute on Windows: open File Explorer on the backup destination, then run backups until the file list there shrinks.

Between backups, you can (if you like) click on a dindex file to open the zip and see whether it has a list folder. I'm running an unencrypted backup with a 1 MB dblock size, 1 KB blocksize, retention of 5, and a compact threshold of 10.
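If clicking through each file gets tedious, a small Python sketch can do the same check over a whole folder. It assumes an unencrypted backup (plain `.dindex.zip` files, not `.aes`). It also assumes the list folder seen in Explorer shows up as zip entries whose names start with `list/`. `has_list_folder` and `report` are illustrative names and are not part of Duplicati.

```python
import sys
import zipfile
from pathlib import Path


def has_list_folder(dindex_path):
    """Return True if the zip has at least one entry under list/ (assumed layout)."""
    with zipfile.ZipFile(dindex_path) as zf:
        return any(name.startswith("list/") for name in zf.namelist())


def report(folder):
    """Print one line per *.dindex.zip in folder and return {file name: bool}."""
    results = {}
    for path in sorted(Path(folder).glob("*.dindex.zip")):
        results[path.name] = has_list_folder(path)
        status = "has list/" if results[path.name] else "NO list/ folder"
        print(f"{path.name}: {status}")
    return results


if __name__ == "__main__":
    # Usage: python check_dindex.py <backup destination folder>
    report(sys.argv[1] if len(sys.argv) > 1 else ".")
```

Run it between backups against the destination folder. The script name in the usage comment is arbitrary.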

The test script below is quick and dirty; if your batch skills are better than mine, feel free to improve it. The goal is to make files large enough to need more than one block, with variations over time that eventually trigger a compact.
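The following quick Python check works through the "more than one block" arithmetic. It assumes `length1100` really holds 1100 characters, as its name suggests, and it treats the 1 KB blocksize as 1024 bytes. In the batch script, `echo` also writes the space that sits before `>` and a CRLF line ending.

```python
import math

BLOCK_SIZE = 1024   # assumed: 1 KB blocksize taken as 1024 bytes
PAYLOAD_LEN = 1100  # assumed length of %length1100%, going by its name


def file_size(value):
    """Bytes written by: echo !value! %length1100% > file

    That is the value, a space, the payload, the space before the
    > redirect, and a CRLF line ending.
    """
    return len(str(value)) + 1 + PAYLOAD_LEN + 1 + 2


def blocks_needed(size, block_size=BLOCK_SIZE):
    """Number of fixed-size blocks needed to hold size bytes."""
    return math.ceil(size / block_size)


for value in (0, 32):
    size = file_size(value)
    print(f"value={value}: {size} bytes -> {blocks_needed(size)} blocks")
```

Every file lands just over 1024 bytes, so each one needs two blocks.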

%random% returns 0 to 32767, so dividing by 1000 (integer division in `set /a`) gives a value from 0 to 32. Using the raw random value would pretty much guarantee that an entire old dblock gets tossed. There needs to be a reason to do a merge.
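A short Python sketch can confirm the value range. It mimics `set /a` integer division over every possible `%random%` result (0 to 32767). Each file's content depends only on that value, so there are at most 33 distinct files, and new versions keep reusing blocks stored by earlier ones. `batch_value` is a hypothetical helper name.

```python
import random

RANDOM_MAX = 32767  # cmd's %random% yields an integer in 0..32767


def batch_value(r):
    """Mimic set /a value = r / 1000 (integer division, remainder dropped)."""
    return r // 1000


possible = {batch_value(r) for r in range(RANDOM_MAX + 1)}
print(min(possible), max(possible), len(possible))  # 0 32 33
# Note: 32 only comes from 32000..32767, so it is rarer than the other values.

# One pass of the script: 40 files, each drawing a fresh %random%
values = [batch_value(random.randint(0, RANDOM_MAX)) for _ in range(40)]
print(f"{len(set(values))} distinct file contents among 40 files")
```

With raw `%random%` there would be 32768 possible contents instead of 33, so repeats across versions would be rare.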

@echo off

set length1100=01234567890123456789012345678901234567890123456789012345678901234567890123456789012345678901234567890123456789012345678901234567890123456789012345678901234567890123456789012345678901234567890123456789012345678901234567890123456789012345678901234567890123456789012345678901234567890123456789012345678901234567890123456789012345678901234567890123456789012345678901234567890123456789012345678901234567890123456789012345678901234567890123456789012345678901234567890123456789012345678901234567890123456789012345678901234567890123456789012345678901234567890123456789012345678901234567890123456789012345678901234567890123456789012345678901234567890123456789012345678901234567890123456789012345678901234567890123456789012345678901234567890123456789012345678901234567890123456789012345678901234567890123456789012345678901234567890123456789012345678901234567890123456789012345678901234567890123456789012345678901234567890123456789012345678901234567890123456789012345678901234567890123456789012345678901234567890123456789012345678901234567890123456789012345678901234567890123456789012345678901234567890123456789

:loop

setlocal enabledelayedexpansion

for /L %%i in (1,1,40) do (

set /a value = !random! / 1000

echo !value! %length1100% > C:\random\%%i.txt

)

timeout /t 1

goto loop

EDIT:

While the original script reproduced the apparent problem, it did not change the files as intended. The cause is that cmd expands %variables% inside a parenthesized for body only once, when the whole block is parsed. The revised script uses delayed expansion (!random!, !value!) so the values are read on each iteration. The original quick-and-dirty script was a lot of lines made in an editor, which made such surprises less likely.

EDIT 2:

Was it filed, or did the chase pause, I wonder? I did try searching, but found only this topic.

I should also note that testing on 2.0.4.23 with either the originally posted or the revised script also fails. That assumes this is a failure and not some optimization for the special case of a single dblock.

Here's a log from 2.0.4.23 (newest entries first), which seems to confirm that the missing list folder makes it download the (only) dblock:

```
Jan 8, 2020 8:43 AM: The operation Repair has completed
Jan 8, 2020 8:43 AM: Recreate completed, and consistency checks completed, marking database as complete
Jan 8, 2020 8:43 AM: Recreate completed, verifying the database consistency
Jan 8, 2020 8:43 AM: Backend event: Get - Completed: duplicati-ba8418552f72341048515332330ded740.dblock.zip (68.22 KB)
Jan 8, 2020 8:43 AM: Backend event: Get - Started: duplicati-ba8418552f72341048515332330ded740.dblock.zip (68.22 KB)
Jan 8, 2020 8:43 AM: Processing required 1 blocklist volumes
Jan 8, 2020 8:43 AM: Backend event: Get - Completed: duplicati-ie1a5f5a984664e579e8ca3744b59bff2.dindex.zip (9.61 KB)
Jan 8, 2020 8:43 AM: Backend event: Get - Started: duplicati-ie1a5f5a984664e579e8ca3744b59bff2.dindex.zip (9.61 KB)
Jan 8, 2020 8:43 AM: Filelists restored, downloading 1 index files
Jan 8, 2020 8:43 AM: Backend event: Get - Completed: duplicati-20200108T132605Z.dlist.zip (3.76 KB)
Jan 8, 2020 8:43 AM: Backend event: Get - Started: duplicati-20200108T132605Z.dlist.zip (3.76 KB)
Jan 8, 2020 8:43 AM: Backend event: Get - Completed: duplicati-20200108T132600Z.dlist.zip (3.69 KB)
Jan 8, 2020 8:43 AM: Backend event: Get - Started: duplicati-20200108T132600Z.dlist.zip (3.69 KB)
Jan 8, 2020 8:43 AM: Backend event: Get - Completed: duplicati-20200108T132557Z.dlist.zip (3.62 KB)
Jan 8, 2020 8:43 AM: Backend event: Get - Started: duplicati-20200108T132557Z.dlist.zip (3.62 KB)
Jan 8, 2020 8:43 AM: Backend event: Get - Completed: duplicati-20200108T132553Z.dlist.zip (3.63 KB)
Jan 8, 2020 8:43 AM: Backend event: Get - Started: duplicati-20200108T132553Z.dlist.zip (3.63 KB)
Jan 8, 2020 8:43 AM: Backend event: Get - Completed: duplicati-20200108T132425Z.dlist.zip (3.56 KB)
Jan 8, 2020 8:43 AM: Backend event: Get - Started: duplicati-20200108T132425Z.dlist.zip (3.56 KB)
Jan 8, 2020 8:43 AM: Rebuild database started, downloading 5 filelists
Jan 8, 2020 8:43 AM: Backend event: List - Completed: (7 bytes)
Jan 8, 2020 8:43 AM: Backend event: List - Started: ()
Jan 8, 2020 8:43 AM: The operation Repair has started
```
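To check other Recreate logs for the same symptom, a rough Python sketch can count completed downloads per volume type. In this log, the symptom is a dblock download after "Processing required 1 blocklist volumes". The regex assumes the exact `Backend event: Get - Completed:` wording seen in the log. `downloads_by_type` is a made-up name, and `SAMPLE` is just a few lines copied from the log.

```python
import re
from collections import Counter

# Matches "Backend event: Get - Completed: <file> (<size>)" as worded in the log
GET_DONE = re.compile(r"Backend event: Get - Completed: (\S+) \(")


def downloads_by_type(log_text):
    """Count completed downloads per volume type (dlist, dindex, dblock)."""
    counts = Counter()
    for match in GET_DONE.finditer(log_text):
        name = match.group(1)
        for kind in ("dlist", "dindex", "dblock"):
            if f".{kind}." in name:
                counts[kind] += 1
    return counts


SAMPLE = """\
Jan 8, 2020 8:43 AM: Backend event: Get - Completed: duplicati-ba8418552f72341048515332330ded740.dblock.zip (68.22 KB)
Jan 8, 2020 8:43 AM: Processing required 1 blocklist volumes
Jan 8, 2020 8:43 AM: Backend event: Get - Completed: duplicati-ie1a5f5a984664e579e8ca3744b59bff2.dindex.zip (9.61 KB)
Jan 8, 2020 8:43 AM: Backend event: Get - Completed: duplicati-20200108T132605Z.dlist.zip (3.76 KB)
"""

print(dict(downloads_by_type(SAMPLE)))  # {'dblock': 1, 'dindex': 1, 'dlist': 1}
```

A nonzero dblock count during a Recreate is only a hint, not proof, that a dindex lacked the blocklist data.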