Will this do?

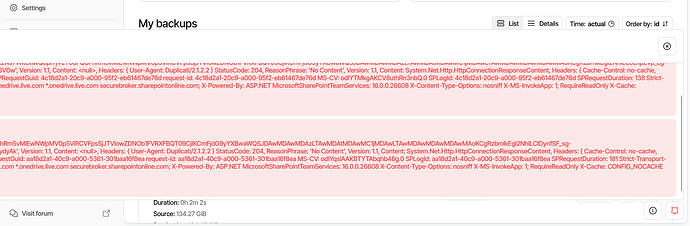

2025-10-23 13:15:24 -04 - [Warning-Duplicati.Library.Main.Backend.Handler-BackendManagerHandlerFailure]: Error in handler: Failed to parse response

Method: DELETE, RequestUri: 'https://xxxxxxxxxxxxx-my.sharepoint.com/personal/xxxxxxx_xxxxxxxxxxxxxxx_com/_api/v2.0/drive/items/01O3D4LCZ24WXHEWPNOFEJPXKUQSCC2OQ3/uploadSession?guid='2745ad63-eba2-47e2-92ec-22df83434421'&overwrite=True&rename=False&dc=0&tempauth=REDACTED', Version: 1.1, Content: <null>, Headers:

{

User-Agent: Duplicati/2.2.0.0

traceparent: 00-95ccb87524fe7109505eda16388a775f-ea47ac4ff99ee722-00

}

StatusCode: 204, ReasonPhrase: 'No Content', Version: 1.1, Content: System.Net.Http.HttpConnectionResponseContent, Headers:

{

Cache-Control: no-cache, no-store

Pragma: no-cache

P3P: CP="ALL IND DSP COR ADM CONo CUR CUSo IVAo IVDo PSA PSD TAI TELo OUR SAMo CNT COM INT NAV ONL PHY PRE PUR UNI"

X-NetworkStatistics: 0,4194720,0,2,587463,525568,525568,6797

X-SharePointHealthScore: 1

X-SP-SERVERSTATE: ReadOnly=0

ODATA-VERSION: 4.0

SPClientServiceRequestDuration: 208

X-AspNet-Version: 4.0.30319

IsOCDI: 0

X-DataBoundary: NONE

X-1DSCollectorUrl: https://mobile.events.data.microsoft.com/OneCollector/1.0/

X-AriaCollectorURL: https://browser.pipe.aria.microsoft.com/Collector/3.0/

SPRequestGuid: e443d2a1-0092-a000-8a40-80ea31826dd3

request-id: e443d2a1-0092-a000-8a40-80ea31826dd3

MS-CV: odJD5JIAAKCKQIDqMYJt0w.0

SPLogId: e443d2a1-0092-a000-8a40-80ea31826dd3

SPRequestDuration: 234

Strict-Transport-Security: max-age=31536000

X-Frame-Options: SAMEORIGIN

Content-Security-Policy: frame-ancestors 'self' teams.microsoft.com *.teams.microsoft.com *.skype.com *.teams.microsoft.us local.teams.office.com teams.cloud.microsoft *.office365.com goals.cloud.microsoft *.powerapps.com *.powerbi.com *.yammer.com engage.cloud.microsoft word.cloud.microsoft excel.cloud.microsoft powerpoint.cloud.microsoft *.officeapps.live.com *.office.com *.microsoft365.com m365.cloud.microsoft *.cloud.microsoft *.stream.azure-test.net *.dynamics.com *.microsoft.com onedrive.live.com *.onedrive.live.com securebroker.sharepointonline.com;

X-Powered-By: ASP.NET

MicrosoftSharePointTeamServices: 16.0.0.26608

X-Content-Type-Options: nosniff

X-MS-InvokeApp: 1; RequireReadOnly

X-Cache: CONFIG_NOCACHE

X-MSEdge-Ref: Ref A: 230CD7ED1DD742ECAD071A6D7A545AD5 Ref B: EWR311000108051 Ref C: 2025-10-23T17:15:24Z

Date: Thu, 23 Oct 2025 17:15:23 GMT

Expires: -1

}

<error reading body>: The stream was already consumed. It cannot be read again.

Duplicati.Library.Backend.MicrosoftGraph.MicrosoftGraphException: Failed to parse response

Method: DELETE, RequestUri: 'https://xxxxxxxxxxxxx-my.sharepoint.com/personal/xxxxxxx_xxxxxxxxxxxxxxx_com/_api/v2.0/drive/items/01O3D4LCZ24WXHEWPNOFEJPXKUQSCC2OQ3/uploadSession?guid='2745ad63-eba2-47e2-92ec-22df83434421'&overwrite=True&rename=False&dc=0&tempauth=REDACTED', Version: 1.1, Content: <null>, Headers:

{

User-Agent: Duplicati/2.2.0.0

traceparent: 00-95ccb87524fe7109505eda16388a775f-ea47ac4ff99ee722-00

}

StatusCode: 204, ReasonPhrase: 'No Content', Version: 1.1, Content: System.Net.Http.HttpConnectionResponseContent, Headers:

{

Cache-Control: no-cache, no-store

Pragma: no-cache

P3P: CP="ALL IND DSP COR ADM CONo CUR CUSo IVAo IVDo PSA PSD TAI TELo OUR SAMo CNT COM INT NAV ONL PHY PRE PUR UNI"

X-NetworkStatistics: 0,4194720,0,2,587463,525568,525568,6797

X-SharePointHealthScore: 1

X-SP-SERVERSTATE: ReadOnly=0

ODATA-VERSION: 4.0

SPClientServiceRequestDuration: 208

X-AspNet-Version: 4.0.30319

IsOCDI: 0

X-DataBoundary: NONE

X-1DSCollectorUrl: https://mobile.events.data.microsoft.com/OneCollector/1.0/

X-AriaCollectorURL: https://browser.pipe.aria.microsoft.com/Collector/3.0/

SPRequestGuid: e443d2a1-0092-a000-8a40-80ea31826dd3

request-id: e443d2a1-0092-a000-8a40-80ea31826dd3

MS-CV: odJD5JIAAKCKQIDqMYJt0w.0

SPLogId: e443d2a1-0092-a000-8a40-80ea31826dd3

SPRequestDuration: 234

Strict-Transport-Security: max-age=31536000

X-Frame-Options: SAMEORIGIN

Content-Security-Policy: frame-ancestors 'self' teams.microsoft.com *.teams.microsoft.com *.skype.com *.teams.microsoft.us local.teams.office.com teams.cloud.microsoft *.office365.com goals.cloud.microsoft *.powerapps.com *.powerbi.com *.yammer.com engage.cloud.microsoft word.cloud.microsoft excel.cloud.microsoft powerpoint.cloud.microsoft *.officeapps.live.com *.office.com *.microsoft365.com m365.cloud.microsoft *.cloud.microsoft *.stream.azure-test.net *.dynamics.com *.microsoft.com onedrive.live.com *.onedrive.live.com securebroker.sharepointonline.com;

X-Powered-By: ASP.NET

MicrosoftSharePointTeamServices: 16.0.0.26608

X-Content-Type-Options: nosniff

X-MS-InvokeApp: 1; RequireReadOnly

X-Cache: CONFIG_NOCACHE

X-MSEdge-Ref: Ref A: 230CD7ED1DD742ECAD071A6D7A545AD5 Ref B: EWR311000108051 Ref C: 2025-10-23T17:15:24Z

Date: Thu, 23 Oct 2025 17:15:23 GMT

Expires: -1

}

<error reading body>: The stream was already consumed. It cannot be read again.

at Duplicati.Library.Main.Backend.BackendManager.Handler.ExecuteWithRetry(PendingOperationBase op, CancellationToken cancellationToken)

at Duplicati.Library.Main.Backend.BackendManager.Handler.ReclaimCompletedTasks(List`1 tasks)

at Duplicati.Library.Main.Backend.BackendManager.Handler.EnsureAtMostNActiveTasks(Int32 n, List`1 tasks)

at Duplicati.Library.Main.Backend.BackendManager.Handler.EnsureAtMostNActiveTasks(Int32 uploads, Int32 downloads)

at Duplicati.Library.Main.Backend.BackendManager.Handler.Run(IReadChannel`1 requestChannel)

2025-10-23 13:15:24 -04 - [Warning-Duplicati.Library.Main.Backend.Handler-BackendManagerDisposeWhileActive]: Terminating 3 active uploads

2025-10-23 13:15:24 -04 - [Warning-Duplicati.Library.Main.Backend.Handler-BackendManagerDisposeError]: Error in active upload: Cancelled

2025-10-23 13:15:24 -04 - [Warning-Duplicati.Library.Main.Backend.Handler-BackendManagerDisposeError]: Terminating, but 2 active upload(s) are still active

2025-10-23 13:15:24 -04 - [Warning-Duplicati.Library.Main.Backend.Handler-BackendManagerDisposeError]: Error in active upload: Cancelled

2025-10-23 13:15:24 -04 - [Warning-Duplicati.Library.Main.Backend.Handler-BackendManagerDisposeError]: Terminating, but 1 active upload(s) are still active

2025-10-23 13:15:24 -04 - [Warning-Duplicati.Library.Main.Backend.Handler-BackendManagerDisposeError]: Error in active upload: Cancelled

2025-10-23 13:15:26 -04 - [Error-Duplicati.Library.Main.Operation.BackupHandler-FatalError]: Fatal error

Duplicati.Library.Backend.MicrosoftGraph.MicrosoftGraphException: Failed to parse response

Method: DELETE, RequestUri: 'https://xxxxxxxxxxxxx-my.sharepoint.com/personal/xxxxxxx_xxxxxxxxxxxxxxx_com/_api/v2.0/drive/items/01O3D4LCZ24WXHEWPNOFEJPXKUQSCC2OQ3/uploadSession?guid='2745ad63-eba2-47e2-92ec-22df83434421'&overwrite=True&rename=False&dc=0&tempauth=REDACTED', Version: 1.1, Content: <null>, Headers:

{

User-Agent: Duplicati/2.2.0.0

traceparent: 00-95ccb87524fe7109505eda16388a775f-ea47ac4ff99ee722-00

}

StatusCode: 204, ReasonPhrase: 'No Content', Version: 1.1, Content: System.Net.Http.HttpConnectionResponseContent, Headers:

{

Cache-Control: no-cache, no-store

Pragma: no-cache

P3P: CP="ALL IND DSP COR ADM CONo CUR CUSo IVAo IVDo PSA PSD TAI TELo OUR SAMo CNT COM INT NAV ONL PHY PRE PUR UNI"

X-NetworkStatistics: 0,4194720,0,2,587463,525568,525568,6797

X-SharePointHealthScore: 1

X-SP-SERVERSTATE: ReadOnly=0

ODATA-VERSION: 4.0

SPClientServiceRequestDuration: 208

X-AspNet-Version: 4.0.30319

IsOCDI: 0

X-DataBoundary: NONE

X-1DSCollectorUrl: https://mobile.events.data.microsoft.com/OneCollector/1.0/

X-AriaCollectorURL: https://browser.pipe.aria.microsoft.com/Collector/3.0/

SPRequestGuid: e443d2a1-0092-a000-8a40-80ea31826dd3

request-id: e443d2a1-0092-a000-8a40-80ea31826dd3

MS-CV: odJD5JIAAKCKQIDqMYJt0w.0

SPLogId: e443d2a1-0092-a000-8a40-80ea31826dd3

SPRequestDuration: 234

Strict-Transport-Security: max-age=31536000

X-Frame-Options: SAMEORIGIN

Content-Security-Policy: frame-ancestors 'self' teams.microsoft.com *.teams.microsoft.com *.skype.com *.teams.microsoft.us local.teams.office.com teams.cloud.microsoft *.office365.com goals.cloud.microsoft *.powerapps.com *.powerbi.com *.yammer.com engage.cloud.microsoft word.cloud.microsoft excel.cloud.microsoft powerpoint.cloud.microsoft *.officeapps.live.com *.office.com *.microsoft365.com m365.cloud.microsoft *.cloud.microsoft *.stream.azure-test.net *.dynamics.com *.microsoft.com onedrive.live.com *.onedrive.live.com securebroker.sharepointonline.com;

X-Powered-By: ASP.NET

MicrosoftSharePointTeamServices: 16.0.0.26608

X-Content-Type-Options: nosniff

X-MS-InvokeApp: 1; RequireReadOnly

X-Cache: CONFIG_NOCACHE

X-MSEdge-Ref: Ref A: 230CD7ED1DD742ECAD071A6D7A545AD5 Ref B: EWR311000108051 Ref C: 2025-10-23T17:15:24Z

Date: Thu, 23 Oct 2025 17:15:23 GMT

Expires: -1

}

<error reading body>: The stream was already consumed. It cannot be read again.

at Duplicati.Library.Main.Backend.BackendManager.Handler.ExecuteWithRetry(PendingOperationBase op, CancellationToken cancellationToken)

at Duplicati.Library.Main.Backend.BackendManager.Handler.ReclaimCompletedTasks(List`1 tasks)

at Duplicati.Library.Main.Backend.BackendManager.Handler.EnsureAtMostNActiveTasks(Int32 n, List`1 tasks)

at Duplicati.Library.Main.Backend.BackendManager.Handler.EnsureAtMostNActiveTasks(Int32 uploads, Int32 downloads)

at Duplicati.Library.Main.Backend.BackendManager.Handler.Run(IReadChannel`1 requestChannel)

at Duplicati.Library.Main.Backend.BackendManager.PutAsync(VolumeWriterBase volume, IndexVolumeWriter indexVolume, Action indexVolumeFinished, Boolean waitForComplete, Func`1 onDbUpdate, CancellationToken cancelToken)

at Duplicati.Library.Main.Operation.Backup.DataBlockProcessor.<>c__DisplayClass0_0.<<Run>b__0>d.MoveNext()

--- End of stack trace from previous location ---

at Duplicati.Library.Main.Operation.Backup.DataBlockProcessor.<>c__DisplayClass0_0.<<Run>b__0>d.MoveNext()

--- End of stack trace from previous location ---

at CoCoL.AutomationExtensions.RunTask[T](T channels, Func`2 method, Boolean catchRetiredExceptions)

at Duplicati.Library.Main.Operation.BackupHandler.RunMainOperation(Channels channels, ISourceProvider source, UsnJournalService journalService, BackupDatabase database, IBackendManager backendManager, BackupStatsCollector stats, Options options, IFilter filter, BackupResults result, ITaskReader taskreader, Int64 filesetid, Int64 lastfilesetid)

at Duplicati.Library.Main.Operation.BackupHandler.RunAsync(String[] sources, IBackendManager backendManager, IFilter filter)

2025-10-23 13:15:26 -04 - [Warning-Duplicati.Library.Main.Backend.BackendManager-BackendManagerShutdown]: Backend manager queue runner crashed

System.AggregateException: One or more errors occurred. (Failed to parse response

Method: DELETE, RequestUri: 'https://xxxxxxxxxxxxx-my.sharepoint.com/personal/xxxxxxx_xxxxxxxxxxxxxxx_com/_api/v2.0/drive/items/01O3D4LCZ24WXHEWPNOFEJPXKUQSCC2OQ3/uploadSession?guid='2745ad63-eba2-47e2-92ec-22df83434421'&overwrite=True&rename=False&dc=0&tempauth=REDACTED', Version: 1.1, Content: <null>, Headers:

{

User-Agent: Duplicati/2.2.0.0

traceparent: 00-95ccb87524fe7109505eda16388a775f-ea47ac4ff99ee722-00

}

StatusCode: 204, ReasonPhrase: 'No Content', Version: 1.1, Content: System.Net.Http.HttpConnectionResponseContent, Headers:

{

Cache-Control: no-cache, no-store

Pragma: no-cache

P3P: CP="ALL IND DSP COR ADM CONo CUR CUSo IVAo IVDo PSA PSD TAI TELo OUR SAMo CNT COM INT NAV ONL PHY PRE PUR UNI"

X-NetworkStatistics: 0,4194720,0,2,587463,525568,525568,6797

X-SharePointHealthScore: 1

X-SP-SERVERSTATE: ReadOnly=0

ODATA-VERSION: 4.0

SPClientServiceRequestDuration: 208

X-AspNet-Version: 4.0.30319

IsOCDI: 0

X-DataBoundary: NONE

X-1DSCollectorUrl: https://mobile.events.data.microsoft.com/OneCollector/1.0/

X-AriaCollectorURL: https://browser.pipe.aria.microsoft.com/Collector/3.0/

SPRequestGuid: e443d2a1-0092-a000-8a40-80ea31826dd3

request-id: e443d2a1-0092-a000-8a40-80ea31826dd3

MS-CV: odJD5JIAAKCKQIDqMYJt0w.0

SPLogId: e443d2a1-0092-a000-8a40-80ea31826dd3

SPRequestDuration: 234

Strict-Transport-Security: max-age=31536000

X-Frame-Options: SAMEORIGIN

Content-Security-Policy: frame-ancestors 'self' teams.microsoft.com *.teams.microsoft.com *.skype.com *.teams.microsoft.us local.teams.office.com teams.cloud.microsoft *.office365.com goals.cloud.microsoft *.powerapps.com *.powerbi.com *.yammer.com engage.cloud.microsoft word.cloud.microsoft excel.cloud.microsoft powerpoint.cloud.microsoft *.officeapps.live.com *.office.com *.microsoft365.com m365.cloud.microsoft *.cloud.microsoft *.stream.azure-test.net *.dynamics.com *.microsoft.com onedrive.live.com *.onedrive.live.com securebroker.sharepointonline.com;

X-Powered-By: ASP.NET

MicrosoftSharePointTeamServices: 16.0.0.26608

X-Content-Type-Options: nosniff

X-MS-InvokeApp: 1; RequireReadOnly

X-Cache: CONFIG_NOCACHE

X-MSEdge-Ref: Ref A: 230CD7ED1DD742ECAD071A6D7A545AD5 Ref B: EWR311000108051 Ref C: 2025-10-23T17:15:24Z

Date: Thu, 23 Oct 2025 17:15:23 GMT

Expires: -1

}

<error reading body>: The stream was already consumed. It cannot be read again.)

---> Duplicati.Library.Backend.MicrosoftGraph.MicrosoftGraphException: Failed to parse response

Method: DELETE, RequestUri: 'https://xxxxxxxxxxxxx-my.sharepoint.com/personal/xxxxxxx_xxxxxxxxxxxxxxx_com/_api/v2.0/drive/items/01O3D4LCZ24WXHEWPNOFEJPXKUQSCC2OQ3/uploadSession?guid='2745ad63-eba2-47e2-92ec-22df83434421'&overwrite=True&rename=False&dc=0&tempauth=REDACTED', Version: 1.1, Content: <null>, Headers:

{

User-Agent: Duplicati/2.2.0.0

traceparent: 00-95ccb87524fe7109505eda16388a775f-ea47ac4ff99ee722-00

}

StatusCode: 204, ReasonPhrase: 'No Content', Version: 1.1, Content: System.Net.Http.HttpConnectionResponseContent, Headers:

{

Cache-Control: no-cache, no-store

Pragma: no-cache

P3P: CP="ALL IND DSP COR ADM CONo CUR CUSo IVAo IVDo PSA PSD TAI TELo OUR SAMo CNT COM INT NAV ONL PHY PRE PUR UNI"

X-NetworkStatistics: 0,4194720,0,2,587463,525568,525568,6797

X-SharePointHealthScore: 1

X-SP-SERVERSTATE: ReadOnly=0

ODATA-VERSION: 4.0

SPClientServiceRequestDuration: 208

X-AspNet-Version: 4.0.30319

IsOCDI: 0

X-DataBoundary: NONE

X-1DSCollectorUrl: https://mobile.events.data.microsoft.com/OneCollector/1.0/

X-AriaCollectorURL: https://browser.pipe.aria.microsoft.com/Collector/3.0/

SPRequestGuid: e443d2a1-0092-a000-8a40-80ea31826dd3

request-id: e443d2a1-0092-a000-8a40-80ea31826dd3

MS-CV: odJD5JIAAKCKQIDqMYJt0w.0

SPLogId: e443d2a1-0092-a000-8a40-80ea31826dd3

SPRequestDuration: 234

Strict-Transport-Security: max-age=31536000

X-Frame-Options: SAMEORIGIN

Content-Security-Policy: frame-ancestors 'self' teams.microsoft.com *.teams.microsoft.com *.skype.com *.teams.microsoft.us local.teams.office.com teams.cloud.microsoft *.office365.com goals.cloud.microsoft *.powerapps.com *.powerbi.com *.yammer.com engage.cloud.microsoft word.cloud.microsoft excel.cloud.microsoft powerpoint.cloud.microsoft *.officeapps.live.com *.office.com *.microsoft365.com m365.cloud.microsoft *.cloud.microsoft *.stream.azure-test.net *.dynamics.com *.microsoft.com onedrive.live.com *.onedrive.live.com securebroker.sharepointonline.com;

X-Powered-By: ASP.NET

MicrosoftSharePointTeamServices: 16.0.0.26608

X-Content-Type-Options: nosniff

X-MS-InvokeApp: 1; RequireReadOnly

X-Cache: CONFIG_NOCACHE

X-MSEdge-Ref: Ref A: 230CD7ED1DD742ECAD071A6D7A545AD5 Ref B: EWR311000108051 Ref C: 2025-10-23T17:15:24Z

Date: Thu, 23 Oct 2025 17:15:23 GMT

Expires: -1

}

<error reading body>: The stream was already consumed. It cannot be read again.

at Duplicati.Library.Main.Backend.BackendManager.Handler.ExecuteWithRetry(PendingOperationBase op, CancellationToken cancellationToken)

at Duplicati.Library.Main.Backend.BackendManager.Handler.ReclaimCompletedTasks(List`1 tasks)

at Duplicati.Library.Main.Backend.BackendManager.Handler.EnsureAtMostNActiveTasks(Int32 n, List`1 tasks)

at Duplicati.Library.Main.Backend.BackendManager.Handler.EnsureAtMostNActiveTasks(Int32 uploads, Int32 downloads)

at Duplicati.Library.Main.Backend.BackendManager.Handler.Run(IReadChannel`1 requestChannel)

at Duplicati.Library.Main.Backend.BackendManager.PutAsync(VolumeWriterBase volume, IndexVolumeWriter indexVolume, Action indexVolumeFinished, Boolean waitForComplete, Func`1 onDbUpdate, CancellationToken cancelToken)

at Duplicati.Library.Main.Operation.Backup.DataBlockProcessor.<>c__DisplayClass0_0.<<Run>b__0>d.MoveNext()

--- End of stack trace from previous location ---

at Duplicati.Library.Main.Operation.Backup.DataBlockProcessor.<>c__DisplayClass0_0.<<Run>b__0>d.MoveNext()

--- End of stack trace from previous location ---

at CoCoL.AutomationExtensions.RunTask[T](T channels, Func`2 method, Boolean catchRetiredExceptions)

at Duplicati.Library.Main.Operation.BackupHandler.RunMainOperation(Channels channels, ISourceProvider source, UsnJournalService journalService, BackupDatabase database, IBackendManager backendManager, BackupStatsCollector stats, Options options, IFilter filter, BackupResults result, ITaskReader taskreader, Int64 filesetid, Int64 lastfilesetid)

at Duplicati.Library.Main.Operation.BackupHandler.RunAsync(String[] sources, IBackendManager backendManager, IFilter filter)

at Duplicati.Library.Main.Controller.<>c__DisplayClass22_0.<<Backup>b__0>d.MoveNext()

--- End of stack trace from previous location ---

at Duplicati.Library.Utility.Utility.Await(Task task)

at Duplicati.Library.Main.Controller.RunAction[T](T result, String[]& paths, IFilter& filter, Func`3 method)

--- End of inner exception stack trace ---

2025-10-23 13:15:26 -04 - [Error-Duplicati.Library.Main.Controller-FailedOperation]: The operation Backup has failed

Duplicati.Library.Backend.MicrosoftGraph.MicrosoftGraphException: Failed to parse response

Method: DELETE, RequestUri: 'https://xxxxxxxxxxxxx-my.sharepoint.com/personal/xxxxxxx_xxxxxxxxxxxxxxx_com/_api/v2.0/drive/items/01O3D4LCZ24WXHEWPNOFEJPXKUQSCC2OQ3/uploadSession?guid='2745ad63-eba2-47e2-92ec-22df83434421'&overwrite=True&rename=False&dc=0&tempauth=REDACTED', Version: 1.1, Content: <null>, Headers:

{

User-Agent: Duplicati/2.2.0.0

traceparent: 00-95ccb87524fe7109505eda16388a775f-ea47ac4ff99ee722-00

}

StatusCode: 204, ReasonPhrase: 'No Content', Version: 1.1, Content: System.Net.Http.HttpConnectionResponseContent, Headers:

{

Cache-Control: no-cache, no-store

Pragma: no-cache

P3P: CP="ALL IND DSP COR ADM CONo CUR CUSo IVAo IVDo PSA PSD TAI TELo OUR SAMo CNT COM INT NAV ONL PHY PRE PUR UNI"

X-NetworkStatistics: 0,4194720,0,2,587463,525568,525568,6797

X-SharePointHealthScore: 1

X-SP-SERVERSTATE: ReadOnly=0

ODATA-VERSION: 4.0

SPClientServiceRequestDuration: 208

X-AspNet-Version: 4.0.30319

IsOCDI: 0

X-DataBoundary: NONE

X-1DSCollectorUrl: https://mobile.events.data.microsoft.com/OneCollector/1.0/

X-AriaCollectorURL: https://browser.pipe.aria.microsoft.com/Collector/3.0/

SPRequestGuid: e443d2a1-0092-a000-8a40-80ea31826dd3

request-id: e443d2a1-0092-a000-8a40-80ea31826dd3

MS-CV: odJD5JIAAKCKQIDqMYJt0w.0

SPLogId: e443d2a1-0092-a000-8a40-80ea31826dd3

SPRequestDuration: 234

Strict-Transport-Security: max-age=31536000

X-Frame-Options: SAMEORIGIN

Content-Security-Policy: frame-ancestors 'self' teams.microsoft.com *.teams.microsoft.com *.skype.com *.teams.microsoft.us local.teams.office.com teams.cloud.microsoft *.office365.com goals.cloud.microsoft *.powerapps.com *.powerbi.com *.yammer.com engage.cloud.microsoft word.cloud.microsoft excel.cloud.microsoft powerpoint.cloud.microsoft *.officeapps.live.com *.office.com *.microsoft365.com m365.cloud.microsoft *.cloud.microsoft *.stream.azure-test.net *.dynamics.com *.microsoft.com onedrive.live.com *.onedrive.live.com securebroker.sharepointonline.com;

X-Powered-By: ASP.NET

MicrosoftSharePointTeamServices: 16.0.0.26608

X-Content-Type-Options: nosniff

X-MS-InvokeApp: 1; RequireReadOnly

X-Cache: CONFIG_NOCACHE

X-MSEdge-Ref: Ref A: 230CD7ED1DD742ECAD071A6D7A545AD5 Ref B: EWR311000108051 Ref C: 2025-10-23T17:15:24Z

Date: Thu, 23 Oct 2025 17:15:23 GMT

Expires: -1

}

<error reading body>: The stream was already consumed. It cannot be read again.

at Duplicati.Library.Main.Backend.BackendManager.Handler.ExecuteWithRetry(PendingOperationBase op, CancellationToken cancellationToken)

at Duplicati.Library.Main.Backend.BackendManager.Handler.ReclaimCompletedTasks(List`1 tasks)

at Duplicati.Library.Main.Backend.BackendManager.Handler.EnsureAtMostNActiveTasks(Int32 n, List`1 tasks)

at Duplicati.Library.Main.Backend.BackendManager.Handler.EnsureAtMostNActiveTasks(Int32 uploads, Int32 downloads)

at Duplicati.Library.Main.Backend.BackendManager.Handler.Run(IReadChannel`1 requestChannel)

at Duplicati.Library.Main.Backend.BackendManager.PutAsync(VolumeWriterBase volume, IndexVolumeWriter indexVolume, Action indexVolumeFinished, Boolean waitForComplete, Func`1 onDbUpdate, CancellationToken cancelToken)

at Duplicati.Library.Main.Operation.Backup.DataBlockProcessor.<>c__DisplayClass0_0.<<Run>b__0>d.MoveNext()

--- End of stack trace from previous location ---

at Duplicati.Library.Main.Operation.Backup.DataBlockProcessor.<>c__DisplayClass0_0.<<Run>b__0>d.MoveNext()

--- End of stack trace from previous location ---

at CoCoL.AutomationExtensions.RunTask[T](T channels, Func`2 method, Boolean catchRetiredExceptions)

at Duplicati.Library.Main.Operation.BackupHandler.RunMainOperation(Channels channels, ISourceProvider source, UsnJournalService journalService, BackupDatabase database, IBackendManager backendManager, BackupStatsCollector stats, Options options, IFilter filter, BackupResults result, ITaskReader taskreader, Int64 filesetid, Int64 lastfilesetid)

at Duplicati.Library.Main.Operation.BackupHandler.RunAsync(String[] sources, IBackendManager backendManager, IFilter filter)

at Duplicati.Library.Main.Controller.<>c__DisplayClass22_0.<<Backup>b__0>d.MoveNext()

--- End of stack trace from previous location ---

at Duplicati.Library.Utility.Utility.Await(Task task)

at Duplicati.Library.Main.Controller.RunAction[T](T result, String[]& paths, IFilter& filter, Func`3 method)

Exception: Duplicati.Library.Backend.MicrosoftGraph.MicrosoftGraphException: Failed to parse response

Method: DELETE, RequestUri: 'https://xxxxxxxxxxxxx-my.sharepoint.com/personal/xxxxxxx_xxxxxxxxxxxxxxx_com/_api/v2.0/drive/items/01O3D4LCZ24WXHEWPNOFEJPXKUQSCC2OQ3/uploadSession?guid='2745ad63-eba2-47e2-92ec-22df83434421'&overwrite=True&rename=False&dc=0&tempauth=REDACTED', Version: 1.1, Content: <null>, Headers:

{

User-Agent: Duplicati/2.2.0.0

traceparent: 00-95ccb87524fe7109505eda16388a775f-ea47ac4ff99ee722-00

}

StatusCode: 204, ReasonPhrase: 'No Content', Version: 1.1, Content: System.Net.Http.HttpConnectionResponseContent, Headers:

{

Cache-Control: no-cache, no-store

Pragma: no-cache

P3P: CP="ALL IND DSP COR ADM CONo CUR CUSo IVAo IVDo PSA PSD TAI TELo OUR SAMo CNT COM INT NAV ONL PHY PRE PUR UNI"

X-NetworkStatistics: 0,4194720,0,2,587463,525568,525568,6797

X-SharePointHealthScore: 1

X-SP-SERVERSTATE: ReadOnly=0

ODATA-VERSION: 4.0

SPClientServiceRequestDuration: 208

X-AspNet-Version: 4.0.30319

IsOCDI: 0

X-DataBoundary: NONE

X-1DSCollectorUrl: https://mobile.events.data.microsoft.com/OneCollector/1.0/

X-AriaCollectorURL: https://browser.pipe.aria.microsoft.com/Collector/3.0/

SPRequestGuid: e443d2a1-0092-a000-8a40-80ea31826dd3

request-id: e443d2a1-0092-a000-8a40-80ea31826dd3

MS-CV: odJD5JIAAKCKQIDqMYJt0w.0

SPLogId: e443d2a1-0092-a000-8a40-80ea31826dd3

SPRequestDuration: 234

Strict-Transport-Security: max-age=31536000

X-Frame-Options: SAMEORIGIN

Content-Security-Policy: frame-ancestors 'self' teams.microsoft.com *.teams.microsoft.com *.skype.com *.teams.microsoft.us local.teams.office.com teams.cloud.microsoft *.office365.com goals.cloud.microsoft *.powerapps.com *.powerbi.com *.yammer.com engage.cloud.microsoft word.cloud.microsoft excel.cloud.microsoft powerpoint.cloud.microsoft *.officeapps.live.com *.office.com *.microsoft365.com m365.cloud.microsoft *.cloud.microsoft *.stream.azure-test.net *.dynamics.com *.microsoft.com onedrive.live.com *.onedrive.live.com securebroker.sharepointonline.com;

X-Powered-By: ASP.NET

MicrosoftSharePointTeamServices: 16.0.0.26608

X-Content-Type-Options: nosniff

X-MS-InvokeApp: 1; RequireReadOnly

X-Cache: CONFIG_NOCACHE

X-MSEdge-Ref: Ref A: 230CD7ED1DD742ECAD071A6D7A545AD5 Ref B: EWR311000108051 Ref C: 2025-10-23T17:15:24Z

Date: Thu, 23 Oct 2025 17:15:23 GMT

Expires: -1

}

<error reading body>: The stream was already consumed. It cannot be read again.

at Duplicati.Library.Main.Backend.BackendManager.Handler.ExecuteWithRetry(PendingOperationBase op, CancellationToken cancellationToken)

at Duplicati.Library.Main.Backend.BackendManager.Handler.ReclaimCompletedTasks(List`1 tasks)

at Duplicati.Library.Main.Backend.BackendManager.Handler.EnsureAtMostNActiveTasks(Int32 n, List`1 tasks)

at Duplicati.Library.Main.Backend.BackendManager.Handler.EnsureAtMostNActiveTasks(Int32 uploads, Int32 downloads)

at Duplicati.Library.Main.Backend.BackendManager.Handler.Run(IReadChannel`1 requestChannel)

at Duplicati.Library.Main.Backend.BackendManager.PutAsync(VolumeWriterBase volume, IndexVolumeWriter indexVolume, Action indexVolumeFinished, Boolean waitForComplete, Func`1 onDbUpdate, CancellationToken cancelToken)

at Duplicati.Library.Main.Operation.Backup.DataBlockProcessor.<>c__DisplayClass0_0.<<Run>b__0>d.MoveNext()

--- End of stack trace from previous location ---

at Duplicati.Library.Main.Operation.Backup.DataBlockProcessor.<>c__DisplayClass0_0.<<Run>b__0>d.MoveNext()

--- End of stack trace from previous location ---

at CoCoL.AutomationExtensions.RunTask[T](T channels, Func`2 method, Boolean catchRetiredExceptions)

at Duplicati.Library.Main.Operation.BackupHandler.RunMainOperation(Channels channels, ISourceProvider source, UsnJournalService journalService, BackupDatabase database, IBackendManager backendManager, BackupStatsCollector stats, Options options, IFilter filter, BackupResults result, ITaskReader taskreader, Int64 filesetid, Int64 lastfilesetid)

at Duplicati.Library.Main.Operation.BackupHandler.RunAsync(String[] sources, IBackendManager backendManager, IFilter filter)

at Duplicati.Library.Main.Controller.<>c__DisplayClass22_0.<<Backup>b__0>d.MoveNext()

--- End of stack trace from previous location ---

at Duplicati.Library.Utility.Utility.Await(Task task)

at Duplicati.Library.Main.Controller.RunAction[T](T result, String[]& paths, IFilter& filter, Func`3 method)