For years, the upload speed shown on the right-hand side of the status bar (as “at `<speed>`”) was incorrect, which led to user questions and awkward attempts at answers. Has any of the Canary backend work improved the situation?

If not, maybe this next point is a feature rather than a bug, but the speed seems to appear only rarely. A while ago it seemed to show up when an upload finished and a speed became available, but recently I see uploads happen with no upload speed shown. I just set up a test using a local folder destination with a 1 MB/s upload throttle and no parallel uploads, and watched in Explorer as files appeared but no speed did. I also had a clock up to note any time the speed showed up. It appeared for at least 4 seconds, starting at 19:12:00 UTC. Wireshark shows:

```
HTTP/1.1 200 OK
Content-Type: application/json; charset=utf-8
Date: Mon, 14 Jul 2025 19:11:56 GMT
Server: Kestrel
Transfer-Encoding: chunked

0.000000s

{"BackupID":"5","TaskID":59,"BackendAction":"Put","BackendPath":"duplicati-b4d33062e8cc447dcb3270cdffd578f07.dblock.zip","BackendFileSize":1048981,"BackendFileProgress":0,"BackendSpeed":-1,"BackendIsBlocking":false,"CurrentFilename":"C:\\Users\\Me\\Downloads\\Binary\\duplicati-2.1.0.124_canary_2025-07-11-win-x64-gui.zip","CurrentFilesize":83392132,"CurrentFileoffset":56623104,"CurrentFilecomplete":false,"Phase":"Backup_ProcessingFiles","OverallProgress":0,"ProcessedFileCount":0,"ProcessedFileSize":0,"TotalFileCount":1,"TotalFileSize":83392132,"StillCounting":false,"ActiveTransfers":[{"BackendAction":"Put","BackendPath":"duplicati-b4d33062e8cc447dcb3270cdffd578f07.dblock.zip","BackendFileSize":1048981,"BackendFileProgress":0,"BackendSpeed":-1,"BackendIsBlocking":false}]}

HTTP/1.1 200 OK
Content-Type: application/json; charset=utf-8
Date: Mon, 14 Jul 2025 19:11:58 GMT
Server: Kestrel
Transfer-Encoding: chunked

0.000000s

{"BackupID":"5","TaskID":59,"BackendAction":"Put","BackendPath":"duplicati-b730541a2211b48adaf7b4325f84707c0.dblock.zip","BackendFileSize":1048981,"BackendFileProgress":983040,"BackendSpeed":720896,"BackendIsBlocking":false,"CurrentFilename":"C:\\Users\\Me\\Downloads\\Binary\\duplicati-2.1.0.124_canary_2025-07-11-win-x64-gui.zip","CurrentFilesize":83392132,"CurrentFileoffset":61865984,"CurrentFilecomplete":false,"Phase":"Backup_ProcessingFiles","OverallProgress":0,"ProcessedFileCount":0,"ProcessedFileSize":0,"TotalFileCount":1,"TotalFileSize":83392132,"StillCounting":false,"ActiveTransfers":[{"BackendAction":"Put","BackendPath":"duplicati-b730541a2211b48adaf7b4325f84707c0.dblock.zip","BackendFileSize":1048981,"BackendFileProgress":983040,"BackendSpeed":720896,"BackendIsBlocking":false}]}

HTTP/1.1 200 OK
Content-Type: application/json; charset=utf-8
Date: Mon, 14 Jul 2025 19:12:00 GMT
Server: Kestrel
Transfer-Encoding: chunked

0.000000s

{"BackupID":"5","TaskID":59,"BackendAction":"Put","BackendPath":"duplicati-bb8226d99e1e14dfdb7f04412ac7a07a6.dblock.zip","BackendFileSize":1048981,"BackendFileProgress":851968,"BackendSpeed":720896,"BackendIsBlocking":false,"CurrentFilename":"C:\\Users\\Me\\Downloads\\Binary\\duplicati-2.1.0.124_canary_2025-07-11-win-x64-gui.zip","CurrentFilesize":83392132,"CurrentFileoffset":61865984,"CurrentFilecomplete":false,"Phase":"Backup_ProcessingFiles","OverallProgress":0,"ProcessedFileCount":0,"ProcessedFileSize":0,"TotalFileCount":1,"TotalFileSize":83392132,"StillCounting":false,"ActiveTransfers":[{"BackendAction":"Put","BackendPath":"duplicati-bb8226d99e1e14dfdb7f04412ac7a07a6.dblock.zip","BackendFileSize":1048981,"BackendFileProgress":851968,"BackendSpeed":720896,"BackendIsBlocking":false}]}

HTTP/1.1 200 OK
Content-Type: application/json; charset=utf-8
Date: Mon, 14 Jul 2025 19:12:02 GMT
Server: Kestrel
Transfer-Encoding: chunked

0.000000s

{"BackupID":"5","TaskID":59,"BackendAction":"Get","BackendPath":null,"BackendFileSize":0,"BackendFileProgress":0,"BackendSpeed":-1,"BackendIsBlocking":false,"CurrentFilename":"C:\\Users\\Me\\Downloads\\Binary\\duplicati-2.1.0.124_canary_2025-07-11-win-x64-gui.zip","CurrentFilesize":83392132,"CurrentFileoffset":61865984,"CurrentFilecomplete":false,"Phase":"Backup_ProcessingFiles","OverallProgress":0,"ProcessedFileCount":0,"ProcessedFileSize":0,"TotalFileCount":1,"TotalFileSize":83392132,"StillCounting":false,"ActiveTransfers":[]}

2.019305s
```

The problem (if it’s really a problem…) can be seen in both the old ngax GUI and the new ngclient GUI.

I think I also see this on my Backblaze B2 backup, which is naturally speed-limited.
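For discussion, here is a minimal Python sketch of how a client could turn one of the progress responses above into the “at `<speed>`” suffix. It assumes, based only on the capture, that a `BackendSpeed` of -1 means “no speed measured yet” and should show nothing. Summing speeds across `ActiveTransfers` (for parallel uploads) and the 1024-based units are also assumptions; `format_size`, `status_speed_text`, and `speed_from_json` are hypothetical helpers, not Duplicati's GUI code. Under these assumptions only the 19:11:58 and 19:12:00 responses would produce a visible speed.

```python
import json


def format_size(num_bytes: float) -> str:
    """Format a byte count with 1024-based units, e.g. 720896 -> '704.00 KB'."""
    units = ["bytes", "KB", "MB", "GB", "TB"]
    value = float(num_bytes)
    for unit in units[:-1]:
        if value < 1024:
            return f"{value:.2f} {unit}"
        value /= 1024
    return f"{value:.2f} {units[-1]}"


def status_speed_text(progress: dict) -> str:
    """Return ' at <speed>/s', or '' when no speed is available.

    Assumption: BackendSpeed == -1 (as seen in the capture) means
    "not measured yet", so nothing is displayed for it.
    """
    # Assumption: with parallel uploads, per-transfer speeds are summed.
    speeds = [t.get("BackendSpeed", -1) for t in progress.get("ActiveTransfers", [])]
    if not speeds:
        # No active transfers listed: fall back to the top-level field.
        speeds = [progress.get("BackendSpeed", -1)]
    known = [s for s in speeds if s is not None and s >= 0]
    if not known:
        return ""
    return f" at {format_size(sum(known))}/s"


def speed_from_json(body: str) -> str:
    """Parse a progress response body and build the status-bar suffix."""
    return status_speed_text(json.loads(body))


if __name__ == "__main__":
    # Trimmed versions of the three distinct states in the capture.
    samples = [
        '{"BackendSpeed":-1,"ActiveTransfers":[{"BackendSpeed":-1}]}',
        '{"BackendSpeed":720896,"ActiveTransfers":[{"BackendSpeed":720896}]}',
        '{"BackendSpeed":-1,"ActiveTransfers":[]}',
    ]
    for body in samples:
        print(repr(speed_from_json(body)))  # '', ' at 704.00 KB/s', ''
```

With only a 2-second poll interval and a speed that is reported only mid-transfer, a rule like this would leave the status bar blank most of the time, which matches what I'm seeing.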

In better news, with the accumulated fixes I finally have a backup with the proper number of dindex files for its dblock files, and the content stays clean even after a large compact. This means recreate no longer has to download a few dblock files (7 for a while, then 3) because of bad dindex files. A full-remote-verification run is also clean now. These fixes arrived at different times, so I’m just saying the area looks good now, provided the user does the manual work involving things like test and repair. How can we let people know what they must do to get the best results from recreate and from direct restore from files?

Storage size didn’t drop as much as I hoped, possibly because custom retention seems to leave partial backups alone. There may have been a reason for that, but I’d have to check.

EDIT 1:

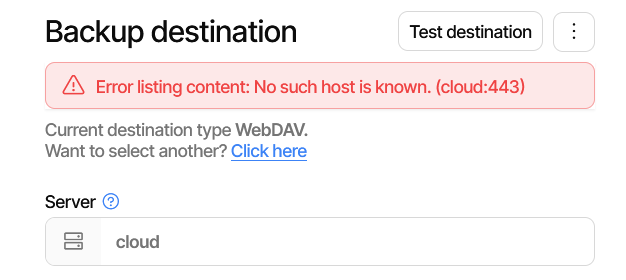

Which is correct? If I enter a space in ngclient, I still see %20 in ngclient’s Edit target URL, in the database, and in ngax. The current test uses SSH to avoid some issues with the file destination.

EDIT 2:

URL gets URL-encoded during Submit and Import #324

has some history, including how this used to be done and concerns about changing it.
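To make the %20 question concrete, here is a small Python sketch of generic percent-encoding (RFC 3986 style) using the standard library. It is not Duplicati code and does not settle which form Duplicati should store; the SSH host and path are hypothetical. It shows that a space becomes %20 when encoded once, and that encoding an already-encoded path escapes the `%` itself (%2520), which is the kind of double-encoding risk raised when a URL is encoded again during Submit or Import.

```python
from urllib.parse import quote, unquote, urlsplit


def encode_path(path: str) -> str:
    """Percent-encode a path; '/' stays literal (quote's default safe='/')."""
    return quote(path)


def decode_path(path: str) -> str:
    """Reverse percent-encoding, e.g. '%20' -> ' '."""
    return unquote(path)


if __name__ == "__main__":
    # Hypothetical SSH target with a space in the remote folder name.
    path = "/backups/my folder"
    once = encode_path(path)
    url = "ssh://example.com" + once
    print(url)                              # ssh://example.com/backups/my%20folder
    print(decode_path(urlsplit(url).path))  # /backups/my folder
    # Encoding an already-encoded path escapes the '%' itself.
    print(encode_path(once))                # /backups/my%2520folder
```

If the stored URL is always the encoded form, then seeing %20 in the Edit target URL and the database would be expected; the open question is whether the GUI should display the decoded form instead.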