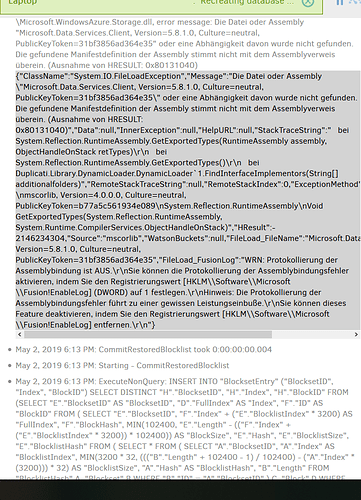

My concern is that the code looks like it already uses one transaction per dblock (but looking below it using Process Monitor at its -journal file shows what looks like a per-block-not-dblock close – anyone know IRP?) From log at profiling level plus --profile-all-database-queries of recreate of my Linux ISO+length 1+length 0:

2019-04-25 11:13:30 -04 - [Profiling-Timer.Begin-Duplicati.Library.Main.Operation.RecreateDatabaseHandler-CommitUpdateFilesetFromRemote]: Starting - CommitUpdateFilesetFromRemote

2019-04-25 11:13:31 -04 - [Profiling-Timer.Finished-Duplicati.Library.Main.Operation.RecreateDatabaseHandler-CommitUpdateFilesetFromRemote]: CommitUpdateFilesetFromRemote took 0:00:00:00.723

2019-04-25 11:13:51 -04 - [Profiling-Timer.Begin-Duplicati.Library.Main.Operation.RecreateDatabaseHandler-CommitRecreateDb]: Starting - CommitRecreatedDb

2019-04-25 11:13:53 -04 - [Profiling-Timer.Finished-Duplicati.Library.Main.Operation.RecreateDatabaseHandler-CommitRecreateDb]: CommitRecreatedDb took 0:00:00:01.993

2019-04-25 11:13:56 -04 - [Verbose-Duplicati.Library.Main.Operation.RecreateDatabaseHandler-ProcessingAllBlocklistVolumes]: Processing all of the 35 volumes for blocklists: duplicati-b167500d2e2a941ca8c075859c0bc22f9.dblock.zip, duplicati-b16d5524a88c24fde99642f42b9faf1ad.dblock.zip, duplicati-b195763b0f55645f088585158de6d2107.dblock.zip, duplicati-b1a7ef013b20d46648d129c3c62a4494d.dblock.zip, duplicati-b1dc943b66de348009a0a2e3f5cc480bb.dblock.zip, duplicati-b1de83c2777f54ba787af25eae7179aff.dblock.zip, duplicati-b1ff26aa09502408cb3e326fe035d7f06.dblock.zip, duplicati-b2c49bc3e6b4440d5a97033ed72ada825.dblock.zip, duplicati-b2d06fe0458aa48c29ab01456fee8c085.dblock.zip, duplicati-b358f80645bba4878b0573d581f98c77e.dblock.zip, duplicati-b3cd136cb6f734c5ab4026e6ac90228a8.dblock.zip, duplicati-b3d3a14d375fe4b1580d333ae31254ea0.dblock.zip, duplicati-b3e6f89ee06a1435aa413d83ab03acdf8.dblock.zip, duplicati-b40aac901e79848898f8aa3360c2a53d9.dblock.zip, duplicati-b41721e38341e4d8fa119628cd156f072.dblock.zip, duplicati-b50333b60289b4748b6aefadde5a5b314.dblock.zip, duplicati-b5310f989cf934c228078fb3f1ac2cdda.dblock.zip, duplicati-b58caaa19663c4367b94d139d2878461d.dblock.zip, duplicati-b5b7a8303c98849928a7aeadeb511a735.dblock.zip, duplicati-b5dd98dddb9284efdba791913ac58483b.dblock.zip, duplicati-b5e0ed3bf021941618d44c34318713cc0.dblock.zip, duplicati-b614685f276a1459bb7a4ba8b5a9ecfbb.dblock.zip, duplicati-b623dd3d548594d91baeca344204f4423.dblock.zip, duplicati-b63c02ee435ab41629a68a82cc01d283c.dblock.zip, duplicati-b7dd0772ccf124ef4a136aaac7e33392f.dblock.zip, duplicati-b8aab30fb66ee4bf59d25ac36e6e71819.dblock.zip, duplicati-ba78c1e302368477697a585a76069a31a.dblock.zip, duplicati-bad20c40f66ba4e299bd8d0ad1d91aa5d.dblock.zip, duplicati-bb6c7b6bf121341c6ab5bfcbbe5f84ba7.dblock.zip, duplicati-bc1c3bfd0c7e0473e92551e68008d9cc8.dblock.zip, duplicati-bd2a2f42f628c439d99251f1423a1d696.dblock.zip, duplicati-bdca2732bc30a42a987d1aee472666426.dblock.zip, duplicati-be2badc43a0a24ff3a2749c0581016416.dblock.zip, duplicati-bf0f4c68b2e0940d9b6df908dd64c8e4a.dblock.zip, duplicati-bfdaf5015c45946b0bddcb08972312ba2.dblock.zip

2019-04-25 11:13:56 -04 - [Verbose-Duplicati.Library.Main.Operation.RecreateDatabaseHandler-ProcessingAllBlocklistVolumes]: Processing all of the 35 volumes for blocklists

2019-04-25 11:14:51 -04 - [Profiling-Timer.Begin-Duplicati.Library.Main.Operation.RecreateDatabaseHandler-CommitRestoredBlocklist]: Starting - CommitRestoredBlocklist

2019-04-25 11:14:51 -04 - [Profiling-Timer.Finished-Duplicati.Library.Main.Operation.RecreateDatabaseHandler-CommitRestoredBlocklist]: CommitRestoredBlocklist took 0:00:00:00.475

2019-04-25 11:15:30 -04 - [Profiling-Timer.Begin-Duplicati.Library.Main.Operation.RecreateDatabaseHandler-CommitRestoredBlocklist]: Starting - CommitRestoredBlocklist

2019-04-25 11:15:31 -04 - [Profiling-Timer.Finished-Duplicati.Library.Main.Operation.RecreateDatabaseHandler-CommitRestoredBlocklist]: CommitRestoredBlocklist took 0:00:00:00.919

2019-04-25 11:15:32 -04 - [Profiling-Timer.Begin-Duplicati.Library.Main.Operation.RecreateDatabaseHandler-CommitRestoredBlocklist]: Starting - CommitRestoredBlocklist

2019-04-25 11:15:33 -04 - [Profiling-Timer.Finished-Duplicati.Library.Main.Operation.RecreateDatabaseHandler-CommitRestoredBlocklist]: CommitRestoredBlocklist took 0:00:00:00.634

2019-04-25 11:16:11 -04 - [Profiling-Timer.Begin-Duplicati.Library.Main.Operation.RecreateDatabaseHandler-CommitRestoredBlocklist]: Starting - CommitRestoredBlocklist

2019-04-25 11:16:11 -04 - [Profiling-Timer.Finished-Duplicati.Library.Main.Operation.RecreateDatabaseHandler-CommitRestoredBlocklist]: CommitRestoredBlocklist took 0:00:00:00.467

2019-04-25 11:16:30 -04 - [Profiling-Timer.Begin-Duplicati.Library.Main.Operation.RecreateDatabaseHandler-CommitRestoredBlocklist]: Starting - CommitRestoredBlocklist

2019-04-25 11:16:30 -04 - [Profiling-Timer.Finished-Duplicati.Library.Main.Operation.RecreateDatabaseHandler-CommitRestoredBlocklist]: CommitRestoredBlocklist took 0:00:00:00.370

2019-04-25 11:16:50 -04 - [Profiling-Timer.Begin-Duplicati.Library.Main.Operation.RecreateDatabaseHandler-CommitRestoredBlocklist]: Starting - CommitRestoredBlocklist

2019-04-25 11:16:50 -04 - [Profiling-Timer.Finished-Duplicati.Library.Main.Operation.RecreateDatabaseHandler-CommitRestoredBlocklist]: CommitRestoredBlocklist took 0:00:00:00.302

2019-04-25 11:17:07 -04 - [Profiling-Timer.Begin-Duplicati.Library.Main.Operation.RecreateDatabaseHandler-CommitRestoredBlocklist]: Starting - CommitRestoredBlocklist

2019-04-25 11:17:08 -04 - [Profiling-Timer.Finished-Duplicati.Library.Main.Operation.RecreateDatabaseHandler-CommitRestoredBlocklist]: CommitRestoredBlocklist took 0:00:00:00.942

2019-04-25 11:17:22 -04 - [Profiling-Timer.Begin-Duplicati.Library.Main.Operation.RecreateDatabaseHandler-CommitRestoredBlocklist]: Starting - CommitRestoredBlocklist

2019-04-25 11:17:22 -04 - [Profiling-Timer.Finished-Duplicati.Library.Main.Operation.RecreateDatabaseHandler-CommitRestoredBlocklist]: CommitRestoredBlocklist took 0:00:00:00.843

2019-04-25 11:17:40 -04 - [Profiling-Timer.Begin-Duplicati.Library.Main.Operation.RecreateDatabaseHandler-CommitRestoredBlocklist]: Starting - CommitRestoredBlocklist

2019-04-25 11:17:40 -04 - [Profiling-Timer.Finished-Duplicati.Library.Main.Operation.RecreateDatabaseHandler-CommitRestoredBlocklist]: CommitRestoredBlocklist took 0:00:00:00.322

2019-04-25 11:17:59 -04 - [Profiling-Timer.Begin-Duplicati.Library.Main.Operation.RecreateDatabaseHandler-CommitRestoredBlocklist]: Starting - CommitRestoredBlocklist

2019-04-25 11:17:59 -04 - [Profiling-Timer.Finished-Duplicati.Library.Main.Operation.RecreateDatabaseHandler-CommitRestoredBlocklist]: CommitRestoredBlocklist took 0:00:00:00.334

2019-04-25 11:18:15 -04 - [Profiling-Timer.Begin-Duplicati.Library.Main.Operation.RecreateDatabaseHandler-CommitRestoredBlocklist]: Starting - CommitRestoredBlocklist

2019-04-25 11:18:16 -04 - [Profiling-Timer.Finished-Duplicati.Library.Main.Operation.RecreateDatabaseHandler-CommitRestoredBlocklist]: CommitRestoredBlocklist took 0:00:00:00.708

2019-04-25 11:18:16 -04 - [Profiling-Timer.Begin-Duplicati.Library.Main.Operation.RecreateDatabaseHandler-CommitRestoredBlocklist]: Starting - CommitRestoredBlocklist

2019-04-25 11:18:16 -04 - [Profiling-Timer.Finished-Duplicati.Library.Main.Operation.RecreateDatabaseHandler-CommitRestoredBlocklist]: CommitRestoredBlocklist took 0:00:00:00.297

2019-04-25 11:18:29 -04 - [Profiling-Timer.Begin-Duplicati.Library.Main.Operation.RecreateDatabaseHandler-CommitRestoredBlocklist]: Starting - CommitRestoredBlocklist

2019-04-25 11:18:30 -04 - [Profiling-Timer.Finished-Duplicati.Library.Main.Operation.RecreateDatabaseHandler-CommitRestoredBlocklist]: CommitRestoredBlocklist took 0:00:00:00.353

2019-04-25 11:18:35 -04 - [Profiling-Timer.Begin-Duplicati.Library.Main.Operation.RecreateDatabaseHandler-CommitRestoredBlocklist]: Starting - CommitRestoredBlocklist

2019-04-25 11:18:35 -04 - [Profiling-Timer.Finished-Duplicati.Library.Main.Operation.RecreateDatabaseHandler-CommitRestoredBlocklist]: CommitRestoredBlocklist took 0:00:00:00.397

2019-04-25 11:18:36 -04 - [Profiling-Timer.Begin-Duplicati.Library.Main.Operation.RecreateDatabaseHandler-CommitRestoredBlocklist]: Starting - CommitRestoredBlocklist

2019-04-25 11:18:36 -04 - [Profiling-Timer.Finished-Duplicati.Library.Main.Operation.RecreateDatabaseHandler-CommitRestoredBlocklist]: CommitRestoredBlocklist took 0:00:00:00.249

2019-04-25 11:18:41 -04 - [Profiling-Timer.Begin-Duplicati.Library.Main.Operation.RecreateDatabaseHandler-CommitRestoredBlocklist]: Starting - CommitRestoredBlocklist

2019-04-25 11:18:42 -04 - [Profiling-Timer.Finished-Duplicati.Library.Main.Operation.RecreateDatabaseHandler-CommitRestoredBlocklist]: CommitRestoredBlocklist took 0:00:00:00.576

2019-04-25 11:18:43 -04 - [Profiling-Timer.Begin-Duplicati.Library.Main.Operation.RecreateDatabaseHandler-CommitRestoredBlocklist]: Starting - CommitRestoredBlocklist

2019-04-25 11:18:44 -04 - [Profiling-Timer.Finished-Duplicati.Library.Main.Operation.RecreateDatabaseHandler-CommitRestoredBlocklist]: CommitRestoredBlocklist took 0:00:00:00.429

2019-04-25 11:18:46 -04 - [Profiling-Timer.Begin-Duplicati.Library.Main.Operation.RecreateDatabaseHandler-CommitRestoredBlocklist]: Starting - CommitRestoredBlocklist

2019-04-25 11:18:47 -04 - [Profiling-Timer.Finished-Duplicati.Library.Main.Operation.RecreateDatabaseHandler-CommitRestoredBlocklist]: CommitRestoredBlocklist took 0:00:00:00.162

2019-04-25 11:18:48 -04 - [Profiling-Timer.Begin-Duplicati.Library.Main.Operation.RecreateDatabaseHandler-CommitRestoredBlocklist]: Starting - CommitRestoredBlocklist

2019-04-25 11:18:49 -04 - [Profiling-Timer.Finished-Duplicati.Library.Main.Operation.RecreateDatabaseHandler-CommitRestoredBlocklist]: CommitRestoredBlocklist took 0:00:00:00.351

2019-04-25 11:18:50 -04 - [Profiling-Timer.Begin-Duplicati.Library.Main.Operation.RecreateDatabaseHandler-CommitRestoredBlocklist]: Starting - CommitRestoredBlocklist

2019-04-25 11:18:51 -04 - [Profiling-Timer.Finished-Duplicati.Library.Main.Operation.RecreateDatabaseHandler-CommitRestoredBlocklist]: CommitRestoredBlocklist took 0:00:00:00.327

2019-04-25 11:18:54 -04 - [Profiling-Timer.Begin-Duplicati.Library.Main.Operation.RecreateDatabaseHandler-CommitRestoredBlocklist]: Starting - CommitRestoredBlocklist

2019-04-25 11:18:54 -04 - [Profiling-Timer.Finished-Duplicati.Library.Main.Operation.RecreateDatabaseHandler-CommitRestoredBlocklist]: CommitRestoredBlocklist took 0:00:00:00.227

2019-04-25 11:18:57 -04 - [Profiling-Timer.Begin-Duplicati.Library.Main.Operation.RecreateDatabaseHandler-CommitRestoredBlocklist]: Starting - CommitRestoredBlocklist

2019-04-25 11:18:57 -04 - [Profiling-Timer.Finished-Duplicati.Library.Main.Operation.RecreateDatabaseHandler-CommitRestoredBlocklist]: CommitRestoredBlocklist took 0:00:00:00.435

2019-04-25 11:19:00 -04 - [Profiling-Timer.Begin-Duplicati.Library.Main.Operation.RecreateDatabaseHandler-CommitRestoredBlocklist]: Starting - CommitRestoredBlocklist

2019-04-25 11:19:00 -04 - [Profiling-Timer.Finished-Duplicati.Library.Main.Operation.RecreateDatabaseHandler-CommitRestoredBlocklist]: CommitRestoredBlocklist took 0:00:00:00.272

2019-04-25 11:19:02 -04 - [Profiling-Timer.Begin-Duplicati.Library.Main.Operation.RecreateDatabaseHandler-CommitRestoredBlocklist]: Starting - CommitRestoredBlocklist

2019-04-25 11:19:02 -04 - [Profiling-Timer.Finished-Duplicati.Library.Main.Operation.RecreateDatabaseHandler-CommitRestoredBlocklist]: CommitRestoredBlocklist took 0:00:00:00.411

2019-04-25 11:19:06 -04 - [Profiling-Timer.Begin-Duplicati.Library.Main.Operation.RecreateDatabaseHandler-CommitRestoredBlocklist]: Starting - CommitRestoredBlocklist

2019-04-25 11:19:06 -04 - [Profiling-Timer.Finished-Duplicati.Library.Main.Operation.RecreateDatabaseHandler-CommitRestoredBlocklist]: CommitRestoredBlocklist took 0:00:00:00.195

2019-04-25 11:19:07 -04 - [Profiling-Timer.Begin-Duplicati.Library.Main.Operation.RecreateDatabaseHandler-CommitRestoredBlocklist]: Starting - CommitRestoredBlocklist

2019-04-25 11:19:08 -04 - [Profiling-Timer.Finished-Duplicati.Library.Main.Operation.RecreateDatabaseHandler-CommitRestoredBlocklist]: CommitRestoredBlocklist took 0:00:00:00.249

2019-04-25 11:19:10 -04 - [Profiling-Timer.Begin-Duplicati.Library.Main.Operation.RecreateDatabaseHandler-CommitRestoredBlocklist]: Starting - CommitRestoredBlocklist

2019-04-25 11:19:11 -04 - [Profiling-Timer.Finished-Duplicati.Library.Main.Operation.RecreateDatabaseHandler-CommitRestoredBlocklist]: CommitRestoredBlocklist took 0:00:00:00.369

2019-04-25 11:19:14 -04 - [Profiling-Timer.Begin-Duplicati.Library.Main.Operation.RecreateDatabaseHandler-CommitRestoredBlocklist]: Starting - CommitRestoredBlocklist

2019-04-25 11:19:14 -04 - [Profiling-Timer.Finished-Duplicati.Library.Main.Operation.RecreateDatabaseHandler-CommitRestoredBlocklist]: CommitRestoredBlocklist took 0:00:00:00.256

2019-04-25 11:19:22 -04 - [Profiling-Timer.Begin-Duplicati.Library.Main.Operation.RecreateDatabaseHandler-CommitRestoredBlocklist]: Starting - CommitRestoredBlocklist

2019-04-25 11:19:22 -04 - [Profiling-Timer.Finished-Duplicati.Library.Main.Operation.RecreateDatabaseHandler-CommitRestoredBlocklist]: CommitRestoredBlocklist took 0:00:00:00.483

2019-04-25 11:19:25 -04 - [Profiling-Timer.Begin-Duplicati.Library.Main.Operation.RecreateDatabaseHandler-CommitRestoredBlocklist]: Starting - CommitRestoredBlocklist

2019-04-25 11:19:25 -04 - [Profiling-Timer.Finished-Duplicati.Library.Main.Operation.RecreateDatabaseHandler-CommitRestoredBlocklist]: CommitRestoredBlocklist took 0:00:00:00.160

2019-04-25 11:19:28 -04 - [Profiling-Timer.Begin-Duplicati.Library.Main.Operation.RecreateDatabaseHandler-CommitRestoredBlocklist]: Starting - CommitRestoredBlocklist

2019-04-25 11:19:29 -04 - [Profiling-Timer.Finished-Duplicati.Library.Main.Operation.RecreateDatabaseHandler-CommitRestoredBlocklist]: CommitRestoredBlocklist took 0:00:00:00.424

2019-04-25 11:19:30 -04 - [Profiling-Timer.Begin-Duplicati.Library.Main.Operation.RecreateDatabaseHandler-CommitRestoredBlocklist]: Starting - CommitRestoredBlocklist

2019-04-25 11:19:31 -04 - [Profiling-Timer.Finished-Duplicati.Library.Main.Operation.RecreateDatabaseHandler-CommitRestoredBlocklist]: CommitRestoredBlocklist took 0:00:00:00.336

2019-04-25 11:19:33 -04 - [Profiling-Timer.Begin-Duplicati.Library.Main.Operation.RecreateDatabaseHandler-CommitRestoredBlocklist]: Starting - CommitRestoredBlocklist

2019-04-25 11:19:33 -04 - [Profiling-Timer.Finished-Duplicati.Library.Main.Operation.RecreateDatabaseHandler-CommitRestoredBlocklist]: CommitRestoredBlocklist took 0:00:00:00.367

2019-04-25 11:19:35 -04 - [Profiling-Timer.Begin-Duplicati.Library.Main.Operation.RecreateDatabaseHandler-CommitRestoredBlocklist]: Starting - CommitRestoredBlocklist

2019-04-25 11:19:36 -04 - [Profiling-Timer.Finished-Duplicati.Library.Main.Operation.RecreateDatabaseHandler-CommitRestoredBlocklist]: CommitRestoredBlocklist took 0:00:00:00.265

2019-04-25 11:19:38 -04 - [Profiling-Timer.Begin-Duplicati.Library.Main.Operation.RecreateDatabaseHandler-CommitRestoredBlocklist]: Starting - CommitRestoredBlocklist

2019-04-25 11:19:38 -04 - [Profiling-Timer.Finished-Duplicati.Library.Main.Operation.RecreateDatabaseHandler-CommitRestoredBlocklist]: CommitRestoredBlocklist took 0:00:00:00.267

Above backup was made for my tests in Empty source file can make Recreate download all dblock files fruitlessly with huge delay #3747 where I attempt to identify the current and historical reasons behind the all-dblock processing. What should ideally happen on Recreate is it should be over by the line which (for my little backup) said CommitRecreatedDb took 0:00:00:01.993. The 35 dblocks should never have processed. In that GitHuib issue, I did make a case for efficient processing where dblocks really require processing, but I made a stronger case for that being for exceptional situations rather than almost-usual.

If anybody would like to look at the actual code (ability to read the rather complex SQL present may help):

Here to here looks like dlist processing (all in one transaction)

Here to here looks like dindex processing (all in one transaction)

Here to here looks like dblock processing (transaction per dblock, but Process Monitor looks suspicious)