Thank you. From what I can tell:

How does pCloud Drive use the cache storage? describes that.

Where is my cache located? describes what I suppose you did to move the cache onto the external drive.

What’s not described (not surprisingly) is what can happen on disconnection. Even with an ordinary USB drive used for direct access, filesystem corruption can happen if the disconnect happens while the drive is active.

You no longer need to use Safely Remove Hardware when removing a USB drive on Windows 10

was a claim once made, but as you can see it received some skepticism. Regardless, it appears the filesystem was not totally corrupted; otherwise I guess Duplicati’s missing-file error would not have been possible.

There’s still some question about what else may be wrong, and still a big gap in what’s known about the issues currently seen.

For the moment, let’s ignore corruption details, and try to collect some basic info on attempts and results.

I don’t think I posted one. I did post the output from an unposted command, and output from a test script.

“It didn’t work” is also the earlier problem I’m fighting: lack of specifics. The drive letter shows that it’s Windows:

Could not find file '\\?\R:

Linux and Windows both support GUI through web browser, GUI command line, and OS command line.

Using Duplicati from the Command Line is challenging (but some people prefer it) due to the number of options.

Using the Command line tools from within the Graphical User Interface is maybe what you used for the delete.

Options are easier here, because they’re carried in from the GUI job. You might also get a bit more info:

Return code: 100, for example, whereas the GUI would only show a popup (red for an error), and maybe logs.

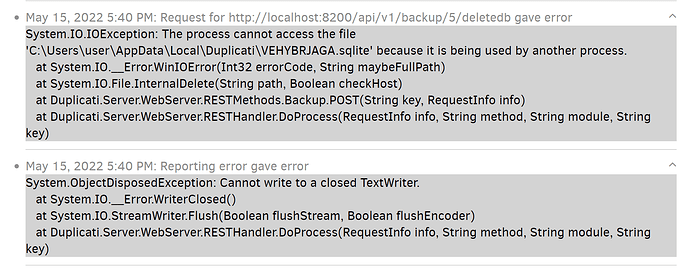

Viewing the Duplicati Server Logs at About → Show log tends to get errors when an operation fully fails.

Viewing the log files of a backup job at <job> → Show log tends to get warnings and some small errors.

Either way, there’s usually something said, which you can retype (ouch), copy-and-paste, or screenshot.

A written description of what you do (step-by-step) and what errors occur is a helpful place to start from.

I think you’re doing what most users do (nothing wrong with that), which is GUI, and GUI Commandline when you have to do something GUI has no button for. The delete is one. Thinking more, the paste you previously posted clinches it. “Running commandline entry” means you’re using command line (in GUI).

Some operations (such as repair) have a GUI button, but can also be done in OS or GUI command line.

Note that a GUI button is easy to push, but getting details beyond the popup is harder. The command line has the errors right there to copy and paste, as you did, but takes more preparation to run. Duplicati prepares a screen to do a backup, then you have to change the Command at the top and maybe remove excess options.

The BACKUP command is where you start in GUI Commandline.

The REPAIR command requires less, e.g. clear out source paths.

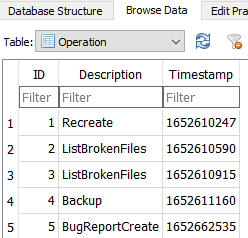

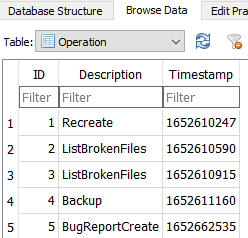

The LIST-BROKEN-FILES command is similar, and I think you ran it (at least the bug report shows runs).

Beyond that, there is no useful info. The list of files is empty. What I wanted was the bug report from before the database deletion. If the old database is still around, you can put it back. I asked for a rename. Did you delete instead? While there’s a GUI button for delete (without saving a copy…), the path to the database is shown too.

If the database is truly gone, that’s more lost debug data, and we can only move forward with new issues.

Even if it’s not gone, it’s good for certain specific things, but does not replace your writeup and pastes.

If the above image from the DB bug report reflects what you did, what happened at each step? Ideally paste it.

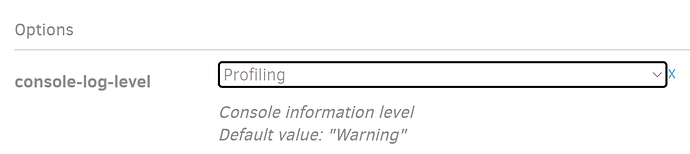

If it’s all gone and you need another run, improving the logging level to at least Information would help.

GUI Commandline can do that with console-log-level. If you prefer, you can also edit job configuration.

log-file=<path> and log-file-log-level=Information are a good start, but Verbose and Profiling give more detail.

This will save you from having to run everything from GUI Commandline, because it will log either way.
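To make the shape of those logging options concrete, here is a rough Python sketch that only builds the option strings as they would be typed on an OS command line (it does not run Duplicati). The helper name, the example path, and the short list of levels are my own assumptions for illustration; Duplicati’s option help has the full list of levels.

```python
# Subset of log levels, least to most detailed (assumed ordering, not the full list).
LEVELS = ["Error", "Warning", "Information", "Verbose", "Profiling"]


def logging_options(log_path, file_level="Information", console_level=None):
    """Build Duplicati-style logging options as a list of strings.

    Hypothetical helper: it only formats text and never calls Duplicati.
    """
    for level in (file_level, console_level):
        if level is not None and level not in LEVELS:
            raise ValueError(f"unknown log level: {level}")
    options = [
        f"--log-file={log_path}",
        f"--log-file-log-level={file_level}",
    ]
    # Console logging only matters for command line runs, so it is optional.
    if console_level is not None:
        options.append(f"--console-log-level={console_level}")
    return options


if __name__ == "__main__":
    # Hypothetical path; choose a folder that is not on the drive being debugged.
    for opt in logging_options(r"C:\tmp\duplicati.log", "Verbose", "Information"):
        print(opt)
```

In the GUI job editor the same names are entered as advanced options, so the log file gets written whether the job runs from the GUI button, the scheduler, or GUI Commandline.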

An option for a fast peek at what’s going on is server log at About → Show log → Live → (pick a level).

This is reverse-chronological, so if you’re lucky there’s enough information at the end (top) to help out.

Sometimes one needs to click on an error to expand it though. So three ways to get some more detail.

Right now, not even an error message has been given, and without any data at all, there’s little I can do…