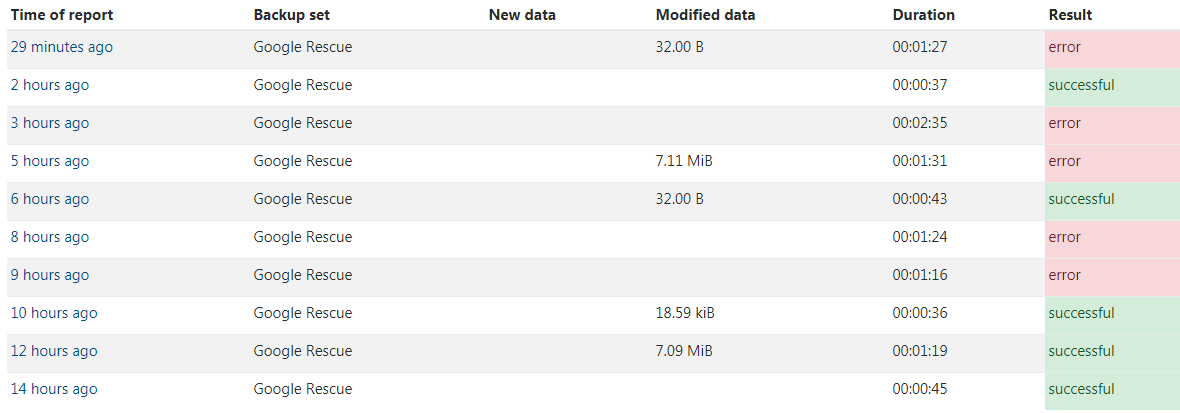

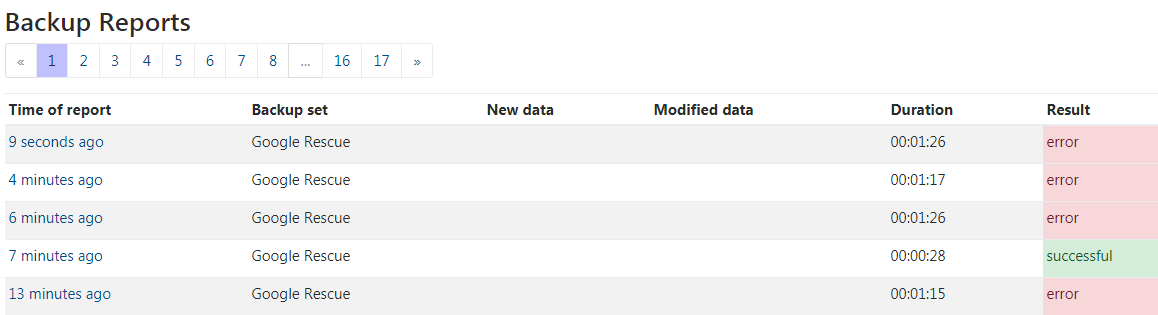

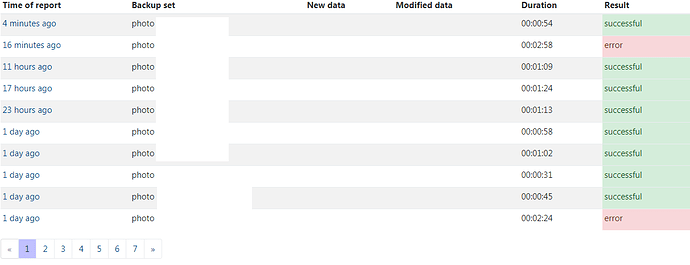

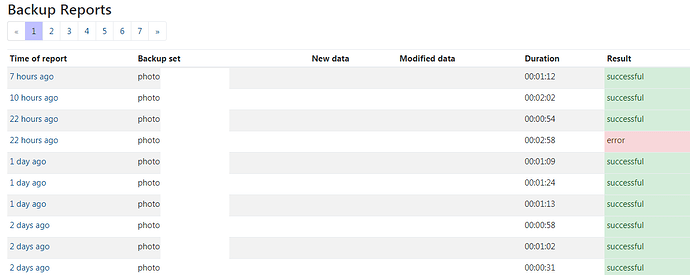

After many successful runs, I’ve got the error again, and this time I have the log file!

I am a little bit worried because the hashes that do not match are not the same ones that were reported a few days ago.

Duplicati log

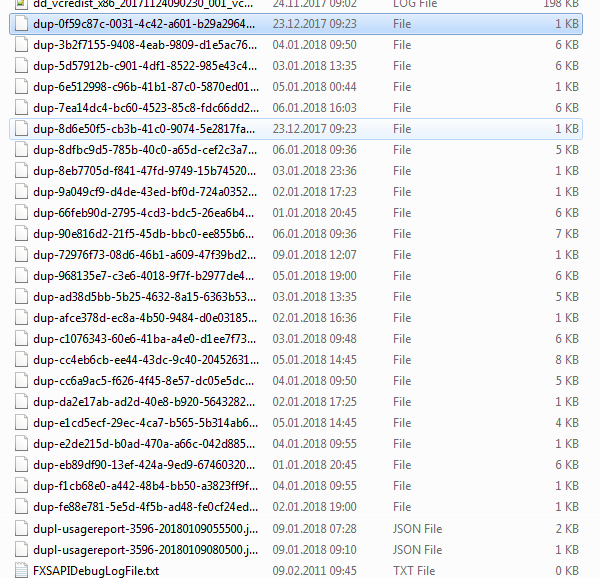

Failed to process file duplicati-b610f7bfd35c24b98883dc14a751c223b.dblock.zip.aes => Hash mismatch on file "C:\Users\server\AppData\Local\Temp\dup-b5f08c2a-44b9-4cce-96cc-4cae553f9e2c", recorded hash: RgzZaMU3n4QmMLZfFeEq/82tYaBCbuqwJghP/a9o5II=, actual hash KYfqN3xHnHDKb/pPGvBfD6ripcYAoXkDaln9AN1uMxA=
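To check a downloaded volume by hand, something like the following could be used. It is only a sketch, and it assumes the recorded hash is a Base64-encoded SHA-256 digest of the file as stored on the backend (the 44-character length fits a 32-byte digest, but I have not confirmed this against the Duplicati source). The function names and the path in the usage note are made up for illustration.

```python
import base64
import hashlib


def sha256_base64(path, chunk_size=1 << 20):
    """Return the Base64-encoded SHA-256 digest of the file at `path`.

    Assumption: this is the same encoding the log uses for
    "recorded hash" and "actual hash".
    """
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        # Read in chunks so large dblock files do not need to fit in memory.
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return base64.b64encode(digest.digest()).decode("ascii")


def matches_recorded(path, recorded_hash):
    """Compare the file's hash with the value recorded in the database."""
    return sha256_base64(path) == recorded_hash
```

For example, `matches_recorded(r"D:\check\duplicati-b610f7bfd35c24b98883dc14a751c223b.dblock.zip.aes", "RgzZaMU3n4QmMLZfFeEq/82tYaBCbuqwJghP/a9o5II=")` would tell whether a fresh copy of the file taken from the backend (the `D:\check` folder is hypothetical) still has the recorded hash. Running it several times on separate downloads of the same file might show whether the mismatch comes from the stored file or from the transfer.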

I took roughly the last 300 lines of the log, starting after the JPG files were processed. It is split into two posts because a single post was too long.

Logfile part 1

2018-01-14 19:33:24Z - Profiling: BackupMainOperation took 00:00:36.178

2018-01-14 19:33:24Z - Profiling: Starting - FinalizeRemoteVolumes

2018-01-14 19:33:24Z - Profiling: FinalizeRemoteVolumes took 00:00:00.000

2018-01-14 19:33:24Z - Profiling: Starting - UpdateChangeStatistics

2018-01-14 19:33:24Z - Profiling: Starting - ExecuteScalarInt64: SELECT "ID" FROM "Fileset" WHERE "Timestamp" < ? AND "ID" != ? ORDER BY "Timestamp" DESC

2018-01-14 19:33:24Z - Profiling: ExecuteScalarInt64: SELECT "ID" FROM "Fileset" WHERE "Timestamp" < ? AND "ID" != ? ORDER BY "Timestamp" DESC took 00:00:00.000

2018-01-14 19:33:24Z - Profiling: Starting - ExecuteScalarInt64: SELECT COUNT(*) FROM "File" INNER JOIN "FilesetEntry" ON "File"."ID" = "FilesetEntry"."FileID" WHERE "FilesetEntry"."FilesetID" = ? AND "File"."BlocksetID" = ? AND NOT "File"."Path" IN (SELECT "Path" FROM "File" INNER JOIN "FilesetEntry" ON "File"."ID" = "FilesetEntry"."FileID" WHERE "FilesetEntry"."FilesetID" = ?)

2018-01-14 19:33:24Z - Profiling: ExecuteScalarInt64: SELECT COUNT(*) FROM "File" INNER JOIN "FilesetEntry" ON "File"."ID" = "FilesetEntry"."FileID" WHERE "FilesetEntry"."FilesetID" = ? AND "File"."BlocksetID" = ? AND NOT "File"."Path" IN (SELECT "Path" FROM "File" INNER JOIN "FilesetEntry" ON "File"."ID" = "FilesetEntry"."FileID" WHERE "FilesetEntry"."FilesetID" = ?) took 00:00:00.017

2018-01-14 19:33:24Z - Profiling: Starting - ExecuteScalarInt64: SELECT COUNT(*) FROM "File" INNER JOIN "FilesetEntry" ON "File"."ID" = "FilesetEntry"."FileID" WHERE "FilesetEntry"."FilesetID" = ? AND "File"."BlocksetID" = ? AND NOT "File"."Path" IN (SELECT "Path" FROM "File" INNER JOIN "FilesetEntry" ON "File"."ID" = "FilesetEntry"."FileID" WHERE "FilesetEntry"."FilesetID" = ?)

2018-01-14 19:33:24Z - Profiling: ExecuteScalarInt64: SELECT COUNT(*) FROM "File" INNER JOIN "FilesetEntry" ON "File"."ID" = "FilesetEntry"."FileID" WHERE "FilesetEntry"."FilesetID" = ? AND "File"."BlocksetID" = ? AND NOT "File"."Path" IN (SELECT "Path" FROM "File" INNER JOIN "FilesetEntry" ON "File"."ID" = "FilesetEntry"."FileID" WHERE "FilesetEntry"."FilesetID" = ?) took 00:00:00.000

2018-01-14 19:33:24Z - Profiling: Starting - ExecuteScalarInt64: SELECT COUNT(*) FROM "File" INNER JOIN "FilesetEntry" ON "File"."ID" = "FilesetEntry"."FileID" WHERE "FilesetEntry"."FilesetID" = ? AND "File"."BlocksetID" = ? AND NOT "File"."Path" IN (SELECT "Path" FROM "File" INNER JOIN "FilesetEntry" ON "File"."ID" = "FilesetEntry"."FileID" WHERE "FilesetEntry"."FilesetID" = ?)

2018-01-14 19:33:24Z - Profiling: ExecuteScalarInt64: SELECT COUNT(*) FROM "File" INNER JOIN "FilesetEntry" ON "File"."ID" = "FilesetEntry"."FileID" WHERE "FilesetEntry"."FilesetID" = ? AND "File"."BlocksetID" = ? AND NOT "File"."Path" IN (SELECT "Path" FROM "File" INNER JOIN "FilesetEntry" ON "File"."ID" = "FilesetEntry"."FileID" WHERE "FilesetEntry"."FilesetID" = ?) took 00:00:00.017

2018-01-14 19:33:24Z - Profiling: Starting - ExecuteScalarInt64: SELECT COUNT(*) FROM "File" INNER JOIN "FilesetEntry" ON "File"."ID" = "FilesetEntry"."FileID" WHERE "FilesetEntry"."FilesetID" = ? AND "File"."BlocksetID" = ? AND NOT "File"."Path" IN (SELECT "Path" FROM "File" INNER JOIN "FilesetEntry" ON "File"."ID" = "FilesetEntry"."FileID" WHERE "FilesetEntry"."FilesetID" = ?)

2018-01-14 19:33:24Z - Profiling: ExecuteScalarInt64: SELECT COUNT(*) FROM "File" INNER JOIN "FilesetEntry" ON "File"."ID" = "FilesetEntry"."FileID" WHERE "FilesetEntry"."FilesetID" = ? AND "File"."BlocksetID" = ? AND NOT "File"."Path" IN (SELECT "Path" FROM "File" INNER JOIN "FilesetEntry" ON "File"."ID" = "FilesetEntry"."FileID" WHERE "FilesetEntry"."FilesetID" = ?) took 00:00:00.000

2018-01-14 19:33:24Z - Profiling: Starting - ExecuteScalarInt64: SELECT COUNT(*) FROM (SELECT "File"."Path", "Blockset"."Fullhash" FROM "File", "FilesetEntry", "Metadataset", "Blockset" WHERE "File"."ID" = "FilesetEntry"."FileID" AND "Metadataset"."ID" = "File"."MetadataID" AND "File"."BlocksetID" = ? AND "Metadataset"."BlocksetID" = "Blockset"."ID" AND "FilesetEntry"."FilesetID" = ? ) A, (SELECT "File"."Path", "Blockset"."Fullhash" FROM "File", "FilesetEntry", "Metadataset", "Blockset" WHERE "File"."ID" = "FilesetEntry"."FileID" AND "Metadataset"."ID" = "File"."MetadataID" AND "File"."BlocksetID" = ? AND "Metadataset"."BlocksetID" = "Blockset"."ID" AND "FilesetEntry"."FilesetID" = ? ) B WHERE "A"."Path" = "B"."Path" AND "A"."Fullhash" != "B"."Fullhash"

2018-01-14 19:33:24Z - Profiling: ExecuteScalarInt64: SELECT COUNT(*) FROM (SELECT "File"."Path", "Blockset"."Fullhash" FROM "File", "FilesetEntry", "Metadataset", "Blockset" WHERE "File"."ID" = "FilesetEntry"."FileID" AND "Metadataset"."ID" = "File"."MetadataID" AND "File"."BlocksetID" = ? AND "Metadataset"."BlocksetID" = "Blockset"."ID" AND "FilesetEntry"."FilesetID" = ? ) A, (SELECT "File"."Path", "Blockset"."Fullhash" FROM "File", "FilesetEntry", "Metadataset", "Blockset" WHERE "File"."ID" = "FilesetEntry"."FileID" AND "Metadataset"."ID" = "File"."MetadataID" AND "File"."BlocksetID" = ? AND "Metadataset"."BlocksetID" = "Blockset"."ID" AND "FilesetEntry"."FilesetID" = ? ) B WHERE "A"."Path" = "B"."Path" AND "A"."Fullhash" != "B"."Fullhash" took 00:00:00.002

2018-01-14 19:33:24Z - Profiling: Starting - ExecuteScalarInt64: SELECT COUNT(*) FROM (SELECT "File"."Path", "Blockset"."Fullhash" FROM "File", "FilesetEntry", "Metadataset", "Blockset" WHERE "File"."ID" = "FilesetEntry"."FileID" AND "Metadataset"."ID" = "File"."MetadataID" AND "File"."BlocksetID" = ? AND "Metadataset"."BlocksetID" = "Blockset"."ID" AND "FilesetEntry"."FilesetID" = ? ) A, (SELECT "File"."Path", "Blockset"."Fullhash" FROM "File", "FilesetEntry", "Metadataset", "Blockset" WHERE "File"."ID" = "FilesetEntry"."FileID" AND "Metadataset"."ID" = "File"."MetadataID" AND "File"."BlocksetID" = ? AND "Metadataset"."BlocksetID" = "Blockset"."ID" AND "FilesetEntry"."FilesetID" = ? ) B WHERE "A"."Path" = "B"."Path" AND "A"."Fullhash" != "B"."Fullhash"

2018-01-14 19:33:24Z - Profiling: ExecuteScalarInt64: SELECT COUNT(*) FROM (SELECT "File"."Path", "Blockset"."Fullhash" FROM "File", "FilesetEntry", "Metadataset", "Blockset" WHERE "File"."ID" = "FilesetEntry"."FileID" AND "Metadataset"."ID" = "File"."MetadataID" AND "File"."BlocksetID" = ? AND "Metadataset"."BlocksetID" = "Blockset"."ID" AND "FilesetEntry"."FilesetID" = ? ) A, (SELECT "File"."Path", "Blockset"."Fullhash" FROM "File", "FilesetEntry", "Metadataset", "Blockset" WHERE "File"."ID" = "FilesetEntry"."FileID" AND "Metadataset"."ID" = "File"."MetadataID" AND "File"."BlocksetID" = ? AND "Metadataset"."BlocksetID" = "Blockset"."ID" AND "FilesetEntry"."FilesetID" = ? ) B WHERE "A"."Path" = "B"."Path" AND "A"."Fullhash" != "B"."Fullhash" took 00:00:00.000

2018-01-14 19:33:24Z - Profiling: Starting - ExecuteNonQuery: CREATE TEMPORARY TABLE "TmpFileList-5E617F77F36AAC4091E2149D1ECE6C6C" AS SELECT "File"."Path", "A"."Fullhash" AS "Filehash", "B"."Fullhash" AS "Metahash" FROM "File", "FilesetEntry", "Blockset" A, "Blockset" B, "Metadataset" WHERE "File"."ID" = "FilesetEntry"."FileID" AND "A"."ID" = "File"."BlocksetID" AND "FilesetEntry"."FilesetID" = ? AND "File"."MetadataID" = "Metadataset"."ID" AND "Metadataset"."BlocksetID" = "B"."ID"

2018-01-14 19:33:24Z - Profiling: ExecuteNonQuery: CREATE TEMPORARY TABLE "TmpFileList-5E617F77F36AAC4091E2149D1ECE6C6C" AS SELECT "File"."Path", "A"."Fullhash" AS "Filehash", "B"."Fullhash" AS "Metahash" FROM "File", "FilesetEntry", "Blockset" A, "Blockset" B, "Metadataset" WHERE "File"."ID" = "FilesetEntry"."FileID" AND "A"."ID" = "File"."BlocksetID" AND "FilesetEntry"."FilesetID" = ? AND "File"."MetadataID" = "Metadataset"."ID" AND "Metadataset"."BlocksetID" = "B"."ID" took 00:00:00.012

2018-01-14 19:33:24Z - Profiling: Starting - ExecuteNonQuery: CREATE TEMPORARY TABLE "TmpFileList-26DF3BEE5AE7C746A3754AC827CEF146" AS SELECT "File"."Path", "A"."Fullhash" AS "Filehash", "B"."Fullhash" AS "Metahash" FROM "File", "FilesetEntry", "Blockset" A, "Blockset" B, "Metadataset" WHERE "File"."ID" = "FilesetEntry"."FileID" AND "A"."ID" = "File"."BlocksetID" AND "FilesetEntry"."FilesetID" = ? AND "File"."MetadataID" = "Metadataset"."ID" AND "Metadataset"."BlocksetID" = "B"."ID"

2018-01-14 19:33:24Z - Profiling: ExecuteNonQuery: CREATE TEMPORARY TABLE "TmpFileList-26DF3BEE5AE7C746A3754AC827CEF146" AS SELECT "File"."Path", "A"."Fullhash" AS "Filehash", "B"."Fullhash" AS "Metahash" FROM "File", "FilesetEntry", "Blockset" A, "Blockset" B, "Metadataset" WHERE "File"."ID" = "FilesetEntry"."FileID" AND "A"."ID" = "File"."BlocksetID" AND "FilesetEntry"."FilesetID" = ? AND "File"."MetadataID" = "Metadataset"."ID" AND "Metadataset"."BlocksetID" = "B"."ID" took 00:00:00.010

2018-01-14 19:33:24Z - Profiling: Starting - ExecuteScalarInt64: SELECT COUNT(*) FROM "File" INNER JOIN "FilesetEntry" ON "File"."ID" = "FilesetEntry"."FileID" WHERE "FilesetEntry"."FilesetID" = ? AND "File"."BlocksetID" != ? AND "File"."BlocksetID" != ? AND NOT "File"."Path" IN (SELECT "Path" FROM "TmpFileList-5E617F77F36AAC4091E2149D1ECE6C6C")

2018-01-14 19:33:24Z - Profiling: ExecuteScalarInt64: SELECT COUNT(*) FROM "File" INNER JOIN "FilesetEntry" ON "File"."ID" = "FilesetEntry"."FileID" WHERE "FilesetEntry"."FilesetID" = ? AND "File"."BlocksetID" != ? AND "File"."BlocksetID" != ? AND NOT "File"."Path" IN (SELECT "Path" FROM "TmpFileList-5E617F77F36AAC4091E2149D1ECE6C6C") took 00:00:00.020

2018-01-14 19:33:24Z - Profiling: Starting - ExecuteScalarInt64: SELECT COUNT(*) FROM "TmpFileList-5E617F77F36AAC4091E2149D1ECE6C6C" WHERE "TmpFileList-5E617F77F36AAC4091E2149D1ECE6C6C"."Path" NOT IN (SELECT "Path" FROM "File" INNER JOIN "FilesetEntry" ON "File"."ID" = "FilesetEntry"."FileID" WHERE "FilesetEntry"."FilesetID" = ?)

2018-01-14 19:33:24Z - Profiling: ExecuteScalarInt64: SELECT COUNT(*) FROM "TmpFileList-5E617F77F36AAC4091E2149D1ECE6C6C" WHERE "TmpFileList-5E617F77F36AAC4091E2149D1ECE6C6C"."Path" NOT IN (SELECT "Path" FROM "File" INNER JOIN "FilesetEntry" ON "File"."ID" = "FilesetEntry"."FileID" WHERE "FilesetEntry"."FilesetID" = ?) took 00:00:00.020

2018-01-14 19:33:24Z - Profiling: Starting - ExecuteScalarInt64: SELECT COUNT(*) FROM "TmpFileList-5E617F77F36AAC4091E2149D1ECE6C6C" A, "TmpFileList-26DF3BEE5AE7C746A3754AC827CEF146" B WHERE "A"."Path" = "B"."Path" AND ("A"."Filehash" != "B"."Filehash" OR "A"."Metahash" != "B"."Metahash")

2018-01-14 19:33:24Z - Profiling: ExecuteScalarInt64: SELECT COUNT(*) FROM "TmpFileList-5E617F77F36AAC4091E2149D1ECE6C6C" A, "TmpFileList-26DF3BEE5AE7C746A3754AC827CEF146" B WHERE "A"."Path" = "B"."Path" AND ("A"."Filehash" != "B"."Filehash" OR "A"."Metahash" != "B"."Metahash") took 00:00:00.027

2018-01-14 19:33:24Z - Profiling: Starting - ExecuteNonQuery: DROP TABLE IF EXISTS "TmpFileList-5E617F77F36AAC4091E2149D1ECE6C6C";

2018-01-14 19:33:24Z - Profiling: ExecuteNonQuery: DROP TABLE IF EXISTS "TmpFileList-5E617F77F36AAC4091E2149D1ECE6C6C"; took 00:00:00.000

2018-01-14 19:33:24Z - Profiling: Starting - ExecuteNonQuery: DROP TABLE IF EXISTS "TmpFileList-26DF3BEE5AE7C746A3754AC827CEF146";

2018-01-14 19:33:24Z - Profiling: ExecuteNonQuery: DROP TABLE IF EXISTS "TmpFileList-26DF3BEE5AE7C746A3754AC827CEF146"; took 00:00:00.002

2018-01-14 19:33:24Z - Profiling: UpdateChangeStatistics took 00:00:00.135

2018-01-14 19:33:24Z - Profiling: Starting - VerifyConsistency

2018-01-14 19:33:24Z - Profiling: Starting - ExecuteReader: SELECT "CalcLen", "Length", "A"."BlocksetID", "File"."Path" FROM (

SELECT

"A"."ID" AS "BlocksetID",

IFNULL("B"."CalcLen", 0) AS "CalcLen",

"A"."Length"

FROM

"Blockset" A

LEFT OUTER JOIN

(

SELECT

"BlocksetEntry"."BlocksetID",

SUM("Block"."Size") AS "CalcLen"

FROM

"BlocksetEntry"

LEFT OUTER JOIN

"Block"

ON

"Block"."ID" = "BlocksetEntry"."BlockID"

GROUP BY "BlocksetEntry"."BlocksetID"

) B

ON

"A"."ID" = "B"."BlocksetID"

) A, "File" WHERE "A"."BlocksetID" = "File"."BlocksetID" AND "A"."CalcLen" != "A"."Length"

2018-01-14 19:33:25Z - Profiling: ExecuteReader: SELECT "CalcLen", "Length", "A"."BlocksetID", "File"."Path" FROM (

SELECT

"A"."ID" AS "BlocksetID",

IFNULL("B"."CalcLen", 0) AS "CalcLen",

"A"."Length"

FROM

"Blockset" A

LEFT OUTER JOIN

(

SELECT

"BlocksetEntry"."BlocksetID",

SUM("Block"."Size") AS "CalcLen"

FROM

"BlocksetEntry"

LEFT OUTER JOIN

"Block"

ON

"Block"."ID" = "BlocksetEntry"."BlockID"

GROUP BY "BlocksetEntry"."BlocksetID"

) B

ON

"A"."ID" = "B"."BlocksetID"

) A, "File" WHERE "A"."BlocksetID" = "File"."BlocksetID" AND "A"."CalcLen" != "A"."Length" took 00:00:00.187

2018-01-14 19:33:25Z - Profiling: Starting - ExecuteScalarInt64: SELECT Count(*) FROM "BlocklistHash"

2018-01-14 19:33:25Z - Profiling: ExecuteScalarInt64: SELECT Count(*) FROM "BlocklistHash" took 00:00:00.000

2018-01-14 19:33:25Z - Profiling: Starting - ExecuteScalarInt64: SELECT Count(*) FROM (SELECT DISTINCT "BlocksetID", "Index" FROM "BlocklistHash")

2018-01-14 19:33:25Z - Profiling: ExecuteScalarInt64: SELECT Count(*) FROM (SELECT DISTINCT "BlocksetID", "Index" FROM "BlocklistHash") took 00:00:00.000

2018-01-14 19:33:25Z - Profiling: Starting - ExecuteScalarInt64: SELECT COUNT(*) FROM (SELECT * FROM (SELECT "N"."BlocksetID", (("N"."BlockCount" + 3200 - 1) / 3200) AS "BlocklistHashCountExpected", CASE WHEN "G"."BlocklistHashCount" IS NULL THEN 0 ELSE "G"."BlocklistHashCount" END AS "BlocklistHashCountActual" FROM (SELECT "BlocksetID", COUNT(*) AS "BlockCount" FROM "BlocksetEntry" GROUP BY "BlocksetID") "N" LEFT OUTER JOIN (SELECT "BlocksetID", COUNT(*) AS "BlocklistHashCount" FROM "BlocklistHash" GROUP BY "BlocksetID") "G" ON "N"."BlocksetID" = "G"."BlocksetID" WHERE "N"."BlockCount" > 1) WHERE "BlocklistHashCountExpected" != "BlocklistHashCountActual")

2018-01-14 19:33:25Z - Profiling: ExecuteScalarInt64: SELECT COUNT(*) FROM (SELECT * FROM (SELECT "N"."BlocksetID", (("N"."BlockCount" + 3200 - 1) / 3200) AS "BlocklistHashCountExpected", CASE WHEN "G"."BlocklistHashCount" IS NULL THEN 0 ELSE "G"."BlocklistHashCount" END AS "BlocklistHashCountActual" FROM (SELECT "BlocksetID", COUNT(*) AS "BlockCount" FROM "BlocksetEntry" GROUP BY "BlocksetID") "N" LEFT OUTER JOIN (SELECT "BlocksetID", COUNT(*) AS "BlocklistHashCount" FROM "BlocklistHash" GROUP BY "BlocksetID") "G" ON "N"."BlocksetID" = "G"."BlocksetID" WHERE "N"."BlockCount" > 1) WHERE "BlocklistHashCountExpected" != "BlocklistHashCountActual") took 00:00:00.045

2018-01-14 19:33:25Z - Profiling: Starting - ExecuteScalarInt64: SELECT COUNT(*) FROM "File" WHERE "BlocksetID" != ? AND "BlocksetID" != ? AND NOT "BlocksetID" IN (SELECT "BlocksetID" FROM "BlocksetEntry")

2018-01-14 19:33:25Z - Profiling: ExecuteScalarInt64: SELECT COUNT(*) FROM "File" WHERE "BlocksetID" != ? AND "BlocksetID" != ? AND NOT "BlocksetID" IN (SELECT "BlocksetID" FROM "BlocksetEntry") took 00:00:00.010

2018-01-14 19:33:25Z - Profiling: VerifyConsistency took 00:00:00.242

2018-01-14 19:33:25Z - Profiling: removing temp files, as no data needs to be uploaded

2018-01-14 19:33:25Z - Profiling: Starting - ExecuteNonQuery: CREATE TEMP TABLE "DelVolSetIds-BD91660E62E28E40B2D39D7741947574" ("ID" INTEGER PRIMARY KEY)

2018-01-14 19:33:25Z - Profiling: ExecuteNonQuery: CREATE TEMP TABLE "DelVolSetIds-BD91660E62E28E40B2D39D7741947574" ("ID" INTEGER PRIMARY KEY) took 00:00:00.000

2018-01-14 19:33:25Z - Profiling: Starting - ExecuteScalarInt64: SELECT "ID" FROM "Remotevolume" WHERE "Name" = ?

2018-01-14 19:33:25Z - Profiling: ExecuteScalarInt64: SELECT "ID" FROM "Remotevolume" WHERE "Name" = ? took 00:00:00.000

2018-01-14 19:33:25Z - Profiling: Starting - ExecuteNonQuery: DELETE FROM "IndexBlockLink" WHERE "BlockVolumeID" IN (SELECT "ID" FROM "DelVolSetIds-BD91660E62E28E40B2D39D7741947574" ) OR "IndexVolumeID" IN (SELECT "ID" FROM "DelVolSetIds-BD91660E62E28E40B2D39D7741947574" )

2018-01-14 19:33:25Z - Profiling: ExecuteNonQuery: DELETE FROM "IndexBlockLink" WHERE "BlockVolumeID" IN (SELECT "ID" FROM "DelVolSetIds-BD91660E62E28E40B2D39D7741947574" ) OR "IndexVolumeID" IN (SELECT "ID" FROM "DelVolSetIds-BD91660E62E28E40B2D39D7741947574" ) took 00:00:00.000

2018-01-14 19:33:25Z - Profiling: Starting - ExecuteNonQuery: DELETE FROM "FilesetEntry" WHERE "FilesetID" IN (SELECT "ID" FROM "Fileset" WHERE "VolumeID" IN (SELECT "ID" FROM "DelVolSetIds-BD91660E62E28E40B2D39D7741947574" ))

2018-01-14 19:33:25Z - Profiling: ExecuteNonQuery: DELETE FROM "FilesetEntry" WHERE "FilesetID" IN (SELECT "ID" FROM "Fileset" WHERE "VolumeID" IN (SELECT "ID" FROM "DelVolSetIds-BD91660E62E28E40B2D39D7741947574" )) took 00:00:00.005

2018-01-14 19:33:25Z - Profiling: Starting - ExecuteNonQuery: DELETE FROM "Fileset" WHERE "VolumeID" IN (SELECT "ID" FROM "DelVolSetIds-BD91660E62E28E40B2D39D7741947574" )

2018-01-14 19:33:25Z - Profiling: ExecuteNonQuery: DELETE FROM "Fileset" WHERE "VolumeID" IN (SELECT "ID" FROM "DelVolSetIds-BD91660E62E28E40B2D39D7741947574" ) took 00:00:00.000

2018-01-14 19:33:25Z - Profiling: Starting - ExecuteNonQuery: CREATE TEMP TABLE "DelBlockSetIds-BD91660E62E28E40B2D39D7741947574" ("ID" INTEGER PRIMARY KEY)

2018-01-14 19:33:25Z - Profiling: ExecuteNonQuery: CREATE TEMP TABLE "DelBlockSetIds-BD91660E62E28E40B2D39D7741947574" ("ID" INTEGER PRIMARY KEY) took 00:00:00.000

2018-01-14 19:33:25Z - Profiling: Starting - ExecuteNonQuery: INSERT OR IGNORE INTO "DelBlockSetIds-BD91660E62E28E40B2D39D7741947574" ("ID") SELECT "BlocksetEntry"."BlocksetID" FROM "BlocksetEntry", "Block" WHERE "BlocksetEntry"."BlockID" = "Block"."ID" AND "Block"."VolumeID" IN (SELECT "ID" FROM "DelVolSetIds-BD91660E62E28E40B2D39D7741947574" ) UNION ALL SELECT "BlocksetID" FROM "BlocklistHash" WHERE "Hash" IN (SELECT "Hash" FROM "Block" WHERE "VolumeID" IN (SELECT "ID" FROM "DelVolSetIds-BD91660E62E28E40B2D39D7741947574" ))

2018-01-14 19:33:25Z - Profiling: ExecuteNonQuery: INSERT OR IGNORE INTO "DelBlockSetIds-BD91660E62E28E40B2D39D7741947574" ("ID") SELECT "BlocksetEntry"."BlocksetID" FROM "BlocksetEntry", "Block" WHERE "BlocksetEntry"."BlockID" = "Block"."ID" AND "Block"."VolumeID" IN (SELECT "ID" FROM "DelVolSetIds-BD91660E62E28E40B2D39D7741947574" ) UNION ALL SELECT "BlocksetID" FROM "BlocklistHash" WHERE "Hash" IN (SELECT "Hash" FROM "Block" WHERE "VolumeID" IN (SELECT "ID" FROM "DelVolSetIds-BD91660E62E28E40B2D39D7741947574" )) took 00:00:00.000

2018-01-14 19:33:25Z - Profiling: Starting - ExecuteNonQuery: DELETE FROM "File" WHERE "BlocksetID" IN (SELECT "ID" FROM "DelBlockSetIds-BD91660E62E28E40B2D39D7741947574" ) OR "MetadataID" IN (SELECT "ID" FROM "DelBlockSetIds-BD91660E62E28E40B2D39D7741947574" )

2018-01-14 19:33:25Z - Profiling: ExecuteNonQuery: DELETE FROM "File" WHERE "BlocksetID" IN (SELECT "ID" FROM "DelBlockSetIds-BD91660E62E28E40B2D39D7741947574" ) OR "MetadataID" IN (SELECT "ID" FROM "DelBlockSetIds-BD91660E62E28E40B2D39D7741947574" ) took 00:00:00.002

2018-01-14 19:33:25Z - Profiling: Starting - ExecuteNonQuery: DELETE FROM "Metadataset" WHERE "BlocksetID" IN (SELECT "ID" FROM "DelBlockSetIds-BD91660E62E28E40B2D39D7741947574" )

2018-01-14 19:33:25Z - Profiling: ExecuteNonQuery: DELETE FROM "Metadataset" WHERE "BlocksetID" IN (SELECT "ID" FROM "DelBlockSetIds-BD91660E62E28E40B2D39D7741947574" ) took 00:00:00.000

2018-01-14 19:33:25Z - Profiling: Starting - ExecuteNonQuery: DELETE FROM "Blockset" WHERE "ID" IN (SELECT "ID" FROM "DelBlockSetIds-BD91660E62E28E40B2D39D7741947574" )

2018-01-14 19:33:25Z - Profiling: ExecuteNonQuery: DELETE FROM "Blockset" WHERE "ID" IN (SELECT "ID" FROM "DelBlockSetIds-BD91660E62E28E40B2D39D7741947574" ) took 00:00:00.000

2018-01-14 19:33:25Z - Profiling: Starting - ExecuteNonQuery: DELETE FROM "BlocksetEntry" WHERE "BlocksetID" IN (SELECT "ID" FROM "DelBlockSetIds-BD91660E62E28E40B2D39D7741947574" )

2018-01-14 19:33:25Z - Profiling: ExecuteNonQuery: DELETE FROM "BlocksetEntry" WHERE "BlocksetID" IN (SELECT "ID" FROM "DelBlockSetIds-BD91660E62E28E40B2D39D7741947574" ) took 00:00:00.000

2018-01-14 19:33:25Z - Profiling: Starting - ExecuteNonQuery: DELETE FROM "BlocklistHash" WHERE "Hash" IN (SELECT "Hash" FROM "Block" WHERE "VolumeID" IN (SELECT "ID" FROM "DelVolSetIds-BD91660E62E28E40B2D39D7741947574" ))

2018-01-14 19:33:25Z - Profiling: ExecuteNonQuery: DELETE FROM "BlocklistHash" WHERE "Hash" IN (SELECT "Hash" FROM "Block" WHERE "VolumeID" IN (SELECT "ID" FROM "DelVolSetIds-BD91660E62E28E40B2D39D7741947574" )) took 00:00:00.000

2018-01-14 19:33:25Z - Profiling: Starting - ExecuteNonQuery: DELETE FROM "Block" WHERE "VolumeID" IN (SELECT "ID" FROM "DelVolSetIds-BD91660E62E28E40B2D39D7741947574" )

2018-01-14 19:33:25Z - Profiling: ExecuteNonQuery: DELETE FROM "Block" WHERE "VolumeID" IN (SELECT "ID" FROM "DelVolSetIds-BD91660E62E28E40B2D39D7741947574" ) took 00:00:00.000

2018-01-14 19:33:25Z - Profiling: Starting - ExecuteNonQuery: DELETE FROM "DeletedBlock" WHERE "VolumeID" IN (SELECT "ID" FROM "DelVolSetIds-BD91660E62E28E40B2D39D7741947574" )

2018-01-14 19:33:25Z - Profiling: ExecuteNonQuery: DELETE FROM "DeletedBlock" WHERE "VolumeID" IN (SELECT "ID" FROM "DelVolSetIds-BD91660E62E28E40B2D39D7741947574" ) took 00:00:00.000

2018-01-14 19:33:25Z - Profiling: Starting - ExecuteNonQuery: DELETE FROM "DelBlockSetIds-BD91660E62E28E40B2D39D7741947574"

2018-01-14 19:33:25Z - Profiling: ExecuteNonQuery: DELETE FROM "DelBlockSetIds-BD91660E62E28E40B2D39D7741947574" took 00:00:00.000

2018-01-14 19:33:25Z - Profiling: Starting - ExecuteNonQuery: DELETE FROM "DelVolSetIds-BD91660E62E28E40B2D39D7741947574"

2018-01-14 19:33:25Z - Profiling: ExecuteNonQuery: DELETE FROM "DelVolSetIds-BD91660E62E28E40B2D39D7741947574" took 00:00:00.000

2018-01-14 19:33:25Z - Profiling: Starting - ExecuteNonQuery: DROP TABLE IF EXISTS "DelBlockSetIds-BD91660E62E28E40B2D39D7741947574"

2018-01-14 19:33:25Z - Profiling: ExecuteNonQuery: DROP TABLE IF EXISTS "DelBlockSetIds-BD91660E62E28E40B2D39D7741947574" took 00:00:00.000

2018-01-14 19:33:25Z - Profiling: Starting - ExecuteNonQuery: DROP TABLE IF EXISTS "DelVolSetIds-BD91660E62E28E40B2D39D7741947574"

2018-01-14 19:33:25Z - Profiling: ExecuteNonQuery: DROP TABLE IF EXISTS "DelVolSetIds-BD91660E62E28E40B2D39D7741947574" took 00:00:00.000

2018-01-14 19:33:25Z - Profiling: Starting - Async backend wait

2018-01-14 19:33:25Z - Profiling: Starting - RemoteOperationTerminate

2018-01-14 19:33:25Z - Profiling: RemoteOperationTerminate took 00:00:00.000

2018-01-14 19:33:25Z - Profiling: Async backend wait took 00:00:00.002

2018-01-14 19:33:25Z - Profiling: Starting - ExecuteReader: SELECT "ID", "Timestamp" FROM "Fileset" ORDER BY "Timestamp" DESC

2018-01-14 19:33:25Z - Profiling: ExecuteReader: SELECT "ID", "Timestamp" FROM "Fileset" ORDER BY "Timestamp" DESC took 00:00:00.000

2018-01-14 19:33:25Z - Profiling: Starting - ExecuteReader: SELECT "ID", "Timestamp" FROM "Fileset" ORDER BY "Timestamp" DESC

2018-01-14 19:33:25Z - Profiling: ExecuteReader: SELECT "ID", "Timestamp" FROM "Fileset" ORDER BY "Timestamp" DESC took 00:00:00.000

2018-01-14 19:33:25Z - Profiling: Starting - ExecuteReader: SELECT "ID", "Timestamp" FROM "Fileset" ORDER BY "Timestamp" DESC

2018-01-14 19:33:25Z - Profiling: ExecuteReader: SELECT "ID", "Timestamp" FROM "Fileset" ORDER BY "Timestamp" DESC took 00:00:00.000

2018-01-14 19:33:25Z - Profiling: Starting - ExecuteNonQuery: DELETE FROM "Fileset" WHERE "Timestamp" IN ()

2018-01-14 19:33:25Z - Profiling: ExecuteNonQuery: DELETE FROM "Fileset" WHERE "Timestamp" IN () took 00:00:00.000

2018-01-14 19:33:25Z - Profiling: Starting - ExecuteNonQuery: DELETE FROM "FilesetEntry" WHERE "FilesetID" NOT IN (SELECT DISTINCT "ID" FROM "Fileset")

2018-01-14 19:33:25Z - Profiling: ExecuteNonQuery: DELETE FROM "FilesetEntry" WHERE "FilesetID" NOT IN (SELECT DISTINCT "ID" FROM "Fileset") took 00:00:00.002

2018-01-14 19:33:25Z - Profiling: Starting - ExecuteNonQuery: DELETE FROM "File" WHERE "ID" NOT IN (SELECT DISTINCT "FileID" FROM "FilesetEntry")