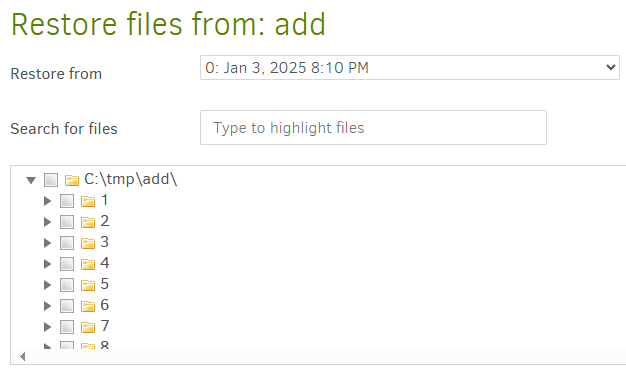

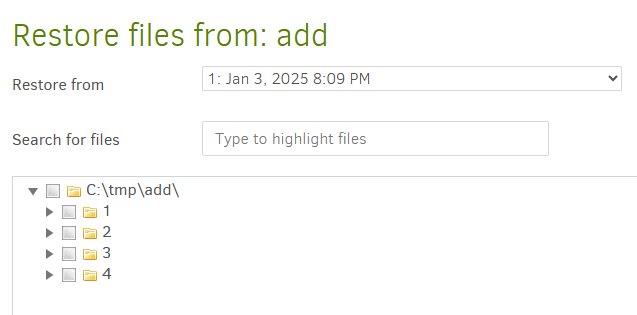

First, please stop personalizing my comments. I am trying to debug this and give you as much detail as I can. This issue has been a problem for 6+ months. I did what you asked with the find command and determined that only a small subset of files is being backed up. If you are using the standard system calls for enumeration, you should not care what paths or files the filesystem returns. That said, I just ran this backup with the new N:, and here is the log that came back. What does not make sense is that the status field said it got to ~115 GB of data and ~65,000 files, yet the backup only shows the following! You can clearly see it is only backing up 3.5 GB of data, and it seems to be starting somewhere down the file list.

Is there a way to force Duplicati to do a FULL backup each time? Maybe you are looking at the “backed up” bit to determine which files to back up? If so, can you force a full backup?
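For an independent count to set against the status field and the log, a minimal sketch along these lines could be run against the same source. It assumes Python is available on the machine; `count_tree` is a hypothetical helper name, and the `N:\` root in the usage comment is taken from the paths in the warnings. It is not how Duplicati enumerates files, only a cross-check.

```python
import os
from typing import List, Tuple


def count_tree(root: str) -> Tuple[int, int, List[str]]:
    """Walk `root` and return (file_count, total_bytes, unreadable_paths)."""
    file_count = 0
    total_bytes = 0
    unreadable: List[str] = []

    def on_walk_error(err: OSError) -> None:
        # os.walk calls this when a directory cannot be listed.
        unreadable.append(err.filename or str(err))

    for dirpath, _dirnames, filenames in os.walk(root, onerror=on_walk_error):
        for name in filenames:
            path = os.path.join(dirpath, name)
            try:
                total_bytes += os.path.getsize(path)
                file_count += 1
            except OSError:
                # The file was listed but its size could not be read.
                unreadable.append(path)
    return file_count, total_bytes, unreadable


# Example usage (the root is an assumption based on the log paths):
# files, size, failed = count_tree("N:\\")
# print(f"{files} files, {size / 2**30:.2f} GiB, {len(failed)} unreadable")
```

If the counts from a walk like this are close to the ~65,000 files and ~115 GB shown in the status field, that would suggest the files are visible to ordinary enumeration and the gap opens up later, when the individual files are read.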

Last successful backup: Today at 12:15 PM (took 00:33:08)

Next scheduled run: Today at 1:00 PM

Source: 4.07 GB

Backup: 3.30 GB / 1 Version

Here is the complete log file!

{

“DeletedFiles”: 0,

“DeletedFolders”: 0,

“ModifiedFiles”: 0,

“ExaminedFiles”: 2930,

“OpenedFiles”: 2866,

“AddedFiles”: 2866,

“SizeOfModifiedFiles”: 0,

“SizeOfAddedFiles”: 4178457842,

“SizeOfExaminedFiles”: 4374752222,

“SizeOfOpenedFiles”: 4178457842,

“NotProcessedFiles”: 0,

“AddedFolders”: 378,

“TooLargeFiles”: 0,

“FilesWithError”: 0,

“ModifiedFolders”: 0,

“ModifiedSymlinks”: 0,

“AddedSymlinks”: 0,

“DeletedSymlinks”: 0,

“PartialBackup”: false,

“Dryrun”: false,

“MainOperation”: “Backup”,

“CompactResults”: null,

“VacuumResults”: null,

“DeleteResults”: null,

“RepairResults”: null,

“TestResults”: {

“MainOperation”: “Test”,

“VerificationsActualLength”: 3,

“Verifications”: [

{

“Key”: “duplicati-20250106T164218Z.dlist.zip.aes”,

“Value”:

},

{

“Key”: “duplicati-i22bea8991f4a4750ac63c8754371134e.dindex.zip.aes”,

“Value”:

},

{

“Key”: “duplicati-bcedd525c382e47fbade24f1363acdd1a.dblock.zip.aes”,

“Value”:

}

],

“ParsedResult”: “Success”,

“Interrupted”: false,

“Version”: “2.1.0.2 (2.1.0.2_beta_2024-11-29)”,

“EndTime”: “2025-01-06T17:15:22.335385Z”,

“BeginTime”: “2025-01-06T17:15:15.9118607Z”,

“Duration”: “00:00:06.4235243”,

“MessagesActualLength”: 0,

“WarningsActualLength”: 0,

“ErrorsActualLength”: 0,

“Messages”: null,

“Warnings”: null,

“Errors”: null,

“BackendStatistics”: {

“RemoteCalls”: 144,

“BytesUploaded”: 3540279535,

“BytesDownloaded”: 51816007,

“FilesUploaded”: 139,

“FilesDownloaded”: 3,

“FilesDeleted”: 0,

“FoldersCreated”: 0,

“RetryAttempts”: 0,

“UnknownFileSize”: 0,

“UnknownFileCount”: 0,

“KnownFileCount”: 139,

“KnownFileSize”: 3540279535,

“LastBackupDate”: “2025-01-06T11:42:18-05:00”,

“BackupListCount”: 1,

“TotalQuotaSpace”: 0,

“FreeQuotaSpace”: 0,

“AssignedQuotaSpace”: -1,

“ReportedQuotaError”: false,

“ReportedQuotaWarning”: false,

“MainOperation”: “Backup”,

“ParsedResult”: “Success”,

“Interrupted”: false,

“Version”: “2.1.0.2 (2.1.0.2_beta_2024-11-29)”,

“EndTime”: “0001-01-01T00:00:00”,

“BeginTime”: “2025-01-06T16:42:14.1777115Z”,

“Duration”: “00:00:00”,

“MessagesActualLength”: 0,

“WarningsActualLength”: 0,

“ErrorsActualLength”: 0,

“Messages”: null,

“Warnings”: null,

“Errors”: null

}

},

“ParsedResult”: “Warning”,

“Interrupted”: false,

“Version”: “2.1.0.2 (2.1.0.2_beta_2024-11-29)”,

“EndTime”: “2025-01-06T17:15:22.6353594Z”,

“BeginTime”: “2025-01-06T16:42:14.1777065Z”,

“Duration”: “00:33:08.4576529”,

“MessagesActualLength”: 290,

“WarningsActualLength”: 637,

“ErrorsActualLength”: 0,

“Messages”: [

“2025-01-06 11:42:14 -05 - [Information-Duplicati.Library.Main.Controller-StartingOperation]: The operation Backup has started”,

“2025-01-06 11:42:19 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: List - Started: ()”,

“2025-01-06 11:42:19 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: List - Completed: ()”,

“2025-01-06 11:43:06 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Started: duplicati-ba61ad178bc93453bab7e77ad11451894.dblock.zip.aes (49.19 MB)”,

“2025-01-06 11:43:10 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Started: duplicati-be76569029bde4e89922d9125aa151a44.dblock.zip.aes (49.68 MB)”,

“2025-01-06 11:43:10 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Started: duplicati-b301886cf64fd4959b91062f70bd7d6dc.dblock.zip.aes (49.43 MB)”,

“2025-01-06 11:43:11 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Completed: duplicati-ba61ad178bc93453bab7e77ad11451894.dblock.zip.aes (49.19 MB)”,

“2025-01-06 11:43:13 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Started: duplicati-i28b182c8741746da98b0a0e3d1f242e3.dindex.zip.aes (4.56 KB)”,

“2025-01-06 11:43:14 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Completed: duplicati-i28b182c8741746da98b0a0e3d1f242e3.dindex.zip.aes (4.56 KB)”,

“2025-01-06 11:43:17 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Completed: duplicati-b301886cf64fd4959b91062f70bd7d6dc.dblock.zip.aes (49.43 MB)”,

“2025-01-06 11:43:19 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Started: duplicati-if20c7134a7ae41f2b2682e99a725afe3.dindex.zip.aes (5.48 KB)”,

“2025-01-06 11:43:19 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Completed: duplicati-if20c7134a7ae41f2b2682e99a725afe3.dindex.zip.aes (5.48 KB)”,

“2025-01-06 11:43:19 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Started: duplicati-bf045cdb40aca4fed9ad2b3f02cc963a3.dblock.zip.aes (49.84 MB)”,

“2025-01-06 11:43:20 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Started: duplicati-b4f00746e38c3435c9649df46d0a086a0.dblock.zip.aes (49.84 MB)”,

“2025-01-06 11:43:22 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Completed: duplicati-be76569029bde4e89922d9125aa151a44.dblock.zip.aes (49.68 MB)”,

“2025-01-06 11:43:22 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Started: duplicati-ide3bee64a5354173b39fa9c33d567c2f.dindex.zip.aes (4.26 KB)”,

“2025-01-06 11:43:22 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Completed: duplicati-ide3bee64a5354173b39fa9c33d567c2f.dindex.zip.aes (4.26 KB)”,

“2025-01-06 11:43:23 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Started: duplicati-b99b05267d6e34afc8831d18881fe0ee3.dblock.zip.aes (49.90 MB)”,

“2025-01-06 11:43:32 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Completed: duplicati-bf045cdb40aca4fed9ad2b3f02cc963a3.dblock.zip.aes (49.84 MB)”,

“2025-01-06 11:43:33 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Completed: duplicati-b99b05267d6e34afc8831d18881fe0ee3.dblock.zip.aes (49.90 MB)”

],

“Warnings”: [

“2025-01-06 12:14:18 -05 - [Warning-Duplicati.Library.Main.Operation.Backup.FileEnumerationProcess-FileAccessError]: Error reported while accessing file: N:\JEM\Issue 19\Special Higher Education Issue\Directory\Distance Learning\fairleigh dickinson university-master of science in hs with grad certs (2).pdf\r\nIOException: The request is not supported. : ‘\\?\N:\JEM\Issue 19\Special Higher Education Issue\Directory\Distance Learning\fairleigh dickinson university-master of science in hs with grad certs (2).pdf’”,

“2025-01-06 12:14:18 -05 - [Warning-Duplicati.Library.Main.Operation.Backup.FileBlockProcessor.FileEntry-PathProcessingFailed]: Failed to process path: N:\OCP\ICOO-2020\MARKETING\LISTS\2019\CASS_NCOA_OUTPUT\ICOO_2019_Final_Mailing_List_DefaultNCOAMerge.dbf\r\nIOException: The request is not supported. : ‘\\?\N:\OCP\ICOO-2020\MARKETING\LISTS\2019\CASS_NCOA_OUTPUT\ICOO_2019_Final_Mailing_List_DefaultNCOAMerge.dbf’”,

“2025-01-06 12:14:18 -05 - [Warning-Duplicati.Library.Main.Operation.Backup.FileBlockProcessor.FileEntry-PathProcessingFailed]: Failed to process path: N:\OCP\ICOO-2020\MARKETING\LISTS\2019\CASS_NCOA_OUTPUT\ICOO_2019_Final_Mailing_List_DefaultNCOAMerge.dmt\r\nIOException: The request is not supported. : ‘\\?\N:\OCP\ICOO-2020\MARKETING\LISTS\2019\CASS_NCOA_OUTPUT\ICOO_2019_Final_Mailing_List_DefaultNCOAMerge.dmt’”,

“2025-01-06 12:14:18 -05 - [Warning-Duplicati.Library.Main.Operation.Backup.FileEnumerationProcess-FileAccessError]: Error reported while accessing file: N:\JEM\Issue 19\Special Higher Education Issue\Directory\Distance Learning\fairleigh dickinson-global security and terrorism grad cert (1).pdf\r\nIOException: The request is not supported. : ‘\\?\N:\JEM\Issue 19\Special Higher Education Issue\Directory\Distance Learning\fairleigh dickinson-global security and terrorism grad cert (1).pdf’”,

“2025-01-06 12:14:18 -05 - [Warning-Duplicati.Library.Main.Operation.Backup.MetadataPreProcess.FileEntry-TimestampReadFailed]: Failed to read timestamp on "N:\OCP\ICOO-2020\MARKETING\LISTS\2019\CASS_NCOA_OUTPUT\ICOO_2019_Final_Mailing_List_NCOA_Output_Changed_V2.dbf"\r\nIOException: The request is not supported. : ‘\\?\N:\OCP\ICOO-2020\MARKETING\LISTS\2019\CASS_NCOA_OUTPUT\ICOO_2019_Final_Mailing_List_NCOA_Output_Changed_V2.dbf’”,

“2025-01-06 12:14:18 -05 - [Warning-Duplicati.Library.Main.Operation.Backup.FileEnumerationProcess-FileAccessError]: Error reported while accessing file: N:\OCP\ICOO-2020\MARKETING\LISTS\2019\CASS_NCOA_OUTPUT\ICOO_2019_Final_Mailing_List_NCOA_Output_Changed_V2.dmt\r\nIOException: The request is not supported. : ‘\\?\N:\OCP\ICOO-2020\MARKETING\LISTS\2019\CASS_NCOA_OUTPUT\ICOO_2019_Final_Mailing_List_NCOA_Output_Changed_V2.dmt’”,

“2025-01-06 12:14:18 -05 - [Warning-Duplicati.Library.Main.Operation.Backup.FileEnumerationProcess-FileAccessError]: Error reported while accessing file: N:\JEM\Issue 19\Special Higher Education Issue\Directory\Distance Learning\florida institute of technology - bachelor of arts in crimnial justice with a concentration in homeland security.doc\r\nIOException: The request is not supported. : ‘\\?\N:\JEM\Issue 19\Special Higher Education Issue\Directory\Distance Learning\florida institute of technology - bachelor of arts in crimnial justice with a concentration in homeland security.doc’”,

“2025-01-06 12:14:18 -05 - [Warning-Duplicati.Library.Main.Operation.Backup.MetadataGenerator.Metadata-MetadataProcessFailed]: Failed to process metadata for "N:\OCP\ICOO-2020\MARKETING\LISTS\2019\CASS_NCOA_OUTPUT\ICOO_2019_Final_Mailing_List_NCOA_Output_Changed_V2.csv", storing empty metadata\r\nInvalidOperationException: Method failed with unexpected error code 50.”,

“2025-01-06 12:14:18 -05 - [Warning-Duplicati.Library.Main.Operation.Backup.FileEnumerationProcess-FileAccessError]: Error reported while accessing file: N:\OCP\ICOO-2020\MARKETING\LISTS\2019\CASS_NCOA_OUTPUT\Importing text files into Excel.pdf\r\nIOException: The request is not supported. : ‘\\?\N:\OCP\ICOO-2020\MARKETING\LISTS\2019\CASS_NCOA_OUTPUT\Importing text files into Excel.pdf’”,

“2025-01-06 12:14:18 -05 - [Warning-Duplicati.Library.Main.Operation.Backup.FileEnumerationProcess-FileAccessError]: Error reported while accessing file: N:\JEM\Issue 19\Special Higher Education Issue\Directory\Distance Learning\florida state university - undergrad cert in em and hs (2).pdf\r\nIOException: The request is not supported. : ‘\\?\N:\JEM\Issue 19\Special Higher Education Issue\Directory\Distance Learning\florida state university - undergrad cert in em and hs (2).pdf’”,

“2025-01-06 12:14:18 -05 - [Warning-Duplicati.Library.Main.Operation.Backup.FileEnumerationProcess-FileAccessError]: Error reported while accessing file: N:\OCP\ICOO-2020\MARKETING\LISTS\2019\CASS_NCOA_OUTPUT\MailListCleaner - Sales Receipt - 00344187 - 20190312.pdf\r\nIOException: The request is not supported. : ‘\\?\N:\OCP\ICOO-2020\MARKETING\LISTS\2019\CASS_NCOA_OUTPUT\MailListCleaner - Sales Receipt - 00344187 - 20190312.pdf’”,

“2025-01-06 12:14:18 -05 - [Warning-Duplicati.Library.Main.Operation.Backup.FileBlockProcessor.FileEntry-PathProcessingFailed]: Failed to process path: N:\OCP\ICOO-2020\MARKETING\LISTS\2019\CASS_NCOA_OUTPUT\ICOO_2019_Final_Mailing_List_NCOA_Output_Changed_V2.csv\r\nIOException: The request is not supported. : ‘\\?\N:\OCP\ICOO-2020\MARKETING\LISTS\2019\CASS_NCOA_OUTPUT\ICOO_2019_Final_Mailing_List_NCOA_Output_Changed_V2.csv’”,

“2025-01-06 12:14:18 -05 - [Warning-Duplicati.Library.Main.Operation.Backup.FileEnumerationProcess-FileAccessError]: Error reported while accessing file: N:\JEM\Issue 19\Special Higher Education Issue\Directory\Distance Learning\florida state university - grad cert in em and hs (2).pdf\r\nIOException: The request is not supported. : ‘\\?\N:\JEM\Issue 19\Special Higher Education Issue\Directory\Distance Learning\florida state university - grad cert in em and hs (2).pdf’”,

“2025-01-06 12:14:18 -05 - [Warning-Duplicati.Library.Main.Operation.Backup.FileEnumerationProcess-FileAccessError]: Error reported while accessing file: N:\OCP\ICOO-2020\MARKETING\LISTS\2019\CASS_NCOA_OUTPUT\MLC MergeEvent_List Duplicate Report.pdf\r\nIOException: The request is not supported. : ‘\\?\N:\OCP\ICOO-2020\MARKETING\LISTS\2019\CASS_NCOA_OUTPUT\MLC MergeEvent_List Duplicate Report.pdf’”,

“2025-01-06 12:14:18 -05 - [Warning-Duplicati.Library.Main.Operation.Backup.FileEnumerationProcess-FileAccessError]: Error reported while accessing file: N:\JEM\Issue 19\Special Higher Education Issue\Directory\Distance Learning\florida state university - interdisciplinary social sciences with em track.docx\r\nIOException: The request is not supported. : ‘\\?\N:\JEM\Issue 19\Special Higher Education Issue\Directory\Distance Learning\florida state university - interdisciplinary social sciences with em track.docx’”,

“2025-01-06 12:14:18 -05 - [Warning-Duplicati.Library.Main.Operation.Backup.MetadataGenerator.Metadata-MetadataProcessFailed]: Failed to process metadata for "N:\OCP\ICOO-2020\MARKETING\LISTS\2019\CASS_NCOA_OUTPUT\ICOO_2019_Final_Mailing_List_NCOA_Output_Changed_V2.dbf", storing empty metadata\r\nInvalidOperationException: Method failed with unexpected error code 50.”,

“2025-01-06 12:14:18 -05 - [Warning-Duplicati.Library.Main.Operation.Backup.FileEnumerationProcess-FileAccessError]: Error reported while accessing file: N:\OCP\ICOO-2020\MARKETING\LISTS\2019\CASS_NCOA_OUTPUT\MLC_Layout.pdf\r\nIOException: The request is not supported. : ‘\\?\N:\OCP\ICOO-2020\MARKETING\LISTS\2019\CASS_NCOA_OUTPUT\MLC_Layout.pdf’”,

“2025-01-06 12:14:18 -05 - [Warning-Duplicati.Library.Main.Operation.Backup.FileEnumerationProcess-FileAccessError]: Error reported while accessing file: N:\JEM\Issue 19\Special Higher Education Issue\Directory\Distance Learning\florida state university - mpa with em speciality - copy.pdf\r\nIOException: The request is not supported. : ‘\\?\N:\JEM\Issue 19\Special Higher Education Issue\Directory\Distance Learning\florida state university - mpa with em speciality - copy.pdf’”,

“2025-01-06 12:14:18 -05 - [Warning-Duplicati.Library.Main.Operation.Backup.FileEnumerationProcess-FileAccessError]: Error reported while accessing file: N:\OCP\ICOO-2020\MARKETING\LISTS\2019\CASS_NCOA_OUTPUT\NCOA Documents.xls\r\nIOException: The request is not supported. : ‘\\?\N:\OCP\ICOO-2020\MARKETING\LISTS\2019\CASS_NCOA_OUTPUT\NCOA Documents.xls’”,

“2025-01-06 12:14:18 -05 - [Warning-Duplicati.Library.Main.Operation.Backup.FileBlockProcessor.FileEntry-PathProcessingFailed]: Failed to process path: N:\OCP\ICOO-2020\MARKETING\LISTS\2019\CASS_NCOA_OUTPUT\ICOO_2019_Final_Mailing_List_NCOA_Output_Changed_V2.dbf\r\nIOException: The request is not supported. : ‘\\?\N:\OCP\ICOO-2020\MARKETING\LISTS\2019\CASS_NCOA_OUTPUT\ICOO_2019_Final_Mailing_List_NCOA_Output_Changed_V2.dbf’”

],

“Errors”: [],

“BackendStatistics”: {

“RemoteCalls”: 144,

“BytesUploaded”: 3540279535,

“BytesDownloaded”: 51816007,

“FilesUploaded”: 139,

“FilesDownloaded”: 3,

“FilesDeleted”: 0,

“FoldersCreated”: 0,

“RetryAttempts”: 0,

“UnknownFileSize”: 0,

“UnknownFileCount”: 0,

“KnownFileCount”: 139,

“KnownFileSize”: 3540279535,

“LastBackupDate”: “2025-01-06T11:42:18-05:00”,

“BackupListCount”: 1,

“TotalQuotaSpace”: 0,

“FreeQuotaSpace”: 0,

“AssignedQuotaSpace”: -1,

“ReportedQuotaError”: false,

“ReportedQuotaWarning”: false,

“MainOperation”: “Backup”,

“ParsedResult”: “Success”,

“Interrupted”: false,

“Version”: “2.1.0.2 (2.1.0.2_beta_2024-11-29)”,

“EndTime”: “0001-01-01T00:00:00”,

“BeginTime”: “2025-01-06T16:42:14.1777115Z”,

“Duration”: “00:00:00”,

“MessagesActualLength”: 0,

“WarningsActualLength”: 0,

“ErrorsActualLength”: 0,

“Messages”: null,

“Warnings”: null,

“Errors”: null

}

}
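As a rough check of the numbers in this log, the sketch below converts the byte counters to gigabytes and tallies warnings by type. It assumes the status page reports binary gigabytes (2^30 bytes), which is a guess. The sample warning lines are abbreviated copies of entries from the log (the paths are cut off with `...`), and `tally_warnings` is a hypothetical helper, not part of Duplicati.

```python
import re
from collections import Counter

GIB = 2**30  # assumption: the status page uses binary gigabytes

# Counters copied from the log above.
log_sizes = {
    "SizeOfExaminedFiles": 4374752222,
    "SizeOfAddedFiles": 4178457842,
    "BytesUploaded": 3540279535,
}

sizes_gib = {name: round(value / GIB, 2) for name, value in log_sizes.items()}

# Matches the last part of a tag such as
# [Warning-Duplicati.Library.Main...FileEnumerationProcess-FileAccessError]
WARNING_TAG = re.compile(r"\[Warning-[\w.]+-(\w+)\]")


def tally_warnings(lines):
    """Count warning lines by the type named at the end of their tag."""
    counts = Counter()
    for line in lines:
        match = WARNING_TAG.search(line)
        if match:
            counts[match.group(1)] += 1
    return counts


# Abbreviated sample of the warning lines shown in the log.
sample_warnings = [
    "2025-01-06 12:14:18 -05 - [Warning-Duplicati.Library.Main.Operation.Backup.FileEnumerationProcess-FileAccessError]: Error reported while accessing file: ...",
    "2025-01-06 12:14:18 -05 - [Warning-Duplicati.Library.Main.Operation.Backup.FileBlockProcessor.FileEntry-PathProcessingFailed]: Failed to process path: ...",
    "2025-01-06 12:14:18 -05 - [Warning-Duplicati.Library.Main.Operation.Backup.FileEnumerationProcess-FileAccessError]: Error reported while accessing file: ...",
]

for name, gib in sizes_gib.items():
    print(f"{name}: {gib} GiB")
print(dict(tally_warnings(sample_warnings)))
```

Under that assumption, `SizeOfExaminedFiles` works out to about 4.07 and `BytesUploaded` to about 3.30, which lines up with the "Source: 4.07 GB" and "Backup: 3.30 GB" figures on the status page. So the status page appears to agree with the log, and both disagree with the ~115 GB seen while the job was running. Note also that the log reports `WarningsActualLength` of 637 but lists only 20 warnings, all timestamped 12:14:18 and all carrying "The request is not supported" or error code 50, so running the tally over the full warning list would show how many files were skipped for each reason.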