Starting with the (maybe) easier messages: what permissions do the /home/tomc/.cache/dconf/ and /home/tomc/.cache/doc/ directories have, according to stat or ls -ld? On my system, dconf is root-only, and I don’t have the other one (nor could I find out what it is after some searching). Is Duplicati running as root? If not, it can only read what its own user account is allowed to access.
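If you’d rather check both directories in one go, here is a minimal Python sketch that reports roughly what ls -ld would show, plus whether the current user can enter and read each directory. The function name describe_dir is just something I made up for illustration. Run it as the same user that Duplicati runs as, otherwise the access check tells you nothing useful.

```python
import os
import pwd
import stat


def describe_dir(path):
    """Return (mode string, owner name, accessible-by-current-user) for path."""
    st = os.stat(path)
    mode = stat.filemode(st.st_mode)  # e.g. 'drwx------', like ls -ld
    try:
        owner = pwd.getpwuid(st.st_uid).pw_name
    except KeyError:
        # No passwd entry for this uid, so fall back to the number.
        owner = str(st.st_uid)
    # Listing a directory needs both read and execute (search) permission.
    accessible = os.access(path, os.R_OK | os.X_OK)
    return mode, owner, accessible


if __name__ == "__main__":
    for path in ("/home/tomc/.cache/dconf", "/home/tomc/.cache/doc"):
        try:
            mode, owner, accessible = describe_dir(path)
            print(f"{path}: {mode} owner={owner} accessible={accessible}")
        except OSError as exc:
            # Missing directory, or no permission to stat it at all.
            print(f"{path}: {exc}")
```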

The topic “What does this warning signify?” points to explanations of synthetic filesets. Possibly these warnings were just a byproduct of the original problem, which caused the “interrupted backup” treatment to be used. You can check whether that fits the history.

I think that to know what might be happening, it would be helpful to distinguish between files not being put to remote storage, versus them being put then disappearing, versus them being there but somehow not found.

There is a case where use of backslashes might have caused a problem seeing files in WebDAV; however, on the Linux laptop I’d expect forward slashes. I doubt this is the cause of the current problem, but I’ll mention it.

Another question is how and when the USB drive is mounted and then unmounted. Do missing files occur when a backup is re-run in quick succession (maybe with an intentional file change) while the drive stays attached?

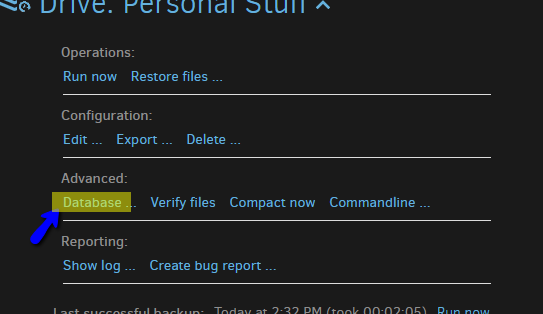

Reports seem to show varying numbers of missing files, and perhaps this is related to the amount of change, meaning that running two backups in a row like this might produce only a small number. Even better would be to run the backup by using the Commandline option of the job, checking that Command is backup, then adding --console-log-level=Retry before pushing the Run button. For a small number of changes, this should show a small number of files that get Put to the backend, and should also name any files it thinks are missing. Any file that gets Put should actually be on the drive (which can be confirmed), and any file reported missing can be checked on the drive too.

Setting up a --log-file and --log-file-log-level is an alternative to doing tests on Commandline, if it’s preferred.

Example output from my system, where I’ve been inserting errors on purpose. Note that you won’t see Profiling-level messages unless you set the Profiling log level, which creates a large amount of output:

2018-12-05 10:18:39 -05 - [Warning-Duplicati.Library.Main.Operation.FilelistProcessor-MissingFile]: Missing file: duplicati-20181204T202210Z.dlist.zip

2018-12-05 10:18:39 -05 - [Error-Duplicati.Library.Main.Operation.FilelistProcessor-MissingRemoteFiles]: Found 1 files that are missing from the remote storage, please run repair

2018-12-05 18:41:53 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Started: duplicati-20181205T233955Z.dlist.zip (723 bytes)

2018-12-05 18:41:53 -05 - [Profiling-Duplicati.Library.Main.Operation.Common.BackendHandler-UploadSpeed]: Uploaded 723 bytes in 00:00:00.0761505, 9.27 KB/s

2018-12-05 18:41:53 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Completed: duplicati-20181205T233955Z.dlist.zip (723 bytes)
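If the log gets long, a small script can pull out just the two things of interest: files that finished a Put, and files reported missing. This is only a sketch that assumes the log lines look like my samples above; the regular expressions and the summarize name are my own, not anything Duplicati provides.

```python
import re

# Matches the "[Level-Source-Tag]: message" part of a line like the samples.
LINE_RE = re.compile(
    r"\[(?P<level>\w+)-(?P<source>[\w.]+)-(?P<tag>\w+)\]: (?P<message>.*)"
)
PUT_DONE_RE = re.compile(r"Backend event: Put - Completed: (?P<name>\S+)")
MISSING_RE = re.compile(r"Missing file: (?P<name>\S+)")


def summarize(lines):
    """Return (names of completed Puts, names reported missing)."""
    put, missing = [], []
    for line in lines:
        m = LINE_RE.search(line)
        if not m:
            continue
        tag, message = m.group("tag"), m.group("message")
        if tag == "BackendEvent":
            hit = PUT_DONE_RE.match(message)
            if hit:
                put.append(hit.group("name"))
        elif tag == "MissingFile":
            hit = MISSING_RE.match(message)
            if hit:
                missing.append(hit.group("name"))
    return put, missing


if __name__ == "__main__":
    sample = [
        "2018-12-05 10:18:39 -05 - [Warning-Duplicati.Library.Main.Operation."
        "FilelistProcessor-MissingFile]: Missing file: "
        "duplicati-20181204T202210Z.dlist.zip",
        "2018-12-05 18:41:53 -05 - [Information-Duplicati.Library.Main."
        "BasicResults-BackendEvent]: Backend event: Put - Completed: "
        "duplicati-20181205T233955Z.dlist.zip (723 bytes)",
    ]
    put, missing = summarize(sample)
    print("Put completed:", put)
    print("Reported missing:", missing)
```

Each name in either list can then be looked up on the USB drive with ls.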

If you don’t want to jump directly into looking at file names, you could start with file counts. For any job, there should be a “Show log” button leading to summary information. Some sampled lines from one of my backups:

BackendStatistics:

RemoteCalls: 7

BytesUploaded: 3569

BytesDownloaded: 2100

FilesUploaded: 2

FilesDownloaded: 3

FilesDeleted: 0

FoldersCreated: 0

RetryAttempts: 0

UnknownFileSize: 2702

UnknownFileCount: 1

KnownFileCount: 14

KnownFileSize: 11208

LastBackupDate: 12/4/2018 6:59:14 PM (1543967954)

BackupListCount: 6

With these you can match things like KnownFileCount and KnownFileSize against what your Linux file tools think you actually have for your dlist, dblock, and dindex files. My UnknownFileCount is probably 1 because I have set --upload-verification-file, which creates a duplicati-verification.json file after the backup, listing what should be there. The match can then be checked with the DuplicatiVerify tools provided in the Duplicati utility-scripts folder. You’d presumably use the Python (not PowerShell) one, and a USB drive is easy because the files are already there locally.
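For the count and size comparison, something like the following sketch would do instead of ls and wc. It assumes the backup files sit directly in one folder on the USB drive and that their names contain .dlist., .dblock. or .dindex. after a duplicati- prefix, as in my samples; the tally function is a made-up helper, and this is not a substitute for the DuplicatiVerify script, which also checks hashes.

```python
import os
import re

# Assumption: remote file names look like duplicati-<id>.<kind>.<extension...>
KIND_RE = re.compile(r"^duplicati-.*\.(dlist|dblock|dindex)\.")


def tally(folder):
    """Count dlist/dblock/dindex files in folder and sum their sizes in bytes."""
    counts = {"dlist": 0, "dblock": 0, "dindex": 0}
    total_size = 0
    for entry in os.scandir(folder):
        if not entry.is_file():
            continue
        m = KIND_RE.match(entry.name)
        if m:
            counts[m.group(1)] += 1
            total_size += entry.stat().st_size
        # Anything else (e.g. duplicati-verification.json) is skipped here.
    return counts, total_size
```

Calling tally() on the backup folder of the mounted drive gives a per-type count and a byte total. The sum of the three counts is the figure to hold against KnownFileCount, and the byte total against KnownFileSize.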