I’ve got Duplicati running a single backup job that has never completed, although it did manage to copy all the files to the destination during the initial backup. Opening the job’s logs via the Reporting: Show logs link hangs for a while and then shows a dialog with “Failed to connect: The database file is locked database is locked”.

As it stands I can successfully:

- Start the container

- Browse the backups

- Verify the database

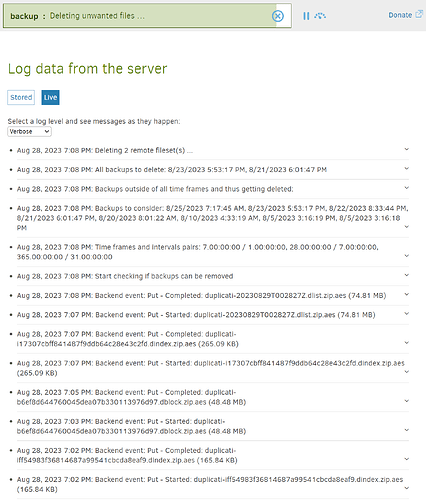

- Start and get all the way through an incremental backup until the “Deleting unwanted files” phase.

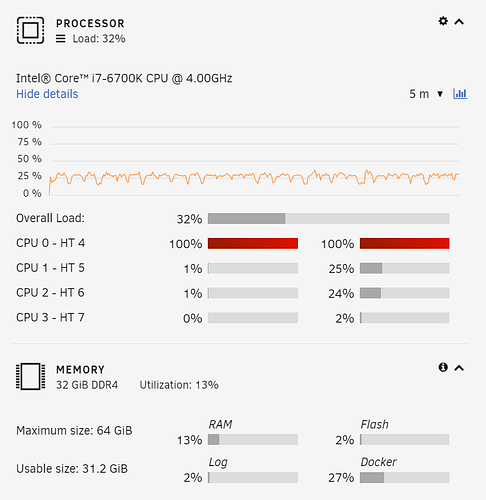

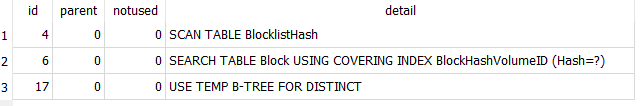

Once it begins “Deleting unwanted files”, it sits at 25% of my system CPU indefinitely and never resolves the database lock. Reading About > Logs, or viewing the system logs from Docker, shows the following error:

Mono.Data.Sqlite.SqliteException (0x80004005): The database file is locked

database is locked

at Mono.Data.Sqlite.SQLite3.Prepare (Mono.Data.Sqlite.SqliteConnection cnn, System.String strSql, Mono.Data.Sqlite.SqliteStatement previous, System.UInt32 timeoutMS, System.String& strRemain) [0x001ba] in <0e0c808ba41a4844814b82eb7ba357a3>:0

at Mono.Data.Sqlite.SqliteCommand.BuildNextCommand () [0x000d3] in <0e0c808ba41a4844814b82eb7ba357a3>:0

at Mono.Data.Sqlite.SqliteCommand.GetStatement (System.Int32 index) [0x00008] in <0e0c808ba41a4844814b82eb7ba357a3>:0

at (wrapper remoting-invoke-with-check) Mono.Data.Sqlite.SqliteCommand.GetStatement(int)

at Mono.Data.Sqlite.SqliteDataReader.NextResult () [0x000b3] in <0e0c808ba41a4844814b82eb7ba357a3>:0

at Mono.Data.Sqlite.SqliteDataReader..ctor (Mono.Data.Sqlite.SqliteCommand cmd, System.Data.CommandBehavior behave) [0x0004e] in <0e0c808ba41a4844814b82eb7ba357a3>:0

at (wrapper remoting-invoke-with-check) Mono.Data.Sqlite.SqliteDataReader..ctor(Mono.Data.Sqlite.SqliteCommand,System.Data.CommandBehavior)

at Mono.Data.Sqlite.SqliteCommand.ExecuteReader (System.Data.CommandBehavior behavior) [0x00006] in <0e0c808ba41a4844814b82eb7ba357a3>:0

at Mono.Data.Sqlite.SqliteCommand.ExecuteNonQuery () [0x00000] in <0e0c808ba41a4844814b82eb7ba357a3>:0

at Mono.Data.Sqlite.SqliteConnection.Open () [0x00543] in <0e0c808ba41a4844814b82eb7ba357a3>:0

at Duplicati.Library.SQLiteHelper.SQLiteLoader.OpenSQLiteFile (System.Data.IDbConnection con, System.String path) [0x00021] in <0f5cd23b75654343bab04c48758cac91>:0

at Duplicati.Library.SQLiteHelper.SQLiteLoader.LoadConnection (System.String targetpath) [0x00048] in <0f5cd23b75654343bab04c48758cac91>:0

at Duplicati.Server.WebServer.RESTMethods.Backup.FetchRemoteLogData (Duplicati.Server.Serialization.Interface.IBackup backup, Duplicati.Server.WebServer.RESTMethods.RequestInfo info) [0x00006] in <30a34d71126b48248d040dda634ddad9>:0

at Duplicati.Server.WebServer.RESTMethods.Backup.GET (System.String key, Duplicati.Server.WebServer.RESTMethods.RequestInfo info) [0x001ed] in <30a34d71126b48248d040dda634ddad9>:0

at Duplicati.Server.WebServer.RESTHandler.DoProcess (Duplicati.Server.WebServer.RESTMethods.RequestInfo info, System.String method, System.String module, System.String key) [0x00146] in <30a34d71126b48248d040dda634ddad9>:0

And:

System.ObjectDisposedException: Can not write to a closed TextWriter.

at System.IO.StreamWriter.Flush (System.Boolean flushStream, System.Boolean flushEncoder) [0x00008] in <d636f104d58046fd9b195699bcb1a744>:0

at System.IO.StreamWriter.Flush () [0x00006] in <d636f104d58046fd9b195699bcb1a744>:0

at Duplicati.Server.WebServer.RESTHandler.DoProcess (Duplicati.Server.WebServer.RESTMethods.RequestInfo info, System.String method, System.String module, System.String key) [0x003bc] in <30a34d71126b48248d040dda634ddad9>:0
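For anyone who wants to reproduce the first error outside Duplicati, here is a minimal Python sketch of how I understand it. It assumes the job database is a plain SQLite file in the default rollback-journal mode. One connection holds an exclusive lock, and a second, read-only connection then fails with the same “database is locked” message. The function names and the demo file are hypothetical and not part of Duplicati.

```python
import os
import sqlite3
import tempfile
from pathlib import Path


def is_locked_for_reading(db_path, timeout_s=0.5):
    """Return True if a read-only query fails because the file is locked."""
    # Read-only URI so the probe never takes a write lock itself.
    uri = Path(db_path).resolve().as_uri() + "?mode=ro"
    con = sqlite3.connect(uri, uri=True, timeout=timeout_s)
    try:
        con.execute("SELECT count(*) FROM sqlite_master").fetchone()
        return False
    except sqlite3.OperationalError as exc:
        if "locked" in str(exc).lower():
            return True
        raise  # some other problem (missing file, corruption, ...)
    finally:
        con.close()


def demo():
    """Hold an exclusive lock, probe, release the lock, probe again."""
    path = os.path.join(tempfile.mkdtemp(), "demo.sqlite")
    holder = sqlite3.connect(path, isolation_level=None)  # autocommit mode
    holder.execute("CREATE TABLE t (x INTEGER)")
    holder.execute("BEGIN EXCLUSIVE")  # stands in for the stuck backup job
    while_held = is_locked_for_reading(path, timeout_s=0.2)
    holder.execute("ROLLBACK")  # lock released
    after_release = is_locked_for_reading(path, timeout_s=0.2)
    holder.close()
    return while_held, after_release


if __name__ == "__main__":
    print(demo())
```

Pointing `is_locked_for_reading` at the real job database while the backup is stuck should show whether the lock is held at that moment. I kept the probe read-only so that it cannot make the situation worse.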

I’m running version 2.0.7.1_beta_2023-05-25. I originally had it backing up everything on the system where the container is running, so it would eventually have attempted to back up the in-use database file. I have since:

- Excluded the entire container path for Duplicati

- Moved the file to a different location (and set the `dbpath` flag).

- Moved the file back after it was having trouble reading the remote logs even before the backup was running.
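Since the file has moved around, one thing worth checking is which processes actually have the database open. This is a rough, Linux-only sketch that scans `/proc/<pid>/fd`. The function name is hypothetical, and it has to run inside the container (or on the host with enough privileges) to see the Duplicati process.

```python
import os
from typing import List


def pids_holding(path: str) -> List[int]:
    """Return PIDs that have `path` open, based on /proc/<pid>/fd symlinks."""
    target = os.path.realpath(path)
    holders = []
    for entry in os.listdir("/proc"):
        if not entry.isdigit():
            continue  # not a process directory
        fd_dir = os.path.join("/proc", entry, "fd")
        try:
            fds = os.listdir(fd_dir)
        except OSError:
            continue  # process exited, or we lack permission
        for fd in fds:
            try:
                if os.readlink(os.path.join(fd_dir, fd)) == target:
                    holders.append(int(entry))
                    break
            except OSError:
                continue  # descriptor closed while we were looking
    return sorted(holders)
```

If more than one PID shows up for the job database, or a PID other than the Duplicati server, that would be a strong hint about where the lock comes from.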

If there’s more information I can provide, let me know. This is 100% reproducible with my current setup, and I don’t expect that to change soon: I’ve read every search result for the quoted terms in the title and nothing really helped.

Here’s the system info:

System properties

- APIVersion : 1

- PasswordPlaceholder : **********

- ServerVersion : 2.0.7.1

- ServerVersionName : 2.0.7.1_beta_2023-05-25

- ServerVersionType : Beta

- StartedBy : Server

- BaseVersionName : 2.0.7.1_beta_2023-05-25

- DefaultUpdateChannel : Beta

- DefaultUsageReportLevel : Information

- ServerTime : 2023-08-25T07:17:33.950878-07:00

- OSType : Linux

- DirectorySeparator : /

- PathSeparator : :

- CaseSensitiveFilesystem : true

- MonoVersion : 6.12.0.200

- MachineName : 792b30785b1b

- UserName : abc

- NewLine :

- CLRVersion : 4.0.30319.42000

- CLROSInfo : {“Platform”:“Unix”,“ServicePack”:“”,“Version”:“5.19.17.0”,“VersionString”:“Unix 5.19.17.0”}

- ServerModules :

- UsingAlternateUpdateURLs : false

- LogLevels : [“ExplicitOnly”,“Profiling”,“Verbose”,“Retry”,“Information”,“DryRun”,“Warning”,“Error”]

- SuppressDonationMessages : false

- SpecialFolders : [{“ID”:“%MY_DOCUMENTS%”,“Path”:“/config”},{“ID”:“%HOME%”,“Path”:“/config”}]

- BrowserLocale : {“Code”:“en-US”,“EnglishName”:“English (United States)”,“DisplayName”:“English (United States)”}

- SupportedLocales : [{“Code”:“bn”,“EnglishName”:“Bangla”,“DisplayName”:“বাংলা”},{“Code”:“ca”,“EnglishName”:“Catalan”,“DisplayName”:“català”},{“Code”:“cs”,“EnglishName”:“Czech”,“DisplayName”:“čeština”},{“Code”:“da”,“EnglishName”:“Danish”,“DisplayName”:“dansk”},{“Code”:“de”,“EnglishName”:“German”,“DisplayName”:“Deutsch”},{“Code”:“en”,“EnglishName”:“English”,“DisplayName”:“English”},{“Code”:“en-GB”,“EnglishName”:“English (United Kingdom)”,“DisplayName”:“English (United Kingdom)”},{“Code”:“es”,“EnglishName”:“Spanish”,“DisplayName”:“español”},{“Code”:“fi”,“EnglishName”:“Finnish”,“DisplayName”:“suomi”},{“Code”:“fr”,“EnglishName”:“French”,“DisplayName”:“français”},{“Code”:“fr-CA”,“EnglishName”:“French (Canada)”,“DisplayName”:“français (Canada)”},{“Code”:“hu”,“EnglishName”:“Hungarian”,“DisplayName”:“magyar”},{“Code”:“it”,“EnglishName”:“Italian”,“DisplayName”:“italiano”},{“Code”:“ja-JP”,“EnglishName”:“Japanese (Japan)”,“DisplayName”:“日本語 (日本)”},{“Code”:“ko”,“EnglishName”:“Korean”,“DisplayName”:“한국어”},{“Code”:“lt”,“EnglishName”:“Lithuanian”,“DisplayName”:“lietuvių”},{“Code”:“lv”,“EnglishName”:“Latvian”,“DisplayName”:“latviešu”},{“Code”:“nl-NL”,“EnglishName”:“Dutch (Netherlands)”,“DisplayName”:“Nederlands (Nederland)”},{“Code”:“pl”,“EnglishName”:“Polish”,“DisplayName”:“polski”},{“Code”:“pt”,“EnglishName”:“Portuguese”,“DisplayName”:“português”},{“Code”:“pt-BR”,“EnglishName”:“Portuguese (Brazil)”,“DisplayName”:“português (Brasil)”},{“Code”:“ro”,“EnglishName”:“Romanian”,“DisplayName”:“română”},{“Code”:“ru”,“EnglishName”:“Russian”,“DisplayName”:“русский”},{“Code”:“sk”,“EnglishName”:“Slovak”,“DisplayName”:“slovenčina”},{“Code”:“sk-SK”,“EnglishName”:“Slovak (Slovakia)”,“DisplayName”:“slovenčina (Slovensko)”},{“Code”:“sv-SE”,“EnglishName”:“Swedish (Sweden)”,“DisplayName”:“svenska (Sverige)”},{“Code”:“th”,“EnglishName”:“Thai”,“DisplayName”:“ไทย”},{“Code”:“zh-CN”,“EnglishName”:“Chinese (Simplified)”,“DisplayName”:“中文 (中国)”},{“Code”:“zh-HK”,“EnglishName”:“Chinese (Traditional, Hong Kong SAR China)”,“DisplayName”:“中文 (中国香港特别行政区)”},{“Code”:“zh-TW”,“EnglishName”:“Chinese (Traditional)”,“DisplayName”:“中文 (台湾)”}]

- BrowserLocaleSupported : true

- backendgroups : {“std”:{“ftp”:null,“ssh”:null,“webdav”:null,“openstack”:“OpenStack Object Storage / Swift”,“s3”:“S3 Compatible”,“aftp”:“FTP (Alternative)”},“local”:{“file”:null},“prop”:{“e2”:null,“s3”:null,“azure”:null,“googledrive”:null,“onedrive”:null,“onedrivev2”:null,“sharepoint”:null,“msgroup”:null,“cloudfiles”:null,“gcs”:null,“openstack”:null,“hubic”:null,“b2”:null,“mega”:null,“idrive”:null,“box”:null,“od4b”:null,“mssp”:null,“dropbox”:null,“sia”:null,“storj”:null,“tardigrade”:null,“jottacloud”:null,“rclone”:null,“cos”:null}}

- GroupTypes : [“Local storage”,“Standard protocols”,“Proprietary”,“Others”]

- Backend modules: aftp azure b2 box cloudfiles dropbox ftp file googledrive gcs hubic jottacloud mega msgroup onedrivev2 sharepoint openstack rclone s3 ssh od4b mssp sia storj tahoe tardigrade cos webdav e2

- Compression modules: zip 7z

- Encryption modules: aes gpg

Server state properties

- lastEventId : 374

- lastDataUpdateId : 12

- lastNotificationUpdateId : 0

- estimatedPauseEnd : 0001-01-01T00:00:00

- activeTask : {“Item1”:8,“Item2”:“1”}

- programState : Running

- lastErrorMessage :

- connectionState : connected

- xsfrerror : false

- connectionAttemptTimer : 0

- failedConnectionAttempts : 0

- lastPgEvent : {“BackupID”:“1”,“TaskID”:8,“BackendAction”:“Put”,“BackendPath”:“ParallelUpload”,“BackendFileSize”:1244938195,“BackendFileProgress”:1244938195,“BackendSpeed”:24431,“BackendIsBlocking”:false,“CurrentFilename”:null,“CurrentFilesize”:0,“CurrentFileoffset”:0,“CurrentFilecomplete”:false,“Phase”:“Backup_Delete”,“OverallProgress”:0,“ProcessedFileCount”:927666,“ProcessedFileSize”:4244879640226,“TotalFileCount”:927668,“TotalFileSize”:4244879640520,“StillCounting”:false}

- updaterState : Waiting

- updatedVersion :

- updateReady : false

- updateDownloadProgress : 0

- proposedSchedule : [{“Item1”:“1”,“Item2”:“2023-08-28T08:00:00Z”}]

- schedulerQueueIds :

- pauseTimeRemain : 0
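For what it’s worth, the `lastPgEvent` entry in the server state can be read as follows. This is only a quick sketch: the field names and values are copied from the dump, while the `remaining` helper is my own naming.

```python
# Values copied from the lastPgEvent entry in the server state dump.
last_pg_event = {
    "Phase": "Backup_Delete",
    "ProcessedFileCount": 927666,
    "TotalFileCount": 927668,
    "ProcessedFileSize": 4244879640226,
    "TotalFileSize": 4244879640520,
}


def remaining(event):
    """Return (files_left, bytes_left) for a progress event dict."""
    files_left = event["TotalFileCount"] - event["ProcessedFileCount"]
    bytes_left = event["TotalFileSize"] - event["ProcessedFileSize"]
    return files_left, bytes_left


files_left, bytes_left = remaining(last_pg_event)
print(last_pg_event["Phase"], files_left, bytes_left)  # Backup_Delete 2 294
```

So by the time the job reaches the delete phase, only 2 files (294 bytes) are still counted as unprocessed. That matches what I see: the upload itself finishes and the hang is in the phase afterwards.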