I can’t speak for developers. To me this is a rare and new “nuisance” warning, not a technical problem, so my guess is it’s too new, does too little damage, and has a workaround. Many other problems do not. More:

From a philosophy point of view, Duplicati seems to me (and I am just a user) to be one of those programs that does not force a lot of restrictions on the user, but gives them large latitude to do the backup they want. Some other backup programs take an opposite approach, taking responsibility and capability from the user.

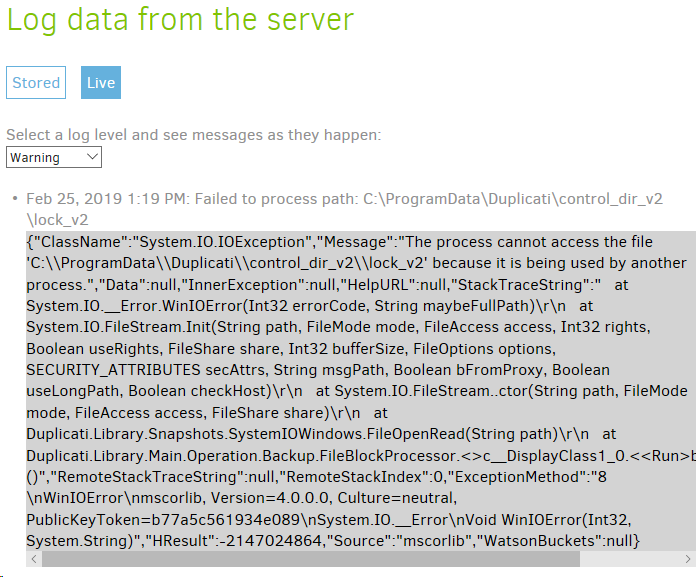

From a problem point of view, this appears to have only become a (rare) warning in the recent 2.0.4.5 beta (with some found in canary releases). It’s often near a #1400 error. Both might be 2.0.3.6 new-code issues.

New warnings/errors occurring after updating to 2.0.4.5

Errors are more serious than warnings, and enough of them are still lingering that rare new warnings are not likely to be high on the priority list for scarce developer time. From a technical point of view, removing this could mean making filters more dynamic, because the database name changes from job to job. Current filters appear fixed, but do at least customize several paths to the user. The database path varies and can’t be shown in the format below:

    C:\Program Files\Duplicati 2>Duplicati.CommandLine.exe help filter-groups

    Duplicati has several built-in groups of filters, which include commonly
    excluded files and folders for different operating systems. These sets can be
    specified via the --include/--exclude parameter by putting their name in
    {curly brackets}.

    None: Selects no filters.

    DefaultExcludes: A set of default exclude filters, currently evaluates to:
      DefaultIncludes, SystemFiles,DefaultIncludes,
      OperatingSystem,DefaultIncludes, CacheFiles,DefaultIncludes,
      TemporaryFiles,DefaultIncludes, Applications.

    DefaultIncludes: A set of default include filters, currently evaluates to:
      None.

    SystemFiles: Files that are owned by the system or not suited to be backed
    up. This includes any operating system reported protected files. Most
    users should at least apply these filters.
      Aliases: Sys,System
      *UsrClass.dat*
      *\I386*
      *\Internet Explorer\
      *\Microsoft*\RecoveryStore*
      *\Microsoft*\Windows\*.edb
      *\Microsoft*\Windows\*.log
      *\Microsoft*\Windows\Cookies*
      *\MSOCache*
      *\NTUSER*
      *\ntuser.dat*
      *\RECYCLER\
      ?:\hiberfil.sys
      ?:\pagefile.sys
      ?:\swapfile.sys
      ?:\System Volume Information\
      ?:\Windows\Installer*
      ?:\Windows\Temp*
      C:\ProgramData\Microsoft\Network\Downloader\*
      C:\ProgramData\Microsoft\Windows\WER\*
      C:\Users\xxx\AppData\Local\Temp\*
      C:\Users\xxx\index.dat
      C:\WINDOWS\memory.dmp
      C:\WINDOWS\Minidump\*
      C:\WINDOWS\netlogon.chg
      C:\WINDOWS\softwaredistribution\*.*
      C:\WINDOWS\system32\LogFiles\WMI\RtBackup\*.*
      C:\Windows\system32\MSDtc\MSDTC.LOG
      C:\Windows\system32\MSDtc\trace\dtctrace.log

    OperatingSystem: Files that belong to the operating system. These files
    are restored when the operating system is re-installed.
      Aliases: OS
      *\Recent\
      ?:\autoexec.bat
      ?:\Config.Msi*
      C:\Users\xxx\AppData\Roaming\Microsoft\Windows\Recent\
      C:\WINDOWS.old\
      C:\WINDOWS\

    TemporaryFiles: Files and folders that are known to be storage of
    temporary data.
      Aliases: Temp,TempFiles,TempFolders,TemporaryFolders,Tmp
      *.tmp
      *.tmp\
      *\$RECYCLE.BIN\
      *\AppData\Local\Temp*
      *\AppData\Temp*
      *\Local Settings\Temp*
      ?:\Windows\Temp*
      C:\Users\xxx\AppData\Local\Temp\

    CacheFiles: Files and folders that are known cache locations for the
    operating system and various applications
      Aliases: Cache,CacheFolders,Caches
      *\AppData\Local\AMD\DxCache\
      *\AppData\Local\Apple Computer\Mobile Sync\
      *\AppData\Local\Comms\UnistoreDB\
      *\AppData\Local\ElevatedDiagnostics\
      *\AppData\Local\Microsoft\VSCommon\*SQM*
      *\AppData\Local\Microsoft\Windows Store\
      *\AppData\Local\Microsoft\Windows\Explorer\
      *\AppData\Local\Microsoft\Windows\INetCache\
      *\AppData\Local\Microsoft\Windows\UPPS\
      *\AppData\Local\Microsoft\Windows\WebCache*
      *\AppData\Local\Packages\
      *\Application Data\Apple Computer\Mobile Sync\
      *\Application Data\Application Data*
      *\Dropbox\Dropbox.exe.log
      *\Dropbox\QuitReports\
      *\Duplicati\control_dir_v2\
      *\Google\Chrome\*cache*
      *\Google\Chrome\*Current*
      *\Google\Chrome\*LOCK*
      *\Google\Chrome\Safe Browsing*
      *\Google\Chrome\User Data\Default\Cookies
      *\Google\Chrome\User Data\Default\Cookies-journal
      *\iPhoto Library\iPod Photo Cache\
      *\Local Settings\History\
      *\Mozilla\Firefox\*cache*
      *\OneDrive\.849C9593-D756-4E56-8D6E-42412F2A707B
      *\Safari\Library\Caches\
      *\Temporary Internet Files\
      *\Thumbs.db
      C:\Users\xxx\AppData\Local\Microsoft\Windows\History\
      C:\Users\xxx\AppData\Local\Microsoft\Windows\INetCache\
      C:\Users\xxx\AppData\Local\Microsoft\Windows\INetCookies\
      [.*\\(cookies|permissions).sqllite(-.{3})?]

    Applications: Installed programs and their libraries, but not their
    settings.
      Aliases: App,Application,Apps
      C:\Program Files (x86)\
      C:\Program Files\
      C:\ProgramData\Microsoft\Windows\Start Menu\Programs\Administrative Tools\
      C:\Users\xxx\AppData\Roaming\Microsoft\Windows\Start Menu\Programs\Administrative Tools\

    C:\Program Files\Duplicati 2>

Filtering has been found to cause quite a large loss of performance, but that’s been improved in 2.0.4.10:

Improved performance of filters by around 10x, thanks @warwickmm

I’m not sure how much performance loss filtering out the job’s own database would cause. If need be, the removal could perhaps be done by special-casing comparison instead of the usual filtering mechanism…
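To make the special-casing idea concrete, here is a rough sketch in Python. It is only an illustration of the approach, not Duplicati code (Duplicati is written in C#), and every name in it is made up for the example: `same_windows_path`, `should_back_up`, the `passes_filters` callback standing in for the normal filter mechanism, and the database path itself. The point is that the job's own database is checked with one direct comparison before any filter runs, so the fixed filter lists never need to know the per-job database name.

```python
import ntpath  # Windows path rules, usable on any platform


def same_windows_path(a: str, b: str) -> bool:
    """Compare two Windows-style paths, ignoring case and slash direction."""
    def norm(p: str) -> str:
        return ntpath.normcase(ntpath.normpath(p))
    return norm(a) == norm(b)


def should_back_up(path: str, job_database_path: str, passes_filters) -> bool:
    """Decide whether a source file goes into the backup.

    Hypothetical helper: `passes_filters` stands in for the usual
    include/exclude filter evaluation.
    """
    # Special case: a single cheap comparison, done before the filters.
    if same_windows_path(path, job_database_path):
        return False
    return passes_filters(path)


if __name__ == "__main__":
    # Made-up database path, for illustration only.
    db = r"C:\Users\xxx\AppData\Local\Duplicati\EXAMPLE.sqlite"
    accept_all = lambda p: True
    print(should_back_up(db, db, accept_all))
    print(should_back_up(r"C:\Users\xxx\Documents\notes.txt", db, accept_all))
```

A comparison like this costs one string check per file, which is presumably far cheaper than adding another pattern to the filter list, but I have not measured either.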

It seems a reasonable idea to me for new users, but if there are users who now back up their database (to the extent that such a backup is possible), they might be hugely surprised if Duplicati suddenly stops doing that.

To me, this has more the flavor of an issue than a new Feature, but you can make a case for it either way, or possibly someone will read this discussion. Ultimately, some volunteer has to decide to go off and do it.