Hi Folks,

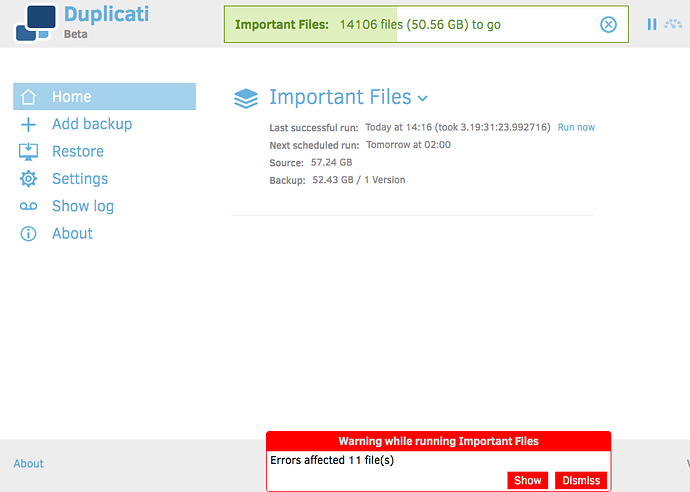

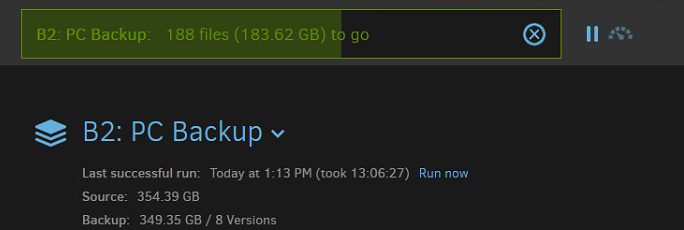

I've just started using Duplicati with Backblaze B2. It spent 3 days backing up 50GB of data and finally finished, but now it seems to be backing up another 50GB…?

It’s running on Windows Server 2016 backing up a folder on a ReFS drive.

The error messages are of this ilk:

Error reported while accessing file: D:\Important Files\Shared\.TemporaryItems\folders.1033397298\Cleanup At Startup\.BAH.HIiu2\

System.UnauthorizedAccessException: Access to the path 'D:\Important Files\Shared\.TemporaryItems\folders.1033397298\Cleanup At Startup\.BAH.HIiu2' is denied.

at System.IO.__Error.WinIOError(Int32 errorCode, String maybeFullPath)

at System.IO.FileSystemEnumerableIterator`1.CommonInit()

at System.IO.Directory.GetFiles(String path)

at Duplicati.Library.Snapshots.NoSnapshotWindows.ListFiles(String folder)

at Duplicati.Library.Utility.Utility.<EnumerateFileSystemEntries>d__22.MoveNext()

Failed to process metadata for "D:\Important Files\Shared\.TemporaryItems\folders.1033397298\Cleanup At Startup\.BAH.HIiu2\", storing empty metadata

System.UnauthorizedAccessException: Attempted to perform an unauthorized operation.

at System.Security.AccessControl.Win32.GetSecurityInfo(ResourceType resourceType, String name, SafeHandle handle, AccessControlSections accessControlSections, RawSecurityDescriptor& resultSd)

at System.Security.AccessControl.NativeObjectSecurity.CreateInternal(ResourceType resourceType, Boolean isContainer, String name, SafeHandle handle, AccessControlSections includeSections, Boolean createByName, ExceptionFromErrorCode exceptionFromErrorCode, Object exceptionContext)

at System.Security.AccessControl.FileSystemSecurity..ctor(Boolean isContainer, String name, AccessControlSections includeSections, Boolean isDirectory)

at System.Security.AccessControl.DirectorySecurity..ctor(String name, AccessControlSections includeSections)

at Duplicati.Library.Snapshots.SystemIOWindows.GetAccessControlDir(String path)

at Duplicati.Library.Snapshots.SystemIOWindows.GetMetadata(String path, Boolean isSymlink, Boolean followSymlink)

at Duplicati.Library.Main.Operation.BackupHandler.GenerateMeta

DeletedFiles: 0

DeletedFolders: 0

ModifiedFiles: 0

ExaminedFiles: 18289

OpenedFiles: 18289

AddedFiles: 18278

SizeOfModifiedFiles: 0

SizeOfAddedFiles: 61460771057

SizeOfExaminedFiles: 61460771057

SizeOfOpenedFiles: 61460771057

NotProcessedFiles: 0

AddedFolders: 1677

TooLargeFiles: 0

FilesWithError: 11

ModifiedFolders: 0

ModifiedSymlinks: 0

AddedSymlinks: 0

DeletedSymlinks: 0

PartialBackup: False

Dryrun: False

MainOperation: Backup

CompactResults: null

DeleteResults:

DeletedSets: []

Dryrun: False

MainOperation: Delete

CompactResults: null

ParsedResult: Success

EndTime: 27/08/2017 13:14:48

BeginTime: 27/08/2017 13:14:43

Duration: 00:00:05.4448338

BackendStatistics:

RemoteCalls: 2159

BytesUploaded: 56298045089

BytesDownloaded: 54256023

FilesUploaded: 2149

FilesDownloaded: 3

FilesDeleted: 0

FoldersCreated: 0

RetryAttempts: 5

UnknownFileSize: 0

UnknownFileCount: 0

KnownFileCount: 2149

KnownFileSize: 56298045089

LastBackupDate: 23/08/2017 18:45:14

BackupListCount: 1

TotalQuotaSpace: 0

FreeQuotaSpace: 0

AssignedQuotaSpace: -1

ParsedResult: Success

RepairResults: null

TestResults:

MainOperation: Test

Verifications: [

Key: duplicati-20170823T174514Z.dlist.zip.aes

Value: [],

Key: duplicati-i37115728cf9d45d5b4ab9d787a24cd15.dindex.zip.aes

Value: [],

Key: duplicati-b9d1f1af1bdc54759855c285dcdf9d255.dblock.zip.aes

Value: []

]

ParsedResult: Success

EndTime: 27/08/2017 13:16:38

BeginTime: 27/08/2017 13:15:23

Duration: 00:01:15.4604669

ParsedResult: Error

EndTime: 27/08/2017 13:16:38

BeginTime: 23/08/2017 17:45:14

Duration: 3.19:31:23.9927161

Messages: [

Renaming "duplicati-iedc7df7c421949cdbc91fc5b26e1eff3.dindex.zip.aes" to "duplicati-ib1dc2f050b98487cb0da3478fae43f79.dindex.zip.aes",

Renaming "duplicati-b0a46cd4d76964904b413713246a2c464.dblock.zip.aes" to "duplicati-bc53646fc2f04444388c4fe7d844b306e.dblock.zip.aes",

Renaming "duplicati-ba6764df2933d4daeb9aa5d9120bb8d7a.dblock.zip.aes" to "duplicati-bee9903efd36446839d0774e0ca5851c7.dblock.zip.aes",

Renaming "duplicati-bf285c972b7dc405089d99c4e9b92f8bc.dblock.zip.aes" to "duplicati-b18b88a6f63d745958212c8c75d723cd6.dblock.zip.aes",

Renaming "duplicati-i663144296ab2467e8690af26fccb35eb.dindex.zip.aes" to "duplicati-id5ec4c0e1ffb4dbbaf9fe1ab667edc93.dindex.zip.aes",

...

]

Warnings: [

Failed to process metadata for "D:\Important Files\Shared\.TemporaryItems\folders.1033397298\Cleanup At Startup\.BAH.HIiu2\", storing empty metadata => Attempted to perform an unauthorized operation.,

Error reported while accessing file: D:\Important Files\Shared\.TemporaryItems\folders.1033397298\Cleanup At Startup\.BAH.HIiu2\ => Access to the path 'D:\Important Files\Shared\.TemporaryItems\folders.1033397298\Cleanup At Startup\.BAH.HIiu2' is denied.,

Error reported while accessing file: D:\Important Files\Shared\.TemporaryItems\folders.1033397298\Cleanup At Startup\.BAH.HIiu2\ => Access to the path 'D:\Important Files\Shared\.TemporaryItems\folders.1033397298\Cleanup At Startup\.BAH.HIiu2' is denied.,

Failed to process metadata for "D:\Important Files\Home\.DS_Store", storing empty metadata => Attempted to perform an unauthorized operation.,

Failed to process path: D:\Important Files\Home\.DS_Store => Access to the path 'D:\Important Files\Home\.DS_Store' is denied.,

...

]

Errors: []

removing file listed as Deleting: duplicati-i663144296ab2467e8690af26fccb35eb.dindex.zip.aes

I suspect I shouldn’t be too worried about the 11 files with errors? But can anyone explain why it has started backing up another 50GB when most of this data (if not all) wouldn’t have changed?

Thanks