To expand final error from 7 A.M. backup (while the Wi-Fi was flaky, so maybe caused errors):

2025-02-16 07:34:58 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Started: duplicati-verification.json (102.96 KiB)

2025-02-16 07:35:15 -05 - [Profiling-Timer.Finished-Duplicati.Library.Main.BackendManager-RemoteOperationPut]: RemoteOperationPut took 0:00:00:17.310

2025-02-16 07:35:15 -05 - [Retry-Duplicati.Library.Main.BackendManager-RetryPut]: Operation Put with file duplicati-verification.json attempt 1 of 0 failed with message: An error occurred while sending the request.

System.Net.Http.HttpRequestException: An error occurred while sending the request.

---> System.Net.Http.HttpIOException: The response ended prematurely. (ResponseEnded)

at System.Net.Http.HttpConnection.SendAsync(HttpRequestMessage request, Boolean async, CancellationToken cancellationToken)

--- End of inner exception stack trace ---

at System.Net.Http.HttpConnection.SendAsync(HttpRequestMessage request, Boolean async, CancellationToken cancellationToken)

at System.Net.Http.HttpConnectionPool.SendWithVersionDetectionAndRetryAsync(HttpRequestMessage request, Boolean async, Boolean doRequestAuth, CancellationToken cancellationToken)

at System.Net.Http.RedirectHandler.SendAsync(HttpRequestMessage request, Boolean async, CancellationToken cancellationToken)

at Microsoft.Extensions.Http.Logging.LoggingHttpMessageHandler.<SendCoreAsync>g__Core|5_0(HttpRequestMessage request, Boolean useAsync, CancellationToken cancellationToken)

at Microsoft.Extensions.Http.Logging.LoggingScopeHttpMessageHandler.<SendCoreAsync>g__Core|5_0(HttpRequestMessage request, Boolean useAsync, CancellationToken cancellationToken)

at System.Net.Http.HttpClient.<SendAsync>g__Core|83_0(HttpRequestMessage request, HttpCompletionOption completionOption, CancellationTokenSource cts, Boolean disposeCts, CancellationTokenSource pendingRequestsCts, CancellationToken originalCancellationToken)

at Duplicati.Library.JsonWebHelperHttpClient.GetResponseAsync(HttpRequestMessage req, CancellationToken cancellationToken)

at Duplicati.Library.JsonWebHelperHttpClient.ReadJsonResponseAsync[T](HttpRequestMessage req, CancellationToken cancellationToken)

at Duplicati.Library.JsonWebHelperHttpClient.GetJsonDataAsync[T](String url, CancellationToken cancellationToken, Action`1 setup)

at Duplicati.Library.JsonWebHelperHttpClient.PostAndGetJsonDataAsync[T](String url, Object item, CancellationToken cancellationToken)

at Duplicati.Library.Backend.Backblaze.B2.GetUploadUrlDataAsync(CancellationToken cancellationToken)

at Duplicati.Library.Backend.Backblaze.B2.PutAsync(String remotename, Stream stream, CancellationToken cancelToken)

at Duplicati.Library.Main.BackendManager.DoPutAsync(FileEntryItem item)

at Duplicati.Library.Utility.Utility.Await(Task task)

at Duplicati.Library.Main.BackendManager.ThreadRun()

2025-02-16 07:35:15 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Failed: duplicati-verification.json (102.96 KiB)

For a friendlier view of 10 A.M. backup with missing files, tags with Upload or BackendEvent:

2025-02-16 10:49:39 -05 - [Profiling-Timer.Begin-Duplicati.Library.Main.BackendManager-RemoteOperationList]: Starting - RemoteOperationList

2025-02-16 10:49:39 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: List - Started: ()

2025-02-16 10:49:40 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: List - Completed: (506 bytes)

2025-02-16 10:49:40 -05 - [Profiling-Timer.Finished-Duplicati.Library.Main.BackendManager-RemoteOperationList]: RemoteOperationList took 0:00:00:01.251

2025-02-16 10:50:54 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Started: duplicati-b8deaff454e8c46d9b0524a8d3d41573b.dblock.zip.aes (50.01 MiB)

2025-02-16 10:50:56 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Started: duplicati-b5a714fb2fee94bf1b6fb131d48d23a65.dblock.zip.aes (49.98 MiB)

2025-02-16 10:51:11 -05 - [Profiling-Duplicati.Library.Main.Operation.Backup.BackendUploader-UploadSpeed]: Uploaded 50.01 MiB in 00:00:16.7923503, 2.98 MiB/s

2025-02-16 10:51:11 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Completed: duplicati-b8deaff454e8c46d9b0524a8d3d41573b.dblock.zip.aes (50.01 MiB)

2025-02-16 10:51:14 -05 - [Profiling-Duplicati.Library.Main.Operation.Backup.BackendUploader-UploadSpeed]: Uploaded 49.98 MiB in 00:00:18.2696385, 2.74 MiB/s

2025-02-16 10:51:16 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Completed: duplicati-b5a714fb2fee94bf1b6fb131d48d23a65.dblock.zip.aes (49.98 MiB)

2025-02-16 10:51:17 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Started: duplicati-i162a52604e33461d8844a395e75c97df.dindex.zip.aes (29.23 KiB)

2025-02-16 10:51:17 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Started: duplicati-ib1a923650b104045a91fb8992fb81af1.dindex.zip.aes (24.62 KiB)

2025-02-16 10:51:17 -05 - [Profiling-Duplicati.Library.Main.Operation.Backup.BackendUploader-UploadSpeed]: Uploaded 24.62 KiB in 00:00:00.1768233, 139.25 KiB/s

2025-02-16 10:51:17 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Completed: duplicati-ib1a923650b104045a91fb8992fb81af1.dindex.zip.aes (24.62 KiB)

2025-02-16 10:51:17 -05 - [Profiling-Duplicati.Library.Main.Operation.Backup.BackendUploader-UploadSpeed]: Uploaded 29.23 KiB in 00:00:00.4153594, 70.38 KiB/s

2025-02-16 10:51:17 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Completed: duplicati-i162a52604e33461d8844a395e75c97df.dindex.zip.aes (29.23 KiB)

2025-02-16 10:51:36 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Started: duplicati-b29f1b378849c4e55bd43dbe9da8cf5d7.dblock.zip.aes (22.62 MiB)

2025-02-16 10:51:37 -05 - [Profiling-Timer.Begin-Duplicati.Library.Main.Operation.Backup.UploadRealFilelist-UpdateChangeStatistics]: Starting - UpdateChangeStatistics

2025-02-16 10:51:37 -05 - [Profiling-Timer.Finished-Duplicati.Library.Main.Operation.Backup.UploadRealFilelist-UpdateChangeStatistics]: UpdateChangeStatistics took 0:00:00:00.398

2025-02-16 10:51:37 -05 - [Profiling-Timer.Begin-Duplicati.Library.Main.Operation.Backup.UploadRealFilelist-UploadNewFileset]: Starting - Uploading a new fileset

2025-02-16 10:51:39 -05 - [Profiling-Timer.Finished-Duplicati.Library.Main.Operation.Backup.UploadRealFilelist-UploadNewFileset]: Uploading a new fileset took 0:00:00:01.436

2025-02-16 10:51:40 -05 - [Profiling-Duplicati.Library.Main.Operation.Backup.BackendUploader-UploadSpeed]: Uploaded 22.62 MiB in 00:00:03.8972025, 5.80 MiB/s

2025-02-16 10:51:40 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Completed: duplicati-b29f1b378849c4e55bd43dbe9da8cf5d7.dblock.zip.aes (22.62 MiB)

2025-02-16 10:51:41 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Started: duplicati-i55861405c35f4c569ad171016b5f66d5.dindex.zip.aes (62.22 KiB)

2025-02-16 10:51:41 -05 - [Profiling-Duplicati.Library.Main.Operation.Backup.BackendUploader-UploadSpeed]: Uploaded 62.22 KiB in 00:00:00.2984505, 208.46 KiB/s

2025-02-16 10:51:41 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Completed: duplicati-i55861405c35f4c569ad171016b5f66d5.dindex.zip.aes (62.22 KiB)

2025-02-16 10:51:41 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Started: duplicati-20250216T154934Z.dlist.zip.aes (948.67 KiB)

2025-02-16 10:51:42 -05 - [Profiling-Duplicati.Library.Main.Operation.Backup.BackendUploader-UploadSpeed]: Uploaded 948.67 KiB in 00:00:00.6454318, 1.44 MiB/s

2025-02-16 10:51:42 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Completed: duplicati-20250216T154934Z.dlist.zip.aes (948.67 KiB)

2025-02-16 10:51:44 -05 - [Profiling-Timer.Begin-Duplicati.Library.Main.BackendManager-RemoteOperationNothing]: Starting - RemoteOperationNothing

2025-02-16 10:51:44 -05 - [Profiling-Timer.Finished-Duplicati.Library.Main.BackendManager-RemoteOperationNothing]: RemoteOperationNothing took 0:00:00:00.000

2025-02-16 10:51:44 -05 - [Profiling-Timer.Begin-Duplicati.Library.Main.BackendManager-RemoteOperationPut]: Starting - RemoteOperationPut

2025-02-16 10:51:44 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Started: duplicati-verification.json (104.96 KiB)

2025-02-16 10:51:47 -05 - [Profiling-Duplicati.Library.Main.BackendManager-UploadSpeed]: Uploaded 104.96 KiB in 00:00:02.0918700, 50.18 KiB/s

2025-02-16 10:51:47 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Completed: duplicati-verification.json (104.96 KiB)

2025-02-16 10:51:47 -05 - [Profiling-Timer.Finished-Duplicati.Library.Main.BackendManager-RemoteOperationPut]: RemoteOperationPut took 0:00:00:02.094

2025-02-16 10:51:47 -05 - [Profiling-Timer.Begin-Duplicati.Library.Main.BackendManager-RemoteOperationTerminate]: Starting - RemoteOperationTerminate

2025-02-16 10:51:47 -05 - [Profiling-Timer.Finished-Duplicati.Library.Main.BackendManager-RemoteOperationTerminate]: RemoteOperationTerminate took 0:00:00:00.000

2025-02-16 10:51:47 -05 - [Profiling-Timer.Begin-Duplicati.Library.Main.BackendManager-RemoteOperationList]: Starting - RemoteOperationList

2025-02-16 10:51:47 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: List - Started: ()

2025-02-16 10:51:47 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: List - Completed: (508 bytes)

2025-02-16 10:51:47 -05 - [Profiling-Timer.Finished-Duplicati.Library.Main.BackendManager-RemoteOperationList]: RemoteOperationList took 0:00:00:00.525

Of the two initial roughly 50 MB dblock files, the first vanished somehow, but second made it. Second file that made it was duplicati-ib1a923650b104045a91fb8992fb81af1.dindex.zip.aes. Possibly by coincidence (or maybe there’s a connection?) that’s dindex for successful dblock.

Which aspect? Unfortunately, the notes after it suggest it didn’t repro the odd dlist-after-failure.

I looked at emails from over a year of failures (remember, I’m “asking for it” with no-retry policy):

503 - service_unavailable: no tomes available

503 - service_unavailable: CPU too busy

500 - internal_error

401 - bad_auth_token

System.Net.Sockets.SocketException: A connection attempt failed because the connected party did not properly respond after a period of time, or established connection failed because connected host has failed to respond

System.AggregateException: Unable to read data from the transport connection: The connection was closed.

Details: System.AggregateException: One or more errors occurred. (Error while copying content to a stream. (Error while copying content to a stream.) (One or more errors occurred. (Error while copying content to a stream.)))

---> System.AggregateException: Error while copying content to a stream. (Error while copying content to a stream.) (One or more errors occurred. (Error while copying content to a stream.))

---> System.Net.WebException: Error while copying content to a stream.

---> System.Net.Http.HttpRequestException: Error while copying content to a stream.

---> System.IO.IOException: Unable to write data to the transport connection: An existing connection was forcibly closed by the remote host..

---> System.Net.Sockets.SocketException (10054): An existing connection was forcibly closed by the remote host.

The 503 (which were most common) are actually part of normal operations per B2 upload plan.

Other errors were handled well enough that I don’t remember problems like this one previously.

I’m not sure either of these failures are heading for any answer, so I might give up and clean up.

In order to save downloading and keep state awhile, I’ll practice on a cloned job and destination.

EDIT 1:

Duplicati crashed (seemingly idle except for polling). Server profiling log first messages of today:

2025-02-20 11:19:03 -05 - [Information-Duplicati.Server.Program-ServerStopping]: Server is stopping, tearing down handlers

2025-02-20 11:19:13 -05 - [Information-Duplicati.Server.Program-ServerStopped]: Server has stopped

Seemingly nothing relevant in event logs. Terminal output has no dates as usual, but does have:

[WebSocket] Client closed connection prematurely.

[WebSocket] Client closed connection prematurely.

[WebSocket] Client closed connection prematurely.

[WebSocket] Client closed connection prematurely.

[WebSocket] Client closed connection prematurely.

[WebSocket] Client closed connection prematurely.

Unexpected error: System.ComponentModel.Win32Exception (0x80004005): The operation completed successfully.

at Avalonia.Win32.Interop.Win32Icon.CreateIcon(Bitmap bitmap, PixelPoint hotSpot)

at Avalonia.Win32.Interop.Win32Icon..ctor(Win32Icon original, PixelSize size)

at Avalonia.Win32.TrayIconImpl.UpdateIcon(Boolean remove)

at Avalonia.Controls.TrayIcon.OnPropertyChanged(AvaloniaPropertyChangedEventArgs change)

at Avalonia.AvaloniaObject.RaisePropertyChanged[T](AvaloniaProperty`1 property, Optional`1 oldValue, BindingValue`1 newValue, BindingPriority priority, Boolean isEffectiveValue)

at Avalonia.PropertyStore.EffectiveValue`1.NotifyValueChanged(ValueStore owner, StyledProperty`1 property, T oldValue)

at Avalonia.PropertyStore.EffectiveValue`1.SetAndRaiseCore(ValueStore owner, StyledProperty`1 property, T value, BindingPriority priority, Boolean isOverriddenCurrentValue, Boolean isCoercedDefaultValue)

at Duplicati.GUI.TrayIcon.AvaloniaApp.SetIcon(TrayIcons icon)

at Avalonia.Threading.DispatcherOperation.InvokeCore()

at Avalonia.Threading.DispatcherOperation.Execute()

at Avalonia.Threading.Dispatcher.ExecuteJob(DispatcherOperation job)

at Avalonia.Threading.Dispatcher.ExecuteJobsCore(Boolean fromExplicitBackgroundProcessingCallback)

at Avalonia.Threading.Dispatcher.Signaled()

at Avalonia.Win32.Win32Platform.WndProc(IntPtr hWnd, UInt32 msg, IntPtr wParam, IntPtr lParam)

at Avalonia.Win32.Interop.UnmanagedMethods.DispatchMessage(MSG& lpmsg)

at Avalonia.Win32.Win32DispatcherImpl.RunLoop(CancellationToken cancellationToken)

at Avalonia.Threading.DispatcherFrame.Run(IControlledDispatcherImpl impl)

at Avalonia.Threading.Dispatcher.PushFrame(DispatcherFrame frame)

at Avalonia.Threading.Dispatcher.MainLoop(CancellationToken cancellationToken)

at Avalonia.Controls.ApplicationLifetimes.ClassicDesktopStyleApplicationLifetime.StartCore(String[] args)

at Avalonia.Controls.ApplicationLifetimes.ClassicDesktopStyleApplicationLifetime.Start(String[] args)

at Duplicati.GUI.TrayIcon.AvaloniaRunner.Run(String[] args)

at Duplicati.GUI.TrayIcon.TrayIconBase.Init(String[] args)

at Duplicati.GUI.TrayIcon.AvaloniaRunner.Init(String[] args)

at Duplicati.GUI.TrayIcon.Program.StartTray(String[] _args, Dictionary`2 options, HostedInstanceKeeper hosted, PasswordSource passwordSource, String password, String acceptedHostCertificate)

An error occurred on server tear down: System.Threading.ThreadInterruptedException: Thread was interrupted from a waiting state.

at System.Threading.Monitor.ObjWait(Int32 millisecondsTimeout, Object obj)

at System.Threading.ManualResetEventSlim.Wait(Int32 millisecondsTimeout, CancellationToken cancellationToken)

at System.Threading.Tasks.Task.SpinThenBlockingWait(Int32 millisecondsTimeout, CancellationToken cancellationToken)

at System.Threading.Tasks.Task.InternalWaitCore(Int32 millisecondsTimeout, CancellationToken cancellationToken)

at Duplicati.Server.Program.ShutdownModernWebserver()

at Duplicati.Server.Program.<>c.<Main>b__60_9()

at Duplicati.Server.Program.Main(String[] _args)

however I’m not sure if that was cause or result. I did probably mouse over the TrayIcon as test.

EDIT 2:

This was an attempt to see if following the directions works. I also prefer not to recreate the DB, however I did also try a test (not written up yet) going down that path which had its own quirks.

Backup says:

Found 5 files that are missing from the remote storage, please run repair

Repair says:

The backup storage destination is missing data files. You can either enable --rebuild-missing-dblock-files or run the purge command to remove these files. The following files are missing: duplicati-b8deaff454e8c46d9b0524a8d3d41573b.dblock.zip.aes, duplicati-b29f1b378849c4e55bd43dbe9da8cf5d7.dblock.zip.aes

Set advanced option rebuild-missing-dblock-files

Repair says:

The backup storage destination is missing data files. You can either enable --rebuild-missing-dblock-files or run the purge command to remove these files. The following files are missing: duplicati-b8deaff454e8c46d9b0524a8d3d41573b.dblock.zip.aes, duplicati-b29f1b378849c4e55bd43dbe9da8cf5d7.dblock.zip.aes

So try the other path, ignoring likely incorrect purge command direction. It almost certainly means purge-broken-files. Purge is very different.

list-broken-files from Commandline says:

Listing remote folder …

0 : 2/16/2025 10:49:34 AM (7 match(es))

and then actually shows 6 lines. Seems wrong.

purge-broken-files from Commandline says:

Listing remote folder …

Uploading file duplicati-20250216T154935Z.dlist.zip.aes (947.93 KiB) …

Deleting file duplicati-20250216T154934Z.dlist.zip.aes …

and the Verbose live log again says it’s:

Feb 21, 2025 8:05 AM: Purging 7 file(s) from fileset 2/16/2025 10:49:34 AM

and it itemizes each, but only 6 of them.

This isn’t a live log omission. Profiling log to file also shows 6 purges.

Verify files says:

(log says success)

Repair says:

(log says success)

BUT rclone --dry-run sync shows two dindex files were uploaded, pointing at missing dblocks.

What’s annoying is the reuploads are not in the RemoteOperation table. Looking at Data, they

first show on a list-broken-files after Repair, and I found the Repair upload in the profiling log.

is where I wrote up primarily weirdness about failed Repair with rebuild-missing-dblock-files.

EDIT 3:

I turned off rebuild-missing-dblock-files because it was harmful (beyond merely ineffective).

Recreate button got a red popup error. The log does exist and it shows what was expected:

- 2025-02-21 19:53:27 -05 - [Error-Duplicati.Library.Main.Operation.RecreateDatabaseHandler-MissingFileDetected]: Remote file referenced as duplicati-b8deaff454e8c46d9b0524a8d3d41573b.dblock.zip.aes by duplicati-i162a52604e33461d8844a395e75c97df.dindex.zip.aes, but not found in list, registering a missing remote file

- 2025-02-21 19:53:36 -05 - [Error-Duplicati.Library.Main.Operation.RecreateDatabaseHandler-MissingFileDetected]: Remote file referenced as duplicati-b29f1b378849c4e55bd43dbe9da8cf5d7.dblock.zip.aes by duplicati-i55861405c35f4c569ad171016b5f66d5.dindex.zip.aes, but not found in list, registering a missing remote file

- 2025-02-21 19:56:18 -05 - [Error-Duplicati.Library.Main.Controller-FailedOperation]: The operation Repair has failed with error: Recreated database has missing blocks and 1 broken filelists. Consider using “list-broken-files” and “purge-broken-files” to purge broken data from the remote store and the database. UserInformationException: Recreated database has missing blocks and 1 broken filelists. Consider using “list-broken-files” and “purge-broken-files” to purge broken data from the remote store and the database.

The bad references are in replacement dindex files that Repair somehow decided to reupload, not noticing that associated dblock wasn’t there. The missing files are from the original issue where files thought to be uploaded somehow never showed up.

EDIT 4:

I was trying to track down a problem where purge-broken-files says:

Uploading file duplicati-20250216T122101Z.dlist.zip.aes (948.40 KiB) ...

Deleting file duplicati-20250216T122100Z.dlist.zip.aes ...

The operation PurgeBrokenFiles has failed with error: Found 1 file(s) with missing blocklist hashes => Found 1 file(s) with missing blocklist hashes

System.IO.InvalidDataException: Found 1 file(s) with missing blocklist hashes

at Duplicati.Library.Main.Database.LocalDatabase.VerifyConsistency(Int64 blocksize, Int64 hashsize, Boolean verifyfilelists, IDbTransaction transaction)

at Duplicati.Library.Main.Operation.PurgeBrokenFilesHandler.Run(IFilter filter)

at Duplicati.Library.Main.Controller.<>c__DisplayClass43_0.<PurgeBrokenFiles>b__0(PurgeBrokenFilesResults result)

at Duplicati.Library.Main.Controller.RunAction[T](T result, String[]& paths, IFilter& filter, Action`1 method)

at Duplicati.Library.Main.Controller.RunAction[T](T result, Action`1 method)

at Duplicati.Library.Main.Controller.PurgeBrokenFiles(IFilter filter)

at Duplicati.CommandLine.Commands.PurgeBrokenFiles(TextWriter outwriter, Action`1 setup, List`1 args, Dictionary`2 options, IFilter filter)

at Duplicati.CommandLine.Program.ParseCommandLine(TextWriter outwriter, Action`1 setup, Boolean& verboseErrors, String[] args)

at Duplicati.CommandLine.Program.RunCommandLine(TextWriter outwriter, TextWriter errwriter, Action`1 setup, String[] args)

Return code: 100

and was trying to see if it would say it again. I’m pretty sure I can get it if I Recreate again, but it now appears to be stuck in an otherwise irrecoverable situation because purge-broken-files says

The operation PurgeBrokenFiles has failed with error: Cannot continue because the database is marked as being under repair, but does not have broken files. => Cannot continue because the database is marked as being under repair, but does not have broken files.

ErrorID: CannotListOnDatabaseInRepair

Cannot continue because the database is marked as being under repair, but does not have broken files.

Return code: 100

list-broken-files says:

The operation ListBrokenFiles has failed with error: Cannot continue because the database is marked as being under repair, but does not have broken files. => Cannot continue because the database is marked as being under repair, but does not have broken files.

ErrorID: CannotListOnDatabaseInRepair

Cannot continue because the database is marked as being under repair, but does not have broken files.

Return code: 100

Repair button says:

The database was attempted repaired, but the repair did not complete. This database may be incomplete and the repair process is not allowed to alter remote files as that could result in data loss.

Looking in source code at RepairInProgress variable, it looks like setting it false can be done in:

if it had gotten that far, however I think it failed earlier below, so how is variable ever clearable?

EDIT 5:

Since Repair stated its reasons for apprently refusing to run after an initial failure, does this make purge-broken-files the place to clear RepairInProgress? If so, maybe after finding no issues now, should it perhaps just declare an all-looks-good? If not, how to get that, short of a new Recreate?

EDIT 6:

Test reproducibility of that after rclone sync of destination to the local test copy.

Recreate says (as expected from damage):

Recreated database has missing blocks and 1 broken filelists. Consider using “list-broken-files” and “purge-broken-files” to purge broken data from the remote store and the database.

purge-broken-files says (as it’s done before):

Uploading file duplicati-20250216T122101Z.dlist.zip.aes (948.40 KiB) ...

Deleting file duplicati-20250216T122100Z.dlist.zip.aes ...

The operation PurgeBrokenFiles has failed with error: Found 1 file(s) with missing blocklist hashes => Found 1 file(s) with missing blocklist hashes

System.IO.InvalidDataException: Found 1 file(s) with missing blocklist hashes

at Duplicati.Library.Main.Database.LocalDatabase.VerifyConsistency(Int64 blocksize, Int64 hashsize, Boolean verifyfilelists, IDbTransaction transaction)

at Duplicati.Library.Main.Operation.PurgeBrokenFilesHandler.Run(IFilter filter)

at Duplicati.Library.Main.Controller.<>c__DisplayClass43_0.<PurgeBrokenFiles>b__0(PurgeBrokenFilesResults result)

at Duplicati.Library.Main.Controller.RunAction[T](T result, String[]& paths, IFilter& filter, Action`1 method)

at Duplicati.Library.Main.Controller.RunAction[T](T result, Action`1 method)

at Duplicati.Library.Main.Controller.PurgeBrokenFiles(IFilter filter)

at Duplicati.CommandLine.Commands.PurgeBrokenFiles(TextWriter outwriter, Action`1 setup, List`1 args, Dictionary`2 options, IFilter filter)

at Duplicati.CommandLine.Program.ParseCommandLine(TextWriter outwriter, Action`1 setup, Boolean& verboseErrors, String[] args)

at Duplicati.CommandLine.Program.RunCommandLine(TextWriter outwriter, TextWriter errwriter, Action`1 setup, String[] args)

Return code: 100

purge-broken-files says (as it’s done before):

The operation PurgeBrokenFiles has failed with error: Cannot continue because the database is marked as being under repair, but does not have broken files. => Cannot continue because the database is marked as being under repair, but does not have broken files.

ErrorID: CannotListOnDatabaseInRepair

Cannot continue because the database is marked as being under repair, but does not have broken files.

Return code: 100

Now look for some result that can be improved.

From a guess about why purge-broken-files was complaining, I changed blocksize to 100 KB.

This is what the destination actually uses, and some parts of Duplicati notice go by blocksize.

Another Recreate, and purge-broken-files says:

Uploading file duplicati-20250216T122101Z.dlist.zip.aes (948.40 KiB) ...

Deleting file duplicati-20250216T122100Z.dlist.zip.aes ...

Return code: 0

If I look in the job DB Configuration table, it looks like it cleared entry behind RepairInProgress.

Repair now also is willing to run (and makes a successful job log). So some progress on those.

Suspect code is below. Is it thinking the option value is the real blocksize? Is assumption safe?

I already knew that I had only one file big enough to have two blocklists, and had laboriously attempted to hand-build the SQL behind the error. But initially I used 3200 blockhash / block, suceeding every time. I changed it to 32000 and got a failure from the one big blockset I had.

Is that line set up to specifically not log SQL to profiling log? It would’ve been easier if it had.

My manul attempt with original value, prettified:

SELECT COUNT(*)

FROM (

SELECT *

FROM (

SELECT "N"."BlocksetID"

,(("N"."BlockCount" + 3200 - 1) / 3200) AS "BlocklistHashCountExpected"

,CASE

WHEN "G"."BlocklistHashCount" IS NULL

THEN 0

ELSE "G"."BlocklistHashCount"

END AS "BlocklistHashCountActual"

FROM (

SELECT "BlocksetID"

,COUNT(*) AS "BlockCount"

FROM "BlocksetEntry"

GROUP BY "BlocksetID"

) "N"

LEFT OUTER JOIN (

SELECT "BlocksetID"

,COUNT(*) AS "BlocklistHashCount"

FROM "BlocklistHash"

GROUP BY "BlocksetID"

) "G" ON "N"."BlocksetID" = "G"."BlocksetID"

WHERE "N"."BlockCount" > 1

)

WHERE "BlocklistHashCountExpected" != "BlocklistHashCountActual"

)

EDIT 7:

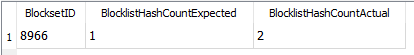

If I change the 3200 to 32000, I get one row back. If I change COUNT(*) to * I get my big file:

I have to say that the error message Duplicati put out was no help at all to fast understanding. Possibly it’s impossible to explain something like this though, so big chase like this is needed?