I’ve read up on this error and tried several things.

Here’s my “version” of this issue:

One or more errors occurred. (Could not find file "/home/albus/hdd1/tmp/dup-f5d31838-1031-4d68-86b7-b0054102d2a1" (Could not find file "/home/albus/hdd1/tmp/dup-f5d31838-1031-4d68-86b7-b0054102d2a1") (One or more errors occurred. (Cannot access a disposed object. Object name: 'MobileAuthenticatedStream'.)))

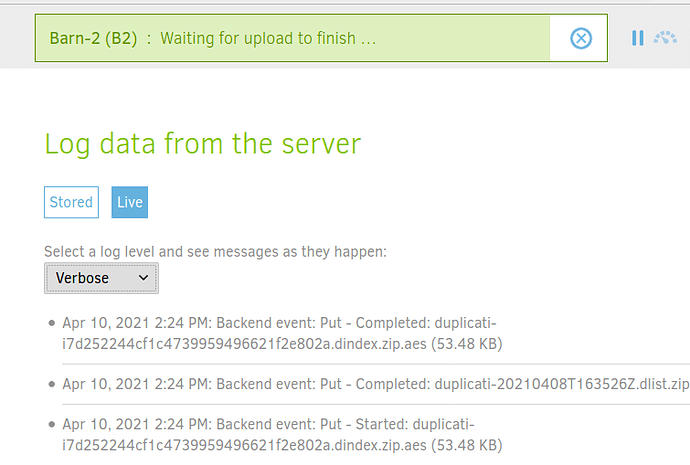

I back up regularly to B2, and the backup has completed successfully several times. I’ve uploaded large files (some videos are 10GB or so) without an issue. I’m using a Raspberry Pi 4 with a 500GB external drive mounted on it. I usually restart it once a week, apply patches, etc. The errors started after the last restart.

Here’s what I’ve tried so far, based on what I found in this forum and on GitHub:

- Moved the /tmp/ folder from its default location (on the Pi’s root filesystem, which is too small) to the external hard drive, where there is room. Still ran into the same issue.

- Checked the health of the external drive (ext4, encrypted) and didn’t find any bad sectors.

- Tested the connection to B2. It is successful.

- Created a new backup as well. The error occurs after about 20GB of the roughly 130GB backup has been uploaded (the name of the missing file is different each time).

I don’t see any issues with the drive, including permissions (Duplicati is able to write many “chunks” into the drive’s /tmp folder; the backup’s chunks are 350MB).

My guess is that some file is just too big to handle, but how can that be when I have about 300GB of free space on the external drive?
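
For anyone who wants to double-check the same two things (free space and write access on the temp folder), here is a minimal Python sketch. It is not part of Duplicati; the function name `check_temp_dir` is made up for illustration, the path is taken from the error message above, and the 350MB default simply mirrors the chunk size mentioned earlier.

```python
import shutil
import tempfile


def check_temp_dir(path, chunk_bytes=350 * 1024 * 1024):
    """Report free space and writability for a temp directory.

    Hypothetical helper for illustration; chunk_bytes defaults to the
    350MB chunk size mentioned in the post.
    """
    usage = shutil.disk_usage(path)

    # Try to create and remove a small file in the directory.
    # Permission or read-only filesystem problems surface as OSError.
    try:
        with tempfile.NamedTemporaryFile(dir=path, prefix="probe-") as probe:
            probe.write(b"x")
            probe.flush()
        writable = True
    except OSError:
        writable = False

    return {
        "free_bytes": usage.free,
        # How many whole chunks of the given size would fit in the free space.
        "fits_chunks": usage.free // chunk_bytes,
        "writable": writable,
    }


if __name__ == "__main__":
    # Path from the error message; adjust to your own temp location.
    print(check_temp_dir("/home/albus/hdd1/tmp"))
```

This only confirms that the folder is writable and has room at the moment it runs. It cannot show whether something else removes the temp files while an upload is in progress.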

Clueless at this point… help?

Thanks!