I think the issue is that an early deletion adds actual new cost, while keeping the data may add no extra cost.

I will detail that more below, and maybe I’ll hear if I understood that correctly after seeing the references.

Backblaze B2 might also be lower cost because there’s no minimum. Are you well below the 1 TB point used in that example? The example seems to confirm my theory that small backups are hit worse by this, because the minimum 1 TB active storage charge makes some active usage “no extra charge”.

TL;DR: Setting backup retention to match Wasabi’s 90-day retention can help keep the storage charged as “active”.

If for some reason you want to stay on Wasabi but seek to pay $5.99 per month for a below-1-TB backup, you can encourage backup data to not be declared wasted space by not deleting versions before 90 days.
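To make the arithmetic concrete, here is a rough sketch of the billing model I am theorizing about. The $5.99 price and the 1 TB minimum come from this thread. The way the two charges combine (minimum applied to active storage, early-deleted storage billed on top) is my assumption and not something I have confirmed against a Wasabi invoice, and `monthly_charge` is just a made-up helper name.

```python
PRICE_PER_TB_MONTH = 5.99   # price quoted in this thread
MIN_ACTIVE_TB = 1.0         # minimum active storage charge discussed above


def monthly_charge(active_tb: float, early_deleted_tb: float) -> float:
    """Estimate one month's bill under the ASSUMED model.

    Assumption: active storage is billed at no less than 1 TB, and data
    deleted before 90 days keeps being billed on top of that.
    """
    billed_active = max(active_tb, MIN_ACTIVE_TB)
    return round((billed_active + early_deleted_tb) * PRICE_PER_TB_MONTH, 2)


# Keeping 0.4 TB as active data versus deleting 0.1 TB of it early.
keep_everything = monthly_charge(active_tb=0.4, early_deleted_tb=0.0)
delete_early = monthly_charge(active_tb=0.3, early_deleted_tb=0.1)
print(keep_everything, delete_early)
```

If that model is right, the data you keep below 1 TB rides along under the minimum, while the same data deleted early shows up as an added charge.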

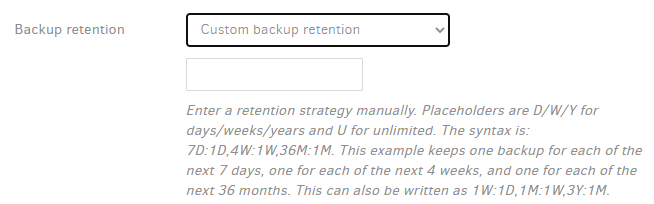

1W:1D,4W:1W,12M:1M is the “Smart backup retention” setting. What do you use now on the Options screen?

You could adapt the above to 90D:U,12M:1M to try to hang onto more backups. This will increase the Wasabi size (hopefully not past 1 TB, or the costs go up that way instead) and may make the database bigger/slower.
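Here is a small sketch for comparing the two retention strings above. It is not Duplicati code. It only handles the D/W/M/Y units with approximate day counts (a simplification of mine), treats `U` as "unlimited", and the helper names are made up. The check itself is just my reading of how the rules work: no version is thinned before N days if the shortest timeframe keeps everything and lasts at least N days.

```python
import re

# Approximate day counts; a simplification for illustration only.
UNIT_DAYS = {"D": 1, "W": 7, "M": 30, "Y": 365}


def to_days(span):
    """Convert '90D' or '12M' to days; 'U' (unlimited) becomes None."""
    if span == "U":
        return None
    match = re.fullmatch(r"(\d+)([DWMY])", span)
    if not match:
        raise ValueError(f"unsupported time span: {span!r}")
    return int(match.group(1)) * UNIT_DAYS[match.group(2)]


def parse_retention(policy):
    """Split 'timeframe:interval,...' into (timeframe_days, interval_days) pairs."""
    rules = []
    for part in policy.split(","):
        timeframe, interval = part.strip().split(":")
        rules.append((to_days(timeframe), to_days(interval)))
    return rules


def keeps_all_versions_for(policy, days):
    """True if the shortest timeframe keeps every version and spans at least `days`."""
    rules = parse_retention(policy)
    # Sort so an unlimited timeframe (None) counts as the longest.
    timeframe, interval = min(
        rules, key=lambda r: float("inf") if r[0] is None else r[0]
    )
    return interval is None and (timeframe is None or timeframe >= days)


print(keeps_all_versions_for("1W:1D,4W:1W,12M:1M", 90))
print(keeps_all_versions_for("90D:U,12M:1M", 90))
```

The point of the comparison is that the smart retention setting starts thinning versions within the first week, well inside the 90-day window, while the adapted setting does not thin anything until 90 days have passed.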

By volume, most of the files are probably dblock files which contain the changes found in the source files. Backup will typically upload some number of full dblock files, then a partially filled dblock, then a dlist file.

Deleting a version deletes its dlist and turns some of its dblock file contents into wasted space. Compact looks at that, and it triggers on either too much wasted space by percentage or too many small dblocks. The small dblocks will take more work to subdue, but they’re small, so any new ones that get deleted won’t hurt much.

If you really want to study hard on this, look at The COMPACT command, no-auto-compact (to run compacts manually), and auto-compact-interval. Setting the interval to 90 days won’t be sufficient, though, because compact may still process newer data whenever the 90 day interval lets it run. Adding 90 day retention will try to minimize the data deletions done.