People with Wasabi can correct me, but it sounds like it's not exactly a fee. It's a 90-day minimum storage charge that applies whether or not the file is deleted sooner. Early deletion apparently bills the remaining days at the time of the delete, because the amount is known then, instead of spreading it over the following months. The total is the same either way, so any deletion within 90 days costs the same total. The question is whether early deletes are wasteful.
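To make that arithmetic concrete, here is a minimal Python sketch of a minimum-duration charge. The $5.99 per TB-month price (taken from the fee mentioned later), the 30-day month and the daily pro-rating are my assumptions, not Wasabi's published billing formula. It shows that the days billed while the file exists plus the remainder billed at deletion always add up to the same 90-day total.

```python
def daily_rate(price_per_tb_month: float = 5.99, days_per_month: float = 30.0) -> float:
    """Assumed per-TB daily rate: the monthly price pro-rated over a 30-day month."""
    return price_per_tb_month / days_per_month


def billed_while_stored(size_tb: float, days_stored: int) -> float:
    """Charge accrued on regular invoices while the file still exists."""
    return size_tb * daily_rate() * days_stored


def remainder_at_deletion(size_tb: float, days_stored: int, minimum_days: int = 90) -> float:
    """Charge for the unused part of the minimum, billed once when the file is deleted."""
    remaining_days = max(minimum_days - days_stored, 0)
    return size_tb * daily_rate() * remaining_days


def total_charge(size_tb: float, days_stored: int, minimum_days: int = 90) -> float:
    """Total charge over the file's life: never less than minimum_days' worth."""
    return billed_while_stored(size_tb, days_stored) + remainder_at_deletion(
        size_tb, days_stored, minimum_days
    )


if __name__ == "__main__":
    # Compare the lifetime cost of 1 TB deleted after various numbers of days.
    for days in (10, 45, 89, 90, 120):
        print(days, round(total_charge(1.0, days), 2))
```

Deleting after 10, 45 or 89 days gives the same total as keeping the file for the full 90 days; only keeping it longer than 90 days costs more.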

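I suppose one might say early deletion wastes potential restores: you pay for the full 90 days either way, but give up the ability to restore from that data. In a simplistic backup system where each backup version is a full set of files, there would be no point in deleting a set early, because the amount due is the same.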

Duplicati doesn't work like that. It uploads only the changes since the previous version, and over time any of that uploaded data can become obsolete.

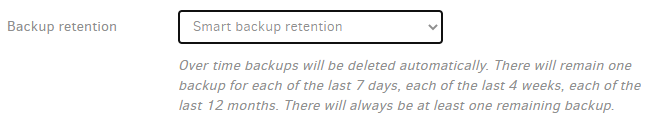

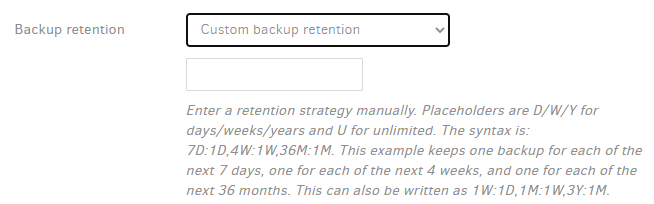

Compacting files at the backend runs when obsolete data (wasted space) accumulates, and it has some tuning options. Compacting is not time-based, but Backup retention on Options screen 5 can be, if you choose that.
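As a rough illustration of a space-based (rather than time-based) trigger, here is a simplified Python model of a wasted-space threshold. Duplicati does have a `threshold` option expressed as a percentage (25 by default, as far as I know), but the rule below, which waits until total waste passes the threshold and then picks the volumes whose own waste passes it, is my simplification and not Duplicati's actual code. `Volume`, `waste_pct` and `volumes_to_compact` are hypothetical names.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Volume:
    name: str
    size_mb: float    # size of the volume file at the backend
    wasted_mb: float  # data in it that no remaining backup version references


def waste_pct(wasted: float, total: float) -> float:
    """Wasted space as a percentage; an empty total counts as 0 % waste."""
    return 100.0 * wasted / total if total > 0 else 0.0


def volumes_to_compact(volumes: List[Volume], threshold_pct: float = 25.0) -> List[Volume]:
    """Hypothetical rule: do nothing until total waste passes the threshold,
    then select the volumes whose own waste also passes it."""
    total = sum(v.size_mb for v in volumes)
    wasted = sum(v.wasted_mb for v in volumes)
    if waste_pct(wasted, total) <= threshold_pct:
        return []
    return [v for v in volumes if waste_pct(v.wasted_mb, v.size_mb) > threshold_pct]


if __name__ == "__main__":
    vols = [Volume("a", 50, 5), Volume("b", 50, 30), Volume("c", 50, 20)]
    print([v.name for v in volumes_to_compact(vols)])
```

In this model, raising the threshold makes compaction run less often (so fewer early deletions), at the price of storing more obsolete data between runs.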

Those two, plus the backup frequency you've already decided on, are the controls you have for tuning this.

Your goal should probably be to minimize total spend, but that is complicated and may be counter-intuitive. Possibly the no-reason-to-delete-versions-early rule holds for Duplicati just as it would for a simpler backup system.

You also don't want compacting to be hyperactive. It causes a lot of download and upload, which you aren't charged for, but as files get compacted the old ones get deleted, which may trigger early-deletion charges.
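To estimate what a single compaction run might add, the sketch below sums the remaining-minimum charge billed when a compact deletes volumes. It assumes the same $5.99 per TB-month price, a 30-day month, decimal units (1 TB = 1000 GB) and a 90-day minimum; `early_delete_charge` is a hypothetical helper, and the volume sizes and ages in the example are made up.

```python
from typing import Iterable, Tuple

PRICE_PER_TB_MONTH = 5.99  # assumed price, from the fee mentioned in this thread
DAYS_PER_MONTH = 30.0      # assumed billing month length
GB_PER_TB = 1000.0         # assumed decimal units
MINIMUM_DAYS = 90          # minimum storage duration


def early_delete_charge(deleted_volumes: Iterable[Tuple[float, int]]) -> float:
    """Sum the remaining-minimum charge for (size_gb, age_days) volumes deleted now."""
    rate_per_gb_day = PRICE_PER_TB_MONTH / GB_PER_TB / DAYS_PER_MONTH
    total = 0.0
    for size_gb, age_days in deleted_volumes:
        # Volumes already past the minimum contribute nothing.
        remaining_days = max(MINIMUM_DAYS - age_days, 0)
        total += size_gb * rate_per_gb_day * remaining_days
    return total


if __name__ == "__main__":
    # Made-up compact run: twenty 50 MB volumes aged 30 days, ten aged 120 days.
    young = [(0.05, 30)] * 20
    old = [(0.05, 120)] * 10
    print(f"${early_delete_charge(young + old):.4f}")
```

Only the young volumes add to the charge, which is one argument for letting compaction wait until the volumes it would delete are past 90 days.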

If you never compact, then the total space use will grow forever, which will also wind up getting expensive.
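A toy Python model shows the difference. The assumptions are mine, not Duplicati's behaviour: the source stays the same size, each weekly backup uploads a fixed amount of changed data and makes the same amount of older data obsolete right away (as if retention dropped old versions immediately), and one compaction reclaims all waste once it passes the threshold. `simulate_storage` is a hypothetical name.

```python
from typing import List, Optional


def simulate_storage(weeks: int, live_gb: float, changed_gb_per_week: float,
                     threshold_pct: Optional[float] = None) -> List[float]:
    """Return the stored size (GB) at the end of each week.

    threshold_pct=None means never compact, so obsolete data is never removed.
    """
    stored = live_gb
    wasted = 0.0
    history = []
    for _ in range(weeks):
        # A new upload adds data; the same amount of older data becomes obsolete.
        stored += changed_gb_per_week
        wasted += changed_gb_per_week
        if threshold_pct is not None and 100.0 * wasted / stored > threshold_pct:
            # Simplification: one compaction reclaims all wasted space.
            stored -= wasted
            wasted = 0.0
        history.append(stored)
    return history


if __name__ == "__main__":
    never = simulate_storage(52, live_gb=100, changed_gb_per_week=2)
    compacted = simulate_storage(52, live_gb=100, changed_gb_per_week=2, threshold_pct=25)
    print("never compact: end of year", never[-1], "GB")
    print("threshold 25%: peak", max(compacted), "GB")
```

Without compaction the stored size climbs every week; with a threshold it saws up and down within a bounded range.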

Setting no-auto-compact and running compaction manually with the Compact now button might cost less than automatic compaction.

Because you are likely below the 1 TB minimum of Timed Active Storage, that kind of storage is basically free for you, so you might benefit by using more of it, unlike someone who is past 1 TB and therefore billed for additional use.

I suppose that in the below-1-TB case, the early-deletion charge does look more like an extra cost on top of the $5.99 fee for 1 TB of active storage.
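Those last two points hinge on how the 1 TB minimum treats storage that is deleted but still inside its 90-day window, which I can't confirm. This sketch computes one month's bill under both readings so they can be compared against an actual invoice; the $5.99 price, the 1 TB minimum and the name `monthly_bill` are assumptions for illustration, not Wasabi's documented formula.

```python
PRICE_PER_TB_MONTH = 5.99  # assumed, from the fee mentioned above
MINIMUM_TB = 1.0           # assumed minimum billable storage per month


def monthly_bill(active_tb: float, deleted_tb: float,
                 deleted_counts_toward_minimum: bool) -> float:
    """Monthly charge under one of two hypothetical readings of the 1 TB minimum."""
    if deleted_counts_toward_minimum:
        # Reading 1: deleted-but-still-billed storage fills the unused part of the minimum.
        return PRICE_PER_TB_MONTH * max(active_tb + deleted_tb, MINIMUM_TB)
    # Reading 2: the minimum covers active storage only; deleted storage is billed on top.
    return PRICE_PER_TB_MONTH * (max(active_tb, MINIMUM_TB) + deleted_tb)


if __name__ == "__main__":
    # 0.3 TB active plus 0.1 TB deleted early, under each reading.
    for counts in (True, False):
        print(counts, round(monthly_bill(0.3, 0.1, counts), 3))
```

Under the first reading the early deletions cost nothing extra below 1 TB; under the second they add to the flat $5.99. Above 1 TB both readings give the same bill.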