Hello! I’m running Duplicati 2.0.6.3 on Ubuntu Server 22.04.1 (64-bit - Intel).

I tried to create a backup job to upload data to Cloudflare R2 Storage (R2 is a relatively new Cloudflare product - not to be confused with Backblaze B2).

Cloudflare R2 Storage is compatible with the S3 API.

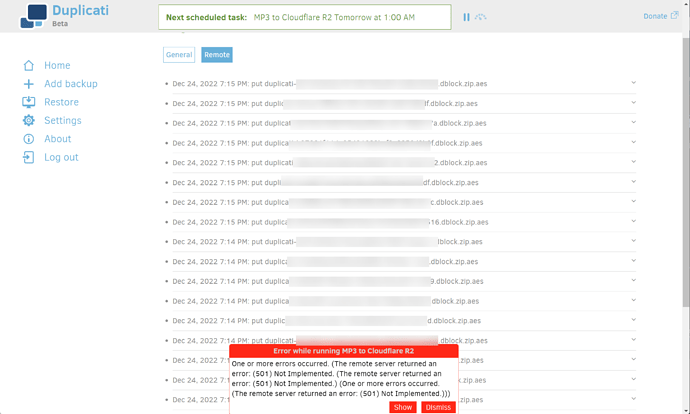

Everything seemed to work nicely until Duplicati attempted to process the backup job. I received the following error:

One or more errors occurred. (The remote server returned an error: (501) Not Implemented. (The remote server returned an error: (501) Not Implemented.) (One or more errors occurred. (The remote server returned an error: (501) Not Implemented.)))

I configured a new backup and set the destination settings as follows:

Storage Type: S3 Compatible

Use SSL: True

Server: Custom Server URL - <REDACTED>.r2.cloudflarestorage.com (I also tried adding https:// before the FQDN)

Bucket Name: <blank>

Bucket Create Region: default

Storage Class: default

Folder Path: default

AWS Access ID: Access Key ID from R2

AWS Access Key: Secret Access Key from R2

Client Library to Use: Amazon AWS SDK
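
Since I tried the Server value both with and without the https:// prefix, here is a minimal sketch of how the two forms reduce to the same hostname. `normalize_server` is a hypothetical helper of my own, not part of Duplicati, and `account-id` is a placeholder for the redacted account prefix. I don't know how Duplicati itself parses this field, so this only illustrates the two inputs I used.

```python
from urllib.parse import urlparse


def normalize_server(value: str) -> str:
    """Return the bare hostname from a server field, with or without a scheme.

    Hypothetical helper for illustration only; not Duplicati's own parsing.
    """
    value = value.strip()
    # urlparse only recognizes the host when a "//" prefix is present,
    # so add one for values that were entered without a scheme.
    parsed = urlparse(value if "://" in value else "//" + value)
    return parsed.hostname or ""


# Placeholder values standing in for the redacted R2 endpoint.
with_scheme = normalize_server("https://account-id.r2.cloudflarestorage.com")
without_scheme = normalize_server("account-id.r2.cloudflarestorage.com")
```

Both calls yield the same bare hostname, so the two values I entered should at least point at the same endpoint.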

I selected ‘Test connection’ and received the message “Connection worked!”

I finished the backup wizard by selecting the files I wanted to back up, then set the schedule accordingly.

I chose ‘Run Now’ to start the backup job immediately. Duplicati ran for a short while and then failed with the 501 Not Implemented error quoted above.

I can collect debug logs if that would be helpful. I looked for log files in the usual places on the server’s filesystem but couldn’t find any, so a pointer to where Duplicati writes them would be appreciated.