Here is the bugreport.zip

Thanks. That might be relevant. Maybe this issue only happens on messier situations than a straight run?

We’ll probably have some evidence for or against that on future runs, which likely will have fewer hiccups.

Also thanks for that bug report. I’ll have a look (which may or may not solve, but there’s actually a theory).

I’ll post some technical notes as I explore. I’m kind of wondering if the Temporary file from second backup caused your 1 value at the end of the third then hid its Deleting State evidence due to transaction rollback.

When a database-using program ends cleanly, it does a commit to write a consistent transaction into DB.

When it doesn’t (perhaps here), it doesn’t want to write half-done changes, so next open rolls them back.

2021-10-02 08:10:09 -04 - [Profiling-Timer.Finished-Duplicati.Library.Main.Database.ExtensionMethods-ExecuteNonQuery]: ExecuteNonQuery: UPDATE “RemoteVolume” SET “State” = “Deleting” WHERE “Type” = “Files” AND “State” IN (“Uploaded”, “Verified”, “Temporary”) AND “ID” NOT IN (SELECT “VolumeID” FROM “Fileset”) took 0:00:00:06.171

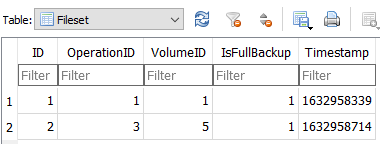

Being Temporary would pass the first part of above, and VolumeID is not in Fileset which has only the first and third backups, so that passes the second, so the number of updated rows winds up as 1 as you saw.

The Temporary state did not always allow a row UPDATE, but this was added in the “Stop now” fix here.

The general issue goes way back. I’m not saying this is the only case, but we might have a lead on one.

Possibly your log will come in handy again, if we need to figure out how things failed when /tmp filled up.

Thanks for the info!

I guess my main question right now is: should I start a raw create-from-backup? Or wait?

Tried it, and:

Start 2021-10-04 05:38:25

End 2021-10-04 06:10:12

Duration 00:31:47

That’s definitely fast enough. This is at 50M blocksize.

Before I move on to 10M I need to wipe the backup to start over. I have a lot of disk but not infinite  I’ll make a bug report of the restore-only task just to preserve the database.

I’ll make a bug report of the restore-only task just to preserve the database.

Anything else?

What’s a raw create-from-backup, and what’s a restore-only task? Does the latter mean post-recreate?

It’s a little unpredictable what will be handy later. Save performance metrics that were mentioned earlier.

I’m not sure how useful databases will be for performance exam. SQL timings are in the profiling logs…

Those get large but probably compress very well. Possibly the slowest SQL will vary with the blocksize.

If nothing further, info even at this level is useful. More info may lead to more understanding, but is more work to develop. I’m not likely to have time for a deep dive until some new volunteers pitch in to help out, even if that just means people with more experience could answer forum questions for people with less.

There’s also the SQL expert that we’re missing, and I’m not likely to become one in any reasonable time.

Still, maybe if slow queries can be found and posted, someone will post ideas on how to improve them…

The raw create from backup is a recreate of the DB with no DB to start. The restore-only task is the config I create with no intention ever to run a backup operation on it.

I have the log from the recreate saved off.

BTW in my recreate operation above, only 1 SQL operation took longer than a second. 1.007 seconds, to be exact.

Keeping the log and BRs, starting over with a backup at blocksize 10M.

Judging by progress since it started, look for a report around Friday.

10M DB Rebuild

Start 2021-10-08 08:06:26

End 2021-10-08 08:44:24

Duration 00:37:58

Longest SQL took 3.8 seconds. One of only two that exceeded 1 second.

The database was considerably bigger - about 227MB compared to 76MB for the 50M

Next try, 5M blocksize

BTW my process has settled down to the following:

- Create a backup config against my 7T data dir with

a. the blocksize being tested

b. an empty 9T drive for a target

c. profiling log to a file

d. 512M volume size - Run the backup (so far this is taking 2-3 days per run)

- Collect the backup log and a bug-report zip

- Create a new config with the 9T drive as destination, with the same volume size, blocksize, and profile logging to an empty file

- Repair the database of the new config

- Capture the time elapsed, the log file and a bug-report zip

Then I wipe the 9T drive, and start over with the next blocksize. So far I have done 50M and 10M. Remaining ones to check are 5M, 1M, 500K and 100K. I suspect my DB repair times will start to get quite a bit longer after 5M.

5M rebuild done

Start 2021-10-12 00:29:38

End 2021-10-12 01:04:18

Duration 00:34:40

Longest SQL 7.9 seconds. One of three that exceeded 1 second.

DB size bloomed to 407MB.

Next: 1M blocks

1M rebuild done. The 1M backup took a chunk of time longer - about 3d9h compared to like 2d12h for earlier. Might be because the server hosting this VM has other work now, so I am not putting a lot of stock in that.

01M DB rebuild time

Start 2021-10-15 12:49:47

End 2021-10-15 13:31:42

Duration 00:41:55

Longest SQLs – two of them – in the 35-45 second range. About 50 over 1 second.

DB size has really swelled now to 1.9GB

Logs are getting much bigger also. The rebuild log is poking at 4G.

Going to prepare a spreadsheet of all results until this point, and then extend it.

Next: 500K blocks.

Stats spreadsheet started here:

I have a feeling sh*'s about to get real in the last two columns.

Well. THIS sucks.

Got to the end of the 500K backup and duplicati just… hung.

Here’s the tail of the log:

2021-10-20 19:11:57 -04 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Started: duplicati-b946d5c292d2242789f05ddf56074d6fd.dblock.zip.aes (25.96 MB)

2021-10-20 19:11:57 -04 - [Profiling-Timer.Begin-Duplicati.Library.Main.Database.ExtensionMethods-ExecuteNonQuery]: Starting - ExecuteNonQuery: UPDATE "Fileset" SET "IsFullBackup" = True WHERE "ID" = 2;

2021-10-20 19:11:57 -04 - [Profiling-Timer.Finished-Duplicati.Library.Main.Database.ExtensionMethods-ExecuteNonQuery]: ExecuteNonQuery: UPDATE "Fileset" SET "IsFullBackup" = True WHERE "ID" = 2; took 0:00:00:00.000

2021-10-20 19:11:57 -04 - [Profiling-Timer.Finished-Duplicati.Library.Main.Operation.BackupHandler-BackupMainOperation]: BackupMainOperation took 4:17:20:56.015

2021-10-20 19:11:57 -04 - [Profiling-Duplicati.Library.Main.Operation.Backup.BackendUploader-UploadSpeed]: Uploaded 25.96 MB in 00:00:00.0355480, 730.19 MB/s

2021-10-20 19:11:57 -04 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Completed: duplicati-b946d5c292d2242789f05ddf56074d6fd.dblock.zip.aes (25.96 MB)

2021-10-20 19:11:58 -04 - [Profiling-Timer.Begin-Duplicati.Library.Main.Operation.Common.DatabaseCommon-CommitTransactionAsync]: Starting - CommitAfterUpload

2021-10-20 19:11:58 -04 - [Profiling-Timer.Begin-Duplicati.Library.Main.Operation.BackupHandler-VerifyConsistency]: Starting - VerifyConsistency

2021-10-20 19:11:58 -04 - [Profiling-Timer.Finished-Duplicati.Library.Main.Operation.Common.DatabaseCommon-CommitTransactionAsync]: CommitAfterUpload took 0:00:00:00.174

2021-10-20 19:11:58 -04 - [Profiling-Timer.Begin-Duplicati.Library.Main.Database.ExtensionMethods-ExecuteReader]: Starting - ExecuteReader: SELECT "CalcLen", "Length", "A"."BlocksetID", "File"."Path" FROM (

SELECT

"A"."ID" AS "BlocksetID",

IFNULL("B"."CalcLen", 0) AS "CalcLen",

"A"."Length"

FROM

"Blockset" A

LEFT OUTER JOIN

(

SELECT

"BlocksetEntry"."BlocksetID",

SUM("Block"."Size") AS "CalcLen"

FROM

"BlocksetEntry"

LEFT OUTER JOIN

"Block"

ON

"Block"."ID" = "BlocksetEntry"."BlockID"

GROUP BY "BlocksetEntry"."BlocksetID"

) B

ON

"A"."ID" = "B"."BlocksetID"

) A, "File" WHERE "A"."BlocksetID" = "File"."BlocksetID" AND "A"."CalcLen" != "A"."Length"

Been like that for 8 hours.

Suggestions?

Because its last thing logged was the start of an SQL evaluation, break out the performance tools to see whether it’s using resources. If it’s in SQLite, it won’t likely use multiple cores, but might peg a single one. Some SQLite overloads can also do a lot of disk I/O to temporary files with names starting with etilqs.

If this was Windows, I’d say use Sysinternals Process Monitor to look for file activity filtering for the name.

Linux has strace which can attach to a process to record various things, including file activity, but I’m not sure it can filter on path. You can make a big file and filter later. If you try this, use --follow-forks and I think --decode-fds. You could initially start with a broad capture. If too noisy, maybe apply more syscall filtering.

There are Linux tools to get strack traces, which possibly will show whether it’s in SQLite. A look without tools is possible at /proc/PID/task/TID/stack. The stacks inside mono won’t say much meaningful about Duplicati as seen by mono itself. For that, see man mono and DEBUGGING AIDS on SIGQUIT and caveats.

EDIT:

ps -p PID -L can give a thread view. Be sure to look at the child Duplicati, not the parent that started it.

Here is the output from strace -p 610 (said child process)

futex(0x5649d0402a58, FUTEX_WAIT_PRIVATE, 0, NULL) = ? ERESTARTSYS (To be restarted if SA_RESTART is set)

--- SIGRT_3 {si_signo=SIGRT_3, si_code=SI_TKILL, si_pid=610, si_uid=0} ---

rt_sigprocmask(SIG_BLOCK, [RT_4], NULL, 8) = 0

futex(0x5649ce434f00, FUTEX_WAKE_PRIVATE, 1) = 1

rt_sigsuspend(~[RTMIN RT_1 RT_4], 8) = ? ERESTARTNOHAND (To be restarted if no handler)

--- SIGRT_4 {si_signo=SIGRT_4, si_code=SI_TKILL, si_pid=610, si_uid=0} ---

rt_sigreturn({mask=~[KILL STOP RTMIN RT_1]}) = -1 EINTR (Interrupted system call)

rt_sigprocmask(SIG_UNBLOCK, [RT_4], NULL, 8) = 0

futex(0x5649ce434f00, FUTEX_WAKE_PRIVATE, 1) = 0

rt_sigreturn({mask=[]}) = 202

futex(0x5649d0402a58, FUTEX_WAIT_PRIVATE, 0, NULL) = ? ERESTARTSYS (To be restarted if SA_RESTART is set)

--- SIGRT_3 {si_signo=SIGRT_3, si_code=SI_TKILL, si_pid=610, si_uid=0} ---

rt_sigprocmask(SIG_BLOCK, [RT_4], NULL, 8) = 0

futex(0x5649ce434f00, FUTEX_WAKE_PRIVATE, 1) = 1

rt_sigsuspend(~[RTMIN RT_1 RT_4], 8) = ? ERESTARTNOHAND (To be restarted if no handler)

--- SIGRT_4 {si_signo=SIGRT_4, si_code=SI_TKILL, si_pid=610, si_uid=0} ---

rt_sigreturn({mask=~[KILL STOP RTMIN RT_1]}) = -1 EINTR (Interrupted system call)

rt_sigprocmask(SIG_UNBLOCK, [RT_4], NULL, 8) = 0

futex(0x5649ce434f00, FUTEX_WAKE_PRIVATE, 1) = 1

rt_sigreturn({mask=[]}) = 202

futex(0x5649d0402a58, FUTEX_WAIT_PRIVATE, 0, NULL

Each cycle that begins with futex(0x5649d0402a58, FUTEX_WAIT_PRIVATE, 0, NULL takes about 2 minutes. And before someone asks, no disks are full or even close. The worst one is at 87%.

To be frank, interpreting this exceeds my Linux skills.

At this point I just want to kill it. What’s the safest way?

Is the backup usable for performing the rebuild DB test?

oh and where does it stash the bug-report when it creates one? My 500K backup bug-report says it’s created but it won’t download. I need to go get it

I’m not sure if SQLite activity is visible without follow-forks.

Including (if you want to go no further) per-thread CPU use.

There’s not a safe-kill path. Kill and take your chances. Time of kill matters, and current might be good, because this might be near the end of the backup where it’s completed enough to do the following step:

2021-10-20 19:11:58 -04 - [Profiling-Timer.Begin-Duplicati.Library.Main.Operation.BackupHandler-VerifyConsistency]: Starting - VerifyConsistency

is the kind of thing it would do after the backup. It will also verify consistency at start of the next backup.

Main question I have is – did it get a dlist file on the destination? If so, it might work. If not, next backup might be able to finish things off (possibly only uploading the dlist and no further dblock and dindex files).

I’ve never gone looking for one, but some source code suggests it’s a temporary file (wherever yours are). Filename probably starts with dup- like all the other temporary files, so you might need to inspect it some.

Thanks. Does not look like I got a usable dlist so I am going to try a re-run. Ugh,

Got the bugreport zip, I think.

In many cases (this one is a bit unusual because it’s the initial run, and maybe for other reasons) uploads a dlist for the interrupted backup before it gets going. This is called a synthetic file list, and reflects work so far. The work so far is an amendment to backup files before the interrupted one – but you don’t have one…

State of re-run as of now

2021-10-22 14:24:12 -04 - [Information-Duplicati.Library.Main.Controller-StartingOperation]: The operation Backup has started

2021-10-22 14:24:12 -04 - [Profiling-Timer.Begin-Duplicati.Library.Main.Controller-RunBackup]: Starting - Running Backup

2021-10-22 14:24:12 -04 - [Profiling-Timer.Begin-Duplicati.Library.Main.Database.ExtensionMethods-ExecuteScalarInt64]: Starting - ExecuteScalarInt64: INSERT INTO "Operation" ("Description", "Timestamp") VALUES ("Backup", 1634927052); SELECT last_insert_rowid();

2021-10-22 14:24:13 -04 - [Profiling-Timer.Finished-Duplicati.Library.Main.Database.ExtensionMethods-ExecuteScalarInt64]: ExecuteScalarInt64: INSERT INTO "Operation" ("Description", "Timestamp") VALUES ("Backup", 1634927052); SELECT last_insert_rowid(); took 0:00:00:00.108

2021-10-22 14:24:13 -04 - [Profiling-Timer.Begin-Duplicati.Library.Main.Database.ExtensionMethods-ExecuteReader]: Starting - ExecuteReader: SELECT "Key", "Value" FROM "Configuration"

2021-10-22 14:24:13 -04 - [Profiling-Timer.Finished-Duplicati.Library.Main.Database.ExtensionMethods-ExecuteReader]: ExecuteReader: SELECT "Key", "Value" FROM "Configuration" took 0:00:00:00.017

2021-10-22 14:24:13 -04 - [Profiling-Timer.Begin-Duplicati.Library.Main.Database.ExtensionMethods-ExecuteReader]: Starting - ExecuteReader: SELECT "Key", "Value" FROM "Configuration"

2021-10-22 14:24:13 -04 - [Profiling-Timer.Finished-Duplicati.Library.Main.Database.ExtensionMethods-ExecuteReader]: ExecuteReader: SELECT "Key", "Value" FROM "Configuration" took 0:00:00:00.000

2021-10-22 14:24:13 -04 - [Profiling-Timer.Begin-Duplicati.Library.Main.Database.ExtensionMethods-ExecuteScalarInt64]: Starting - ExecuteScalarInt64: SELECT COUNT(*) FROM "Block" WHERE "Size" > 512000

2021-10-22 14:24:13 -04 - [Profiling-Timer.Finished-Duplicati.Library.Main.Database.ExtensionMethods-ExecuteScalarInt64]: ExecuteScalarInt64: SELECT COUNT(*) FROM "Block" WHERE "Size" > 512000 took 0:00:00:00.069

2021-10-22 14:24:22 -04 - [Profiling-Timer.Begin-Duplicati.Library.Main.Database.ExtensionMethods-ExecuteReader]: Starting - ExecuteReader: SELECT "Key", "Value" FROM "Configuration"

2021-10-22 14:24:22 -04 - [Profiling-Timer.Finished-Duplicati.Library.Main.Database.ExtensionMethods-ExecuteReader]: ExecuteReader: SELECT "Key", "Value" FROM "Configuration" took 0:00:00:00.000

2021-10-22 14:24:22 -04 - [Profiling-Timer.Begin-Duplicati.Library.Main.Database.ExtensionMethods-ExecuteReader]: Starting - ExecuteReader: SELECT "Key", "Value" FROM "Configuration"

2021-10-22 14:24:22 -04 - [Profiling-Timer.Finished-Duplicati.Library.Main.Database.ExtensionMethods-ExecuteReader]: ExecuteReader: SELECT "Key", "Value" FROM "Configuration" took 0:00:00:00.000

2021-10-22 14:24:23 -04 - [Profiling-Timer.Begin-Duplicati.Library.Main.Database.ExtensionMethods-ExecuteReader]: Starting - ExecuteReader: SELECT "CalcLen", "Length", "A"."BlocksetID", "File"."Path" FROM (

SELECT

"A"."ID" AS "BlocksetID",

IFNULL("B"."CalcLen", 0) AS "CalcLen",

"A"."Length"

FROM

"Blockset" A

LEFT OUTER JOIN

(

SELECT

"BlocksetEntry"."BlocksetID",

SUM("Block"."Size") AS "CalcLen"

FROM

"BlocksetEntry"

LEFT OUTER JOIN

"Block"

ON

"Block"."ID" = "BlocksetEntry"."BlockID"

GROUP BY "BlocksetEntry"."BlocksetID"

) B

ON

"A"."ID" = "B"."BlocksetID"

) A, "File" WHERE "A"."BlocksetID" = "File"."BlocksetID" AND "A"."CalcLen" != "A"."Length"