Amazing community, I never expected so many responses so fast. Thanks.

My backup consists of 122206 files of 50 MB each. Yes, I started a restore where I selected only one file.

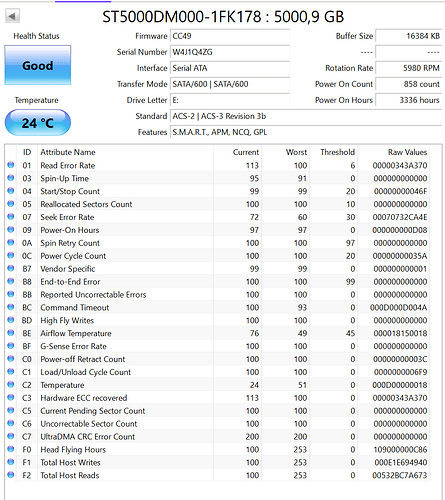

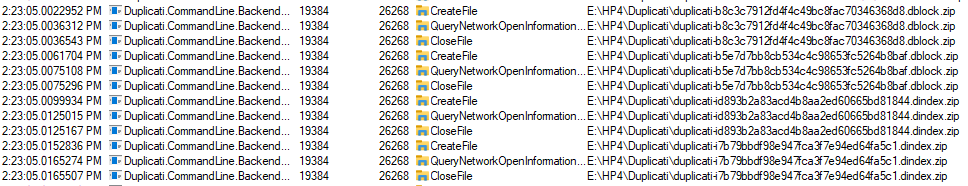

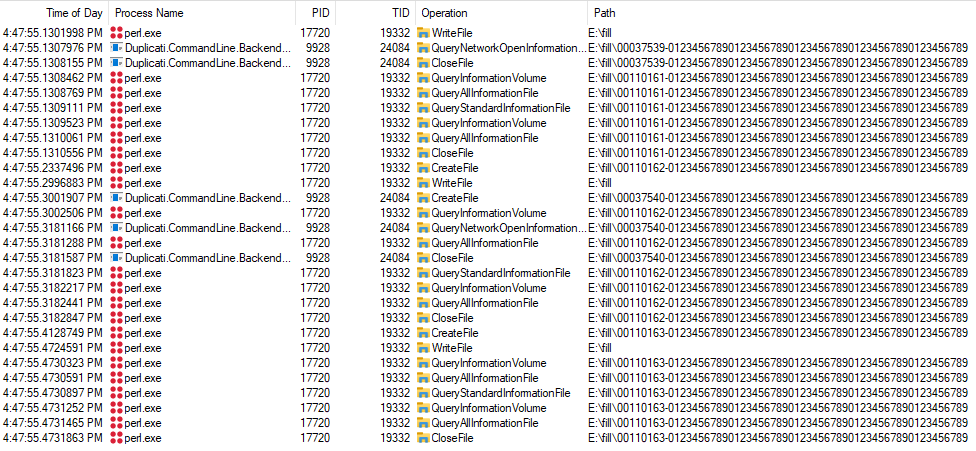

I run Duplicati 2.0.7.1_beta_2023-05-25 under Windows 10. My backup destination is a local disk drive (SATA HDD).

The restore has now finished.

Between 8:29 and 10:28 the PC was shut down.

The warning "The option compression-level is deprecated: Please use the zip-compression-level option instead" appears because I added both parameters (the deprecated one and the new one, to be sure ;-).

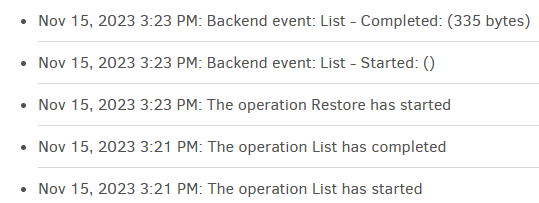

Here is my verbose log:

Nov 15, 2023 10:44 PM: The operation Restore has completed

Nov 15, 2023 10:43 PM: Testing restored file integrity: D:\tmp\xxxx.mkv

Nov 15, 2023 10:43 PM: Patching metadata with remote data: D:\tmp\xxxx.mkv

Nov 15, 2023 10:43 PM: Recording metadata from remote data: D:\tmp\xxxx.mkv

Nov 15, 2023 10:43 PM: Backend event: Get - Completed: duplicati-b2d4886f2525344e2ac86bd9da8b67278.dblock.zip (49,99 MB)

Nov 15, 2023 10:43 PM: Backend event: Get - Started: duplicati-b2d4886f2525344e2ac86bd9da8b67278.dblock.zip (49,99 MB)

Nov 15, 2023 10:43 PM: 1 remote files are required to restore

Nov 15, 2023 10:43 PM: Target file is patched with some local data: xxxx.mkv

Nov 15, 2023 10:41 PM: Target file does not exist: xxxx.mkv

Nov 15, 2023 10:39 PM: Restore list contains 68782 blocks with a total size of 6,56 GB

Nov 15, 2023 10:39 PM: Mapping restore path prefix to "Y:\xxxx\" to "D:\tmp\"

Nov 15, 2023 10:39 PM: Needs to restore 1 files (6,56 GB)

Nov 15, 2023 10:39 PM: Searching backup 0 (15.11.2023 07:21:22) ...

Nov 15, 2023 10:39 PM: Backend event: List - Completed: (119,34 KB)

Nov 15, 2023 7:47 PM: Backend event: List - Started: ()

Nov 15, 2023 7:47 PM: The operation Restore has started

Nov 15, 2023 7:46 PM: The operation List has completed

Nov 15, 2023 7:46 PM: The operation List has started

Nov 15, 2023 7:46 PM: The operation List has completed

Nov 15, 2023 7:46 PM: The operation List has started

Nov 15, 2023 7:46 PM: The operation List has completed

Nov 15, 2023 7:46 PM: The operation List has started

Nov 15, 2023 7:46 PM: The operation List has completed

Nov 15, 2023 7:46 PM: The operation List has started

Nov 15, 2023 7:46 PM: The operation List has completed

Nov 15, 2023 7:46 PM: The operation List has started

Nov 15, 2023 7:45 PM: Cannot find any MS SQL Server instance. MS SQL Server is probably not installed.

Nov 15, 2023 7:45 PM: Cannot open WMI provider \\localhost\root\virtualization\v2. Hyper-V is probably not installed.

Nov 15, 2023 7:45 PM: The operation List has completed

Nov 15, 2023 7:45 PM: The operation List has started

Nov 15, 2023 7:45 PM: The option compression-level is deprecated: Please use the zip-compression-level option instead

Nov 15, 2023 7:44 PM: The operation List has completed

Nov 15, 2023 7:44 PM: The operation List has started

Nov 15, 2023 7:44 PM: The option compression-level is deprecated: Please use the zip-compression-level option instead

Nov 15, 2023 7:44 PM: The operation List has completed

Nov 15, 2023 7:44 PM: The operation List has started

Nov 15, 2023 7:44 PM: The option compression-level is deprecated: Please use the zip-compression-level option instead

Nov 15, 2023 7:44 PM: The operation List has completed

Nov 15, 2023 7:44 PM: The operation List has started

Nov 15, 2023 7:44 PM: The option compression-level is deprecated: Please use the zip-compression-level option instead

Nov 15, 2023 7:44 PM: The operation Test has completed

Nov 15, 2023 7:44 PM: Backend event: Get - Completed: duplicati-i60d65d7f339e45f7b47aa1a4d2944905.dindex.zip (135,66 KB)

Nov 15, 2023 7:44 PM: Backend event: Get - Started: duplicati-i60d65d7f339e45f7b47aa1a4d2944905.dindex.zip (135,66 KB)

Nov 15, 2023 7:44 PM: Backend event: Get - Completed: duplicati-20231113T181243Z.dlist.zip (3,60 MB)

Nov 15, 2023 7:44 PM: Backend event: Get - Started: duplicati-20231113T181243Z.dlist.zip (3,60 MB)

Nov 15, 2023 7:44 PM: Backend event: List - Completed: (119,34 KB)

Nov 15, 2023 7:42 PM: Cannot find any MS SQL Server instance. MS SQL Server is probably not installed.

Nov 15, 2023 7:42 PM: Cannot open WMI provider \\localhost\root\virtualization\v2. Hyper-V is probably not installed.
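To see where the time went in a log like the one above, the timestamps can be compared pairwise. The following is only a rough sketch, not anything Duplicati provides: the function names `parse_log` and `largest_gaps` are made up for illustration, it assumes the log is pasted newest-first in the `Nov 15, 2023 10:44 PM: message` form shown here, and it relies on an English locale for the month abbreviation.

```python
import re
from datetime import datetime

# Matches lines such as "Nov 15, 2023 10:44 PM: The operation Restore has completed"
LINE_RE = re.compile(r"^(\w{3} \d{1,2}, \d{4} \d{1,2}:\d{2} [AP]M): (.*)$")


def parse_log(text):
    """Return (timestamp, message) pairs in chronological order."""
    entries = []
    for line in text.splitlines():
        match = LINE_RE.match(line.strip())
        if match:
            stamp = datetime.strptime(match.group(1), "%b %d, %Y %I:%M %p")
            entries.append((stamp, match.group(2)))
    # The log is listed newest-first, so reverse it; the stable sort then
    # keeps the original order of entries that share the same minute.
    entries.reverse()
    entries.sort(key=lambda entry: entry[0])
    return entries


def largest_gaps(entries, top=3):
    """Return the `top` longest pauses as (duration, message_before, message_after)."""
    gaps = [
        (later[0] - earlier[0], earlier[1], later[1])
        for earlier, later in zip(entries, entries[1:])
    ]
    gaps.sort(key=lambda gap: gap[0], reverse=True)
    return gaps[:top]


# A few lines copied from the log above.
SAMPLE = """\
Nov 15, 2023 10:43 PM: 1 remote files are required to restore
Nov 15, 2023 10:39 PM: Backend event: List - Completed: (119,34 KB)
Nov 15, 2023 7:47 PM: Backend event: List - Started: ()
Nov 15, 2023 7:47 PM: The operation Restore has started
"""

if __name__ == "__main__":
    for duration, before, after in largest_gaps(parse_log(SAMPLE)):
        print(f"{duration} between '{before}' and '{after}'")
```

On these sample lines the longest pause is the 2 hours 52 minutes between the backend `List` starting and completing. Timestamps only have minute resolution, so short steps all show up as zero or one minute.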

```