That’s one version. Another is just block insert volume. The problem is telling them apart.

The progress bar is a good indicator, and the Processing messages in the log are better.

This morning I was doing a test Recreate but Google Drive gave lots of dindex get errors.

I had 0 retries allowed (also a test), so effect was to force the dblock downloads, like this:

2020-10-14 08:02:51 -04 - [Verbose-Duplicati.Library.Main.Operation.RecreateDatabaseHandler-ProcessingIndexlistVolumes]: Processing indexlist volume 62 of 82

2020-10-14 08:02:51 -04 - [Error-Duplicati.Library.Main.Operation.RecreateDatabaseHandler-IndexFileProcessingFailed]: Failed to process index file: duplicati-i007318f96b63413eb78712cd4d8a3c41.dindex.zip.aes

System.Net.WebException: The remote server returned an error: (403) Forbidden.

2020-10-14 08:02:51 -04 - [Retry-Duplicati.Library.Main.BackendManager-RetryGet]: Operation Get with file duplicati-ib41f94fc6d524443bc36661736178cd1.dindex.zip.aes attempt 1 of 0 failed with message: The remote server returned an error: (403) Forbidden.

System.Net.WebException: The remote server returned an error: (403) Forbidden.

2020-10-14 08:02:51 -04 - [Verbose-Duplicati.Library.Main.Operation.RecreateDatabaseHandler-ProcessingIndexlistVolumes]: Processing indexlist volume 63 of 82

2020-10-14 08:02:51 -04 - [Error-Duplicati.Library.Main.Operation.RecreateDatabaseHandler-IndexFileProcessingFailed]: Failed to process index file: duplicati-ib41f94fc6d524443bc36661736178cd1.dindex.zip.aes

System.Net.WebException: The remote server returned an error: (403) Forbidden.

...

2020-10-14 08:03:28 -04 - [Verbose-Duplicati.Library.Main.Operation.RecreateDatabaseHandler-ProcessingIndexlistVolumes]: Processing indexlist volume 82 of 82

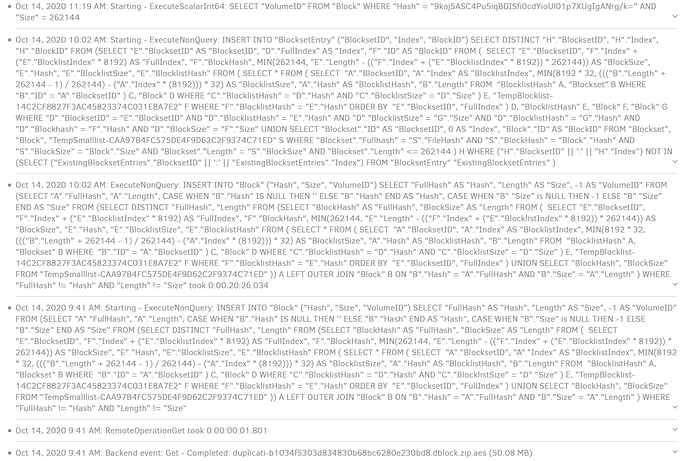

2020-10-14 08:03:40 -04 - [Profiling-Timer.Begin-Duplicati.Library.Main.Database.ExtensionMethods-ExecuteNonQuery]: Starting - ExecuteNonQuery: INSERT INTO "BlocksetEntry" ("BlocksetID", "Index", "BlockID") SELECT DISTINCT "H"."BlocksetID", "H"."Index", "H"."BlockID" FROM (SELECT "E"."BlocksetID" AS "BlocksetID", "D"."FullIndex" AS "Index", "F"."ID" AS "BlockID" FROM (

2020-10-14 08:03:41 -04 - [Profiling-Timer.Finished-Duplicati.Library.Main.Database.ExtensionMethods-ExecuteNonQuery]: ExecuteNonQuery: INSERT INTO "BlocksetEntry" ("BlocksetID", "Index", "BlockID") SELECT DISTINCT "H"."BlocksetID", "H"."Index", "H"."BlockID" FROM (SELECT "E"."BlocksetID" AS "BlocksetID", "D"."FullIndex" AS "Index", "F"."ID" AS "BlockID" FROM (

2020-10-14 08:03:43 -04 - [Verbose-Duplicati.Library.Main.Operation.RecreateDatabaseHandler-ProcessingBlocklistVolumes]: Pass 2 of 3, processing blocklist volume 1 of 26

2020-10-14 08:03:43 -04 - [Profiling-Timer.Begin-Duplicati.Library.Main.Database.ExtensionMethods-ExecuteNonQuery]: Starting - ExecuteNonQuery: INSERT INTO "BlocksetEntry" ("BlocksetID", "Index", "BlockID") SELECT DISTINCT "H"."BlocksetID", "H"."Index", "H"."BlockID" FROM (SELECT "E"."BlocksetID" AS "BlocksetID", "D"."FullIndex" AS "Index", "F"."ID" AS "BlockID" FROM (

In its full multi-line glory, an example INSERT line looks like:

2020-10-14 08:03:43 -04 - [Profiling-Timer.Finished-Duplicati.Library.Main.Database.ExtensionMethods-ExecuteNonQuery]: ExecuteNonQuery: INSERT INTO "BlocksetEntry" ("BlocksetID", "Index", "BlockID") SELECT DISTINCT "H"."BlocksetID", "H"."Index", "H"."BlockID" FROM (SELECT "E"."BlocksetID" AS "BlocksetID", "D"."FullIndex" AS "Index", "F"."ID" AS "BlockID" FROM (

SELECT

"E"."BlocksetID",

"F"."Index" + ("E"."BlocklistIndex" * 3200) AS "FullIndex",

"F"."BlockHash",

MIN(102400, "E"."Length" - (("F"."Index" + ("E"."BlocklistIndex" * 3200)) * 102400)) AS "BlockSize",

"E"."Hash",

"E"."BlocklistSize",

"E"."BlocklistHash"

FROM

(

SELECT * FROM

(

SELECT

"A"."BlocksetID",

"A"."Index" AS "BlocklistIndex",

MIN(3200 * 32, ((("B"."Length" + 102400 - 1) / 102400) - ("A"."Index" * (3200))) * 32) AS "BlocklistSize",

"A"."Hash" AS "BlocklistHash",

"B"."Length"

FROM

"BlocklistHash" A,

"Blockset" B

WHERE

"B"."ID" = "A"."BlocksetID"

) C,

"Block" D

WHERE

"C"."BlocklistHash" = "D"."Hash"

AND

"C"."BlocklistSize" = "D"."Size"

) E,

"TempBlocklist-27BF81D3E2CDA84EB525819B020D8BFE" F

WHERE

"F"."BlocklistHash" = "E"."Hash"

ORDER BY

"E"."BlocksetID",

"FullIndex"

) D, "BlocklistHash" E, "Block" F, "Block" G WHERE "D"."BlocksetID" = "E"."BlocksetID" AND "D"."BlocklistHash" = "E"."Hash" AND "D"."BlocklistSize" = "G"."Size" AND "D"."BlocklistHash" = "G"."Hash" AND "D"."Blockhash" = "F"."Hash" AND "D"."BlockSize" = "F"."Size" UNION SELECT "Blockset"."ID" AS "BlocksetID", 0 AS "Index", "Block"."ID" AS "BlockID" FROM "Blockset", "Block", "TempSmalllist-B9514307EF75024FA1FA5DFBED5F4B68" S WHERE "Blockset"."Fullhash" = "S"."FileHash" AND "S"."BlockHash" = "Block"."Hash" AND "S"."BlockSize" = "Block"."Size" AND "Blockset"."Length" = "S"."BlockSize" AND "Blockset"."Length" <= 102400 ) H WHERE ("H"."BlocksetID" || ':' || "H"."Index") NOT IN (SELECT ("ExistingBlocksetEntries"."BlocksetID" || ':' || "ExistingBlocksetEntries"."Index") FROM "BlocksetEntry" "ExistingBlocksetEntries" ) took 0:00:00:00.221

which looks similar to the reported log line, and is possibly from FindMissingBlocklistHashes, called here.

If that’s the case, then this is probably the dblock-download case, but user logs would solve such doubts.

So it looks like latest Canary may go to dblock download if Recreate exhausts retries on dindex download. Interrupting backup can leave hidden damage so Recreate will download all dblocks then fail from missing blocks #4341 is another way, and for Beta it might be new for 2.0.5.1, offsetting general high improvement.

EDIT: Not saying user report was latest canary (though it doesn’t say). Older release had their own issues.