“Uploaded with size xxxxxxx, but should be yyyyyy.”

I’m getting this type of warning every backup from one of my computers using Duplicati.

Does this imply bad data on the originating drive? Does it imply a bad copy on the backup drive? Does it imply a bad connection between the two (the backup drive is a NAS)?

Is there anything I can do to fix this? I’m just not sure where to start.

Thanks.

To make Duplicati more robust in handling potential issues like the one you describe, it does the following:

- When it creates a dblock (archive) file to be uploaded, it notes the file’s size in the local SQLite database

- Does the upload to the destination

- Fetches the file size from the destination to compare against the stored value
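As a rough illustration of the three steps above (this is not Duplicati's actual code), here is a minimal Python sketch. The function names `upload_and_verify` and `copy_upload` are made up for the example, a local folder stands in for the destination, and the warning text just mimics the message quoted at the top of the thread.

```python
import os
import shutil


def copy_upload(local_path, remote_path):
    """Stand-in for a real upload: a plain local file copy."""
    shutil.copyfile(local_path, remote_path)


def upload_and_verify(local_path, dest_dir, upload=copy_upload):
    """Record the size, upload, then compare with what the destination reports.

    Returns None when the sizes match, otherwise a warning string.
    """
    expected = os.path.getsize(local_path)  # step 1: note the size before uploading
    remote_path = os.path.join(dest_dir, os.path.basename(local_path))
    upload(local_path, remote_path)         # step 2: do the upload
    actual = os.path.getsize(remote_path)   # step 3: ask the destination for the size
    if actual != expected:
        return f"Uploaded with size {actual}, but should be {expected}"
    return None
```

The point of the sketch is that the warning only says the destination reported a different size than the one recorded locally. It does not say where in the chain the bytes went missing.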

So the issue is likely NOT bad data on the originating drive unless there’s something wrong with the SQLite database (in which case I think you’d be seeing lots of other errors).

It COULD be due to a bad copy on the backup drive or to a bad connection corrupting the file during transfer.

It’s not likely, but it is POSSIBLE that your destination reports file size differently than expected - for example, a 1k file stored on disk with a 4k block size could be reported as 1k (file size) OR 4k (disk usage).
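To see the difference between file size and disk usage for yourself, something like the following Python sketch can help. It assumes a Linux (or similar) system where `st_blocks` is counted in 512-byte units; the function name `apparent_and_allocated` is just for illustration.

```python
import os


def apparent_and_allocated(path):
    """Return (file size in bytes, bytes allocated on disk) for one file."""
    info = os.stat(path)
    apparent = info.st_size           # the length of the file's contents
    allocated = info.st_blocks * 512  # space reserved on disk (assumes 512-byte units)
    return apparent, allocated
```

On many filesystems a 1k file will show an apparent size of 1024 and an allocated size of one full block (often 4096), though the second number depends on the filesystem.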

A few things I’d start with are:

- Can you provide some text of the actual message?

- Can you tell if it’s the same, different, or ALL files every time?

- Have you tried a test restore yet? (If the restore works, then we may be dealing with the differently-reported file size possibility I mentioned).

- Can you set up a test backup to your local drive (thus removing network and NAS as potential failure points)?

Note that we don’t “need” any of the above, but the more info you provide the better we are likely to be able to help you.


So I set up a test backup to a USB connected drive and all is well–no warnings.

Normally, this computer backs up via SFTP to an unRAID server, along with three other computers in my house. All three other computers back up with no warnings or errors and all four are set up exactly the same way. It makes me think that it’s not the storage location.

Could this be caused by a bad WiFi connection? (My next test will be to somehow get Ethernet to this computer and try a cabled connection–but I’m not sure how I’m going to swing that!)

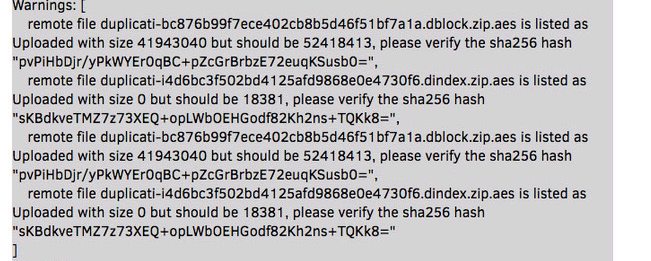

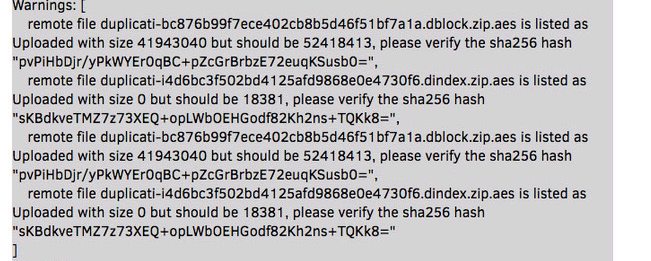

Here is a screenshot of the error text (had to do it as an image–my apologies).

I’ve been using SFTP to unRAID from multiple computers myself and haven’t run into this issue.

The “uploaded with size 0” lines make me wonder if you ran into a disk space issue. For example:

- the first file uploaded as much as it could before running out of disk space

- the second file couldn’t upload anything (no space)

- some space became available

- the third file uploaded as much as it could before running out of disk space

- the fourth file couldn’t upload anything (no space)

Is this computer connecting to a different share than the others? I’m wondering if in the unRAID share setup you might have specific “Included disk(s)” or “Excluded disk(s)” that are full.

I’ll double-check and report back.

Nope. Lots of room (2.6 TB).

On my unRAID box, I’ve enabled user SSH access and each user has created a separate directory (owned by eachusername:users) inside a master Backups directory (owned by nobody:users). This works great for all the others. I can’t imagine it has anything to do with this individual one, specifically. Also, if I run Docker Safe New Perms, I know that those will go back to nobody:users and still work fine (to put ownership to bed).

Any other thoughts?

What size do the files have when you list them manually?
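If there are a lot of files to look through, a small Python sketch like this one can pick out the empty ones. It is meant to be run on the server itself against the backup folder; the function name `zero_length_files` is made up for the example.

```python
import os


def zero_length_files(directory):
    """Return the sorted names of regular files in `directory` that are 0 bytes long."""
    return sorted(
        entry.name
        for entry in os.scandir(directory)
        if entry.is_file() and entry.stat().st_size == 0
    )
```

Comparing that list against the file names in the warnings should show whether the destination really holds empty files or is only reporting the size wrongly.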

The size difference for the dblock file is roughly 10MB.

I am thinking that there is some delay between when the files are written and when their actual size is reported?

I ssh’d into the box and took a look. All those files are actually 0 length.

Two of my computers are now doing this. The other two haven’t reported any warnings or errors. They’re all set up the same way and back up to the same unRAID box.

Not sure what to do next.

When you ssh’d into the box did you use the same credentials as Duplicati was using?

If so, are you able to create a file of more than 0 size when ssh’d in? If not, are you able to ssh with the Duplicati credentials and try creating a file then (or does that login not have shell access)? If the Duplicati-used credentials don’t have shell access, are you able to test creating a non-0 sized file with an SFTP tool such as FileZilla?

Mostly what I’m trying to check for with all this is potential oddities in per-account disk usage limits and/or permissions (does one account have create but not update for some reason)?

If we can confirm the ability to create non-0 sized files with the same credentials but outside of Duplicati then we’ve removed destination issues as a potential source of the problem (in other words, we’ve likely isolated it to a Duplicati issue).
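If you would rather script that check than do it by hand, here is a minimal Python sketch of a write-then-stat probe. It assumes an already-connected client object with `open`, `stat`, and `remove` methods, such as the `SFTPClient` you get from the third-party paramiko library; the function name, host, user, and path are all hypothetical.

```python
def probe_nonzero_write(sftp, remote_path, payload=b"x" * 4096):
    """Write `payload` to `remote_path`, then ask the server what size it stored.

    Returns (sizes_match, reported_size). The probe file is removed afterwards.
    """
    with sftp.open(remote_path, "wb") as handle:
        handle.write(payload)
    reported = sftp.stat(remote_path).st_size  # size as the server reports it
    sftp.remove(remote_path)                   # clean up the probe file
    return reported == len(payload), reported


# Possible usage with paramiko (hypothetical host, user, and folder):
#   import paramiko
#   client = paramiko.SSHClient()
#   client.load_system_host_keys()
#   client.connect("nas.example", username="backupuser")
#   sftp = client.open_sftp()
#   print(probe_nonzero_write(sftp, "Backups/backupuser/probe.bin"))
```

A result of `(False, 0)` with the Duplicati credentials would point at the destination or the account rather than at Duplicati.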

Another test method could be to create a new small test backup job using the same credentials (but a different destination folder) as the failing one and see if it works correctly. This could help isolate out potential issues with the configuration of the specific failing backup job (perhaps the configuration of that particular job got corrupted, which might explain why others still work as expected).

Sure. I see what you’re getting at.

So yes, I can ssh in with the same account credentials as Duplicati and create/edit files (used Vim to test) in the Duplicati directory (I only tried with one user).

I’ll try a small job and see what happens.

Question: When you use Duplicati over SFTP to unRAID, do you do so as root or as individual users?

It’s not a good idea to allow the root user SSH access. You should only allow individual users, and preferably require that they provide their public keys.

Are you using a cache drive in unRAID? Could the issue be related to the mover script?
