I’m on 2.0.5.1_beta_2020-01-18 on Windows 10, backing up to an S3-compatible bucket, and I’m still experiencing big issues.

Restarting the machine and setting both upload and download caps during the warm-up pause period does nothing. Upload is set to 50KB/s (<0.5Mbps), but the network is still showing Duplicati using an average of 1.5Mbps over the last hour that I’ve been testing it.

Then, when I try to stop it to get my limited bandwidth back, “Stop Now” doesn’t work. It displays “Stopping after the current file” instead (and no, I didn’t hit the wrong button), even after multiple attempts to use “Stop Now”. After attempting this, things get worse: the progress bar on the current backup job stops progressing at all, even though the overview progress bar shows a rate of a little over 100KB/s and continues to fluctuate. Sometimes the main progress rate disappears, but after an F5 refresh it appears again. Nothing works to stop it; the only way I’ve been able to stop it is to kill the process in Task Manager.

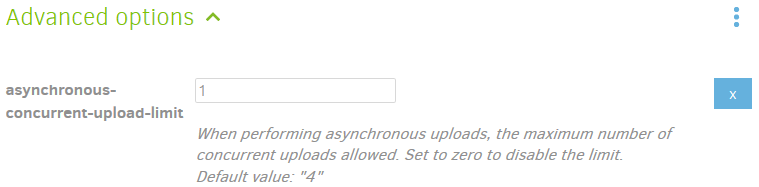

I’ve seen mentions above that multiple threads may each be limited individually without respecting the overall limit as a group, but I can’t find any setting that lets me set a thread limit of 1.
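As a rough sanity check on that per-thread theory, here is a bit of arithmetic on the numbers above. It assumes decimal units (1 KB = 1000 bytes) and that each upload thread would be capped independently at the configured rate. The function and variable names are just made up for illustration, and none of this describes how Duplicati actually throttles.

```python
def kb_per_s_to_mbps(kb_per_s: float) -> float:
    """Convert kilobytes per second to megabits per second (1 KB = 1000 bytes)."""
    return kb_per_s * 8 / 1000


CAP_KB_PER_S = 50      # upload cap configured in the UI
OBSERVED_MBPS = 1.5    # average rate seen over the test hour

# The cap expressed in the same unit as the observed rate.
cap_mbps = kb_per_s_to_mbps(CAP_KB_PER_S)

# If every thread were limited on its own (assumption), this is how many
# threads running at the full cap would add up to the observed average.
implied_threads = OBSERVED_MBPS / cap_mbps

print(f"cap = {cap_mbps} Mbps, observed = {OBSERVED_MBPS} Mbps")
print(f"implied concurrent capped streams = {implied_threads:.2f}")
```

If the theory holds, the observed average works out to a little under four streams each running at the cap, which would fit a small pool of uploaders being throttled separately. It doesn't prove anything, but it is at least consistent with what I'm seeing.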

Would be happy to provide any other info you need to diagnose, but would also appreciate any suggestions for a temporary solution. One of my backup tasks has been failing since the beginning of April and is quite out of date now.

Thanks,

Kevin.

EDIT: I see a number of Canary versions but no mention of throttling in the release notes. Even so, I’ll give duplicati-2.0.5.107_canary_2020-05-26-x64 a try and see how it goes.

EDIT 2: No joy. The same issues persist, including “Stop Now” not working and having to kill the process to stop whatever is going on. These hard stops keep corrupting the databases, so I am continually forced to rebuild them.