Is this exact sequence required? Is this the GUI control at the top of the page? Duplicati restarts don't reset that setting.

What happens if you just leave it set, then backup? For me, setting upload throttling there works fine for OneDrive and Google Drive. I don’t have S3 to try, but I can’t think of any reason it would work differently.

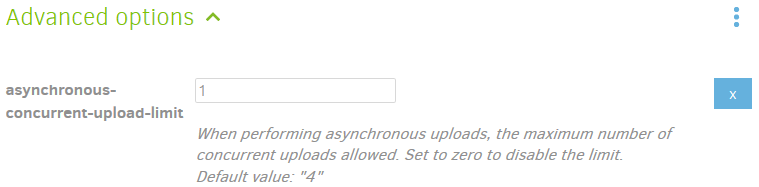

Here are my results at 10 KByte/second. One difference is that Google Drive has an --asynchronous-concurrent-upload-limit of 1 instead of the default 4. I'm not familiar with the code details, but I think that when the parallel upload code was added, it couldn't run each upload at the stated speed, because the total would then exceed the specified limit.
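To make that concern concrete: if each of N parallel uploads ran at the full throttle value, the total would be N times the limit. A minimal sketch of the arithmetic, assuming the limit is split evenly across uploads (an illustrative assumption, not necessarily how Duplicati divides it):

```python
# Hypothetical illustration, not Duplicati's code: compares running every
# upload at the full limit with splitting one overall limit across uploads.

def aggregate_rate(per_upload_kbps: float, concurrent_uploads: int) -> float:
    """Total outbound rate if every upload runs at per_upload_kbps."""
    return per_upload_kbps * concurrent_uploads

def shared_per_upload_rate(limit_kbps: float, concurrent_uploads: int) -> float:
    """Per-upload rate if one overall limit is split evenly across uploads."""
    return limit_kbps / concurrent_uploads

limit = 10.0  # KB/s, the throttle used in the test above
for n in (1, 4):  # 1 as on Google Drive here, 4 is the default
    print(f"{n} upload(s): each at limit -> {aggregate_rate(limit, n):.1f} KB/s total; "
          f"shared limit -> {shared_per_upload_rate(limit, n):.1f} KB/s each")
```

With 4 concurrent uploads, running each at 10 KB/s would mean 40 KB/s in total, while an even split would leave each upload at 2.5 KB/s.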

Possibly the math isn't right yet; however, you can certainly throttle even lower to see if you can get any…

OneDrive sample dblock upload:

2020-06-03 07:16:03 -04 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Started: duplicati-b308c3beeed464101a7b6eaba42aa8340.dblock.zip.aes (27.08 MB)

2020-06-03 08:03:27 -04 - [Profiling-Duplicati.Library.Main.Operation.Backup.BackendUploader-UploadSpeed]: Uploaded 27.08 MB in 00:47:23.4198389, 9.75 KB/s

2020-06-03 08:03:27 -04 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Completed: duplicati-b308c3beeed464101a7b6eaba42aa8340.dblock.zip.aes (27.08 MB)

Google Drive sample dblock upload:

2020-06-03 09:33:40 -04 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Started: duplicati-bb55d347b41d1491482c43ac7c5c8c958.dblock.zip.aes (36.82 MB)

2020-06-03 10:35:08 -04 - [Profiling-Duplicati.Library.Main.Operation.Backup.BackendUploader-UploadSpeed]: Uploaded 36.82 MB in 01:01:27.9430744, 10.22 KB/s

2020-06-03 10:35:10 -04 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Completed: duplicati-bb55d347b41d1491482c43ac7c5c8c958.dblock.zip.aes (36.82 MB)
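The UploadSpeed figures are just size divided by elapsed time, so they can be checked by hand. A small sketch that parses the two Profiling lines above and recomputes the rate, assuming Duplicati's MB and KB are 1024-based; under that assumption the recomputed values agree with the logged ones to two decimals (the logged sizes are themselves rounded):

```python
import re

# Matches Profiling UploadSpeed lines such as
# "Uploaded 27.08 MB in 00:47:23.4198389, 9.75 KB/s"
UPLOAD_SPEED = re.compile(
    r"Uploaded (?P<size>[\d.]+) MB in (?P<h>\d+):(?P<m>\d{2}):(?P<s>[\d.]+), (?P<rate>[\d.]+) KB/s"
)

def recompute_rate(line: str) -> tuple:
    """Return (reported KB/s, recomputed KB/s), assuming 1 MB = 1024 KB."""
    match = UPLOAD_SPEED.search(line)
    if match is None:
        raise ValueError("not an UploadSpeed line")
    # Duration is hours:minutes:seconds with fractional seconds
    seconds = int(match["h"]) * 3600 + int(match["m"]) * 60 + float(match["s"])
    kilobytes = float(match["size"]) * 1024
    return float(match["rate"]), kilobytes / seconds

samples = [
    "Uploaded 27.08 MB in 00:47:23.4198389, 9.75 KB/s",   # OneDrive
    "Uploaded 36.82 MB in 01:01:27.9430744, 10.22 KB/s",  # Google Drive
]
for sample in samples:
    reported, recomputed = recompute_rate(sample)
    print(f"reported {reported:.2f} KB/s, recomputed {recomputed:.2f} KB/s")
```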

About → Show log → Live → Retry (Retry being the log level) gives you a live log of major events such as file uploads, which should be enough to see whether uploads are moving too fast. The Profiling level does the speed math for you, but it's probably not worth it for a first try, because profiling logs are huge. A smaller alternative would be a filtered log.
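If a log has already been saved to a file, a similar filtering effect can be had after the fact. A minimal sketch, assuming a plain-text log with one entry per line like the samples above; the keyword list and the file name in the usage comment are illustrative choices, not Duplicati settings:

```python
import sys
from typing import Iterable, List

# Illustrative keywords taken from the sample log lines above
KEYWORDS = ("BackendEvent", "UploadSpeed")

def filter_log(lines: Iterable[str], keywords: tuple = KEYWORDS) -> List[str]:
    """Keep only lines mentioning one of the keywords, without trailing newlines."""
    return [line.rstrip("\n") for line in lines if any(k in line for k in keywords)]

# Usage (hypothetical file name): python filter_log.py duplicati.log
if __name__ == "__main__" and len(sys.argv) > 1:
    with open(sys.argv[1], encoding="utf-8") as log:
        for entry in filter_log(log):
            print(entry)
```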

How is network usage monitored? I watched in Task Manager. Not much else typically uploads here, and Duplicati certainly wasn't blasting. I even watched packets in Wireshark and saw the data dribbling out to the only Google (indeed the only remote) destination that Duplicati had an ESTABLISHED connection with.
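For a number rather than eyeballing a graph, the system-wide bytes-sent counter can be sampled twice. A sketch assuming the third-party psutil package (its `net_io_counters().bytes_sent` field); like Task Manager, it counts all outbound traffic, not just Duplicati's:

```python
import time

def kib_per_second(bytes_before: int, bytes_after: int, seconds: float) -> float:
    """Average rate in KB/s (1 KB = 1024 bytes) between two counter samples."""
    if seconds <= 0:
        raise ValueError("interval must be positive")
    return (bytes_after - bytes_before) / 1024 / seconds

def sample_upload_rate(interval: float = 10.0) -> float:
    """Sample the system-wide bytes-sent counter twice, interval seconds apart."""
    import psutil  # third-party package, assumed installed (pip install psutil)
    before = psutil.net_io_counters().bytes_sent
    time.sleep(interval)
    after = psutil.net_io_counters().bytes_sent
    return kib_per_second(before, after, interval)
```

Calling `sample_upload_rate()` while a throttled backup runs should report something near the throttle value if little else is uploading.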

There have definitely been some bugs where upload and download throttling were confused, but 2.0.5.107 should be fine.

--throttle-download and --throttle-upload on the job are alternate ways of throttling. They can also be set as global options in Duplicati's Settings. I don't recall which one wins if the three places I mentioned don't agree.

I think this is just a messaging bug where a message is reused for a different situation. "Stop now" is not instant, but it is closer to instant than "Stop after current file", where the current file means the source file (as seen in the GUI). There is a long pipeline between seeing the file and actually getting everything processed and uploaded.
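Why a stop can lag behind the file shown in the GUI can be seen in a toy model: if reading runs ahead of a slow (for example throttled) uploader, a backlog builds up that must still be handled after reading stops. This is a hypothetical sketch, not Duplicati's pipeline, and it ignores any cap on how much work may be queued:

```python
def blocks_left_when_stopped(stop_tick: int, upload_every: int) -> int:
    """Toy model: the reader queues one block per tick, and the slower uploader
    sends one block every `upload_every` ticks. Returns how many queued blocks
    still need uploading when reading stops after `stop_tick` ticks."""
    queued = uploaded = 0
    for tick in range(1, stop_tick + 1):
        queued += 1  # the reader keeps producing blocks from source files
        if tick % upload_every == 0:
            uploaded += 1  # the uploader falls behind the reader
    return queued - uploaded

print(blocks_left_when_stopped(100, 4))
```

Here, stopping after 100 ticks still leaves 75 blocks to upload.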

Stay as close to the defaults as possible for now. Maybe you mean --asynchronous-concurrent-upload-limit as discussed above, but my results also show throttling doing well with either 1 or 4 concurrent uploads, at least in my testing.

You can certainly test with a small, newly added backup to a local folder. Throttling should work there as well.