OK, here I am again with what I have. Long backups make for slow debugging!

The big news is that I tried your suggestion, @ts678, of running with --usn-policy=off, and it actually works! Backup times are back to 1 hour or less, which is what they were in 2.0.3.3 beta. In fact, the average I’ve seen so far may be even shorter than it was. I’ve now run the backup several times, both by hand and on schedule, and I’m confident that it works consistently. So yes, it looks like something in the USN code caused this.

(Incidentally, USN was on in 2.0.3.3 as well; I didn’t change anything in the job definition when I migrated to 2.0.5.1.)

Some other things I’ve managed to gather about things you were asking:

Yes, reads of the etilqs file always seem to be in 4096-byte chunks aligned to 4096-byte multiples. I don’t know whether this has to do with SQLite or with the cluster size, though: the cluster size of the disk holding the temporary file is 4096 as well.

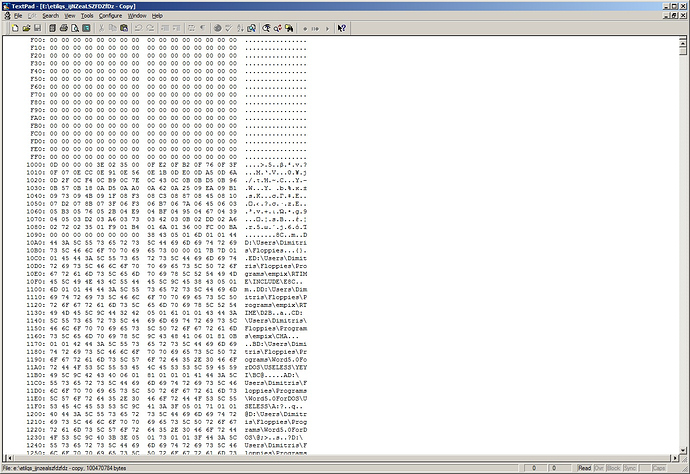

I’ve tried looking at the etilqs file by making a copy of it and opening it in a binary editor. It doesn’t look like a regular SQLite database file. The “SQLite format 3” magic header is missing. In fact, the whole first page (4096 bytes) is zero bytes. After that there’s a whole lot of what looks like file and directory names from the backup, separated by some binary bytes. (The file is certainly not 100% text.) Here’s a screenshot of the start of it; I don’t mind the small amount of information it reveals:

This goes on like this until the end of the file. Is it every file and directory included in the backup? Could be: the number of entries is certainly in the hundreds of thousands, and the backup currently holds around 450,000 files.
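For anyone who wants to repeat the header check without a binary editor, here is a minimal Python sketch. It only assumes what I described above: a regular SQLite database starts with the 16-byte string “SQLite format 3” followed by a zero byte, and the page size is taken to be 4096 bytes to match the reads I observed. The function name and the dictionary keys are my own, not anything from Duplicati or SQLite.

```python
# First 16 bytes of a regular SQLite database file.
SQLITE_MAGIC = b"SQLite format 3\x00"

# Assumption: page size matches the 4096-byte reads seen in the trace.
PAGE_SIZE = 4096


def inspect_header(path, page_size=PAGE_SIZE):
    """Report whether a file starts with the SQLite magic header
    and whether its first page consists only of zero bytes."""
    with open(path, "rb") as f:
        first_page = f.read(page_size)
    return {
        "has_sqlite_magic": first_page.startswith(SQLITE_MAGIC),
        # bytes(n) is n zero bytes; a short file never counts as all-zero.
        "first_page_all_zero": first_page == bytes(page_size),
    }
```

Run it against a copy of the temporary file rather than the live one. For the file I looked at, I would expect no magic header and an all-zero first page.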

So it looks like this list of files is being read again and again. Why?

Here’s a full log at Retry level of a slow backup:

2020-02-04 18:40:01 +02 - [Information-Duplicati.Library.Main.Controller-StartingOperation]: The operation Backup has started

2020-02-04 18:41:28 +02 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: List - Started: ()

2020-02-04 18:42:17 +02 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: List - Completed: (13.00 KB)

2020-02-05 11:00:18 +02 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Started: duplicati-b640a0199fd6b484cbb0e377922ab334e.dblock.zip.aes (9.94 MB)

2020-02-05 11:01:16 +02 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Started: duplicati-20200204T164001Z.dlist.zip.aes (38.96 MB)

2020-02-05 11:06:50 +02 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Completed: duplicati-b640a0199fd6b484cbb0e377922ab334e.dblock.zip.aes (9.94 MB)

2020-02-05 11:06:50 +02 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Started: duplicati-i87da65374429405c8fcc4511d31f96d6.dindex.zip.aes (112.92 KB)

2020-02-05 11:06:57 +02 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Completed: duplicati-i87da65374429405c8fcc4511d31f96d6.dindex.zip.aes (112.92 KB)

2020-02-05 11:18:56 +02 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Completed: duplicati-20200204T164001Z.dlist.zip.aes (38.96 MB)

2020-02-05 11:18:56 +02 - [Information-Duplicati.Library.Main.Operation.DeleteHandler:RetentionPolicy-StartCheck]: Start checking if backups can be removed

2020-02-05 11:18:56 +02 - [Information-Duplicati.Library.Main.Operation.DeleteHandler:RetentionPolicy-FramesAndIntervals]: Time frames and intervals pairs: 7.00:00:00 / 1.00:00:00, 28.00:00:00 / 7.00:00:00, 365.00:00:00 / 31.00:00:00

2020-02-05 11:18:56 +02 - [Information-Duplicati.Library.Main.Operation.DeleteHandler:RetentionPolicy-BackupList]: Backups to consider: 4/2/2020 4:36:28 μμ, 4/2/2020 1:41:36 μμ, 3/2/2020 1:13:23 μμ, 24/1/2020 12:38:47 πμ, 15/1/2020 9:00:00 μμ, 8/1/2020 9:00:00 μμ, 3/12/2019 12:00:00 μμ, 2/11/2019 8:09:07 πμ, 26/9/2019 6:00:00 μμ, 20/8/2019 6:00:00 πμ, 15/7/2019 6:00:00 πμ, 8/6/2019 4:13:22 μμ

2020-02-05 11:18:56 +02 - [Information-Duplicati.Library.Main.Operation.DeleteHandler:RetentionPolicy-BackupsToDelete]: Backups outside of all time frames and thus getting deleted:

2020-02-05 11:18:56 +02 - [Information-Duplicati.Library.Main.Operation.DeleteHandler:RetentionPolicy-AllBackupsToDelete]: All backups to delete: 4/2/2020 4:36:28 μμ

2020-02-05 11:18:56 +02 - [Information-Duplicati.Library.Main.Operation.DeleteHandler-DeleteRemoteFileset]: Deleting 1 remote fileset(s) ...

2020-02-05 11:19:42 +02 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Delete - Started: duplicati-20200204T143628Z.dlist.zip.aes (38.95 MB)

2020-02-05 11:19:45 +02 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Delete - Completed: duplicati-20200204T143628Z.dlist.zip.aes (38.95 MB)

2020-02-05 11:19:45 +02 - [Information-Duplicati.Library.Main.Operation.DeleteHandler-DeleteResults]: Deleted 1 remote fileset(s)

2020-02-05 11:20:20 +02 - [Information-Duplicati.Library.Main.Database.LocalDeleteDatabase-CompactReason]: Compacting not required

2020-02-05 11:20:20 +02 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: List - Started: ()

2020-02-05 11:21:08 +02 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: List - Completed: (13.00 KB)

2020-02-05 11:21:09 +02 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Get - Started: duplicati-20200204T164001Z.dlist.zip.aes (38.96 MB)

2020-02-05 11:23:18 +02 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Get - Completed: duplicati-20200204T164001Z.dlist.zip.aes (38.96 MB)

2020-02-05 11:23:18 +02 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Get - Started: duplicati-i53d744acc00c410e866f17c34f286945.dindex.zip.aes (39.39 KB)

2020-02-05 11:23:19 +02 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Get - Completed: duplicati-i53d744acc00c410e866f17c34f286945.dindex.zip.aes (39.39 KB)

2020-02-05 11:23:20 +02 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Get - Started: duplicati-be5f64ec2952b4f74a1b4b7df488aac4b.dblock.zip.aes (50.00 MB)

2020-02-05 11:24:43 +02 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Get - Completed: duplicati-be5f64ec2952b4f74a1b4b7df488aac4b.dblock.zip.aes (50.00 MB)

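I don’t think this gives much useful information. It all looks nice and straight… until you notice how much time there is between lines 3 and 4: the List completes at 2020-02-04 18:42:17 and the first Put doesn’t start until 2020-02-05 11:00:18, more than 16 hours later.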
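With longer logs the gap is easier to find mechanically than by eye. Here is a rough Python sketch that assumes every log line starts with a timestamp in the same “YYYY-MM-DD HH:MM:SS” layout as above. It ignores the “+02” offset, which is only safe because the offset is identical on every line here. The function names are mine.

```python
from datetime import datetime


def parse_timestamp(line):
    """Return the leading timestamp of a log line, or None if there is none."""
    try:
        return datetime.strptime(line[:19], "%Y-%m-%d %H:%M:%S")
    except ValueError:
        return None


def largest_gap(lines):
    """Return (gap, line_before, line_after) for the longest pause
    between consecutive timestamped lines, or None if fewer than two."""
    stamped = [(parse_timestamp(line), line) for line in lines]
    stamped = [(ts, line) for ts, line in stamped if ts is not None]
    best = None
    for (t0, l0), (t1, l1) in zip(stamped, stamped[1:]):
        gap = t1 - t0
        if best is None or gap > best[0]:
            best = (gap, l0, l1)
    return best


if __name__ == "__main__":
    sample = [
        "2020-02-04 18:41:28 +02 - [...]: Backend event: List - Started: ()",
        "2020-02-04 18:42:17 +02 - [...]: Backend event: List - Completed: (13.00 KB)",
        "2020-02-05 11:00:18 +02 - [...]: Backend event: Put - Started: ...",
    ]
    gap, before, after = largest_gap(sample)
    print(gap)  # 16:18:01
```

Blank lines and lines without a timestamp are skipped, so the log can be pasted in as-is.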

So there we are. I don’t follow everything else you’re talking about too well, not being a .NET programmer and not being familiar with the code. I tried the dnSpy debugger, but couldn’t make easy progress with it. Like I said, not being familiar with any of this stuff would mean a very long learning stage for me, and right now I have neither the time nor the inclination for it. Given that things seem to run fine with USN off, I consider this solved for me, albeit with a workaround rather than a clean solution. So thanks, everyone, for taking the time to look into this and for offering your ideas, suggestions and expertise. I couldn’t have progressed without you!

Still, don’t hesitate to ask if there is any additional information I may be able to gather. This is easy to replicate (just turn usn back on), so I could do some more digging, if needed.