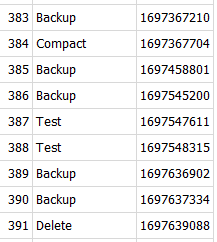

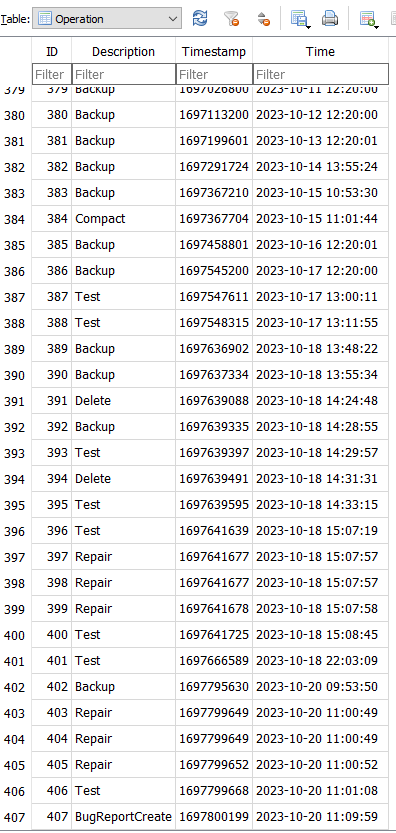

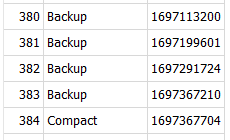

looks a little earlier than the question about Compact, then the Test. The log situation looks like this:

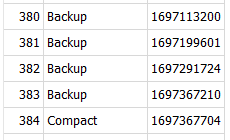

so I’m wondering (and asked about) whether something didn’t complete. Check Operation UTC time:

380 October 12, 2023 12:20:00 PM

381 October 13, 2023 12:20:01 PM

382 October 14, 2023 1:55:24 PM

383 October 15, 2023 10:53:30 AM

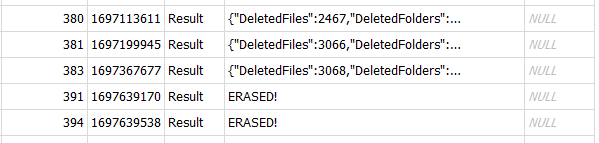

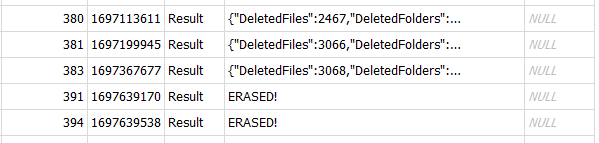

Notice that 382 made no log, which makes me wonder what happened in there but we may not know.

The 383 log looks like it’s cleaning up after 382 didn’t finish well, which increases the need for clues about the run history:

"Messages":[

"2023-10-15 11:53:30 +01 - [Information-Duplicati.Library.Main.Controller-StartingOperation]: The operation Backup has started"

"2023-10-15 11:54:13 +01 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: List - Started: ()"

"2023-10-15 11:54:13 +01 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: List - Completed: (3.91 KB)"

"2023-10-15 11:54:13 +01 - [Information-Duplicati.Library.Main.Operation.FilelistProcessor-KeepIncompleteFile]: keeping protected incomplete remote file listed as Uploading: duplicati-20231014T135524Z.dlist.zip.aes"

"2023-10-15 11:54:13 +01 - [Information-Duplicati.Library.Main.Operation.FilelistProcessor-SchedulingMissingFileForDelete]: scheduling missing file for deletion, currently listed as Uploading: duplicati-bd2722d010ec748b58425f8a98c0a07a7.dblock.zip.aes"

"2023-10-15 11:54:13 +01 - [Information-Duplicati.Library.Main.Operation.FilelistProcessor-SchedulingMissingFileForDelete]: scheduling missing file for deletion, currently listed as Uploading: duplicati-ba6332017268d4c9dadc0eea95a05a083.dblock.zip.aes"

"2023-10-15 11:54:13 +01 - [Information-Duplicati.Library.Main.Operation.FilelistProcessor-SchedulingMissingFileForDelete]: scheduling missing file for deletion, currently listed as Uploading: duplicati-b035645e1ba5b44019bbb8e4b9e3a4ada.dblock.zip.aes"

"2023-10-15 11:54:13 +01 - [Information-Duplicati.Library.Main.Operation.FilelistProcessor-RemoteUnwantedMissingFile]: removing file listed as Temporary: duplicati-b668e45677e9b4a63a34ae349df445f05.dblock.zip.aes"

"2023-10-15 11:54:13 +01 - [Information-Duplicati.Library.Main.Operation.FilelistProcessor-RemoteUnwantedMissingFile]: removing file listed as Temporary: duplicati-b86ba3f6b522943cfbca7a126e153d8c9.dblock.zip.aes"

"2023-10-15 11:54:13 +01 - [Information-Duplicati.Library.Main.Operation.FilelistProcessor-RemoteUnwantedMissingFile]: removing file listed as Deleting: duplicati-b178bc563fec146e29150c34b5881af4c.dblock.zip.aes"

"2023-10-15 11:54:13 +01 - [Information-Duplicati.Library.Main.Operation.FilelistProcessor-RemoteUnwantedMissingFile]: removing file listed as Deleting: duplicati-bad55f0e957ea46f383ea80d0f6631efa.dblock.zip.aes"

"2023-10-15 11:54:13 +01 - [Information-Duplicati.Library.Main.Operation.FilelistProcessor-RemoteUnwantedMissingFile]: removing file listed as Deleting: duplicati-b976063604c42429595ec7fc2d7e81119.dblock.zip.aes"

"2023-10-15 11:54:13 +01 - [Information-Duplicati.Library.Main.Operation.FilelistProcessor-RemoteUnwantedMissingFile]: removing file listed as Deleting: duplicati-bac69f4529b2642c29173566138f7356e.dblock.zip.aes"

"2023-10-15 11:54:13 +01 - [Information-Duplicati.Library.Main.Operation.FilelistProcessor-RemoteUnwantedMissingFile]: removing file listed as Deleting: duplicati-be71cdb6bec414d95bc097820f75d0112.dblock.zip.aes"

"2023-10-15 11:54:13 +01 - [Information-Duplicati.Library.Main.Operation.FilelistProcessor-RemoteUnwantedMissingFile]: removing file listed as Deleting: duplicati-ba2f8108c05df4b0fa3addf23ea4b72b3.dblock.zip.aes"

"2023-10-15 11:54:13 +01 - [Information-Duplicati.Library.Main.Operation.FilelistProcessor-RemoteUnwantedMissingFile]: removing file listed as Deleting: duplicati-bd82c252457964df68af57268b9e28967.dblock.zip.aes"

"2023-10-15 11:54:13 +01 - [Information-Duplicati.Library.Main.Operation.FilelistProcessor-RemoteUnwantedMissingFile]: removing file listed as Deleting: duplicati-bb1a85b7fa0a14fc4a24caf258311004c.dblock.zip.aes"

"2023-10-15 11:54:13 +01 - [Information-Duplicati.Library.Main.Operation.FilelistProcessor-RemoteUnwantedMissingFile]: removing file listed as Deleting: duplicati-b2e1e98f014da4c8ea553c83b4ed7c5ce.dblock.zip.aes"

"2023-10-15 11:54:13 +01 - [Information-Duplicati.Library.Main.Operation.FilelistProcessor-RemoteUnwantedMissingFile]: removing file listed as Deleting: duplicati-b98ce13ac37e44b0187b31a57ac41523b.dblock.zip.aes"

"2023-10-15 11:54:13 +01 - [Information-Duplicati.Library.Main.Operation.FilelistProcessor-RemoteUnwantedMissingFile]: removing file listed as Deleting: duplicati-b55568858a2d64f4aac7286036c44deda.dblock.zip.aes"

],"Warnings":[],"Errors":[]
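To see at a glance what the cleanup did, the messages can be tallied by log tag and by the state the file was "listed as". This is only a rough sketch: the regular expression is my own guess at the line layout based on the lines above, and the `messages` list holds just four of those lines as a sample.

```python
import re
from collections import Counter

# Four lines copied from the 383 log above; paste the whole Messages array for a full tally.
messages = [
    "2023-10-15 11:54:13 +01 - [Information-Duplicati.Library.Main.Operation.FilelistProcessor-KeepIncompleteFile]: keeping protected incomplete remote file listed as Uploading: duplicati-20231014T135524Z.dlist.zip.aes",
    "2023-10-15 11:54:13 +01 - [Information-Duplicati.Library.Main.Operation.FilelistProcessor-SchedulingMissingFileForDelete]: scheduling missing file for deletion, currently listed as Uploading: duplicati-bd2722d010ec748b58425f8a98c0a07a7.dblock.zip.aes",
    "2023-10-15 11:54:13 +01 - [Information-Duplicati.Library.Main.Operation.FilelistProcessor-RemoteUnwantedMissingFile]: removing file listed as Temporary: duplicati-b668e45677e9b4a63a34ae349df445f05.dblock.zip.aes",
    "2023-10-15 11:54:13 +01 - [Information-Duplicati.Library.Main.Operation.FilelistProcessor-RemoteUnwantedMissingFile]: removing file listed as Deleting: duplicati-b178bc563fec146e29150c34b5881af4c.dblock.zip.aes",
]

# Assumed layout: [Level-Source-Tag]: free text "listed as <State>: <file name>"
PATTERN = re.compile(
    r"\[\w+-[\w.]+-(?P<tag>\w+)\]: .*listed as (?P<state>\w+): (?P<name>duplicati-\S+)"
)

def tally(lines):
    """Count cleanup messages per (log tag, listed-as state) pair."""
    counts = Counter()
    for line in lines:
        match = PATTERN.search(line)
        if match:
            counts[(match["tag"], match["state"])] += 1
    return counts

for (tag, state), count in sorted(tally(messages).items()):
    print(f"{tag:32} {state:10} {count}")
```

Run over the full list above, I would expect this to report 1 kept Uploading file, 3 Uploading files scheduled for deletion, 2 Temporary removals and 11 Deleting removals.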

EDIT 1:

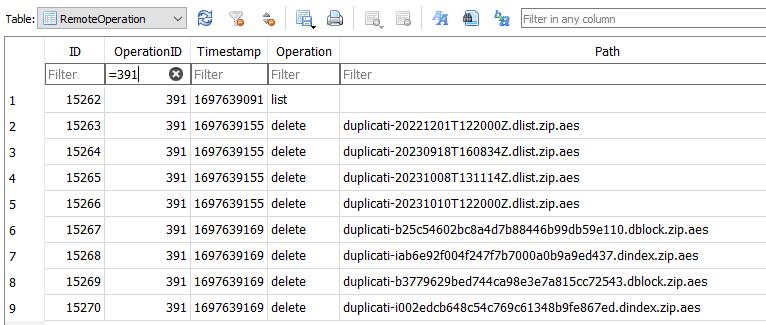

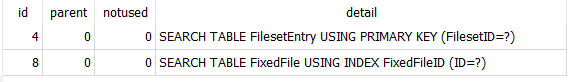

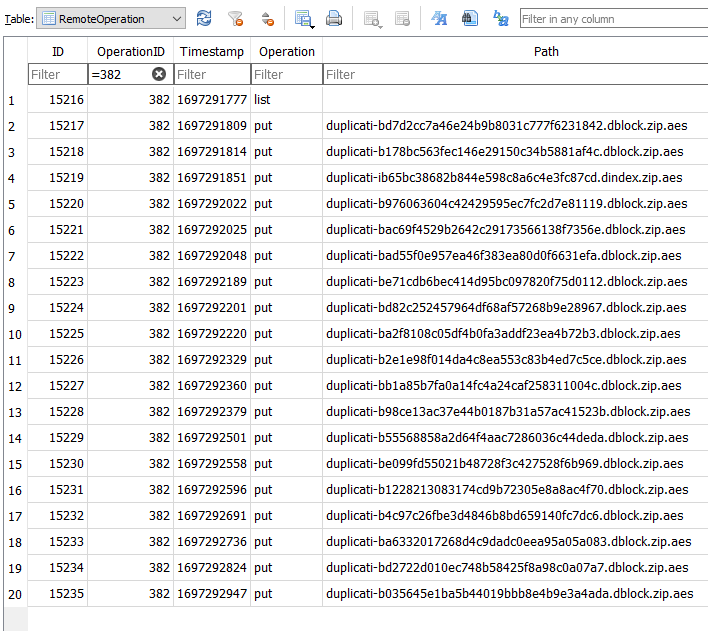

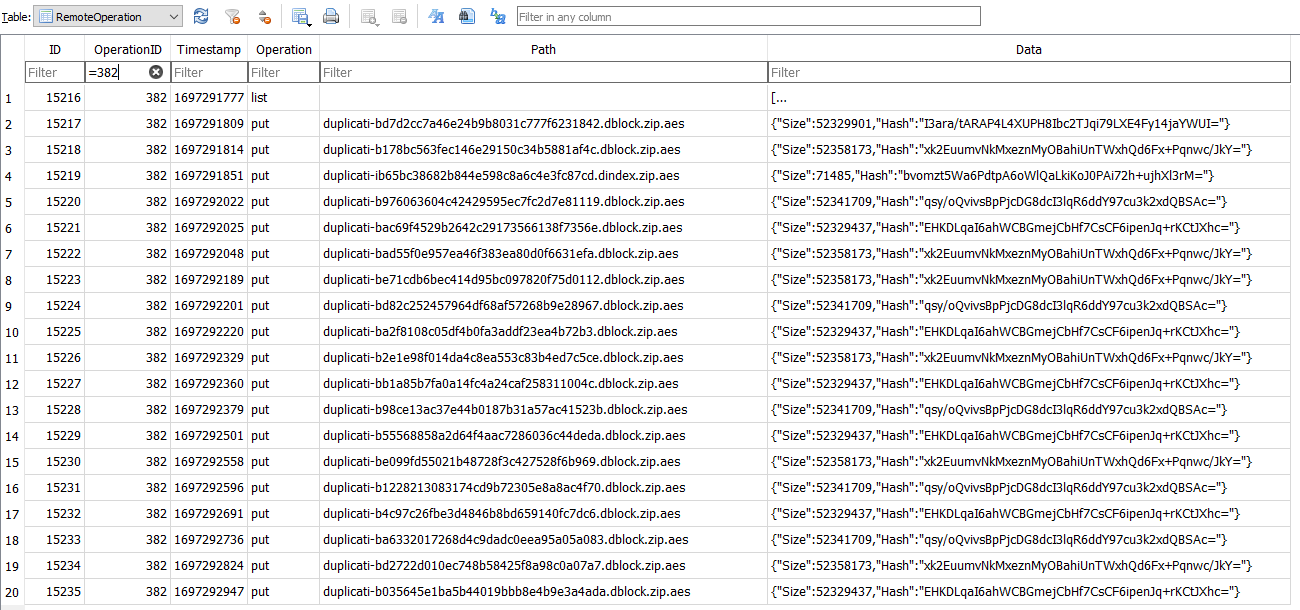

The RemoteOperation table for 382 looks odd: too few dindex files and no dlist at the end (did it error out early?).

EDIT 2:

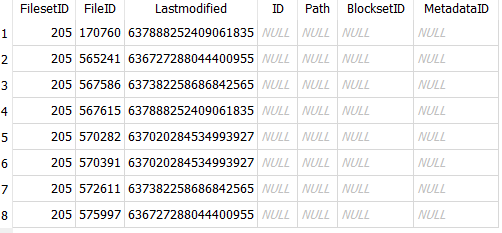

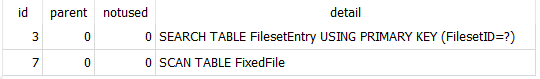

Although database records can’t always be trusted due to potential transaction rollback, check the files from ID 382 against the list at the start of 383. The Data value can be put in a spreadsheet and sorted.

{"Name":"duplicati-bd7d2cc7a46e24b9b8031c777f6231842.dblock.zip.aes"

{"Name":"duplicati-ib65bc38682b844e598c8a6c4e3fc87cd.dindex.zip.aes"

are the entries that show what’s on the Destination and dated Oct 14. What kind of storage type is the destination?
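If a spreadsheet isn't handy, the same sort-and-filter can be sketched in Python. I'm assuming here that the Data value of the list operation is a JSON array whose entries carry `Name` and `LastModification` (an ISO 8601 timestamp); check that against your own database before trusting it. The sample below uses the two file names above, but the timestamps and the third entry are placeholders I made up purely for illustration.

```python
import json
from datetime import date, datetime

# Placeholder Data value: real names from above, made-up timestamps, one made-up older file.
sample_data = """[
  {"Name": "duplicati-ib65bc38682b844e598c8a6c4e3fc87cd.dindex.zip.aes", "LastModification": "2023-10-14T13:57:31Z"},
  {"Name": "hypothetical-older-file.dblock.zip.aes", "LastModification": "2023-10-13T12:21:00Z"},
  {"Name": "duplicati-bd7d2cc7a46e24b9b8031c777f6231842.dblock.zip.aes", "LastModification": "2023-10-14T13:57:00Z"}
]"""

def modified(entry):
    """Parse the (assumed) ISO 8601 LastModification field as an aware datetime."""
    return datetime.fromisoformat(entry["LastModification"].replace("Z", "+00:00"))

def files_dated(data_json, day):
    """Return the names of entries modified on the given UTC day, oldest first."""
    entries = json.loads(data_json)
    hits = [entry for entry in entries if modified(entry).date() == day]
    return [entry["Name"] for entry in sorted(hits, key=modified)]

for name in files_dated(sample_data, date(2023, 10, 14)):
    print(name)
```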

EDIT 3:

It looks like some network-connected (probably Internet) service went down and killed the backup.

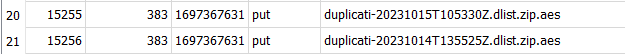

This is visible in the full-width view of the RemoteOperation table where one can see the put retries:

Retries are done using a different name, so you have to look at the Size and Hash to see the retries.

The default number-of-retries is 5, and when the last one fails, you’re done, which should cause a popup.

About → Show log → Stored should also show it. Again, I’m asking for some information on the run history.
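Since a retried put shows up under a new name, one way to spot the retries without eyeballing the table is to group the put rows by Size and Hash. A minimal sketch, assuming each RemoteOperation row can be exported as (Operation, Path, Data) and that the Data of a put is JSON with `Size` and `Hash` keys; the rows below are hypothetical, not taken from this backup.

```python
import json
from collections import defaultdict

# Hypothetical rows: (Operation, Path, Data). Names, sizes and hashes are made up.
rows = [
    ("list", "", "[]"),
    ("put", "hypothetical-first-try.dblock.zip.aes", '{"Size": 1000, "Hash": "hash-A"}'),
    ("put", "hypothetical-second-try.dblock.zip.aes", '{"Size": 1000, "Hash": "hash-A"}'),
    ("put", "hypothetical-other.dblock.zip.aes", '{"Size": 2000, "Hash": "hash-B"}'),
]

def find_retries(operations):
    """Group put operations by (Size, Hash); more than one name per group means a retry."""
    groups = defaultdict(list)
    for operation, path, data in operations:
        if operation != "put":
            continue
        info = json.loads(data)
        groups[(info["Size"], info["Hash"])].append(path)
    return {key: names for key, names in groups.items() if len(names) > 1}

for (size, file_hash), names in find_retries(rows).items():
    print(f"{len(names)} attempts for size={size} hash={file_hash}: {names}")
```

A content block whose attempt count reaches the retry limit without a later success is a good candidate for the upload that ended the backup.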

The network failure allowed two files up on Oct 14, as previously named – one dblock and its dindex.

The dindex put was at October 14, 2023 1:57:31 PM UTC, and it looks like local time is an hour later.

The last attempt at a put was October 14, 2023 2:15:47 PM UTC, so check server log a little beyond.
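For lining up these UTC times with a server log kept in local time, here is a small conversion sketch. The fixed +01 offset is taken from the log lines above; the text format is just how the times were written in this post, so adjust it if your viewer shows them differently.

```python
from datetime import datetime, timedelta, timezone

LOCAL = timezone(timedelta(hours=1))  # the "+01" seen in the job log lines

def utc_to_local(text):
    """Parse a time written like 'October 14, 2023 2:15:47 PM' (UTC) and shift it to +01."""
    utc = datetime.strptime(text, "%B %d, %Y %I:%M:%S %p").replace(tzinfo=timezone.utc)
    return utc.astimezone(LOCAL)

# Start of operation 383: should match the first line of its job log.
print(utc_to_local("October 15, 2023 10:53:30 AM").strftime("%Y-%m-%d %H:%M:%S"))
# Last put attempt of 382: where to start looking in the server log.
print(utc_to_local("October 14, 2023 2:15:47 PM").strftime("%Y-%m-%d %H:%M:%S"))
```

The first line prints 2023-10-15 11:53:30, which agrees with the "operation Backup has started" message, and the second prints 2023-10-14 15:15:47.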

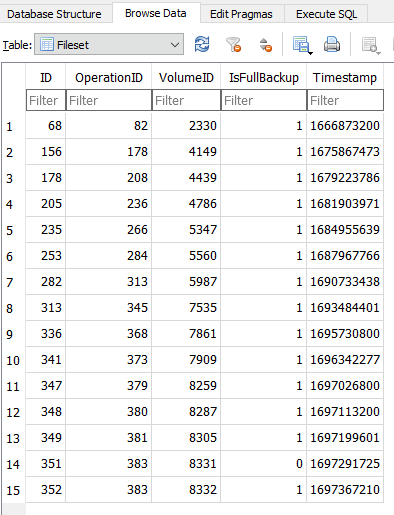

The Restore dropdown of versions should show a partial backup on Oct 14 despite likely early failure:

Backup 383, while it’s trying to clean up the mess from 382, uploads a synthetic filelist of the work so far: basically what backup 381 left for 382, plus whatever 382 managed to pick up before its early death.

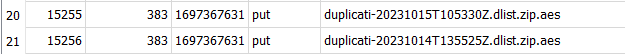

The list at the start of 383 showed no dlist file from 382. The dlist is uploaded late in a backup, and the network had already failed.

The 383 cleanup of 382 mentioned “listed as Uploading: duplicati-20231014T135524Z.dlist.zip.aes”.

Interestingly, 383 on Oct 15 uploads the dlist for 382 late at the same second that it uploads its own:

The hhmmss went from 135524 to 135525. This is typical of a replacement dlist (unlike the dblock naming scheme).
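The one-second bump is easy to check from the file names themselves. A small sketch that pulls the timestamp out of a dlist name; the replacement name is inferred from the 135524 to 135525 change described above, and the regular expression is my own reading of the name layout.

```python
import re
from datetime import datetime, timezone

DLIST = re.compile(r"duplicati-(\d{8}T\d{6}Z)\.dlist\.")

def dlist_time(name):
    """Extract the UTC timestamp embedded in a dlist file name."""
    match = DLIST.search(name)
    if match is None:
        raise ValueError(f"not a dlist name: {name}")
    return datetime.strptime(match.group(1), "%Y%m%dT%H%M%SZ").replace(tzinfo=timezone.utc)

original = dlist_time("duplicati-20231014T135524Z.dlist.zip.aes")
replacement = dlist_time("duplicati-20231014T135525Z.dlist.zip.aes")  # inferred name

print(original.isoformat())
print((replacement - original).total_seconds())
```

The original timestamp is the same 1:55:24 PM UTC as the start of operation 382, and the difference comes out as 1.0 seconds.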