I am a long-term Duplicati user and have a large (2.5 TB) backup which is sent to three targets: two remote hosts over SSH and a local external HDD. Over the years I’ve had many issues with Duplicati in one way or another, the biggest and most frequent being corrupted local databases, which easily grow to 2-3 GB in size (even with a large block size). On some occasions I repair the local DB, which does work, but as you can imagine it is slow. Because I have three targets, when I hit issues I generally prefer to reset a backup rather than repair the DB. To do this I will:

- Access the backup files and delete all of them.
- Use the Duplicati GUI to go to the “Advanced”->“Database” page and choose to “Delete” the database.
- Start the backup manually, or wait for it to run automatically, and then Duplicati takes the combination of “no files at target” and “no database” to mean that it should start the backup fresh and off it goes. Great.
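For anyone wanting to double-check the "no files at target" and "no database" preconditions from the steps above before starting the backup, here is a minimal sketch in Python. It is not part of Duplicati. It assumes the target is reachable as a local folder (for example the external HDD, or a mounted share), that remote volumes use the default `duplicati-` prefix, and that their names contain `.dlist.`, `.dblock.` or `.dindex.`. The function names and any paths passed in are hypothetical, so adjust them to your own setup.

```python
from pathlib import Path

# Assumed name fragments of Duplicati remote volumes; adjust if yours differ.
VOLUME_MARKERS = (".dlist.", ".dblock.", ".dindex.")


def leftover_backup_files(target_dir, prefix="duplicati"):
    """Return files in target_dir that look like Duplicati remote volumes."""
    target = Path(target_dir)
    if not target.is_dir():
        return []
    return sorted(
        p for p in target.iterdir()
        if p.is_file()
        and p.name.startswith(prefix + "-")
        and any(marker in p.name for marker in VOLUME_MARKERS)
    )


def ready_for_fresh_start(target_dir, db_path, prefix="duplicati"):
    """True only if no volumes remain at the target and the local DB file is gone."""
    no_volumes = not leftover_backup_files(target_dir, prefix)
    no_database = not Path(db_path).exists()
    return no_volumes and no_database
```

Calling `ready_for_fresh_start(target_folder, database_file)` with your own two paths returns `False` if either a leftover volume or the local database file is still present. Unrelated files in the target folder are ignored.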

Last week I upgraded to v2.1.0.2 from whatever the previous beta version was (I am not on canary) and started to run into issues with one of my targets. I suspect a faulty HDD is at play, which is why I’m not too worried about the error messages (invalid HMAC, don’t trust content) and am not going to explore that issue at this time. What I want to do is reset the backup, but I have found that the procedure above no longer works!

This is what I have done:

-

Delete all of the files at the target.

-

Delete the local DB.

-

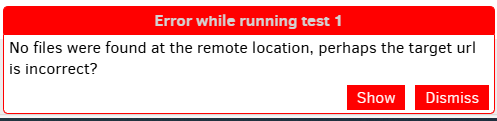

Try to run the backup. The software reports “No filelists found on the remote destination!” and for some reason still thinks it knows there are 63 versions in the backup. How can that be? The version information is supposed to be in the database, isn’t it?

To try and fix this I have attempted the following, checking the status after each step:

- Ensured that not only the database file but also all of the backup files are deleted.
- Restarted Duplicati.
- Exported the configuration, deleted the configuration (ensuring the tick boxes for deleting the files and the database are checked), and then imported the configuration fresh.

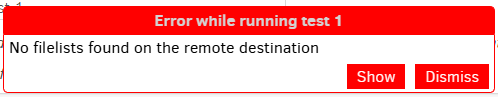

Yet despite doing all of that, the issue persists. After the export and import, the GUI no longer claims that any versions are available, but the backup still fails to run, reporting “No filelists found on the remote destination”.

Now what I don’t want to do is completely reset the software and lose my other two backups in the process.

I can post log files here, but it seems to me that this might be a situation that simply has not come up during testing, especially since few people willingly delete a backup and start over. For those reasons I doubt the log files will be useful, but let me know if you disagree.

For now I have just disabled this backup by turning off the schedule, but it’s annoying to be down a target. In all other regards, and despite the DB corruption issues, this is fantastic software and I am more than willing to persevere. Thank you to all the contributors.