Was this on the Backblaze B2 S3-compatible API? Does it seem to time out less often than with the native B2 API?

I went looking for a simple tester for B2 and wound up using the BackendTester tool like this:

```
C:\Duplicati\duplicati-2.1.0.124_canary_2025-07-11-win-x64-gui>Duplicati.CommandLine.BackendTester "b2://BUCKET/timeout?auth-username=REDACTED&auth-password=REDACTED&read-write-timeout=15" --reruns=1
7/23/2025 8:54:31 PM
[20:54:31 774] Starting run no 0
[20:54:34 153] Read Write Timeout set to 60000 ms
[20:54:34 155] Generating file 0 (27.02 MiB)
[20:54:34 637] Generating file 1 (41.98 MiB)
[20:54:35 438] Generating file 2 (15.40 MiB)
[20:54:35 686] Generating file 3 (45.93 MiB)
[20:54:36 455] Generating file 4 (7.67 MiB)
[20:54:36 587] Generating file 5 (5.95 MiB)
[20:54:36 722] Generating file 6 (7.18 MiB)
[20:54:36 819] Generating file 7 (28.29 MiB)
[20:54:37 405] Generating file 8 (33.61 MiB)
[20:54:38 048] Generating file 9 (37.19 MiB)
[20:54:38 711] Uploading wrong files ...
[20:54:38 711] Generating file 10 (1.26 KiB)
[20:54:38 727] Uploading file 0, 1.26 KiB ... done! in 591 ms (~2.14 KiB/s)
[20:54:39 320] Uploading file 0, 1.26 KiB ... done! in 581 ms (~2.17 KiB/s)
[20:54:39 902] Uploading file 9, 1.26 KiB ... done! in 341 ms (~3.70 KiB/s)
[20:54:40 244] Uploading files ...
[20:54:40 244] Uploading file 0, 27.02 MiB ... done! in 7309 ms (~3.70 MiB/s)
[20:54:47 554] Uploading file 1, 41.98 MiB ... [20:55:21 500] Failed to upload file 1, error message: System.TimeoutException: The operation has timed out.
   at Duplicati.StreamUtil.TimeoutObservingStream.ReadImplAsync(Byte[] buffer, Int32 offset, Int32 count, CancellationToken cancellationToken)
   at Duplicati.StreamUtil.ThrottleEnabledStream.ReadAsync(Byte[] buffer, Int32 offset, Int32 count, CancellationToken cancellationToken)
   at Duplicati.StreamUtil.TimeoutObservingStream.ReadImplAsync(Byte[] buffer, Int32 offset, Int32 count, CancellationToken cancellationToken)
   at System.IO.Stream.<CopyToAsync>g__Core|27_0(Stream source, Stream destination, Int32 bufferSize, CancellationToken cancellationToken)
   at System.Net.Http.StreamToStreamCopy.<CopyAsync>g__DisposeSourceAsync|1_0(Task copyTask, Stream source)
   at System.Net.Http.HttpContent.<CopyToAsync>g__WaitAsync|56_0(ValueTask copyTask)
   at System.Net.Http.HttpConnection.SendRequestContentAsync(HttpRequestMessage request, HttpContentWriteStream stream, Boolean async, CancellationToken cancellationToken)
   at System.Net.Http.HttpConnection.SendAsync(HttpRequestMessage request, Boolean async, CancellationToken cancellationToken)
   at System.Net.Http.HttpConnection.SendAsync(HttpRequestMessage request, Boolean async, CancellationToken cancellationToken)
   at System.Net.Http.HttpConnectionPool.SendWithVersionDetectionAndRetryAsync(HttpRequestMessage request, Boolean async, Boolean doRequestAuth, CancellationToken cancellationToken)
   at System.Net.Http.RedirectHandler.SendAsync(HttpRequestMessage request, Boolean async, CancellationToken cancellationToken)
   at System.Net.Http.HttpClient.<SendAsync>g__Core|83_0(HttpRequestMessage request, HttpCompletionOption completionOption, CancellationTokenSource cts, Boolean disposeCts, CancellationTokenSource pendingRequestsCts, CancellationToken originalCancellationToken)
   at Duplicati.Library.Backend.Backblaze.B2.PutAsync(String remotename, Stream stream, CancellationToken cancelToken)
   at Duplicati.Library.Utility.Utility.Await(Task task)
   at Duplicati.CommandLine.BackendTester.Program.Uploadfile(String localfilename, Int32 i, String remotefilename, IBackend backend, Boolean disableStreaming, Int64 throttle, Int32 readWriteTimeout), remote name: gHQBTkjh2eHRH9pHhQDPTjbLX after 33946 ms
[20:55:21 513] Uploading file 2, 15.40 MiB ... done! in 2258 ms (~6.82 MiB/s)
[20:55:23 772] Uploading file 3, 45.93 MiB ... done! in 13738 ms (~3.34 MiB/s)
[20:55:37 511] Uploading file 4, 7.67 MiB ... done! in 9771 ms (~803.51 KiB/s)
[20:55:47 283] Uploading file 5, 5.95 MiB ... done! in 6736 ms (~904.69 KiB/s)
[20:55:54 020] Uploading file 6, 7.18 MiB ... done! in 5763 ms (~1.25 MiB/s)
[20:55:59 784] Uploading file 7, 28.29 MiB ... [20:56:26 539] Failed to upload file 7, error message: System.TimeoutException: The operation has timed out.
   at Duplicati.StreamUtil.TimeoutObservingStream.ReadImplAsync(Byte[] buffer, Int32 offset, Int32 count, CancellationToken cancellationToken)
   at Duplicati.StreamUtil.ThrottleEnabledStream.ReadAsync(Byte[] buffer, Int32 offset, Int32 count, CancellationToken cancellationToken)
   at Duplicati.StreamUtil.TimeoutObservingStream.ReadImplAsync(Byte[] buffer, Int32 offset, Int32 count, CancellationToken cancellationToken)
   at System.IO.Stream.<CopyToAsync>g__Core|27_0(Stream source, Stream destination, Int32 bufferSize, CancellationToken cancellationToken)
   at System.Net.Http.StreamToStreamCopy.<CopyAsync>g__DisposeSourceAsync|1_0(Task copyTask, Stream source)
   at System.Net.Http.HttpContent.<CopyToAsync>g__WaitAsync|56_0(ValueTask copyTask)
   at System.Net.Http.HttpConnection.SendRequestContentAsync(HttpRequestMessage request, HttpContentWriteStream stream, Boolean async, CancellationToken cancellationToken)
   at System.Net.Http.HttpConnection.SendAsync(HttpRequestMessage request, Boolean async, CancellationToken cancellationToken)
   at System.Net.Http.HttpConnection.SendAsync(HttpRequestMessage request, Boolean async, CancellationToken cancellationToken)
   at System.Net.Http.HttpConnectionPool.SendWithVersionDetectionAndRetryAsync(HttpRequestMessage request, Boolean async, Boolean doRequestAuth, CancellationToken cancellationToken)
   at System.Net.Http.RedirectHandler.SendAsync(HttpRequestMessage request, Boolean async, CancellationToken cancellationToken)
   at System.Net.Http.HttpClient.<SendAsync>g__Core|83_0(HttpRequestMessage request, HttpCompletionOption completionOption, CancellationTokenSource cts, Boolean disposeCts, CancellationTokenSource pendingRequestsCts, CancellationToken originalCancellationToken)
   at Duplicati.Library.Backend.Backblaze.B2.PutAsync(String remotename, Stream stream, CancellationToken cancelToken)
   at Duplicati.Library.Utility.Utility.Await(Task task)
   at Duplicati.CommandLine.BackendTester.Program.Uploadfile(String localfilename, Int32 i, String remotefilename, IBackend backend, Boolean disableStreaming, Int64 throttle, Int32 readWriteTimeout), remote name: HYF81nf after 26754 ms
[20:56:26 541] Uploading file 8, 33.61 MiB ... done! in 6487 ms (~5.18 MiB/s)
[20:56:33 029] Uploading file 9, 37.19 MiB ... done! in 15008 ms (~2.48 MiB/s)
[20:56:48 037] Verifying file list ...
[20:56:48 118] *** File 2 with name 8HwksHAbUmi3PVTMtMLKpi1wpOLqp6WsAirPZRGpca4M7qbWQBVboUr4cyWTxhxp8 was uploaded but not found afterwards
[20:56:48 118] *** File 8 with name MyTFPA4 was uploaded but not found afterwards
[20:56:48 119] Downloading files
[20:56:48 120] Downloading file 0 ... done in 2231 ms (~12.11 MiB/s)
[20:56:50 352] Checking hash ... done
[20:56:50 577] Downloading file 1 ... done in 2589 ms (~16.21 MiB/s)
[20:56:53 168] Checking hash ... done
[20:56:53 647] Downloading file 2 ... [20:56:54 058] failed
*** Error: Duplicati.Library.Interface.FileMissingException: The requested file does not exist
   at Duplicati.Library.Backend.Backblaze.B2.GetAsync(String remotename, Stream stream, CancellationToken cancellationToken)
   at Duplicati.Library.Utility.Utility.Await(Task task)
   at Duplicati.CommandLine.BackendTester.Program.Run(List`1 args, Dictionary`2 options, Boolean first) after 409 ms
[20:56:54 059] Checking hash ... [20:56:54 059] failed
*** Downloaded file was corrupt
[20:56:54 060] Downloading file 3 ... done in 3001 ms (~15.30 MiB/s)
[20:56:57 062] Checking hash ... done
[20:56:57 539] Downloading file 4 ... done in 519 ms (~14.76 MiB/s)
[20:56:58 061] Checking hash ... done
[20:56:58 131] Downloading file 5 ... done in 451 ms (~13.18 MiB/s)
[20:56:58 585] Checking hash ... done
[20:56:58 649] Downloading file 6 ... done in 518 ms (~13.85 MiB/s)
[20:56:59 169] Checking hash ... done
[20:56:59 235] Downloading file 7 ... done in 1715 ms (~16.49 MiB/s)
[20:57:00 952] Checking hash ... done
[20:57:01 256] Downloading file 8 ... [20:57:01 658] failed
*** Error: Duplicati.Library.Interface.FileMissingException: The requested file does not exist
   at Duplicati.Library.Backend.Backblaze.B2.GetAsync(String remotename, Stream stream, CancellationToken cancellationToken)
   at Duplicati.Library.Utility.Utility.Await(Task task)
   at Duplicati.CommandLine.BackendTester.Program.Run(List`1 args, Dictionary`2 options, Boolean first) after 401 ms
[20:57:01 659] Checking hash ... [20:57:01 659] failed
*** Downloaded file was corrupt
[20:57:01 660] Downloading file 9 ... done in 2176 ms (~17.09 MiB/s)
[20:57:03 836] Checking hash ... done
[20:57:04 135] Deleting files...
[20:57:04 135] Deleting file 0
[20:57:04 219] Deleting file 1
[20:57:04 302] Deleting file 2
[20:57:04 385] *** Failed to delete file 8HwksHAbUmi3PVTMtMLKpi1wpOLqp6WsAirPZRGpca4M7qbWQBVboUr4cyWTxhxp8, message: Duplicati.Library.Interface.FileMissingException: The requested file does not exist
   at Duplicati.Library.Backend.Backblaze.B2.DeleteAsync(String remotename, CancellationToken cancellationToken)
   at Duplicati.Library.Utility.Utility.Await(Task task)
   at Duplicati.CommandLine.BackendTester.Program.Run(List`1 args, Dictionary`2 options, Boolean first)
[20:57:04 386] Deleting file 3
[20:57:04 550] Deleting file 4
[20:57:04 644] Deleting file 5
[20:57:04 728] Deleting file 6
[20:57:04 812] Deleting file 7
[20:57:04 898] Deleting file 8
[20:57:04 979] *** Failed to delete file MyTFPA4, message: Duplicati.Library.Interface.FileMissingException: The requested file does not exist
   at Duplicati.Library.Backend.Backblaze.B2.DeleteAsync(String remotename, CancellationToken cancellationToken)
   at Duplicati.Library.Utility.Utility.Await(Task task)
   at Duplicati.CommandLine.BackendTester.Program.Run(List`1 args, Dictionary`2 options, Boolean first)
[20:57:04 979] Deleting file 9
[20:57:05 232] Checking retrieval of non-existent file...
[20:57:05 423] Caught expected FileMissingException
[20:57:05 435] Checking DNS names used by this backend...
api.backblazeb2.com
api001.backblazeb2.com
f001.backblazeb2.com

C:\Duplicati\duplicati-2.1.0.124_canary_2025-07-11-win-x64-gui>
```
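If you want to pull the per-file upload results out of a log like this (which files were reported done, how fast, and which were reported failed), here is a rough Python sketch. The regular expressions are my own guess at the line format shown above, not an interface BackendTester provides, and `LOG` is just a few lines copied from this run:

```python
import re

# A few lines copied from the main "Uploading files ..." phase of the run above.
LOG = """\
[20:54:40 244] Uploading file 0, 27.02 MiB ... done! in 7309 ms (~3.70 MiB/s)
[20:54:47 554] Uploading file 1, 41.98 MiB ... [20:55:21 500] Failed to upload file 1, error message: System.TimeoutException: The operation has timed out.
[20:55:21 513] Uploading file 2, 15.40 MiB ... done! in 2258 ms (~6.82 MiB/s)
[20:55:37 511] Uploading file 4, 7.67 MiB ... done! in 9771 ms (~803.51 KiB/s)
[20:55:59 784] Uploading file 7, 28.29 MiB ... [20:56:26 539] Failed to upload file 7, error message: System.TimeoutException: The operation has timed out.
"""

# Conversion factors to MiB.
TO_MIB = {"KiB": 1 / 1024, "MiB": 1.0}

# Hypothetical patterns matching the line shapes seen in this particular log.
DONE = re.compile(r"Uploading file (\d+), ([\d.]+) (KiB|MiB) \.\.\. done! in (\d+) ms")
FAILED = re.compile(r"Failed to upload file (\d+),")


def summarize(log):
    """Return ({file: MiB/s} for uploads reported done, set of files reported failed)."""
    rates = {}
    failed = set()
    for line in log.splitlines():
        m = DONE.search(line)
        if m:
            num, size, unit, ms = m.groups()
            # Rate = size / elapsed seconds, which reproduces the "~x MiB/s" figures.
            rates[int(num)] = float(size) * TO_MIB[unit] / (int(ms) / 1000)
        m = FAILED.search(line)
        if m:
            failed.add(int(m.group(1)))
    return rates, failed


rates, failed = summarize(LOG)
for num, rate in sorted(rates.items()):
    print(f"file {num}: {rate:.2f} MiB/s")
print("reported failed:", sorted(failed))
```

The recomputed rates match the log's own figures to rounding (the log works from unrounded sizes), and file 4 stands out at well under 1 MiB/s.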

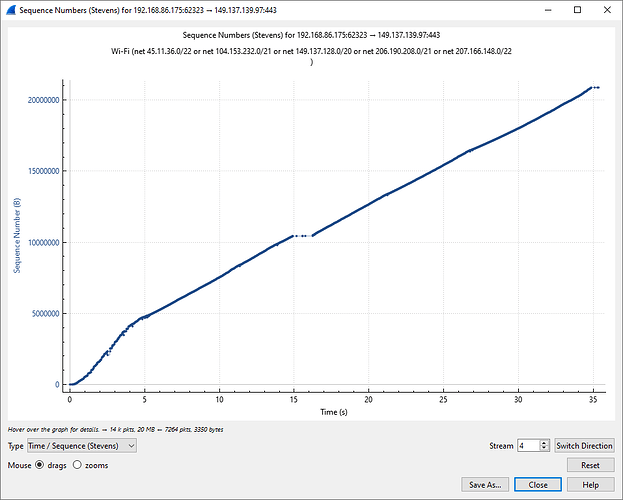

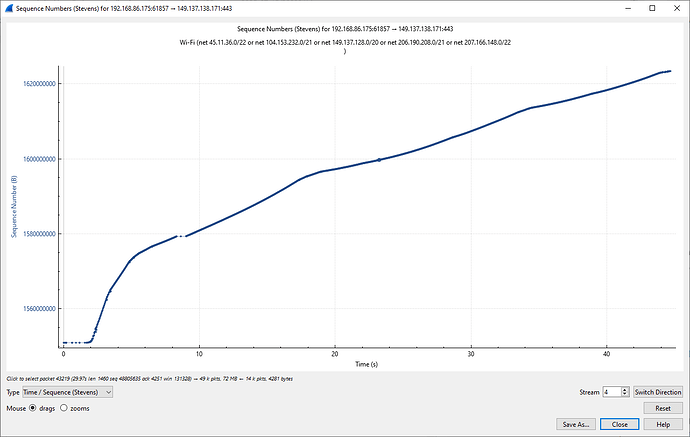

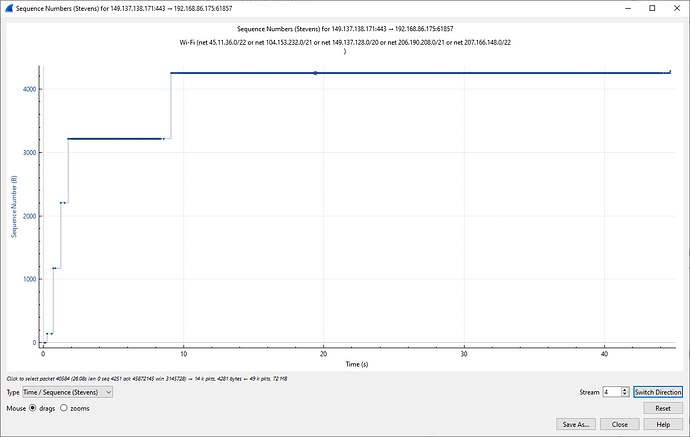

Summary of the Wireshark TCP stream that appeared to carry the several uploads up to the timeout:

| Frame | Time (mm:ss) | Event |
|---|---|---|
| 87 | 54:38 | Start of stream |
| 95 | 54:38 | Start of upload |
| 25006 | 54:47 | Start of the upload that timed out at 55:21 |
| 72818 | 55:23 | B2 sends FIN and the Duplicati PC sends RST |

It's a 45-second TCP run, and its upload activity looks like this:

Roughly 9 seconds into the graph is the start of the last upload, the one Duplicati timed out.
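A quick check of that timeline arithmetic in Python, mixing the whole-second times from the capture summary with the millisecond times from the BackendTester log. The capture summary only gives mm:ss, so the hour (20) is borrowed from the log, and this assumes Wireshark and Duplicati were reading the same PC clock:

```python
from datetime import datetime


def ts(text):
    """Parse a time of day such as '20:54:47.554' (log) or '20:54:38' (capture)."""
    fmt = "%H:%M:%S.%f" if "." in text else "%H:%M:%S"
    return datetime.strptime(text, fmt)


def secs(start, end):
    """Seconds elapsed from start to end (both time-of-day strings)."""
    return (ts(end) - ts(start)).total_seconds()


stream_start = "20:54:38"          # capture: frame 87, start of stream
timed_out_start = "20:54:47.554"   # log: "Uploading file 1, 41.98 MiB ..."
timed_out_fail = "20:55:21.500"    # log: "Failed to upload file 1"
fin = "20:55:23"                   # capture: frame 72818, FIN from B2 / RST from PC

print(secs(stream_start, timed_out_start))    # how far into the stream the upload began
print(secs(timed_out_start, timed_out_fail))  # how long Duplicati waited before giving up
print(secs(stream_start, fin))                # total length of the TCP run
```

This gives 9.554 s, 33.946 s (matching the log's "after 33946 ms"), and 45.0 s.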

I'm not seeing anything in there that I would call a 15-second stalled connection.

As a side note, TCP-level stalls are retried for a while, so Duplicati can time out first.
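To illustrate how an application timeout can fire while TCP is still retrying: a read/write timeout of this kind is usually an idle limit on each individual read, not a cap on the whole transfer. The stack traces above pass through `TimeoutObservingStream.ReadImplAsync`, which suggests, but doesn't confirm, that Duplicati does something along these lines. A minimal asyncio sketch of the general idea; `copy_with_idle_timeout`, `make_source`, and the timings are hypothetical, not Duplicati code:

```python
import asyncio


async def copy_with_idle_timeout(read_chunk, write_chunk, idle_timeout):
    """Copy chunks until read_chunk() returns b"".

    Each single read must finish within idle_timeout seconds (an idle limit);
    the copy as a whole may take much longer than that.
    """
    total = 0
    while True:
        # Raises asyncio.TimeoutError if this one read stalls too long.
        chunk = await asyncio.wait_for(read_chunk(), timeout=idle_timeout)
        if not chunk:
            return total
        await write_chunk(chunk)
        total += len(chunk)


def make_source(delays, chunk=b"x" * 1024):
    """Hypothetical source: waits delays[i] seconds before returning chunk i."""
    it = iter(delays)

    async def read_chunk():
        try:
            delay = next(it)
        except StopIteration:
            return b""  # end of stream
        await asyncio.sleep(delay)
        return chunk

    return read_chunk


async def discard(chunk):
    """Stand-in for the network side; just drops the data."""


async def demo():
    # Steady trickle: 6 reads of 0.05 s each, 0.3 s in total, above the 0.2 s limit,
    # but no single read is idle long enough to trip it.
    copied = await copy_with_idle_timeout(make_source([0.05] * 6), discard, 0.2)
    # One read stalls for 0.5 s, longer than the 0.2 s idle limit.
    try:
        await copy_with_idle_timeout(make_source([0.05, 0.5]), discard, 0.2)
        stalled = False
    except asyncio.TimeoutError:
        stalled = True
    return copied, stalled


copied, stalled = asyncio.run(demo())
print(copied, stalled)
```

A TCP stack underneath would keep retransmitting through a stall like the second one; the application gives up on its own schedule.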

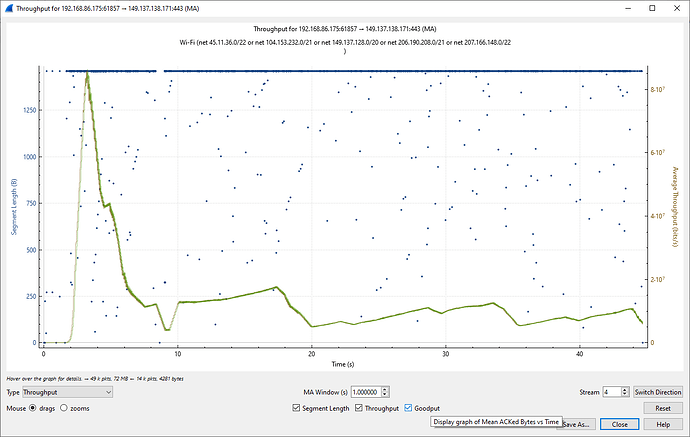

But I’m not sure there was any such network hiccup here. FWIW, the downstream direction is:

so if one is motivated enough, one might be able to label the other uploads in it.

Interestingly, the two timed-out uploads (files 1 and 7) actually completed, and they later downloaded and passed the hash check.

There were also two uploads (files 2 and 8) that Duplicati reported as done but that never actually arrived.
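Those two observations come from cross-checking what the client reported against what the server later listed and returned. A small Python sketch of that cross-check, with the sets copied by hand from the log above (main upload phase, files 0 to 9):

```python
# Outcomes copied by hand from the BackendTester log (main upload phase).
all_files = set(range(10))
reported_failed = {1, 7}           # "Failed to upload file N ... TimeoutException"
not_listed = {2, 8}                # "was uploaded but not found afterwards"
hash_ok = all_files - not_listed   # every listed file downloaded and hashed fine

reported_ok = all_files - reported_failed

# Client gave up, yet the file is on the server and intact.
false_failures = reported_failed & hash_ok
# Client said "done!", yet the server never listed the file.
false_successes = reported_ok & not_listed

print("timed out but stored:", sorted(false_failures))
print("reported done but missing:", sorted(false_successes))
```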

EDIT 1:

The hover-over definition of Goodput, which the capture tool wasn’t able to capture:

“Display graph of mean ACKd Bytes vs Time”. Throughput is Transmitted Bytes.

They seem to track very closely though. Throughput is brown. Goodput is green.
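On the Goodput vs. Throughput distinction: throughput counts every byte put on the wire, retransmissions included, while goodput counts only newly acknowledged bytes, so the two pull apart when segments are lost and resent. A simplified Python sketch with made-up segment data (not from this capture). It uses per-second buckets rather than whatever averaging Wireshark applies, and `per_second_rates` is a hypothetical helper:

```python
from collections import defaultdict


def per_second_rates(sent, acks, base_seq):
    """Bin a TCP sender's traffic into 1-second buckets.

    sent: (time_s, payload_bytes) for every data segment transmitted,
          retransmissions included.
    acks: (time_s, cumulative_ack_number) received from the peer.
    base_seq: sequence number of the first data byte.
    Returns {second: (throughput_bytes, goodput_bytes)}.
    """
    tx = defaultdict(int)
    for t, n in sent:
        tx[int(t)] += n
    good = defaultdict(int)
    highest = base_seq
    for t, ack in sorted(acks):
        if ack > highest:  # duplicate ACKs acknowledge nothing new
            good[int(t)] += ack - highest
            highest = ack
    return {s: (tx[s], good[s]) for s in sorted(set(tx) | set(good))}


# Made-up example: three 1000-byte segments, the second is lost and resent at 1.1 s.
sent = [(0.1, 1000), (0.2, 1000), (0.3, 1000), (1.1, 1000), (1.2, 1000)]
acks = [(0.15, 1000), (0.35, 1000), (1.15, 3000), (1.25, 4000)]
rates = per_second_rates(sent, acks, base_seq=0)
print(rates)
```

Over the whole run throughput exceeds goodput by exactly the retransmitted 1000 bytes, so two curves that track closely, as here, are consistent with few retransmissions in this stream.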