@SamSirry, can you provide a link to where you saw this in the code?

It is here at line 176:

duplicati/Duplicati/Library/Backend/File/FileBackend.cs at master · duplicati/duplicati · GitHub

Thanks for looking digging into this!

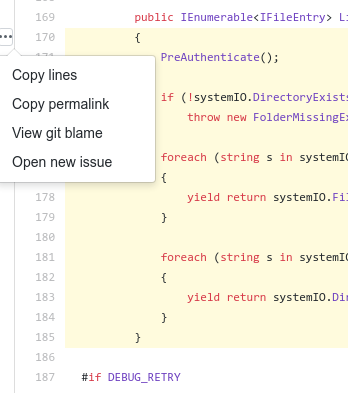

In case you want to direct link to specific code lines on GitHub in the future - you can click just to the left of the line numbers and drag (or shift-click) to select a block of lines.

Once you’ve done that you should get some ellipses at the top left of the select on which you can click and select “Copy permalink” as shown here.

Pasting that link into your post here will result in something like this:

Thanks @SamSirry. At a quick glance, the code you linked to doesn’t appear to perform any redundant directory listings. I think the directory contents are enumerated just a single time. This is

due to lazy evaluation of IEnumerable<T> objects and how iterators work with the yield return statement in C#:

However, we should try to figure out what’s causing the slow performance you’re experiencing.

Thank you for looking into this

I understand now how the lazy evaluation and yield return works, however, the part that I’m not trusting here is how EnumerateFileSystemEntries() tracks its position.

systemIO.EnumerateFileSystemEntries(m_path)

Like, isn’t it possible that because it is lazy evaluation, it is not loading the whole file list and keeping it in memory; perhaps it just keeps the current file index as a state, and so every time the next element is requested, the file listing needs to gett retrieved from the remote machine.

I know this is tightly related to how the SSH/SFTP backend classes work, but I’m having a hard time following the objects in the code without the proper IDE.

I hope I could clarify my point properly. Please tell me to elaborate if there are any ambiguities.

Ah, I think I understand your question now. I believe that the iterator methods like System.IO.Directory.EnumerateFiles will get the file listing by asking the OS/filesystem for the “next” entry since the last query, as opposed to obtaining the entire listing and storing it in some sort of cache. This helps allow for potential huge memory savings as it avoids allocating large arrays to store the file lists.

I’m not certain about this at all though, it’s just a guess. Perhaps digging into the mono source will provide us with more details.

I haven’t put this on a debugger, but are you sure FileBackend.cs is relevant? I think similar “ssh” code is:

I’m new enough to C# that I went to read Yield return in C#, and saw how it iterates that return on request.

A yield return appears to be a “pull” mechanism, so a concern I had was whether possibly Duplicati wasn’t iterating fast enough and some ssh thing timed out, as protocols sometimes do, SSHNET’s code might be here and looks like it just stores file entries to a List as fast as it can, then Duplicati gets the List to iterate. Assuming I read this correctly, that resolves my worry that Duplicati processing was breaking the sftp list…

What does “debug output” mean? Is that –log-level=Profiling? Can you post log output from the 4 hour list?

2018-11-29 15:44:41 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: List - Started: ()

2018-11-29 15:44:41 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: List - Completed: (4 bytes)

2018-11-29 15:44:41 -05 - [Profiling-Timer.Finished-Duplicati.Library.Main.BackendManager-RemoteOperationList]: RemoteOperationList took 0:00:00:00.000

shows the very fast timing of this tiny example. When it says “4 bytes” I think it means 4 items, specifically those below the header below. I got the URL from the job’s Commandline menu. Is your list slow this way?

C:\Program Files\Duplicati 2>Duplicati.Commandline.BackendTool.exe list "file://C:\Duplicati Backups\local test 3\\"

Name Dir/File LastChange Size

duplicati-20181001T235722Z.dlist.zip File 10/1/2018 7:57:23 PM 779 bytes

duplicati-b0d4ebc7b3e5a40bfa8e2850b2691b277.dblock.zip File 10/1/2018 7:57:22 PM 1.80 KB

duplicati-i30f423829bac4552b04674a761bb135c.dindex.zip File 10/1/2018 7:57:22 PM 759 bytes

duplicati-verification.json File 11/29/2018 3:44:41 PM 587 bytes

C:\Program Files\Duplicati 2>

With the units error (I think the code is used to doing things like Get and Put which give a byte count), that’s about 244 bytes per file, which might be reasonable for long paths. Does your network monitor show steady traffic coming from the remote SSH server, or does its transfer finish early, then Duplicati processes results? Some Duplicati code also looks like it does a quick read, but there are probably multiple places that get lists.

For a given backup, do all the RemoteOperationList times come out about the same time, or does it vary? Does the SSH server maintain any logs of its own that may directly show the number of lists, and their times? Have you timed the directory list with any other sftp tool, such as psftp, WinSCP, or maybe even a Linux sftp?

Yes, and I’m not sure investigation was going well, so I propose this alternative path. Feel free to discuss. ![]() Right now, what I’ve heard is that “On a certain backup destination” List is “some times” slow. Need more info.

Right now, what I’ve heard is that “On a certain backup destination” List is “some times” slow. Need more info.

Sorry for being unable to follow up for a good while as I was on a visit to a parallel universe.

I will try to answer my best now as I’ve lost or can’t find some files related to the diagnostic of this issue.

Right now, what I’ve heard is that “On a certain backup destination” List is “some times” slow. Need more info.

Well, one of my backup destinations is through a bit unstable connection over VPN to a home DSL line in another continent. I know it’s unstable because some pings time out some times. Aside from that the connection isn’t that bad, because after the aforementioned delay in the List() command, the upload goes nicely at 300 KBps which is almost my top upload speed, and can go on for hours without a noticeable issue.

A yield return appears to be a “pull” mechanism, so a concern I had was whether possibly Duplicati wasn’t iterating fast enough and some ssh thing timed out…

I believe Duplicati processing is not slow at all. The system appears in total rest when the issue happens.

To be fair, the occurrence of this problem has been rare recently, but I have upgraded to the most recent canary version as of this writing and I noticed the issue happening once.

Other jobs run just fine, but they have either local destinations (on same PC) or over SSH on a LAN machine, but over the same VPN mentioned above.

What does “debug output” mean?

I was just viewing the debug from the web interface. Yes, using the “Profiling” level.

I once logged it to a file and it was the same thing. No details at all. I lost the file, but it just says starting List(), and after that List() is completed. No details.

Ah! It just happened a while ago, and luckily the newest version of Duplicati keeps the log details:

"Messages": [

"2019-01-19 07:01:11 - [Information-Duplicati.Library.Main.Controller-StartingOperation]: The operation Backup has started",

"2019-01-19 07:01:48 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: List - Started: ()",

"2019-01-19 07:50:50 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: List - Completed: (41.12 KB)",

"2019-01-19 07:50:51 - [Information-Duplicati.Library.Main.Operation.BackupHandler-SkipUsnForVolume]: Performing full scan for volume \"D:\\\"",

"2019-01-19 07:51:50 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Started: duplicati-b20f18645a4e84e20b8f78debe81ba9b3.dblock.zip.aes (200.03 KB)",

"2019-01-19 07:52:04 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Completed: duplicati-b20f18645a4e84e20b8f78debe81ba9b3.dblock.zip.aes (200.03 KB)",

"2019-01-19 07:52:04 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Started: duplicati-i74697cb8af004c05991830b6f1c1fc84.dindex.zip.aes (1.12 KB)",

"2019-01-19 07:52:05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Completed: duplicati-i74697cb8af004c05991830b6f1c1fc84.dindex.zip.aes (1.12 KB)",

"2019-01-19 07:52:06 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Started: duplicati-20190119T040054Z.dlist.zip.aes (12.71 MB)",

"2019-01-19 07:52:56 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Completed: duplicati-20190119T040054Z.dlist.zip.aes (12.71 MB)",

"2019-01-19 07:52:56 - [Information-Duplicati.Library.Main.Operation.DeleteHandler:RetentionPolicy-StartCheck]: Start checking if backups can be removed",

....

As you can see, 12MB are uploaded in 10 seconds, so the connection was fine. I even tested the connection while List() was stalled, and it was working normally.

The job contains some 100,000 files.

Does your network monitor show steady traffic coming from the remote SSH server, or does its transfer finish early, then Duplicati processes results?

It’s been a while and I seem to have lost (or just can’t find) the screenshots and files related to the issue, but from what I remember, it would be a small amount of transfer, then long silence, then another little transfer, then silence, and so on. It doesn’t seem like Duplicati was doing any processing during the silence (no CPU activity). It’s more like it’s just waiting for data to arrive. And as I’ve mentioned in an earlier comment: while the amount transferred is reported in KBytes, it’s actually a few megabytes. This is why I assumed that the whole remote file list was being transferred mutliple times.

Does the SSH server maintain any logs of its own that may directly show the number of lists, and their times?

I’m not sure I can find that info. I’m not so keen with Linux.

Have you timed the directory list with any other sftp tool, such as psftp, WinSCP, or maybe even a Linux sftp?

Yes, I opened a SSH consol manually remotely and used the ls command just to see how fast it goes. It was very fast, and didn’t notice any hiccup in listing the 15k+ remote files.

Thanks again for your help and follow up. I’d be happy if I can be of further help.

I have an update on the issue…

So the problem was that the data transfer was going very slow during the remote-file listing(*) step.

It was happening on certain destinations only. With further investigation with networking tools, it turns out that the connection to the country where the remote files are located is not stable. Specifically, some times TCP/UDP packets go but the answer never comes back.

Although the connection to these destinations happens over a VPN, but the same VPN is working very fine with other destinations (not in that country, though).

Interestingly, the solution was to do something like a plunger to the connection. Sending large ICMP (Echo) packets (something like 10KB every second) to the remote host over a period of 5-10 minutes gets the connection working again. This is a weird phenomenon to me. If anyone knows about it, please teach me something new.

However, the same issue remains that the List() operation actually downloads way much more data than anticipated; something like 13.6MB for a listing of around 61,250 remote files!

* Sorry, I couldn’t help it.

13.6MB doesn’t seem outrageous for 61k files. That’s only about 222 Bytes per file. Sure it’s not 1:1 with the characters in each file name, but it’s expected that it’s formatted in some form of JSON, XML or otherwise that will add a bit of data around each name.

If under 14 MB is so slow, how long does a test sample of 1 set (dlist, dindex, dblock) take I wonder? Just the dblock will usually be 50 MB. As for downloaded amounts, packet loss can cause retransmissions by sender. If you can get onto destination to run netstat -s, you can see retransmissions compared to the total sends.

Here the remote log shows one last

17 May 2019 01:37: get duplicati-bdc9c787b0a5b42fd8778a782d624d0b7.dblock.zip.aes

And then it had been stuck ever since, and the only solution seems to be the cancel the backup and restart. And since I have no direct monitoring (I shouldn’t need to baby sit the backup application) it is not noticed. Now this is a fairly large backup set, but really a 24 hour timeout of actions (like a watchdog) is not that bad of an idea. Of a single dblock/listing or whatever takes longer than 24 hours, probably something is up and the backup should be retried.

Hello @EraYaN and welcome to the forum!

–number-of-retries controls how many upload/download tries happen, but first need is setting timeouts.

Storage Providers lists some of them, but there are also generic ones such as –http-operation-timeout potentially applicable to multiple HTTP-based providers. I don’t think these have been finely tuned to any particular scenario (and scenarios vary a LOT), and I suspect some of these timeouts are set to infinite.

2.0.4.16 and on silently drop uploads getting TaskCanceledException from HTTP timeout #3772 gets in speculation over that, while finding that .NET default 100-second timeout can be too short on OneDrive. Canary has added parallel uploads (a long-awaited feature) but I suspect this slows any given upload…

There’s no higher-level watchdog currently as far as I know, but it might be easier to add than verifying every backend has a semi-reasonable timer. This possibly also gets in some of the current challenges manually pausing/stopping backups, so possibly solving that will be helpful before trying to automate it.

Thank you for the reply. It seems the S3 Compatible storage backend --s3-ext-maxidletime = 50000 option. Assuming this is seconds, this might not work as at least I expect, since it should be around 13 or so hours. And it was stuck for half a month.

I’m not to sure how to set up a minimal working example properly, since it’s fairly hard to test against all network conditions, maybe someone can try some mininet solution with some intermittent connections configured.

I think 50000 is in milliseconds. See AWS SDK for .NET V3 API Documentation, which is relevant because Duplicati uses that SDK. I’m not seeing that Duplicati changes that default (unusually, the code for both the listing and setting of available s3-ext- options is dynamic based on what the DLL can support), so maybe network isn’t the issue in your hang. Setting up a –log-file at as high a –log-file-log-level as you can tolerate (profiling is huge, while something like retry is not so bad) might give more information on “stuck” spot.

Another possibility, I suppose, is that the SDK is timing out but whatever that does isn’t noticed in Duplicati. Deliberately trying to force timeouts on a test backup (e.g. by setting timeout to 1 millisecond) might reveal.

I’ve sometimes attempted intermittent network tests by disconnecting things, e.g. disconnecting the Wi-Fi, but sometimes the error (e.g. a DNS lookup failure) isn’t the one I was really hoping to get. Tough to target.

Hi, I found this thread and a several similar reports about the verifying taking forever.

I am running 2.0.4.23_beta_2019-07-14 on Unraid in a container.

Current backup set is about 1.7TB, using B2.

Backup has been on verifying for a few days now.

Unraid docker usage shows that Duplicati is running at 12% CPU, and one CPU thread thread at near 100%, possibly indicating a tight loop issue.

Server status:

lastPgEvent : {"BackupID":"3","TaskID":3,"BackendAction":"List","BackendPath":null,"BackendFileSize":-1,"BackendFileProgress":0,"BackendSpeed":-1,"BackendIsBlocking":false,"CurrentFilename":null,"CurrentFilesize":0,"CurrentFileoffset":0,"Phase":"Backup_PreBackupVerify","OverallProgress":0,"ProcessedFileCount":0,"ProcessedFileSize":0,"TotalFileCount":2038822,"TotalFileSize":1812991290750,"StillCounting":false}

updaterState : Waiting

Last live profiling log entry is from the 5th, today is the 9th:

Aug 5, 2019 9:15 AM:

Including path as no filters matched: /source/backup/Profiles/DESKTOP-UUIL10M/ProgramData/Adobe/AAMUpdater/1.0/

It is still running, anything I can do help troubleshoot?

Duplicati.Server users 50% CPU with nothing scheduled is some troubleshooting. Some translates to Linux, some doesn’t. One advantage of Linux over Windows is at least some allow “ls -lu” to detect file reads, so you can try ls -lurt ~/.config/Duplicati to see what’s been read. Also try -lrt and -lcrt.

Basically, troubleshooting could start with triage. You know CPU is busy. What’s up with disk and net?

Large-scale disk stats might be possible with things like sar or iotop. Network might try netstat or ntop.

If you’re still at Backup_PreBackupVerify, your status bar probably says “Verifying backend data …”, however not every step is shown, and so it might be somewhere a bit later. Still, it narrows the scope.

How deep into troubleshooting do you want to go? Are you talking about code and Linux system calls?

strace with -p on the child mono process, and using -f, seems to give a nice view of what’s going on in some selected area, and triage to the area is made easier with canned packages of system calls, e.g.:

-e trace=network

Trace all the network related system calls.

-e trace=desc

Trace all file descriptor related system calls.

I haven’t done C# debugging on mono yet. Tools do exist, but some need a process restart, and it might work out best if troubleshooting is done on the live problem – unless you think problem is reproducible…

For Windows try:

- Start Task Manager

- Change to the Performance tab

- At the bottom click on “Open Resource Monitor”

- Click on the “Disk” tab

- Tick the box next to any Duplicati process

It will show you any disk access activity and you can sort by read/write bytes per sec or both to see the troublesome file(s).

Not much of an advantage really is it

Console to the container:

# ls -lurt ~/.config/Duplicati

total 0

drwxr-xr-x 1 abc users 52 May 23 21:23 updates

# sar

sh: 2: sar: not found

# iotop

sh: 3: iotop: not found

# netstat

sh: 4: netstat: not found

# ntop

sh: 5: ntop: not found

# strace

sh: 6: strace: not found

#

Still on verifying.

Server state:

lastPgEvent : {"BackupID":"3","TaskID":3,"BackendAction":"List","BackendPath":null,"BackendFileSize":-1,"BackendFileProgress":0,"BackendSpeed":-1,"BackendIsBlocking":false,"CurrentFilename":null,"CurrentFilesize":0,"CurrentFileoffset":0,"Phase":"Backup_PreBackupVerify","OverallProgress":0,"ProcessedFileCount":0,"ProcessedFileSize":0,"TotalFileCount":2038822,"TotalFileSize":1820078666823,"StillCounting":false}You need to find the Duplicati config. That’s clearly not it. If it runs as a different user, run ls as that user. There should be many files with .sqlite as the suffix. You can’t even get as far as the current spot without.

Sometimes people will use the –server-datafolder option or DUPLICATI_HOME variable to keep things of value outside the container itself. I do little with containers, but it might also account for fewer commands. Probably there’s some desire to have a minimal set of packages. What IS available that might be useful?

Does the apropos command work? That might fish around for relevant things that are probably available.