Hi,

Duplicati used to back up my files twice a week. Last week I noticed that the last successful backup was on 2023-06-19 (it took 40 minutes). Since then I have tried starting the backup manually, rebooting my system (Windows 8.1 Pro) a few times, updating to 2.0.7.1_beta_2023-05-25, waiting many hours, … with no success.

About 5 minutes after a backup starts, the UI shows that it is verifying backend data, and this message never goes away…

The verbose live log in the UI shows the following entries (the last message is now 15 hours old, and the UI still says it is verifying backend data):

- Aug. 2023 19:27: Backend event: List - Started: ()

- Aug. 2023 19:21: The operation Backup has started

- Aug. 2023 19:20: C:\ProgramData\Duplicati\updates\2.0.7.1\win-tools\gpg.exe

- Aug. 2023 19:19: Server started and listening on 127.0.0.1, port 8200

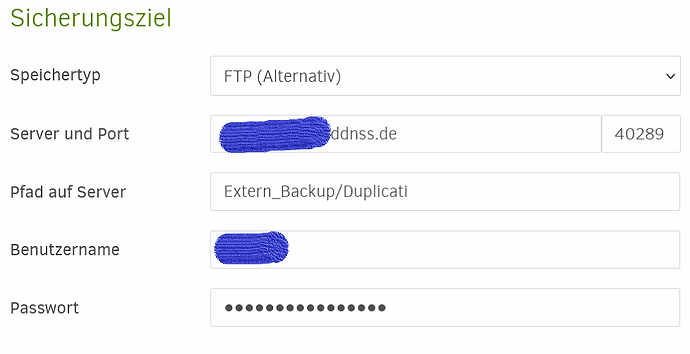

My backup destination is FTP. When I access the FTP server manually, listing all files in the backup folder takes around 30-60 seconds because it contains 45,000 .zip.aes files. Could a listing that slow be what is causing the problem?
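To put a number on the listing time outside of Duplicati, a minimal sketch using Python's standard `ftplib` could time a single directory listing and count the `.zip.aes` volumes. The function name `time_remote_listing`, the host, the credentials, and the folder path are all hypothetical placeholders. This only measures a raw NLST; it does not reproduce whatever Duplicati's own FTP backend does during verification.

```python
import time
from ftplib import FTP  # stdlib FTP client, used in the commented example below


def time_remote_listing(ftp, path="."):
    """Return (total entries, .zip.aes entries, seconds) for one NLST of `path`.

    `ftp` is any object with an ftplib-style nlst(path) method, for example a
    logged-in ftplib.FTP instance. (Hypothetical helper for illustration.)
    """
    start = time.monotonic()
    names = ftp.nlst(path)  # one NLST request over a data connection
    elapsed = time.monotonic() - start
    # Some servers return full paths, so match on the suffix only
    aes_volumes = sum(1 for name in names if name.endswith(".zip.aes"))
    return len(names), aes_volumes, elapsed


# Example (hypothetical host, credentials and folder; not executed here):
# with FTP("ftp.example.com", timeout=120) as ftp:
#     ftp.login("user", "password")
#     total, volumes, secs = time_remote_listing(ftp, "/duplicati-backup")
#     print(f"{total} entries ({volumes} .zip.aes) listed in {secs:.1f} s")
```

If the measured time is consistently close to or above any timeout configured on the client or server side, that would support the idea that the listing itself is where the backup stalls.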

In the backup configuration I clicked the button to test the FTP connection. The verbose live log then showed:

- Aug. 2023 09:52: Cannot open WMI provider \localhost\root\virtualization\v2. Hyper-V is probably not installed.

- Aug. 2023 09:52: Cannot find any MS SQL Server instance. MS SQL Server is probably not installed.

Thanks

Michael